Superkarakuri-lm-chat-70b-v0.1

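Overview

This model was created by merging two models, karakuri-ai/karakuri-lm-70b-chat-v0.1 and allenai/tulu-2-dpo-70b, with mergekit.

The merge method is the same as the one used for nitky/Superswallow-70b-v0.1.

It uses the following two models:

- karakuri-ai/karakuri-lm-70b-chat-v0.1
- allenai/tulu-2-dpo-70b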

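License

This model inherits the Llama2 license, but the merged model may also be affected by the license below.

- Because it uses allenai/tulu-2-dpo-70b, it may inherit the AI2 ImpACT license. The license may change if I am contacted about licensing.

In addition, commercial use requires contacting KARAKURI Inc. The license of the original model is quoted below.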

Llama 2 is licensed under the LLAMA 2 Community License, Copyright © Meta Platforms, Inc. All Rights Reserved.

Subject to the license above, and except for commercial purposes, you are free to share and adapt KARAKURI LM, provided that you must, in a recognizable and appropriate manner, (i) state that you are using KARAKURI LM developed by KARAKURI Inc., when you publish or make available to third parties KARAKURI LM, its derivative works or modification, or any output or results of KARAKURI LM or its derivative works or modification, and (ii) indicate your contributions, if you modified any material of KARAKURI LM.

If you plan to use KARAKURI LM for commercial purposes, please contact us beforehand. You are not authorized to use KARAKURI LM for commercial purposes unless we expressly grant you such rights.

If you have any questions regarding the interpretation of above terms, please also feel free to contact us.

Benchmark

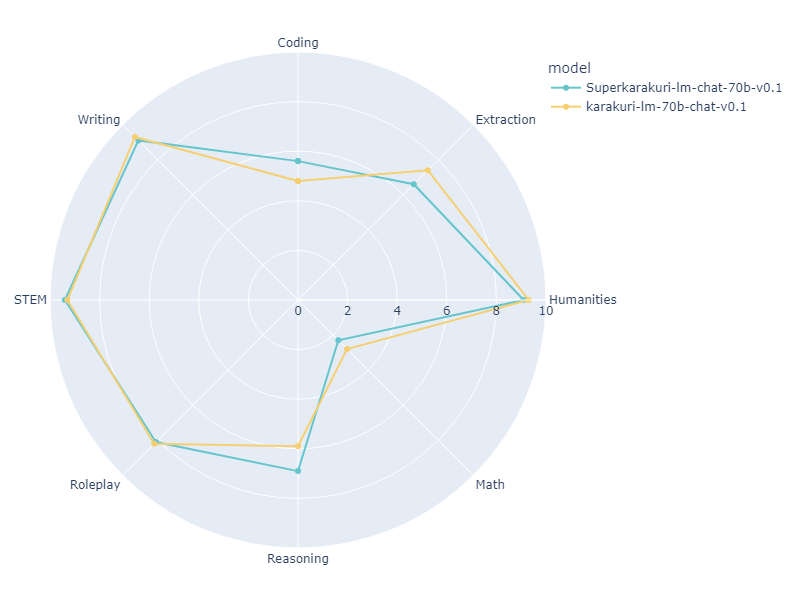

The japanese-mt-bench results for this model and the base karakuri-lm-70b-chat-v0.1 are as follows. (Single turn, 4-bit quantization)

| Model | Size | Coding | Extraction | Humanities | Math | Reasoning | Roleplay | STEM | Writing | avg_score |
|---|---|---|---|---|---|---|---|---|---|---|
| karakuri-lm-70b-chat-v0.1 | 70B | 4.8 | 7.4 | 9.3 | 2.8 | 5.9 | 8.2 | 9.3 | 9.3 | 7.1250 |
| This model | 70B | 5.6 | 6.6 | 9.1 | 2.3 | 6.9 | 8.1 | 9.4 | 9.1 | 7.1375 |

Prompt used for the benchmark

<s>[INST] <<SYS>>
あなたは誠実で優秀な日本人のアシスタントです。
<</SYS>>

{instruction} [ATTR] helpfulness: 4 correctness: 4 coherence: 4 complexity: 4 verbosity: 4 quality: 4 toxicity: 0 humor: 0 creativity: 0 [/ATTR] [/INST]

Description

This model was created with mergekit by applying the DARE TIES method to karakuri-ai/karakuri-lm-70b-chat-v0.1 (the base) and allenai/tulu-2-dpo-70b.

Inspired by nitky/Superswallow-70b-v0.1.

A GGUF version is also available.

It uses the following two models:

- karakuri-ai/karakuri-lm-70b-chat-v0.1
- allenai/tulu-2-dpo-70b

License

This model inherits the Llama2 license, but because it is merged from models beyond Llama2 it may also be subject to the license below.

- Due to the use of allenai/tulu-2-dpo-70b, it may inherit the AI2 ImpACT license. I will change the license immediately if AI2 contacts me.

In addition, commercial use requires contacting KARAKURI Inc. The license of the original model is quoted below.

Llama 2 is licensed under the LLAMA 2 Community License, Copyright © Meta Platforms, Inc. All Rights Reserved.

Subject to the license above, and except for commercial purposes, you are free to share and adapt KARAKURI LM, provided that you must, in a recognizable and appropriate manner, (i) state that you are using KARAKURI LM developed by KARAKURI Inc., when you publish or make available to third parties KARAKURI LM, its derivative works or modification, or any output or results of KARAKURI LM or its derivative works or modification, and (ii) indicate your contributions, if you modified any material of KARAKURI LM.

If you plan to use KARAKURI LM for commercial purposes, please contact us beforehand. You are not authorized to use KARAKURI LM for commercial purposes unless we expressly grant you such rights.

If you have any questions regarding the interpretation of above terms, please also feel free to contact us.

Benchmark

The results of this model and the base karakuri-lm-70b-chat-v0.1 on japanese-mt-bench are as follows. (Single turn, 4-bit quantization)

| Model | Size | Coding | Extraction | Humanities | Math | Reasoning | Roleplay | STEM | Writing | avg_score |
|---|---|---|---|---|---|---|---|---|---|---|
| karakuri-lm-70b-chat-v0.1 | 70B | 4.8 | 7.4 | 9.3 | 2.8 | 5.9 | 8.2 | 9.3 | 9.3 | 7.1250 |
| This model | 70B | 5.6 | 6.6 | 9.1 | 2.3 | 6.9 | 8.1 | 9.4 | 9.1 | 7.1375 |
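The avg_score column appears to be the unweighted mean of the eight category scores. The short check below recomputes it from the table values; the variable and function names are illustrative only.

```python
# Category scores copied from the table above, in column order:
# Coding, Extraction, Humanities, Math, Reasoning, Roleplay, STEM, Writing.
scores = {
    "karakuri-lm-70b-chat-v0.1": [4.8, 7.4, 9.3, 2.8, 5.9, 8.2, 9.3, 9.3],
    "This model": [5.6, 6.6, 9.1, 2.3, 6.9, 8.1, 9.4, 9.1],
}


def average(values):
    """Unweighted mean of the per-category scores."""
    return sum(values) / len(values)


# Round to four decimals to match the precision shown in the table.
averages = {name: round(average(vals), 4) for name, vals in scores.items()}

for name, avg in averages.items():
    print(f"{name}: {avg:.4f}")
```

Both recomputed means match the table (7.1250 and 7.1375), so the overall gap between the two models is 0.0125 points. The per-category differences are larger and run in both directions: Coding and Reasoning rise, while Extraction and Math fall.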

Prompt used for the benchmark

<s>[INST] <<SYS>>
あなたは誠実で優秀な日本人のアシスタントです。
<</SYS>>

{instruction} [ATTR] helpfulness: 4 correctness: 4 coherence: 4 complexity: 4 verbosity: 4 quality: 4 toxicity: 0 humor: 0 creativity: 0 [/ATTR] [/INST]
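As a minimal sketch, the template above can be filled in with a small helper. The function name `build_prompt` and the exact newline placement are assumptions (the layout follows the usual Llama 2 chat format); only the system prompt and the attribute values come from this card.

```python
# System prompt and attribute values as used for the benchmark above.
SYSTEM_PROMPT = "あなたは誠実で優秀な日本人のアシスタントです。"
BENCHMARK_ATTRIBUTES = (
    ("helpfulness", 4), ("correctness", 4), ("coherence", 4),
    ("complexity", 4), ("verbosity", 4), ("quality", 4),
    ("toxicity", 0), ("humor", 0), ("creativity", 0),
)


def build_prompt(instruction, system=SYSTEM_PROMPT, attributes=BENCHMARK_ATTRIBUTES):
    """Fill the benchmark template (hypothetical helper, not part of any library)."""
    attr_text = " ".join(f"{name}: {value}" for name, value in attributes)
    return (
        f"<s>[INST] <<SYS>>\n{system}\n<</SYS>>\n\n"
        f"{instruction} [ATTR] {attr_text} [/ATTR] [/INST]"
    )


prompt = build_prompt("日本の首都はどこですか?")
print(prompt)
```

If the tokenizer in use already prepends a BOS token, the literal `<s>` at the start may need to be dropped to avoid duplicating it.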

Merge Details

Merge Method

This model was merged using the DARE TIES merge method, with karakuri-ai/karakuri-lm-70b-chat-v0.1 as the base.
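To give a rough idea of what DARE TIES does, here is a simplified sketch over flat lists of floats. It is not mergekit's implementation: the function name, the per-element loop, and the lack of any weight normalization are assumptions made for illustration. DARE randomly drops entries of each task vector (fine-tuned weights minus base weights) and rescales the survivors by `1 / density`. TIES then keeps only the contributions whose sign agrees with the sign of the summed deltas.

```python
import random


def dare_ties(base, tuned_models, weights, density, seed=0):
    """Simplified DARE TIES merge over flat parameter lists (illustrative only)."""
    rng = random.Random(seed)

    # DARE step: for each model, keep each delta with probability `density`
    # and rescale the kept entries by 1 / density, then apply the model weight.
    weighted_deltas = []
    for tuned, weight in zip(tuned_models, weights):
        row = []
        for b, t in zip(base, tuned):
            keep = rng.random() < density
            row.append(weight * (t - b) / density if keep else 0.0)
        weighted_deltas.append(row)

    # TIES step: elect a sign per parameter from the summed deltas and
    # add only the contributions that agree with it.
    merged = []
    for i, b in enumerate(base):
        column = [row[i] for row in weighted_deltas]
        total = sum(column)
        agreeing = [d for d in column if d * total > 0]
        merged.append(b + sum(agreeing))
    return merged


base = [1.0, 2.0, 3.0]
tuned = [2.0, 2.0, 1.0]
merged_single = dare_ties(base, [tuned], [0.5], density=1.0)
print(merged_single)
```

Under this sketch, a merge with one non-base model and `density: 1` drops nothing and has no sign conflicts, so it reduces to `base + weight * (tuned - base)` for each tensor.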

Models Merged

The following models were included in the merge:

- allenai/tulu-2-dpo-70b

Configuration

The following YAML configuration was used to produce this model:

models:
  - model: ./karakuri-lm-70b-chat-v0.1
    # no parameters necessary for base model
  - model: ./tulu-2-dpo-70b # follow user intent
    parameters:
      density: 1
      weight:
        - filter: mlp.down_proj
          value: [0.3, 0.25, 0.25, 0.15, 0.1]
        - filter: mlp.gate_proj
          value: [0.7, 0.25, 0.5, 0.45, 0.4]
        - filter: mlp.up_proj
          value: [0.7, 0.25, 0.5, 0.45, 0.4]
        - filter: self_attn
          value: [0.7, 0.25, 0.5, 0.45, 0.4]
        - value: 0 # fallback for rest of tensors.
merge_method: dare_ties
base_model: ./karakuri-lm-70b-chat-v0.1
dtype: bfloat16
tokenizer_source: union
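The five-element `value` lists in the configuration are gradients: anchor values spread across the depth of the network rather than a single weight. The sketch below assumes the anchors are evenly spaced from the first to the last layer and linearly interpolated in between. Check the mergekit documentation for the exact behavior. The helper name `layer_weights` is hypothetical, and the layer count of 80 is that of Llama 2 70B.

```python
def layer_weights(gradient, num_layers):
    """Linearly interpolate evenly spaced anchor values across layer indices."""
    if len(gradient) == 1 or num_layers == 1:
        return [gradient[0]] * num_layers
    weights = []
    for layer in range(num_layers):
        # Map the layer index onto the anchor axis [0, len(gradient) - 1].
        pos = layer / (num_layers - 1) * (len(gradient) - 1)
        lower = min(int(pos), len(gradient) - 2)
        frac = pos - lower
        weights.append(gradient[lower] * (1 - frac) + gradient[lower + 1] * frac)
    return weights


# Gradient used for self_attn, mlp.gate_proj and mlp.up_proj in the config above.
attn_weights = layer_weights([0.7, 0.25, 0.5, 0.45, 0.4], num_layers=80)
# Gradient used for mlp.down_proj.
down_weights = layer_weights([0.3, 0.25, 0.25, 0.15, 0.1], num_layers=80)

for layer in (0, 20, 40, 60, 79):
    print(layer, round(attn_weights[layer], 3), round(down_weights[layer], 3))
```

Read this way, tulu-2-dpo-70b contributes most strongly to the attention and gate/up projections of the earliest layers. Its contribution to `mlp.down_proj` tapers toward the last layers, and tensors that match no filter get weight 0, so they stay identical to the base model.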
