PTA-1: Controlling Computers with Small Models

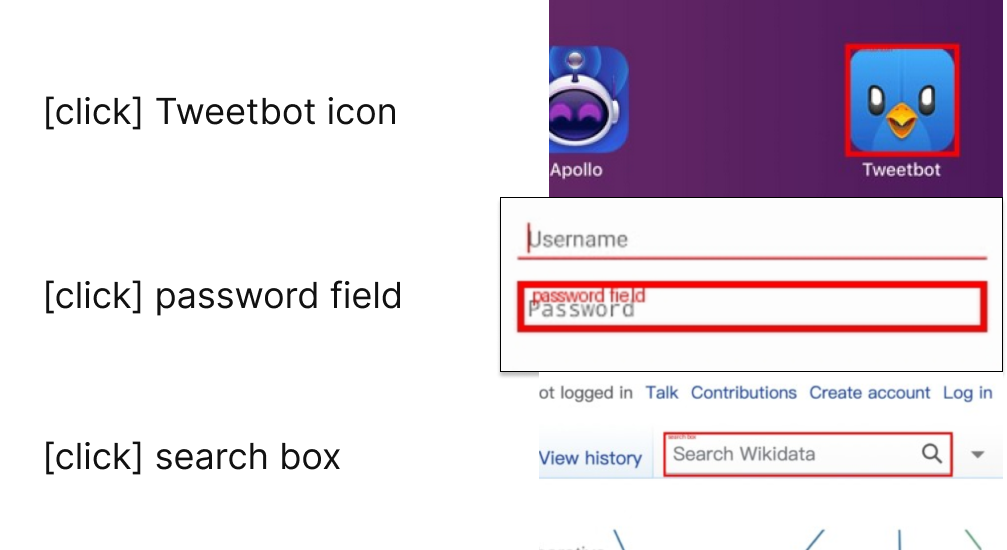

PTA (Prompt-to-Automation) is a vision-language model for computer and phone automation, based on Florence-2. With only 270M parameters, it outperforms much larger models at GUI text and element localization, which enables low-latency computer automation with local execution.

▶️ Try the demo at: AskUI/PTA-1

Model Input: Screenshot + description_of_target_element

Model Output: BoundingBox for Target Element

How to Get Started with the Model

Use the code below to get started with the model.

Requirements: torch, timm, einops, Pillow, transformers

```python
import torch
from PIL import Image
from transformers import AutoProcessor, AutoModelForCausalLM

# Use the GPU with half precision when available, otherwise the CPU with full precision.
device = "cuda:0" if torch.cuda.is_available() else "cpu"
torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

# trust_remote_code=True loads the custom model code from the model repository.
model = AutoModelForCausalLM.from_pretrained("AskUI/PTA-1", torch_dtype=torch_dtype, trust_remote_code=True).to(device)
processor = AutoProcessor.from_pretrained("AskUI/PTA-1", trust_remote_code=True)

# The prompt is the task token followed by a description of the target element.
task_prompt = "<OPEN_VOCABULARY_DETECTION>"
prompt = task_prompt + "description of the target element"

image = Image.open("path to screenshot").convert("RGB")

# Move the inputs to the device; floating-point tensors are cast to torch_dtype.
inputs = processor(text=prompt, images=image, return_tensors="pt").to(device, torch_dtype)

# Deterministic beam search decoding.
generated_ids = model.generate(
    input_ids=inputs["input_ids"],
    pixel_values=inputs["pixel_values"],
    max_new_tokens=1024,
    do_sample=False,
    num_beams=3,
)

# Decode to text, keeping special tokens for post-processing.
generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

# Convert the generated text into bounding boxes in pixel coordinates.
parsed_answer = processor.post_process_generation(generated_text, task=task_prompt, image_size=(image.width, image.height))
print(parsed_answer)
```
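To act on the result, the bounding box usually has to be turned into a click point. The following is a minimal sketch, assuming `parsed_answer` follows the usual Florence-2 layout for this task: a dict keyed by the task token that contains a `"bboxes"` list of `[x1, y1, x2, y2]` pixel coordinates. The helper `first_bbox_center` and the sample dict are hypothetical and are not part of the model's API.

```python
from typing import Optional, Tuple

TASK = "<OPEN_VOCABULARY_DETECTION>"


def first_bbox_center(parsed_answer: dict, task: str = TASK) -> Optional[Tuple[float, float]]:
    """Return the center (x, y) of the first predicted box, or None if there is none."""
    # Assumed layout: {task: {"bboxes": [[x1, y1, x2, y2], ...], ...}}
    bboxes = parsed_answer.get(task, {}).get("bboxes", [])
    if not bboxes:
        return None
    x1, y1, x2, y2 = bboxes[0]
    return ((x1 + x2) / 2, (y1 + y2) / 2)


# Hand-written stand-in for a model output (assumed layout, made-up numbers).
example = {TASK: {"bboxes": [[100.0, 40.0, 180.0, 80.0]], "bboxes_labels": ["submit button"]}}
click_point = first_bbox_center(example)
print(click_point)  # (140.0, 60.0)
```

Returning `None` for an empty prediction lets the caller decide whether to retry with a different description or skip the action.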

Evaluation

Note: This is a first version of our evaluation, based on 999 samples (333 from each dataset). We are still running all models on the full test sets, and we are seeing deviations of ±5% for some of the models already evaluated. Scores are click success rates in percent (see Metrics), and Mean is the unweighted average of the three dataset columns.

| Model | Parameters | Mean | agentsea/wave-ui | AskUI/pta-text | ivelin/rico_refexp_combined |
|---|---|---|---|---|---|
| AskUI/PTA-1 | 0.27B | 79.98 | 90.69* | 76.28 | 72.97* |
| anthropic.claude-3-5-sonnet-20241022-v2:0 | - | 70.37 | 82.28 | 83.18 | 45.65 |
| agentsea/paligemma-3b-ft-waveui-896 | 3.29B | 57.76 | 70.57* | 67.87 | 34.83 |
| Qwen/Qwen2-VL-7B-Instruct | 8.29B | 57.26 | 47.45 | 60.66 | 63.66 |
| agentsea/paligemma-3b-ft-widgetcap-waveui-448 | 3.29B | 53.15 | 74.17* | 53.45 | 31.83 |
| microsoft/Florence-2-base | 0.27B | 39.44 | 22.22 | 81.38 | 14.71 |
| microsoft/Florence-2-large | 0.82B | 36.64 | 14.11 | 81.98 | 13.81 |
| EasyOCR | - | 29.43 | 3.9 | 75.08 | 9.31 |
| adept/fuyu-8b | 9.41B | 26.83 | 5.71 | 71.47 | 3.3 |
| Qwen/Qwen2-VL-2B-Instruct | 2.21B | 23.32 | 17.12 | 26.13 | 26.73 |
| Qwen/Qwen2-VL-2B-Instruct-GPTQ-Int4 | 0.90B | 18.92 | 10.81 | 22.82 | 23.12 |

\* The model is known to have been trained on the train split of that dataset.

The high benchmark scores for our model are partially due to data bias. Therefore, we expect users of the model to fine-tune it according to the data distributions of their use case.

Metrics

Click success rate is calculated as the number of clicks inside the target bounding box relative to all clicks. If a model predicts a target bounding box instead of a click coordinate, its center is used as its click prediction.
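The metric can be sketched in a few lines. This is an illustrative implementation under stated assumptions, not the evaluation code used for the table: boxes are `(x1, y1, x2, y2)` tuples in pixels, a click on the box border counts as a hit, and the function names and sample values are made up.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Point = Tuple[float, float]              # (x, y)


def center(box: Box) -> Point:
    """Click point used when a model predicts a box instead of a coordinate."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def is_hit(click: Point, target: Box) -> bool:
    """True if the click lies inside the target box (border included, by assumption)."""
    x, y = click
    x1, y1, x2, y2 = target
    return x1 <= x <= x2 and y1 <= y <= y2


def click_success_rate(clicks: Sequence[Point], targets: Sequence[Box]) -> float:
    """Fraction of clicks that land inside their corresponding target box."""
    if len(clicks) != len(targets):
        raise ValueError("clicks and targets must have the same length")
    if not clicks:
        return 0.0
    hits = sum(is_hit(c, t) for c, t in zip(clicks, targets))
    return hits / len(clicks)


# Two predicted boxes scored against the same target: the first center hits, the second misses.
predicted_boxes = [(10, 10, 30, 30), (100, 100, 120, 120)]
target_boxes = [(0, 0, 50, 50), (0, 0, 50, 50)]
rate = click_success_rate([center(b) for b in predicted_boxes], target_boxes)
print(rate)  # 0.5
```

Multiplying the result by 100 gives a percentage on the same scale as the scores in the table.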


Base model: microsoft/Florence-2-base