FusOn-pLM: A Fusion Oncoprotein-Specific Language Model via Focused Probabilistic Masking

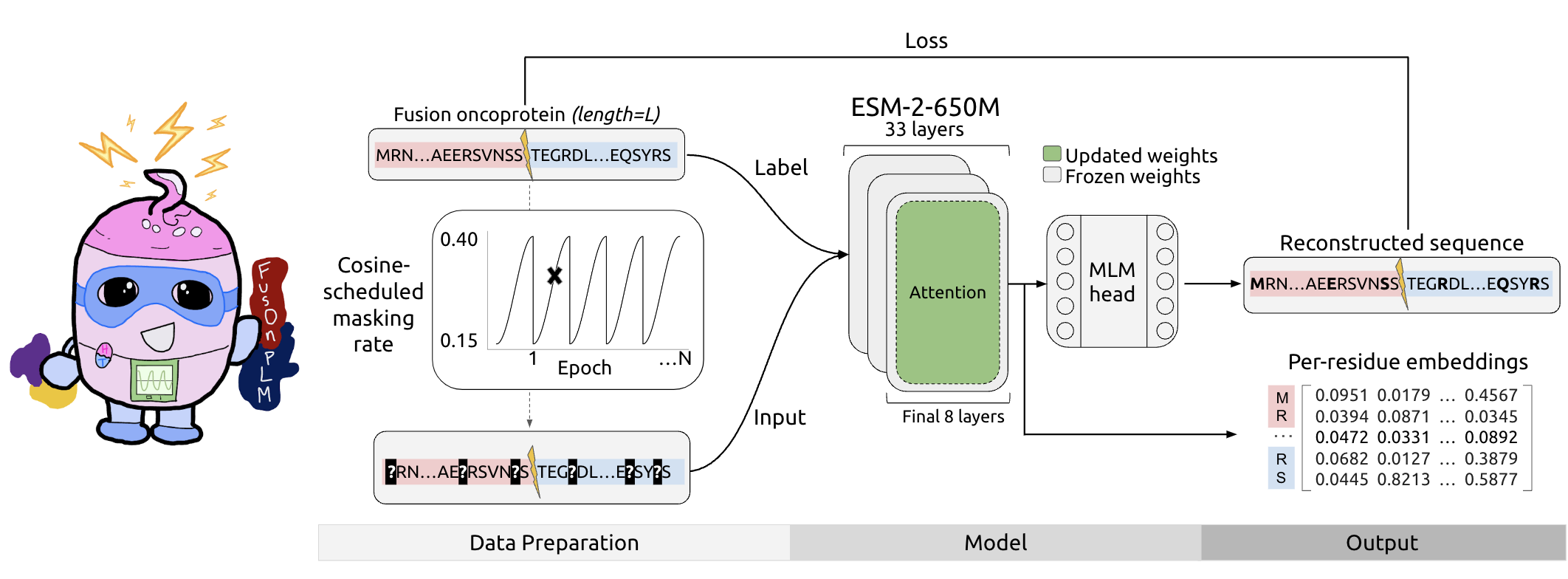

In this work, we introduce FusOn-pLM, a novel protein language model (pLM) obtained by fine-tuning the state-of-the-art ESM-2-650M pLM on fusion oncoprotein sequences. Fusion oncoproteins drive a large portion of pediatric cancers, yet they are heavily disordered and undruggable. We specifically introduce a novel cosine-scheduled masked language modeling (MLM) strategy that varies the number of masked residues throughout each epoch, increasing the difficulty of the training task and yielding better fusion oncoprotein-aware embeddings. Compared with baseline ESM-2 representations and manually constructed biophysical embeddings, FusOn-pLM embeddings improve performance on both fusion oncoprotein-specific benchmarks and disorder prediction tasks, motivating their downstream use in therapeutic design tasks targeting these fusions. Please feel free to try out our embeddings and reach out if you have any questions!
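To make the idea of a cosine-scheduled masking rate concrete, here is a minimal sketch. This card does not state the schedule's parameters, so the bounds `min_rate=0.15` and `max_rate=0.40`, the rising direction, the restart at every epoch, the uniform choice of masked positions, and the helper names `cosine_mask_rate` and `mask_residues` are all hypothetical. For simplicity it always substitutes ESM-2's `<mask>` token rather than using BERT-style random or unchanged replacements. It illustrates varying how many residues are masked within an epoch; it is not FusOn-pLM's actual training code.

```python
import math
import random

MASK_TOKEN = "<mask>"  # ESM-2's mask token


def cosine_mask_rate(step: int, steps_per_epoch: int,
                     min_rate: float = 0.15, max_rate: float = 0.40) -> float:
    """Hypothetical schedule: the masking rate rises from min_rate to max_rate
    along a half-cosine over one epoch, then restarts at the next epoch."""
    position = step % steps_per_epoch
    progress = position / max(steps_per_epoch - 1, 1)  # 0.0 at epoch start, 1.0 at epoch end
    return min_rate + (max_rate - min_rate) * (1 - math.cos(math.pi * progress)) / 2


def mask_residues(sequence: str, rate: float,
                  rng: random.Random) -> tuple[list[str], list[int]]:
    """Replace round(rate * len(sequence)) uniformly chosen residues
    (at least one) with MASK_TOKEN; return the tokens and masked positions."""
    tokens = list(sequence)
    n_masked = max(1, round(rate * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), n_masked))
    for i in positions:
        tokens[i] = MASK_TOKEN
    return tokens, positions


rng = random.Random(0)
steps_per_epoch = 5
for step in range(steps_per_epoch):
    rate = cosine_mask_rate(step, steps_per_epoch)
    _, masked = mask_residues("A" * 100, rate, rng)
    print(f"step {step}: rate={rate:.3f}, masked={len(masked)}")
```

The cosine shape changes the rate slowly at the start and end of each epoch and fastest in the middle; a linear ramp or a decreasing schedule would be equally easy to drop into `cosine_mask_rate`.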

How to generate FusOn-pLM embeddings for your fusion oncoprotein:

```python
from transformers import AutoTokenizer, AutoModel
import logging
import torch

# Suppress warnings about newly initialized 'esm.pooler.dense.bias', 'esm.pooler.dense.weight' layers; these are not used to extract embeddings
logging.getLogger("transformers.modeling_utils").setLevel(logging.ERROR)

# Set device
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")

# Load the tokenizer and model
model_name = "ChatterjeeLab/FusOn-pLM"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModel.from_pretrained(model_name)
model.to(device)
model.eval()

# Example fusion oncoprotein sequence: MLLT10:PICALM, associated with Acute Myeloid Leukemia (LAML)
# Amino acids 1-80 are derived from the head gene, MLLT10
# Amino acids 81-119 are derived from the tail gene, PICALM
sequence = "MVSSDRPVSLEDEVSHSMKEMIGGCCVCSDERGWAENPLVYCDGHGCSVAVHQACYGIVQVPTGPWFCRKCESQERAARVPPQMGSVPVMTQPTLIYSQPVMRPPNPFGPVSGAQIQFM"

# Tokenize the input sequence
inputs = tokenizer(sequence, return_tensors="pt", padding=True, truncation=True, max_length=2000)
inputs = {k: v.to(device) for k, v in inputs.items()}

# Get the embeddings (no gradients are needed for inference)
with torch.no_grad():
    outputs = model(**inputs)

# The embeddings are in the last_hidden_state tensor: (batch, tokens, hidden size)
embeddings = outputs.last_hidden_state

# Remove the batch dimension
embeddings = embeddings.squeeze(0)

# Remove the BOS and EOS tokens, leaving one row per residue
embeddings = embeddings[1:-1, :]

# Convert embeddings to a NumPy array (if needed)
embeddings = embeddings.cpu().numpy()

# For this example: (119, hidden size)
print("Per-residue embeddings shape:", embeddings.shape)
```
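The example above embeds a single sequence, so `embeddings[1:-1]` removes exactly the BOS and EOS tokens. If several sequences are tokenized together (e.g. `tokenizer(list_of_sequences, return_tensors="pt", padding=True)`), shorter sequences are followed by padding, and a fixed `[1:-1]` slice would keep their EOS token and padding rows. Below is a minimal sketch, assuming right-side padding and an attention mask that is 1 for BOS, residues, and EOS and 0 for padding, that uses each sequence's attention mask to recover its per-residue embeddings and then mean-pools them into one vector per sequence. The helper names `split_residue_embeddings` and `mean_pool` are hypothetical, and mean pooling is one common sequence-level summary, not necessarily the one used in the FusOn-pLM benchmarks. The functions take NumPy arrays such as `outputs.last_hidden_state.cpu().numpy()` and `inputs["attention_mask"].cpu().numpy()`; a toy batch stands in for real model output here.

```python
import numpy as np


def split_residue_embeddings(hidden: np.ndarray,
                             attention_mask: np.ndarray) -> list[np.ndarray]:
    """Split a padded batch of hidden states into per-sequence residue embeddings.

    hidden:         (batch, tokens, hidden_size) array, e.g. last_hidden_state.
    attention_mask: (batch, tokens) array; assumed right-padded, with 1 for
                    BOS, residues, and EOS and 0 for padding.
    Returns one (n_residues, hidden_size) array per sequence.
    """
    per_sequence = []
    for states, mask in zip(hidden, attention_mask):
        n_tokens = int(mask.sum())  # BOS + residues + EOS
        # Drop BOS (index 0), EOS (index n_tokens - 1), and any padding after it
        per_sequence.append(states[1:n_tokens - 1])
    return per_sequence


def mean_pool(residue_embeddings: np.ndarray) -> np.ndarray:
    """Average per-residue embeddings into a single (hidden_size,) vector."""
    return residue_embeddings.mean(axis=0)


# Toy batch: 2 sequences, 5 token positions, hidden size 3
hidden = np.arange(2 * 5 * 3, dtype=float).reshape(2, 5, 3)
attention_mask = np.array([[1, 1, 1, 1, 1],   # BOS + 3 residues + EOS
                           [1, 1, 1, 0, 0]])  # BOS + 1 residue + EOS + 2 pads
residues = split_residue_embeddings(hidden, attention_mask)
print([r.shape for r in residues])  # [(3, 3), (1, 3)]
print(np.stack([mean_pool(r) for r in residues]))
```

For the single MLLT10:PICALM example, residues 1-80 (head, MLLT10) correspond to `embeddings[:80]` and residues 81-119 (tail, PICALM) to `embeddings[80:]`, so `mean_pool` can also be applied to each fusion segment separately.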

Repository Authors

Sophia Vincoff, PhD Student at Duke University

Pranam Chatterjee, Assistant Professor at Duke University

Reach out to us with any questions!
