---
base_model: []
library_name: transformers
tags:
- mergekit
- merge
---

Midnight-Miqu-70B-v1.0 - EXL2 4.0bpw

This is a 4.0bpw EXL2 quant of sophosympatheia/Midnight-Miqu-70B-v1.0

Details about the model and the merge can be found on the model page linked above.

I have not extensively tested this quant/model other than ensuring I could load it and chat with it.

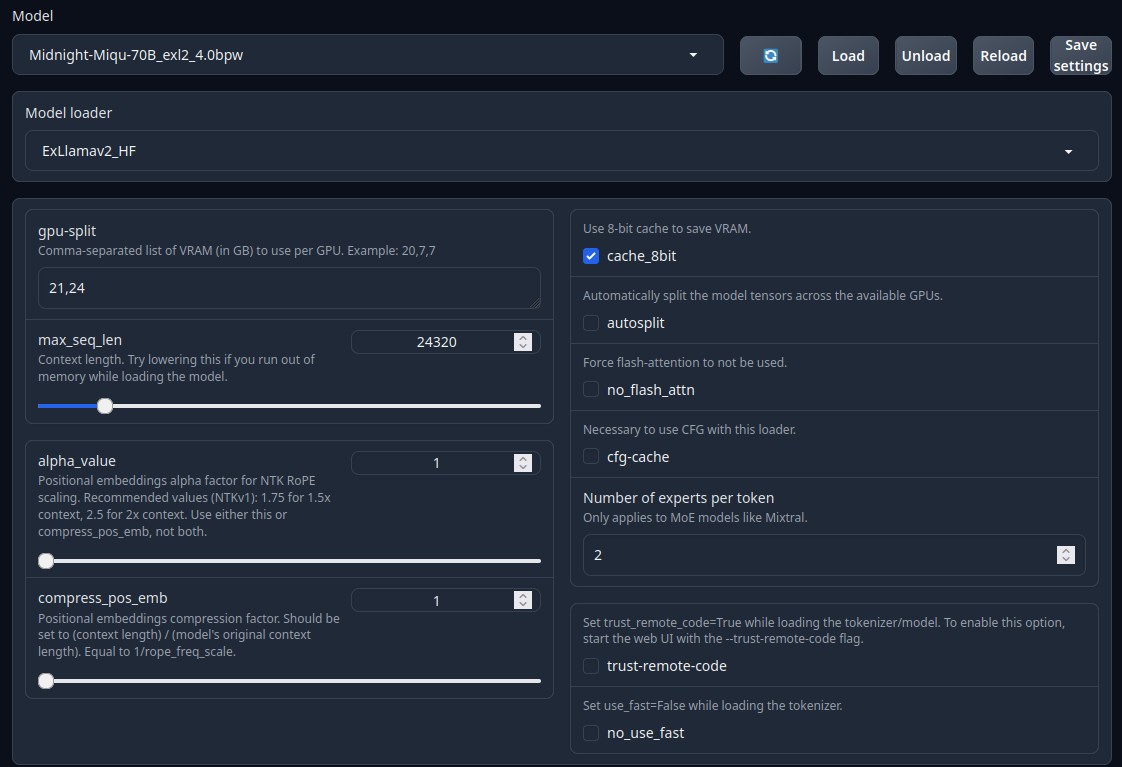

Model Loading

Below is what I used to run this model on a dual 3090 Linux server.

I have not tested inference beyond a context of a couple thousand tokens.
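As a rough sanity check on why a 4.0bpw quant suits two 24 GB cards, the weight footprint can be estimated as parameters × bits per weight ÷ 8. The sketch below assumes a round figure of 70 billion parameters (not a measured count) and covers weights only; the KV cache and activations need additional VRAM, which is why long contexts may not fit. The function name `estimate_weights_gb` is made up for this illustration.

```shell
#!/bin/bash
# Rough estimate of quantized weight size in GB (10^9 bytes):
#   size = parameters (billions) * bits per weight / 8
# Assumption: 70 is a round parameter count, not a measured value.
estimate_weights_gb() {
    local params_b="$1"   # parameter count, in billions
    local bpw="$2"        # average bits per weight
    # bash has no floating-point arithmetic, so delegate to awk
    awk -v p="$params_b" -v b="$bpw" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

estimate_weights_gb 70 4.0   # prints 35.0
```

At roughly 35 GB of weights, about 13 GB of the combined 48 GB remains for the cache and runtime overhead, split across both cards.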

Quant Details

This is the script used for quantization.

#!/bin/bash

# Activate the conda environment

source ~/miniconda3/etc/profile.d/conda.sh

conda activate exllamav2

# Define variables

MODEL_DIR="models/sophosympatheia_Midnight-Miqu-70B-v1.0"

OUTPUT_DIR="exl2_midnight70b"

MEASUREMENT_FILE="measurements/midnight70b.json"

BIT_PRECISION=4.0

CONVERTED_FOLDER="models/Midnight-Miqu-70B_exl2_4.0bpw"

# Create directories

mkdir -p "$OUTPUT_DIR"

mkdir -p "$CONVERTED_FOLDER"

# Run conversion commands

# The measurement pass below is commented out because this run reuses the measurement file from the 5.0bpw quant

#python convert.py -i "$MODEL_DIR" -o "$OUTPUT_DIR" -nr -om "$MEASUREMENT_FILE"

python convert.py -i "$MODEL_DIR" -o "$OUTPUT_DIR" -nr -m "$MEASUREMENT_FILE" -b "$BIT_PRECISION" -cf "$CONVERTED_FOLDER"
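Because the measurement pass is skipped, the quantization command depends on an existing measurement file. A minimal pre-flight check along these lines could catch a missing or empty file before the long conversion starts. This is only a sketch: `require_measurement` is a hypothetical helper, not part of exllamav2 or the script above.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the measurement file exists and is non-empty.
require_measurement() {
    local file="$1"
    if [ ! -s "$file" ]; then
        echo "Measurement file missing or empty: $file" >&2
        return 1
    fi
}

MEASUREMENT_FILE="measurements/midnight70b.json"

if require_measurement "$MEASUREMENT_FILE"; then
    echo "Measurement file found; the -m pass can reuse it."
else
    echo "Run the measurement pass (-om) first." >&2
fi
```

Placing a check like this ahead of the `convert.py` call means a wrong path fails immediately instead of partway through the job.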