|

--- |

|

|

|

|

|

{} |

|

--- |

|

# Model Card for Model ID |

|

|

|

<!-- Provide a quick summary of what the model is/does. --> |

|

|

|

This project aims to create a text scanner that converts paper images into machine-readable formats (e.g., Markdown, JSON). It is the son of Nougat, and thus, grandson of Douat. |

|

|

|

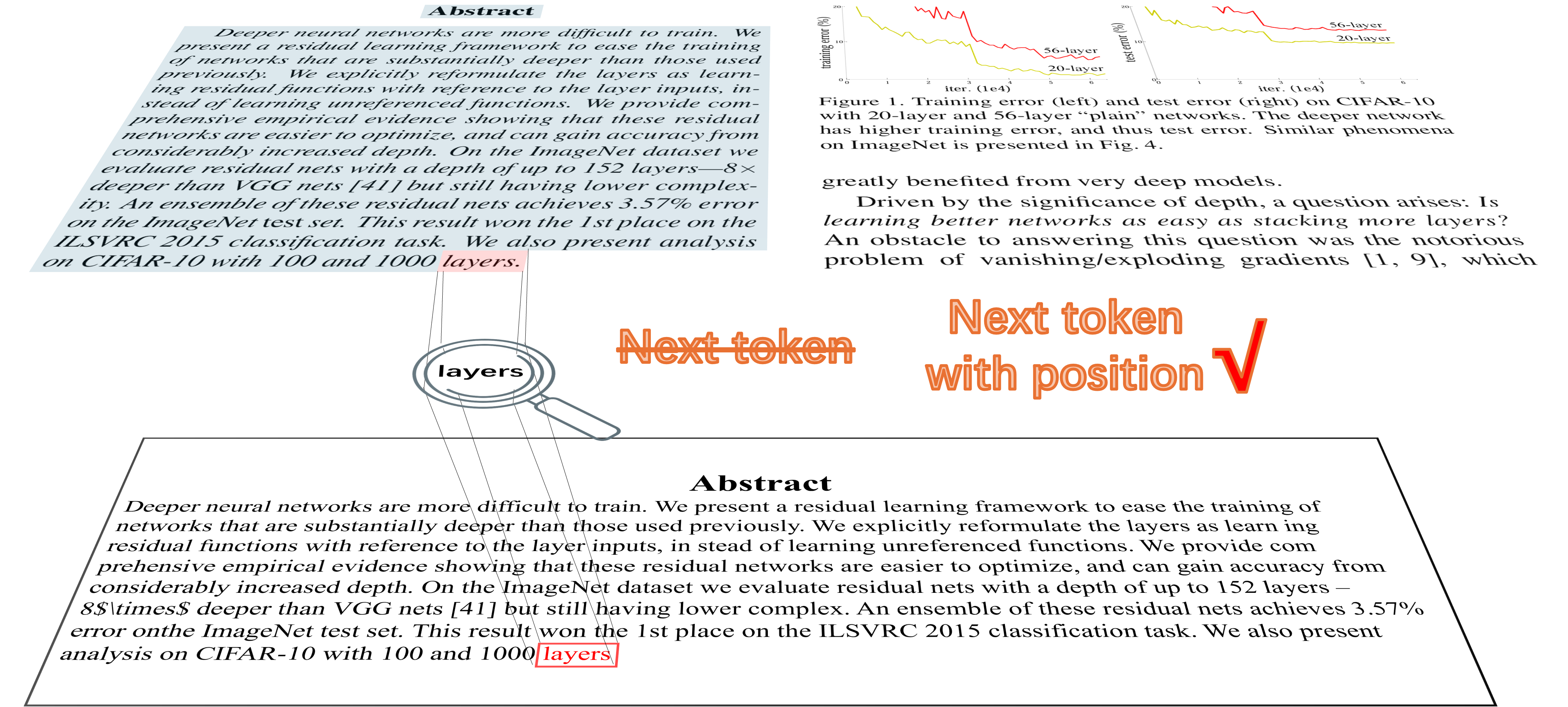

The key idea is to combine the bounding box modality with text, achieving a pixel scan behavior that predicts not only the next token but also the next position. |

|

|

|

|

|

|

|

The name "Lougat" is a combination of LLama and Nougat. The key idea is nature continues of this paper [LOCR: Location-Guided Transformer for Optical Character Recognition]([[2403.02127\] LOCR: Location-Guided Transformer for Optical Character Recognition (arxiv.org)](https://arxiv.org/abs/2403.02127)) |

|

|

|

Current Branch: The **LOCR** model |

|

|

|

Other Branch: |

|

- Florence2 + LLama → Flougat |

|

- Sam2 + LLama → Slougat |

|

- Nougat + Relative Position Embedding LLama → Rlougat |

|

|

|

|

|

# Inference and Train |

|

|

|

Please see `https://github.com/veya2ztn/Lougat` |

|

|

|

|

|

|

|

|