Uploaded as LoRA model

- Developed by: Labagaite

- License: apache-2.0

- Finetuned from model: unsloth/gemma-2b-it-bnb-4bit

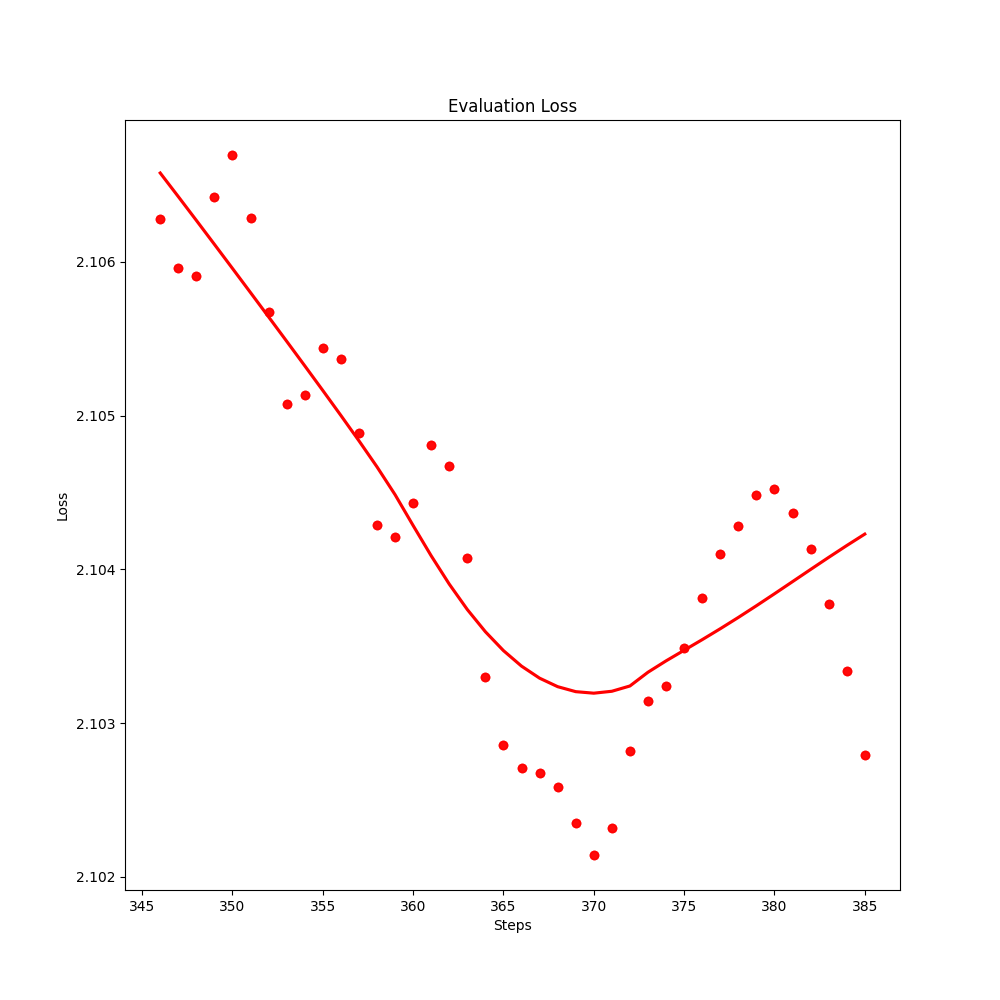

Training Logs

Training metrics

Evaluation score

Evaluation of the reports generated by the two AI models

Base model (unsloth/gemma-2b-it-bnb-4bit)

- Report structuring performance: 6/10

- Language quality: 7/10

- Coherence: 6/10

Fine-tuned model (gemma-Summarizer-2b-it-bnb-4bit)

- Report structuring performance: 8/10

- Language quality: 8/10

- Coherence: 8/10

Overall score

- Base model: 6.3/10

- Fine-tuned model: 8/10
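The overall scores are consistent with an unweighted mean of the three criteria, rounded to one decimal place. The card does not state how they were computed, so the averaging rule in this short sketch is an assumption, and the dictionary keys are illustrative names only.

```python
# Per-criterion scores copied from the evaluation above (out of 10).
scores = {
    "base": {"structure": 6, "language": 7, "coherence": 6},
    "fine_tuned": {"structure": 8, "language": 8, "coherence": 8},
}


def overall(criteria: dict) -> float:
    """Unweighted mean of the criteria, rounded to one decimal (assumed rule)."""
    return round(sum(criteria.values()) / len(criteria), 1)


overall_scores = {name: overall(criteria) for name, criteria in scores.items()}
print(overall_scores)
```

Under this assumption the base model averages 19/3, which rounds to 6.3, and the fine-tuned model averages 8.0, matching the reported values.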

Conclusion

The fine-tuned model clearly outperformed the base model in report structuring, language quality, and coherence. The report generated by the fine-tuned model is clearer, more fluent, and better organized. It offers a more in-depth analysis and a better understanding of the topics covered. The base model, by contrast, shows some gaps in coherence and structuring. It could benefit from improvement to deliver more impactful and informative reports.

Evaluation report and scoring

Wandb logs

The training logs can be viewed on Wandb.

Training details

Training data

- Dataset: fr-summarizer-dataset

- Data size: 7.65 MB

- Train: 1.97k rows

- Validation: 440 rows

- Roles: user, assistant

- Format: chatml, with the mapping "role": "role", "content": "content", "user": "user", "assistant": "assistant"

*French audio podcast transcription*
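To make the data format concrete, the sketch below renders one conversation as ChatML text using the role mapping listed above. The `<|im_start|>` and `<|im_end|>` delimiters are the standard ChatML markers. The helper name `to_chatml` and the sample messages are hypothetical, and the actual training pipeline may apply the template differently.

```python
from typing import Dict, List

# Role/content mapping as listed in the training data description.
MAPPING = {"role": "role", "content": "content", "user": "user", "assistant": "assistant"}


def to_chatml(messages: List[Dict[str, str]], mapping: Dict[str, str] = MAPPING) -> str:
    """Render a list of messages as ChatML text (hypothetical helper)."""
    parts = []
    for message in messages:
        role = message[mapping["role"]]
        content = message[mapping["content"]]
        if role == mapping["user"]:
            canonical = "user"
        elif role == mapping["assistant"]:
            canonical = "assistant"
        else:
            raise ValueError(f"unexpected role: {role!r}")
        parts.append(f"<|im_start|>{canonical}\n{content}<|im_end|>\n")
    return "".join(parts)


# Made-up example record: a transcript to summarize and its summary.
example = [
    {"role": "user", "content": "Transcription du podcast à résumer"},
    {"role": "assistant", "content": "Résumé du podcast"},
]
chatml_text = to_chatml(example)
print(chatml_text)
```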

Project details

The model was fine-tuned on French audio podcast transcripts for the summarization task, so it can summarize French podcast transcriptions.
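A minimal inference sketch follows, assuming the adapter is published as `Labagaite/gemma-Summarizer-2b-it-LORA-bnb-4bit` and that `peft`, `transformers`, `bitsandbytes`, and a CUDA GPU are available. The prompt wording and the `summarize` helper are hypothetical. The tokenizer is loaded from the base model, so the prompt uses Gemma's default chat template, which may differ from the chatml template used in training.

```python
ADAPTER_ID = "Labagaite/gemma-Summarizer-2b-it-LORA-bnb-4bit"  # assumed repo id
BASE_ID = "unsloth/gemma-2b-it-bnb-4bit"


def build_messages(transcript: str) -> list:
    """Wrap a transcript in a single user turn (prompt wording is hypothetical)."""
    prompt = f"Résume la transcription suivante :\n\n{transcript}"
    return [{"role": "user", "content": prompt}]


def summarize(transcript: str, max_new_tokens: int = 512) -> str:
    # Heavy imports are kept local so the module can be imported without a GPU.
    from peft import AutoPeftModelForCausalLM
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(BASE_ID)
    # Loads the base model named in the adapter config and attaches the LoRA weights.
    model = AutoPeftModelForCausalLM.from_pretrained(ADAPTER_ID, device_map="auto")

    # Build the prompt string first, then tokenize it; the template already
    # includes the BOS token, so no special tokens are added again.
    prompt = tokenizer.apply_chat_template(
        build_messages(transcript), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `summarize(transcript_text)` with a French transcript string would return the generated summary; the first call downloads the model weights.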

The model will be used in an AI application, Report Maker, a tool designed to automate the transcription and summarization of meetings.

It leverages state-of-the-art machine learning models to provide detailed and accurate reports.

This Gemma model was trained 2x faster with Unsloth and Hugging Face's TRL library.

This Gemma model was trained with LLM summarizer trainer.


Model tree for Labagaite/gemma-Summarizer-2b-it-LORA-bnb-4bit

Base model

unsloth/gemma-2b-it-bnb-4bit