---
library_name: transformers
tags:
- medical
license: llama3
language:
- en
---

BioMed LLaMa-3 8B

Meta AI released Meta Llama 3, the next generation of the Llama family of LLMs, for broad use. The release includes pretrained and instruction-fine-tuned language models with 8B and 70B parameters that support a broad range of use cases.

Llama-3 is a decoder-only transformer architecture with a 128K-token vocabulary and grouped query attention to improve inference efficiency. It has been trained on sequences of 8192 tokens.
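To make the efficiency benefit of grouped query attention concrete, the sketch below estimates the key/value cache size for a single 8192-token sequence. The layer count, head counts, and head dimension (32 layers, 32 query heads, 8 key/value heads, head dimension 128) are assumptions taken from the publicly released Llama-3-8B configuration rather than from this card, and the 2-byte element size assumes a 16-bit cache, so treat the numbers as an illustration only.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_elem=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: one tensor for keys and one for values in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


# Assumed values (public Llama-3-8B config, not stated in this card).
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM = 32, 32, 8, 128
SEQ_LEN = 8192

# Grouped query attention caches only the 8 shared key/value heads.
gqa = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_KV_HEADS, HEAD_DIM)
# Standard multi-head attention would cache one key/value head per query head.
mha = kv_cache_bytes(SEQ_LEN, N_LAYERS, N_QUERY_HEADS, HEAD_DIM)

print(f"GQA cache: {gqa / 2**30:.1f} GiB")  # GQA cache: 1.0 GiB
print(f"MHA cache: {mha / 2**30:.1f} GiB")  # MHA cache: 4.0 GiB
print(f"Reduction: {mha // gqa}x")          # Reduction: 4x
```

Under these assumptions, sharing each key/value head across four query heads cuts the cache to a quarter of its multi-head size.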

Llama-3 achieved state-of-the-art performance, with improved capabilities in reasoning, code generation, and instruction following. It is expected to outperform Claude Sonnet, Mistral Medium, and GPT-3.5 on a number of benchmarks.

Model Details

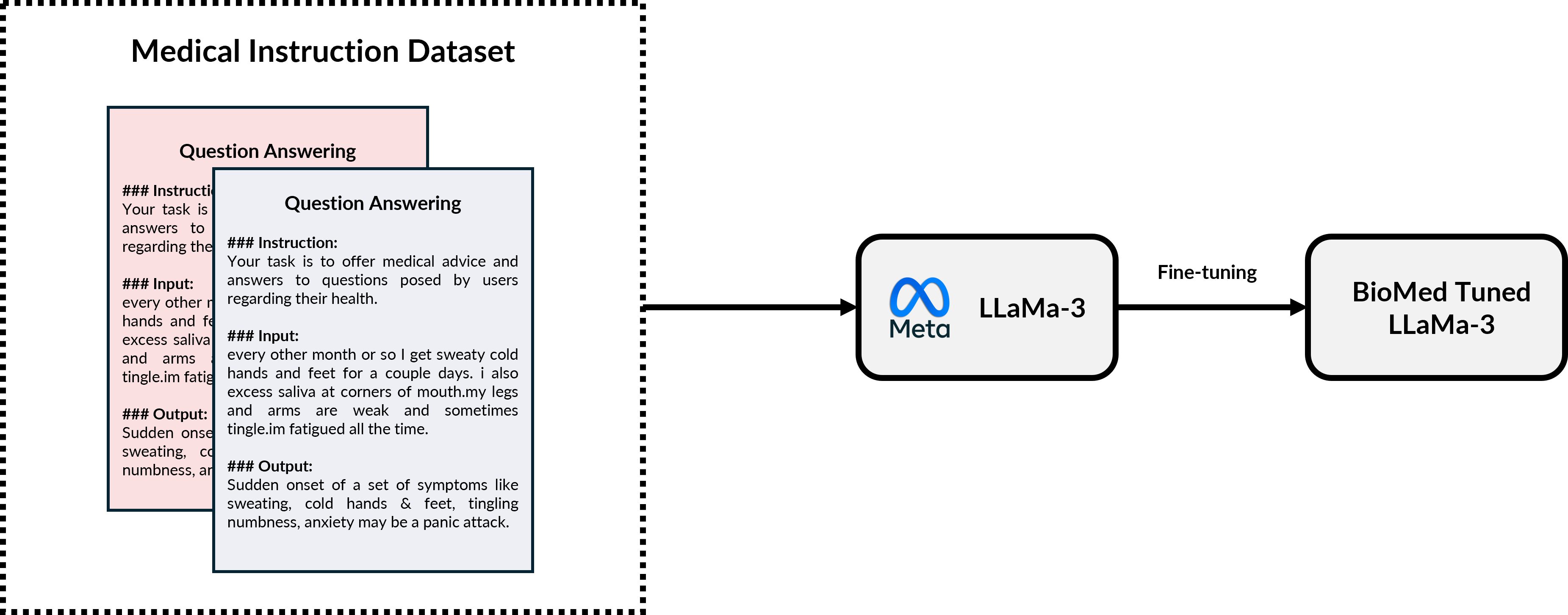

Powerful LLMs are trained on large amounts of unstructured data and are great at general text generation. BioMed-LLaMa-3-8B, based on Llama-3-8B, addresses some constraints of using off-the-shelf pre-trained LLMs, especially in the biomedical domain:

- Efficiently fine-tuned LLaMa-3-8B on medical instruction Alpaca data, encompassing over 54K instruction-focused examples.

- Fine-tuned using QLoRA to further reduce memory usage while maintaining model performance and enhancing its capabilities in the biomedical domain.

⚙️ Config

| Parameter | Value |
|---|---|
| Learning Rate | 1e-8 |
| Optimizer | Adam |
| Betas | (0.9, 0.99) |
| Adam Epsilon | 1e-8 |
| LoRA Alpha | 16 |
| LoRA Rank (R) | 8 |
| LoRA Dropout | 0.05 |
| Load in 4 bits | True |
| Flash Attention 2 | True |
| Train Batch Size | 8 |
| Valid Batch Size | 8 |
| Max Seq Length | 512 |
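To give a feel for what the rank (R = 8) and LoRA alpha (16) values in the table imply, the following sketch computes the standard LoRA scaling factor and the number of trainable parameters one adapter adds to a single weight matrix. The 4096 x 4096 matrix shape is an assumption for illustration (it matches the hidden size of the public Llama-3-8B configuration); the card does not state which modules were adapted, and `lora_stats` is a hypothetical helper.

```python
def lora_stats(d_in, d_out, r, alpha):
    """Return (scaling, adapter_params, frozen_params) for one LoRA-adapted matrix."""
    # LoRA learns a low-rank update B @ A, with A of shape (r, d_in)
    # and B of shape (d_out, r); the original weight stays frozen.
    adapter_params = r * d_in + d_out * r
    frozen_params = d_in * d_out
    # The low-rank update is multiplied by alpha / r before it is added.
    scaling = alpha / r
    return scaling, adapter_params, frozen_params


# r and alpha come from the table; the 4096 x 4096 shape is an assumption.
scaling, adapter, frozen = lora_stats(d_in=4096, d_out=4096, r=8, alpha=16)

print(f"scaling = {scaling}")                      # scaling = 2.0
print(f"adapter params = {adapter:,}")             # adapter params = 65,536
print(f"frozen params = {frozen:,}")               # frozen params = 16,777,216
print(f"trainable share = {adapter / frozen:.2%}") # trainable share = 0.39%
```

For a matrix of this assumed shape, the adapter trains well under 1% of the parameters it modifies, which is where most of the memory saving during fine-tuning comes from.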

💻 Usage

# Installations
!pip install peft --quiet
!pip install bitsandbytes --quiet
!pip install transformers --quiet
!pip install accelerate --quiet  # required by device_map="auto"
!pip install flash-attn --no-build-isolation --quiet

# Imports
import torch
from peft import LoraConfig, PeftModel
from transformers import (
    AutoTokenizer,
    BitsAndBytesConfig,
    AutoModelForCausalLM,
)

# generate_prompt function
# Builds an Alpaca-style prompt, with or without an extra input field.
# Note: the "# noqa: E501" text sits inside the f-string, so it is sent to the model as part of the prompt.
def generate_prompt(instruction, input=None):
    if input:
        return f"""Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request. # noqa: E501

### Instruction:
{instruction}

### Input:
{input}

### Response:
"""
    else:
        return f"""Below is an instruction that describes a task. Write a response that appropriately completes the request. # noqa: E501

### Instruction:
{instruction}

### Response:
"""

# Model Loading Configuration
based_model_path = "meta-llama/Meta-Llama-3-8B"
lora_weights = "NouRed/BioMed-Tuned-Llama-3-8b"
load_in_4bit = True
bnb_4bit_use_double_quant = True
bnb_4bit_quant_type = "nf4"
bnb_4bit_compute_dtype = torch.bfloat16
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Load Tokenizer
tokenizer = AutoTokenizer.from_pretrained(
    based_model_path,
)
tokenizer.padding_side = 'right'
tokenizer.pad_token = tokenizer.eos_token
tokenizer.add_eos_token = True

# Load Base Model in 4 Bits
quantization_config = BitsAndBytesConfig(
    load_in_4bit=load_in_4bit,
    bnb_4bit_use_double_quant=bnb_4bit_use_double_quant,
    bnb_4bit_quant_type=bnb_4bit_quant_type,
    bnb_4bit_compute_dtype=bnb_4bit_compute_dtype,
)
base_model = AutoModelForCausalLM.from_pretrained(
    based_model_path,
    device_map="auto",
    attn_implementation="flash_attention_2",  # I have an A100 GPU with 40GB of RAM 😎
    quantization_config=quantization_config,
)

# Load Peft Model
model = PeftModel.from_pretrained(
    base_model,
    lora_weights,
    torch_dtype=torch.float16,
)

# Prepare Input
instruction = "I have a sore throat, slight cough, tiredness. should i get tested for covid 19?"
prompt = generate_prompt(instruction)
inputs = tokenizer(prompt, return_tensors="pt").to(device)

# Generate Text
with torch.no_grad():
    generation_output = model.generate(
        **inputs,
        max_new_tokens=128,
    )

# Decode Output
output = tokenizer.decode(
    generation_output[0],
    skip_special_tokens=True,
    clean_up_tokenization_spaces=True,
)
print(output)
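Because `generate` returns the prompt tokens followed by the completion, the decoded `output` normally begins with the full prompt. Here is a minimal sketch for keeping only the model's answer, assuming the prompt template used in this card with its `### Response:` marker. `extract_response` is a hypothetical helper, not part of the original card, and the sample string is a made-up placeholder rather than real model output.

```python
RESPONSE_MARKER = "### Response:"


def extract_response(decoded_text: str) -> str:
    """Return the text after the first response marker, or the whole text if absent."""
    # partition splits on the first occurrence, which is the marker that
    # closes the prompt template; everything after it was generated.
    _, marker, tail = decoded_text.partition(RESPONSE_MARKER)
    return tail.strip() if marker else decoded_text.strip()


# Placeholder string for illustration; a real call would pass the decoded `output`.
sample = "### Instruction:\nWhat is aspirin used for?\n\n### Response:\nPlaceholder answer."
print(extract_response(sample))  # Placeholder answer.
```

In the usage code above this would be called as `extract_response(output)`.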

📋 Cite Us

@misc{biomedllama32024zekaoui,
  author = {Nour Eddine Zekaoui},
  title = {BioMed-LLaMa-3: Efficient Instruction Fine-Tuning in Biomedical Language},
  year = {2024},
  howpublished = {In Hugging Face Model Hub},
  url = {https://huggingface.co/NouRed/BioMed-Tuned-Llama-3-8b}
}

@article{llama3modelcard,
  title = {Llama 3 Model Card},
  author = {AI@Meta},
  year = {2024},
  url = {https://github.com/meta-llama/llama3/blob/main/MODEL_CARD.md}
}

Created with ❤️ by @NZekaoui