Simply make AI models cheaper, smaller, faster, and greener!

- Give a thumbs up if you like this model!

- Contact us and tell us which model to compress next here.

- Request access to easily compress your own AI models here.

- Read the documentation to learn more here.

- Join the Pruna AI community on Discord here to share feedback and suggestions or to get help.

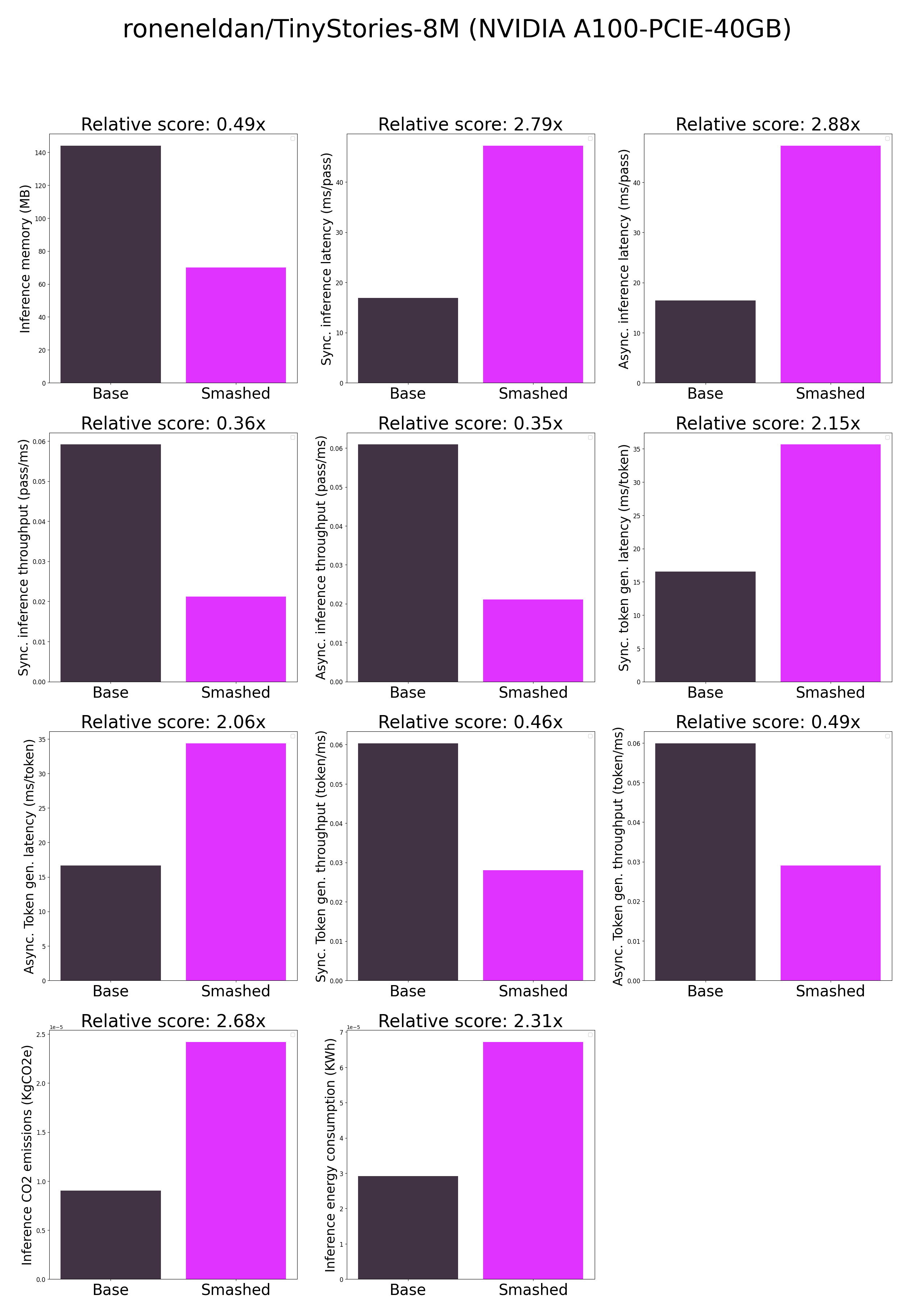

Results

Frequently Asked Questions

- How does the compression work? The model is compressed with llm-int8.

- How does the model quality change? The quality of the model output might vary compared to the base model.

- How is the model efficiency evaluated? These results were obtained on an NVIDIA A100-PCIE-40GB with the configuration described in model/smash_config.json, after a hardware warmup. The smashed model is compared directly to the original base model. Efficiency results may vary in other settings (e.g. other hardware, image size, batch size, ...). We recommend running the measurements directly under your use-case conditions to find out whether the smashed model can benefit you.

- What is the model format? We use safetensors.

- What calibration data has been used? If needed by the compression method, we used WikiText as the calibration data.

- What is the naming convention for Pruna Hugging Face models? We take the original model name and append "turbo", "tiny", or "green" if the smashed model has a measured inference speed, inference memory, or inference energy consumption, respectively, that is less than 90% of that of the original base model.

- How to compress my own models? You can request premium access to more compression methods and tech support for your specific use-cases here.

- What are "first" metrics? Results mentioning "first" are obtained after the first run of the model. The first run might take more memory or be slower than subsequent runs due to CUDA overheads.

- What are "Sync" and "Async" metrics? "Sync" metrics are obtained by syncing all GPU processes and stopping the measurement once all of them have finished. "Async" metrics are obtained without syncing all GPU processes; the measurement stops as soon as the model output can be used by the CPU. We provide both because either can be relevant depending on the use case. We recommend testing the efficiency gains directly in your own use cases.
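The "first", "Sync"/"Async", and 90% naming answers above can be illustrated with a small sketch. This is not the benchmark code used to produce the reported results; it is a minimal illustration under stated assumptions. The function names, the `sync` hook, and the metric keys (`latency`, `memory`, `energy`) are hypothetical, and mapping "inference speed" to a latency value is one reading of the naming rule.

```python
import time
from typing import Callable, Dict, List, Optional


def measure_latency(
    run: Callable[[], object],
    sync: Optional[Callable[[], None]] = None,
    repeats: int = 5,
) -> Dict[str, float]:
    """Time `run`, reporting the first call separately from later calls.

    `sync` is a hypothetical hook. Passing a function such as
    torch.cuda.synchronize waits for all queued GPU work before the clock
    stops ("Sync"). Leaving it as None stops the clock as soon as `run`
    returns ("Async").
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings: List[float] = []
    for _ in range(repeats + 1):  # one extra call for the "first" run
        start = time.perf_counter()
        run()
        if sync is not None:
            sync()
        timings.append(time.perf_counter() - start)
    first, rest = timings[0], timings[1:]
    return {"first": first, "mean": sum(rest) / len(rest)}


def name_suffixes(
    base: Dict[str, float],
    smashed: Dict[str, float],
    threshold: float = 0.9,
) -> List[str]:
    """Return the suffixes whose smashed value is below threshold * base."""
    # Assumed metric keys; lower values are treated as better for all three.
    labels = {"latency": "turbo", "memory": "tiny", "energy": "green"}
    return [
        suffix
        for key, suffix in labels.items()
        if key in base and key in smashed and smashed[key] < threshold * base[key]
    ]
```

With a real model, `run` could wrap a call to `model.generate(...)`, and the same function could be called once with and once without a synchronization hook to compare the two kinds of measurement.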

Setup

You can run the smashed model with these steps:

- Check that the requirements from the original repo roneneldan/TinyStories-8M are installed. In particular, check the Python, CUDA, and transformers versions.

- Make sure that you have installed the quantization-related packages.

pip install transformers accelerate "bitsandbytes>0.37.0"

- Load & run the model.

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("PrunaAI/roneneldan-TinyStories-8M-bnb-8bit-smashed", trust_remote_code=True, device_map='auto')

tokenizer = AutoTokenizer.from_pretrained("roneneldan/TinyStories-8M")

input_ids = tokenizer("What is the color of prunes?,", return_tensors='pt').to(model.device)["input_ids"]

outputs = model.generate(input_ids, max_new_tokens=216)

print(tokenizer.decode(outputs[0]))
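Before running the steps above, it can help to confirm which versions are actually installed. The following is a minimal sketch using only the Python standard library; the helper name `installed_versions` is hypothetical, and the package list simply mirrors the pip command above.

```python
import platform
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    print("python", platform.python_version())
    wanted = ["transformers", "accelerate", "bitsandbytes"]
    for name, version in installed_versions(wanted).items():
        print(name, version or "not installed")
```

This does not report the CUDA version. If PyTorch is installed, `torch.version.cuda` shows the CUDA version that PyTorch was built against.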

Configurations

The configuration information is in smash_config.json.
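To inspect the configuration, the file can be read as ordinary JSON. This is a minimal sketch that assumes smash_config.json has already been downloaded to a local path; the helper names are hypothetical, and no particular keys are assumed to exist in the file.

```python
import json
from pathlib import Path
from typing import Any, Dict


def load_smash_config(path: str) -> Dict[str, Any]:
    """Read a JSON configuration file and return it as a dictionary."""
    with Path(path).open("r", encoding="utf-8") as handle:
        config = json.load(handle)
    if not isinstance(config, dict):
        raise ValueError(f"expected a JSON object in {path}")
    return config


def describe(config: Dict[str, Any]) -> str:
    """Format the top-level keys and values, one per line, sorted by key."""
    return "\n".join(f"{key}: {config[key]!r}" for key in sorted(config))
```

Calling `print(describe(load_smash_config("smash_config.json")))` lists whatever top-level settings the file contains.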

Credits & License

The license of the smashed model follows the license of the original model. Before using this model, please check the license of roneneldan/TinyStories-8M, which provided the base model. The license of pruna-engine is available here on PyPI.

Want to compress other models?

- Contact us and tell us which model to compress next here.

- Request access to easily compress your own AI models here.