SauerkrautLM-Vision-Document-Retrieval


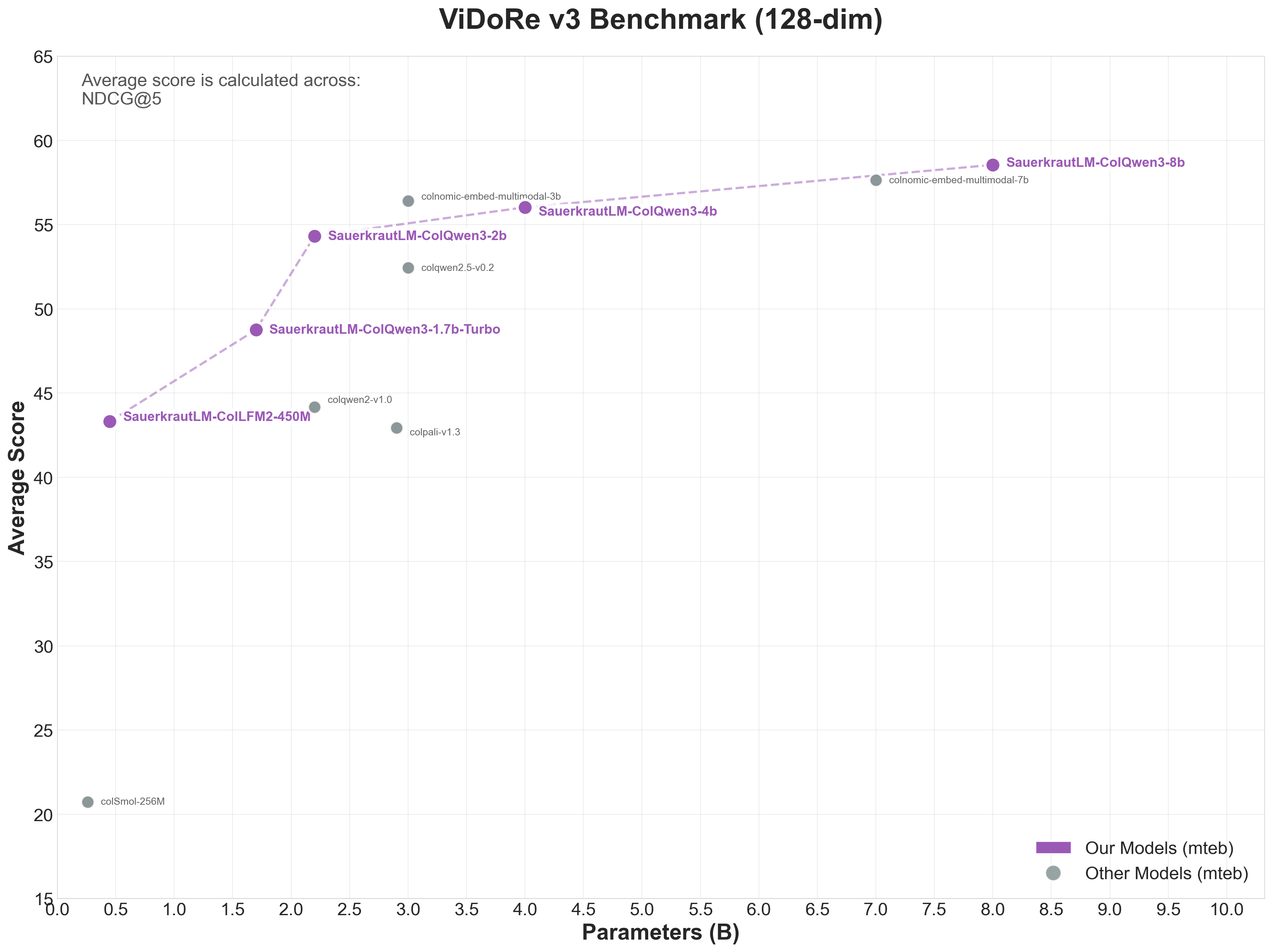

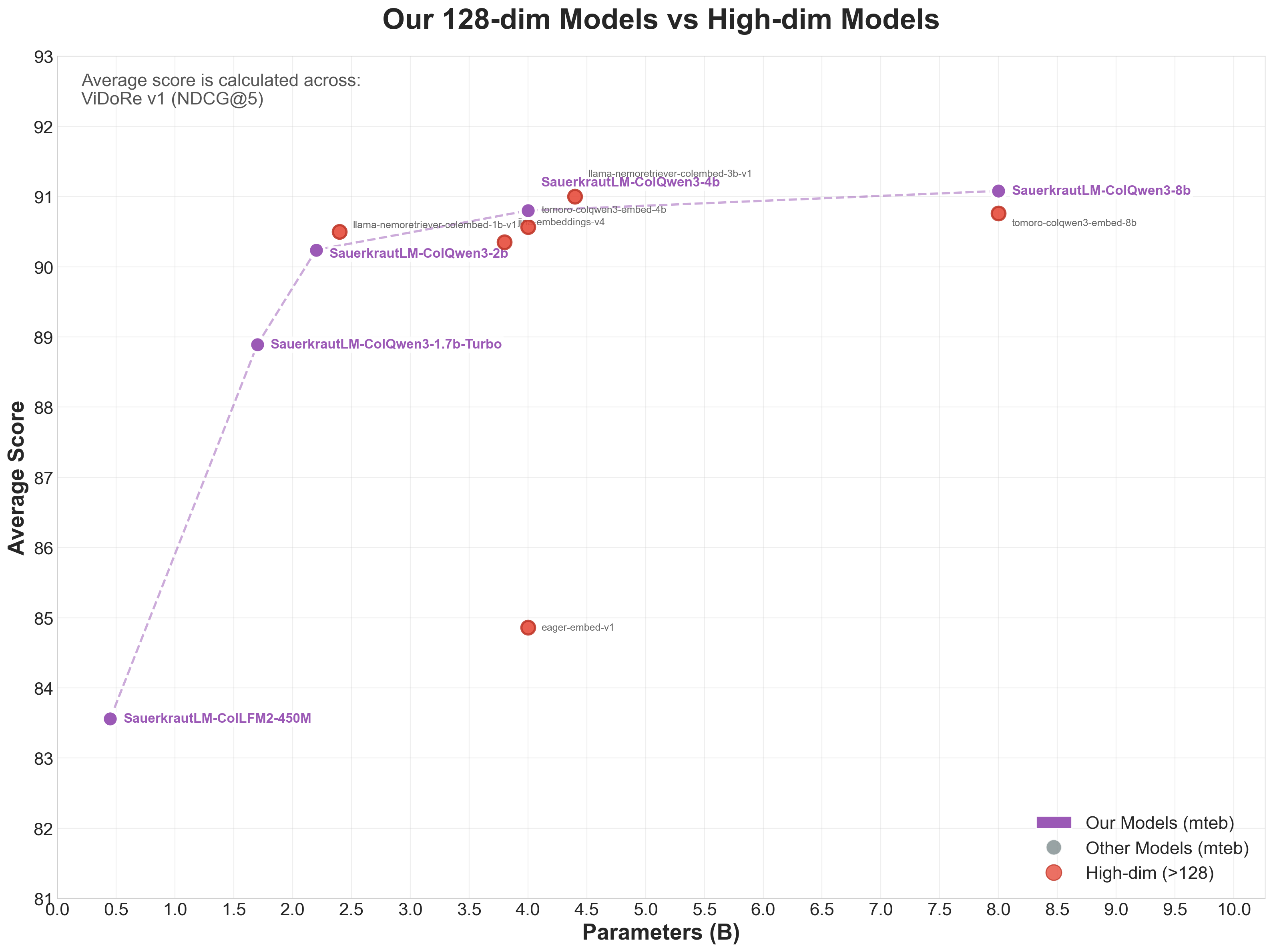

🏆 #1 Small Model (<1B) | Best-in-Class Efficiency

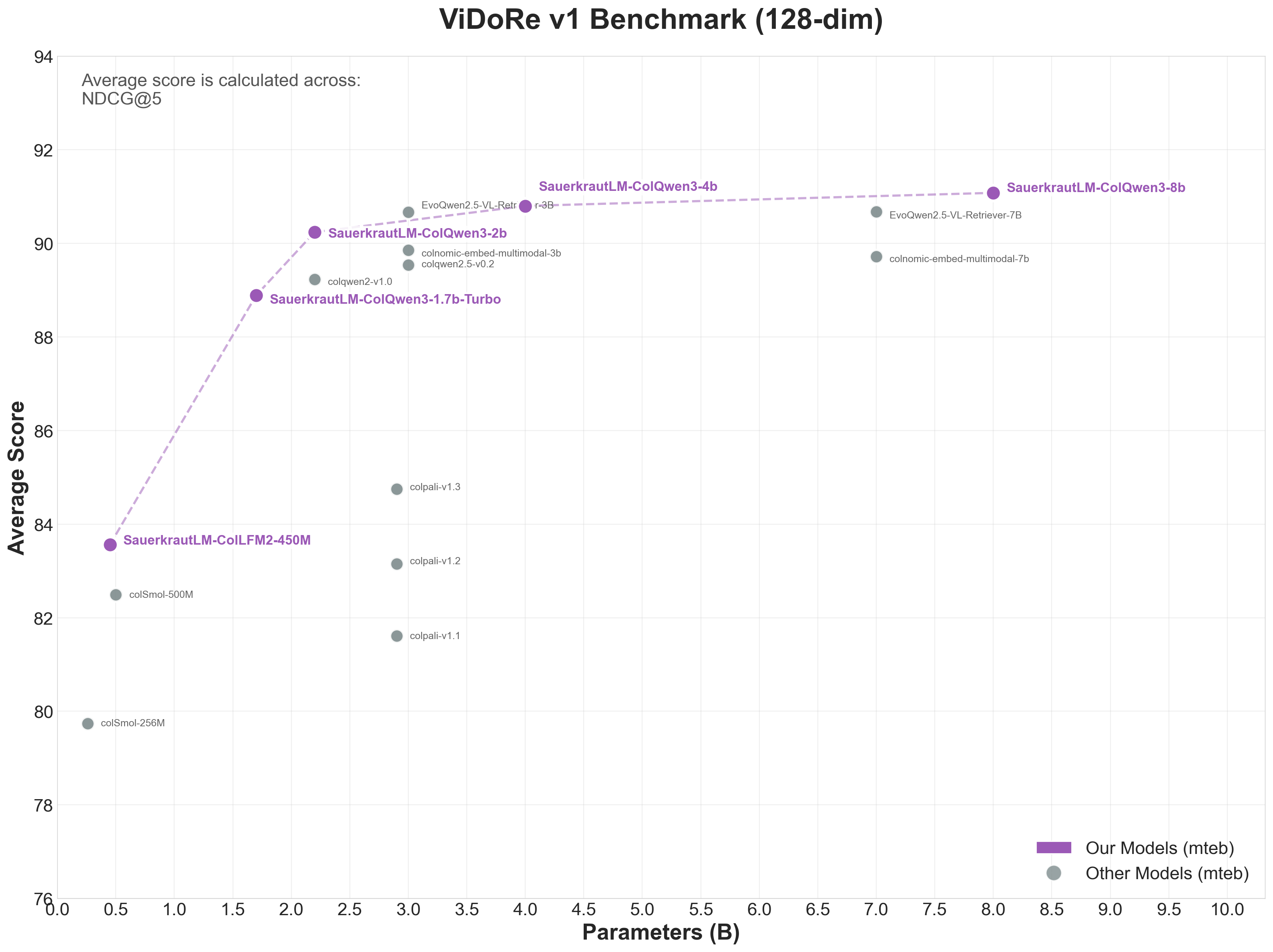

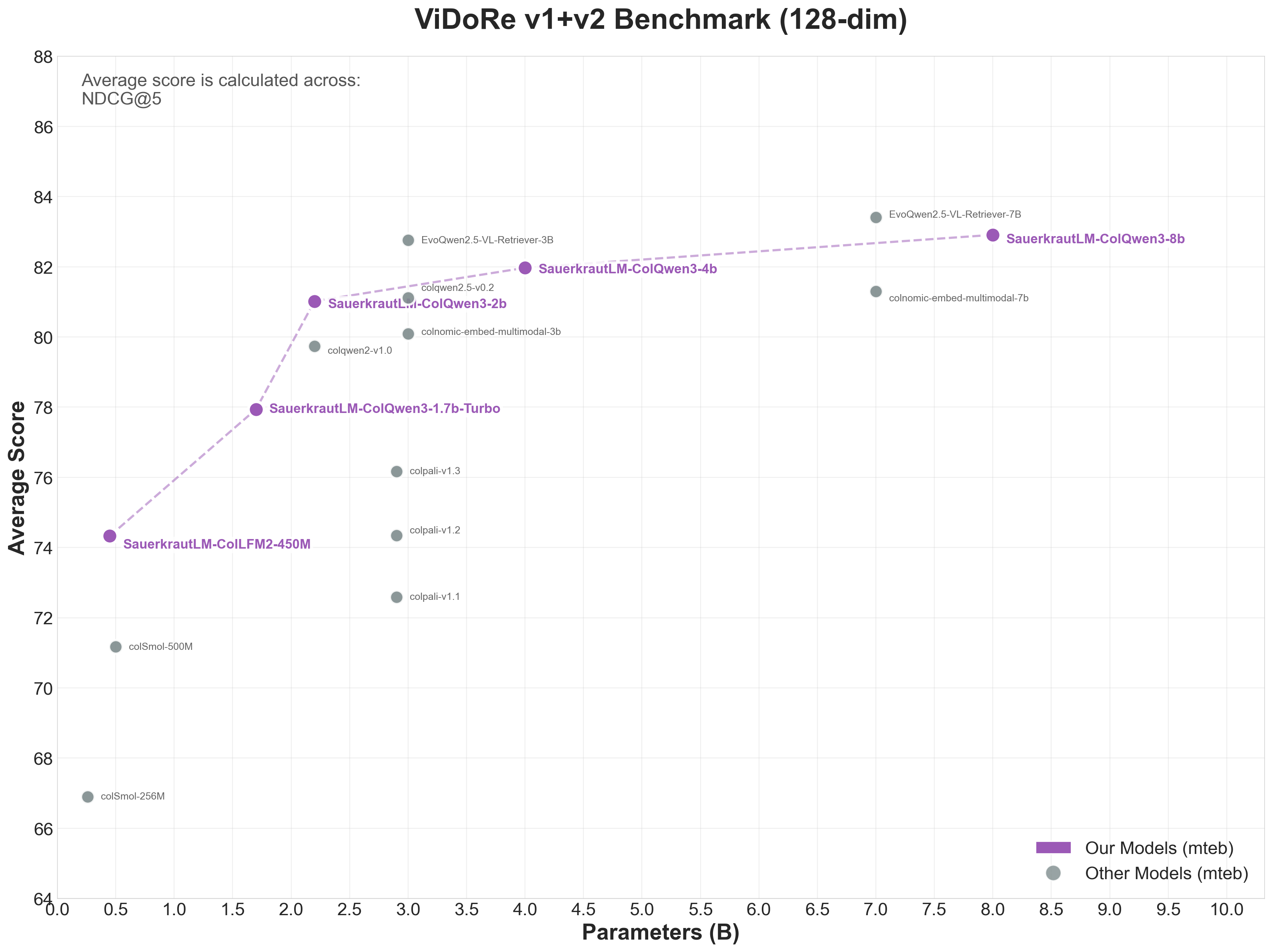

SauerkrautLM-ColLFM2-450M-v0.1 is the #1 small model (<1B parameters) for visual document retrieval. It achieves 83.56 NDCG@5 on ViDoRe v1, beating colSmol-500M (82.49) with 10% fewer parameters (450M vs. 500M)!
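NDCG@5, the headline metric above, rewards placing relevant pages near the top of the ranking. Here is a minimal sketch of the standard formula for a single query, assuming relevance labels are given in ranked order. The card reports scores averaged over queries and scaled to 0-100. The exact evaluation harness, for example how multiple relevant pages are handled, is not described here.

```python
import math
from typing import Sequence


def dcg_at_k(relevances: Sequence[float], k: int) -> float:
    """Discounted cumulative gain of the top-k results (rank 1 is undiscounted)."""
    # The result at 0-based index i sits at rank i + 1 and is discounted by log2(rank + 1).
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(relevances[:k]))


def ndcg_at_k(relevances: Sequence[float], k: int = 5) -> float:
    """NDCG@k: DCG of the system ranking divided by DCG of the ideal ordering."""
    ideal_dcg = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# One query whose single relevant page was retrieved at rank 2.
print(round(100 * ndcg_at_k([0, 1, 0, 0, 0]), 2))  # prints 63.09
```

A relevant page at rank 1 scores 100. A relevant page below rank 5 contributes nothing to NDCG@5.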

Traditional OCR-based retrieval loses layout, tables, and visual context. Our visual approach embeds the page images directly instead of extracted text.

| Benchmark | Score | Rank (Small <1B) |
|---|---|---|
| ViDoRe v1 | 83.56 | 🥇 #1 |
| MTEB v1+v2 | 74.33 | 🥇 #1 |
| ViDoRe v3 | 43.32 | 🥇 #1 |

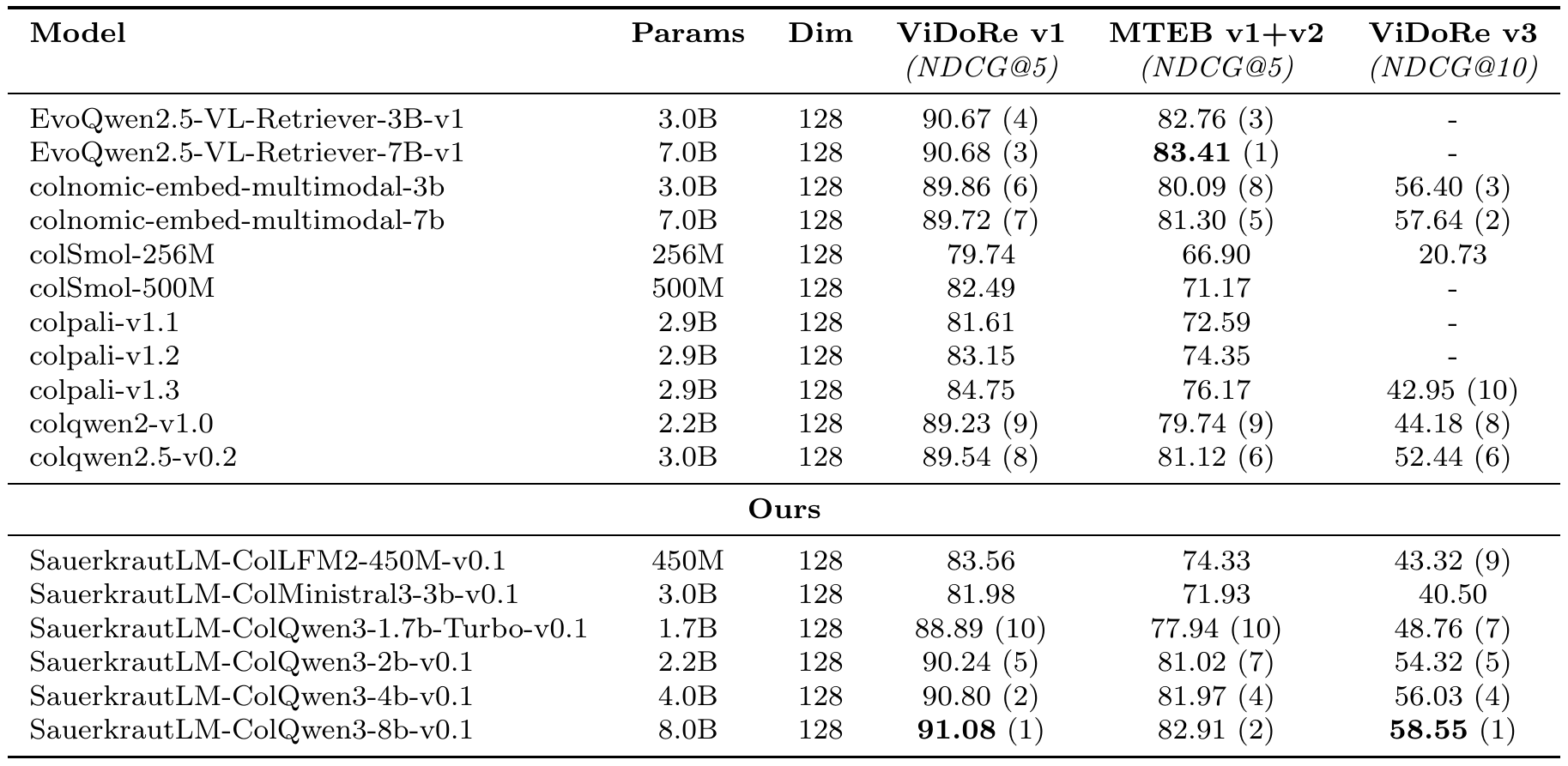

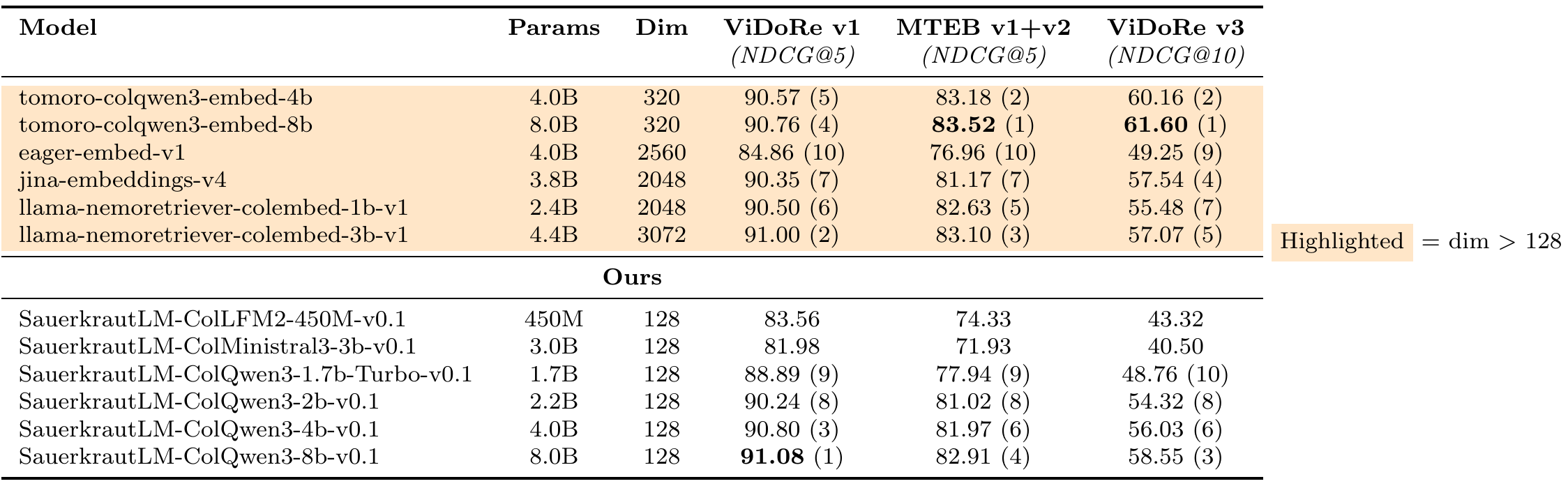

| Model | Params | Dim | ViDoRe v1 | MTEB v1+v2 | ViDoRe v3 |
|---|---|---|---|---|---|
| SauerkrautLM-ColLFM2-450M-v0.1 ⭐ | 450M | 128 | 83.56 | 74.33 | 43.32 |
| colSmol-500M | 500M | 128 | 82.49 | 71.17 | - |
| colSmol-256M | 256M | 128 | 79.74 | 66.90 | 20.73 |

#1 on every benchmark among the small models compared here! (No ViDoRe v3 score is listed for colSmol-500M.)

ViDoRe v1 Tasks:

| Task | Score |
|---|---|
| ArxivQA | 76.11 |
| DocVQA | 59.11 |
| InfoVQA | 88.36 |
| ShiftProject | 73.14 |
| SyntheticDocQA-AI | 98.76 |
| SyntheticDocQA-Energy | 94.39 |
| SyntheticDocQA-Gov | 94.61 |
| SyntheticDocQA-Health | 97.32 |
| TabFQuAD | 80.91 |
| TATDQA | 72.88 |
| Average | 83.56 |


ViDoRe v2 Tasks (Multilingual):

| Task | Score |
|---|---|
| ViDoRe-v2-2BioMed | 51.00 |
| ViDoRe-v2-2Econ | 48.35 |
| ViDoRe-v2-2ESG-HL | 54.87 |
| ViDoRe-v2-2ESG | 50.80 |
| Combined Average (MTEB v1+v2: 10 ViDoRe v1 + 4 ViDoRe v2 tasks) | 74.33 |

ViDoRe v3 Tasks:

| Task | Score |
|---|---|
| ViDoRe-v3-CS | 58.08 |
| ViDoRe-v3-Energy | 47.92 |
| ViDoRe-v3-FinanceEn | 47.72 |
| ViDoRe-v3-FinanceFr | 33.00 |
| ViDoRe-v3-HR | 43.37 |
| ViDoRe-v3-Industry | 30.21 |
| ViDoRe-v3-Pharma | 51.42 |
| ViDoRe-v3-Physics | 34.83 |
| Average | 43.32 |
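The reported averages are consistent with unweighted means over the per-task scores. In particular, the MTEB v1+v2 figure (74.33) matches the mean of all 14 ViDoRe v1 and v2 tasks. The quick check below uses only the numbers from the task tables. The pooling rule is inferred from the arithmetic, not stated by the card.

```python
from statistics import mean

# Per-task scores copied from the ViDoRe v1, v2 and v3 tables on this card.
vidore_v1 = {
    "ArxivQA": 76.11, "DocVQA": 59.11, "InfoVQA": 88.36, "ShiftProject": 73.14,
    "SyntheticDocQA-AI": 98.76, "SyntheticDocQA-Energy": 94.39,
    "SyntheticDocQA-Gov": 94.61, "SyntheticDocQA-Health": 97.32,
    "TabFQuAD": 80.91, "TATDQA": 72.88,
}
vidore_v2 = {
    "ViDoRe-v2-2BioMed": 51.00, "ViDoRe-v2-2Econ": 48.35,
    "ViDoRe-v2-2ESG-HL": 54.87, "ViDoRe-v2-2ESG": 50.80,
}
vidore_v3 = {
    "ViDoRe-v3-CS": 58.08, "ViDoRe-v3-Energy": 47.92, "ViDoRe-v3-FinanceEn": 47.72,
    "ViDoRe-v3-FinanceFr": 33.00, "ViDoRe-v3-HR": 43.37, "ViDoRe-v3-Industry": 30.21,
    "ViDoRe-v3-Pharma": 51.42, "ViDoRe-v3-Physics": 34.83,
}

# Unweighted means over tasks; the combined figure pools all v1 and v2 tasks.
averages = {
    "ViDoRe v1": mean(list(vidore_v1.values())),
    "MTEB v1+v2": mean(list(vidore_v1.values()) + list(vidore_v2.values())),
    "ViDoRe v3": mean(list(vidore_v3.values())),
}
for name, value in averages.items():
    print(f"{name}: {value:.3f}")
```

Each printed mean agrees with the reported two-decimal average within rounding.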

| Metric | ColLFM2-450M | colSmol-500M | Advantage |
|---|---|---|---|
| Parameters | 450M | 500M | -10% |
| ViDoRe v1 | 83.56 | 82.49 | +1.07 |
| MTEB v1+v2 | 74.33 | 71.17 | +3.16 |

| Property | Value |
|---|---|
| Base Model | LiquidAI/LFM2-VL-450M |
| Parameters | 450M |
| Embedding Dimension | 128 |
| VRAM (bfloat16) | ~0.9 GB |
| Max Context Length | 32,768 tokens |
| Image Resolution | 512×512 native |
| Image Tokens | 64-256 (dynamic) |
| Vision Encoder | SigLIP2 (86M) |
| License | LFM 1.0 |
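The memory and index-size figures follow from the table with simple arithmetic. The ~0.9 GB VRAM figure matches the bfloat16 weights alone (450M × 2 bytes). The sketch below also estimates storage for one page's multi-vector embedding. It assumes roughly one 128-dimensional bfloat16 vector per image token; the real vector count may differ slightly, for example because of extra prompt tokens.

```python
# Figures from the property table; bfloat16 stores 2 bytes per value.
PARAMS = 450e6
EMBED_DIM = 128
BYTES_BF16 = 2


def weight_memory_gb(params: float = PARAMS, bytes_per_param: int = BYTES_BF16) -> float:
    """Memory for the model weights alone (excludes activations and framework overhead)."""
    return params * bytes_per_param / 1e9


def page_index_kib(num_vectors: int, dim: int = EMBED_DIM, bytes_per_value: int = BYTES_BF16) -> float:
    """Storage for one page's multi-vector embedding, in KiB.

    Assumption: about one 128-dim vector per image token.
    """
    return num_vectors * dim * bytes_per_value / 1024


print(f"weights: {weight_memory_gb():.2f} GB")
for tokens in (64, 256):
    print(f"{tokens} image tokens -> {page_index_kib(tokens):.0f} KiB per page")
```

Under this assumption, a page costs about 16 KiB (64 tokens) to 64 KiB (256 tokens) of index storage before any compression.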

Rather than seeing all training data at once, ColLFM2 was trained with a curriculum that moves from easy to hard examples:

```
Stage 1: Easy examples (high-quality, clear documents)
    ↓
Stage 2: Medium examples (mixed quality)
    ↓
Stage 3: Hard examples (complex layouts, noisy scans)
    ↓
Stage 4: Full mixture with hard negatives
```
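The card does not say how difficulty was assigned or how long each stage ran. The sketch below only illustrates the staging logic. It assumes each training example already carries a hypothetical `difficulty` label. `Example`, `STAGES`, and `curriculum` are illustrative names, and hard-negative mining itself is left out.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass
class Example:
    query: str
    page_id: str
    difficulty: str  # hypothetical label: "easy", "medium" or "hard"


# Hypothetical schedule mirroring the four stages above:
# (stage name, difficulties included, whether mined hard negatives are attached).
STAGES = [
    ("stage1", {"easy"}, False),
    ("stage2", {"medium"}, False),
    ("stage3", {"hard"}, False),
    ("stage4", {"easy", "medium", "hard"}, True),
]


def curriculum(examples: List[Example]) -> Iterator[Tuple[str, List[Example], bool]]:
    """Yield the training subset for each stage, from easy data to the full mixture."""
    for name, allowed, use_hard_negatives in STAGES:
        subset = [ex for ex in examples if ex.difficulty in allowed]
        yield name, subset, use_hard_negatives


data = [
    Example("q1", "p1", "easy"),
    Example("q2", "p2", "medium"),
    Example("q3", "p3", "hard"),
    Example("q4", "p4", "easy"),
]
for name, subset, hard_negatives in curriculum(data):
    print(name, len(subset), hard_negatives)
```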

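Two branches are derived from the base model, an mMARCO specialist and a retrieval model, and then combined with a hierarchical merge:

```
      Base LFM2-VL-450M
              ↓
          ┌───┴───┐
          ↓       ↓
       mMARCO   Retrieval
     Specialist   Model
          ↓       ↓
          └───┬───┘
              ↓
      Hierarchical Merge
              ↓
         Final Model
```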
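The card does not describe how the hierarchical merge works. As a generic stand-in, and not the authors' method, the simplest way to combine two fine-tuned branches of the same base model is linear interpolation of their weights. In the sketch below, toy NumPy arrays stand in for real checkpoints, and `linear_merge` is a hypothetical helper.

```python
from typing import Dict

import numpy as np

StateDict = Dict[str, np.ndarray]


def linear_merge(a: StateDict, b: StateDict, weight: float = 0.5) -> StateDict:
    """Element-wise interpolation (1 - weight) * a + weight * b for every parameter."""
    if a.keys() != b.keys():
        raise ValueError("both models must have the same parameter names")
    merged: StateDict = {}
    for name in a:
        if a[name].shape != b[name].shape:
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = (1.0 - weight) * a[name] + weight * b[name]
    return merged


specialist = {"w": np.array([0.0, 2.0])}
retrieval = {"w": np.array([2.0, 4.0])}
print(linear_merge(specialist, retrieval)["w"])
```

Interpolation only makes sense because both branches share the same base model, so their parameter names and shapes line up one-to-one.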

| Setting | Value |
|---|---|
| GPUs | 4x NVIDIA RTX 6000 Ada (48GB) |
| Effective Batch Size | 256 |
| Precision | bfloat16 |
| Curriculum Stages | 4 |
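The card gives only the effective batch size. It does not state the per-GPU batch or whether gradient accumulation was used. The arithmetic below shows how an effective batch of 256 on 4 GPUs could be split, using hypothetical per-device batch sizes.

```python
def grad_accumulation_steps(effective_batch: int, num_gpus: int, per_device_batch: int) -> int:
    """Accumulation steps so that num_gpus * per_device_batch * steps == effective_batch."""
    samples_per_pass = num_gpus * per_device_batch
    if effective_batch % samples_per_pass:
        raise ValueError("effective batch must be divisible by num_gpus * per_device_batch")
    return effective_batch // samples_per_pass


# Effective batch 256 on 4 GPUs; the per-device sizes here are hypothetical.
for per_device in (64, 32, 16):
    print(per_device, grad_accumulation_steps(256, 4, per_device))
```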

| Dataset | Description |
|---|---|
| vidore/colpali_train_set | ColPali training data |
| openbmb/VisRAG-Ret-Train-In-domain-data | Visual RAG training data |
| llamaindex/vdr-multilingual-train | Multilingual retrieval (with curriculum) |
| unicamp-dl/mmarco | mMARCO for specialist model |
| VAGO Multilingual Datasets | Proprietary multilingual data |

⚠️ Important: Install our package before loading the model:

```bash
pip install git+https://github.com/VAGOsolutions/sauerkrautlm-colpali
```

```python
import torch
from PIL import Image

from sauerkrautlm_colpali.models import ColLFM2, ColLFM2Processor

model_name = "VAGOsolutions/SauerkrautLM-ColLFM2-450M-v0.1"

# Load the retrieval model in bfloat16 on the first GPU.
model = ColLFM2.from_pretrained(
    model_name,
    torch_dtype=torch.bfloat16,
    device_map="cuda:0",
).eval()
processor = ColLFM2Processor.from_pretrained(model_name)

# Inputs: document page images (converted to RGB) and natural-language queries.
images = [Image.open("document.png").convert("RGB")]
queries = ["What is the main topic?"]

batch_images = processor.process_images(images).to(model.device)
batch_queries = processor.process_queries(queries).to(model.device)

# Embed pages and queries without tracking gradients.
with torch.no_grad():
    image_embeddings = model(**batch_images)
    query_embeddings = model(**batch_queries)

# Score every query against every page (higher means more relevant).
scores = processor.score(query_embeddings, image_embeddings)
```
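`processor.score` compares the multi-vector query embeddings with the page embeddings. The "Col" naming and multi-vector design suggest ColPali-style late interaction (MaxSim), assuming the package follows that scheme. Under that scheme, each query token is matched to its most similar page token, and these maxima are summed. The NumPy sketch below illustrates that computation; it is not the package's implementation. `maxsim_scores` is a hypothetical helper, and the toy 2-dimensional vectors stand in for the model's 128-dimensional ones.

```python
from typing import Sequence

import numpy as np


def maxsim_scores(queries: Sequence[np.ndarray], pages: Sequence[np.ndarray]) -> np.ndarray:
    """Late-interaction scores with one row per query and one column per page.

    Each query is an (n_query_tokens, dim) array; each page is (n_page_tokens, dim).
    """
    scores = np.zeros((len(queries), len(pages)))
    for i, q in enumerate(queries):
        for j, p in enumerate(pages):
            token_sims = q @ p.T  # dot product of every query token with every page token
            scores[i, j] = token_sims.max(axis=1).sum()  # best page token per query token, summed
    return scores


# Toy 2-dim embeddings (the real model uses 128 dimensions).
query = np.array([[1.0, 0.0], [0.0, 1.0]])
page_a = np.array([[1.0, 0.0], [0.0, 0.5]])
page_b = np.array([[0.2, 0.2]])
print(maxsim_scores([query], [page_a, page_b]))  # [[1.5 0.4]]
```

Ranking pages for a query is then an `argsort` over that query's row. Because scoring happens per token, page embeddings can be computed once and stored offline.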

✅ Perfect for:

This model is licensed under the LFM 1.0 License from LiquidAI. Please review the full license before commercial use.

```bibtex
@misc{sauerkrautlm-colpali-2025,
  title={SauerkrautLM-ColPali: Multi-Vector Vision Retrieval Models},
  author={David Golchinfar},
  organization={VAGO Solutions},
  year={2025},
  url={https://github.com/VAGOsolutions/sauerkrautlm-colpali}
}
```
