---
license: llama3
language:
- en
- ja
- zh
tags:
- roleplay
- llama3
- sillytavern
- idol
---

# Special Thanks:
- Lewdiculous for the superb GGUF version. Thank you for your conscientious and responsible dedication.
- https://huggingface.co/LWDCLS/llama3-8B-DarkIdol-2.1-Uncensored-1048K-GGUF-IQ-Imatrix-Request

# Model Description:
The model combination has been readjusted to better fulfill various roles and has been adapted for mobile phones.
- Saves money (Llama 3)
- Uncensored
- Quick response
- The underlying model is winglian/llama-3-8b-1m-PoSE
- Scholarly responses akin to a thesis. (I tend to write songs extensively, to the point where one song becomes almost as detailed as a thesis. :)
- DarkIdol: roles that you can imagine and those that you cannot.
- Roleplay
- Specialized in various role-playing scenarios
- For more, see the test roles: https://huggingface.co/aifeifei798/llama3-8B-DarkIdol-1.2/tree/main/test
- For more, see the LM Studio presets: https://huggingface.co/aifeifei798/llama3-8B-DarkIdol-1.2/tree/main/config-presets

# Change Log
### 2024-06-26
- 1048K

# Questions
- The model's responses are for reference only; please do not fully trust them.
- Testing with other tools is not comprehensive, so there may be new issues. Please leave a message if you encounter any.

# Issues
- The model's replies are for reference only; please do not fully trust them.
- Testing with the tools is incomplete.

# Stop Strings
```python
# Strings at which generation should stop
stop = [
    "## Instruction:",
    "### Instruction:",
    "<|end_of_text|>",
    " //:",
    "</s>",
    "<3```",
    "### Note:",
    "### Input:",
    "### Response:",
    "### Emoticons:"
]
```
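
If a frontend does not apply stop strings itself, the list above can be applied after generation. The following is a minimal sketch under that assumption: `truncate_at_stop` is a hypothetical helper, not part of any of the tools listed in this card, and `raw` is a made-up completion used only for illustration.

```python
def truncate_at_stop(text, stop_strings):
    """Return text cut at the earliest occurrence of any stop string."""
    cut = len(text)
    for s in stop_strings:
        idx = text.find(s)
        # Keep the smallest index so the earliest stop string wins
        if idx != -1 and idx < cut:
            cut = idx
    return text[:cut]


# Hypothetical raw completion, for illustration only
raw = "Sure, here is the song.\n### Response:\nextra text"
stop = ["## Instruction:", "### Instruction:", "### Response:", "<|end_of_text|>"]

print(truncate_at_stop(raw, stop))
```

Cutting at the earliest match, rather than at the first string in the list that matches, matters here because some entries overlap: `## Instruction:` is a substring of `### Instruction:`.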

# Model Use
- Koboldcpp https://github.com/LostRuins/koboldcpp
- Since KoboldCpp is taking a while to update with the latest llama.cpp commits, I recommend this [fork](https://github.com/Nexesenex/kobold.cpp) if anyone has issues.
- LM Studio https://lmstudio.ai/
- llama.cpp https://github.com/ggerganov/llama.cpp
- Backyard AI https://backyard.ai/
- Layla is an AI chatbot that runs offline on your device: no internet connection required, no censorship, complete privacy. Layla Lite https://www.layla-network.ai/
- Layla Lite llama3-8B-DarkIdol-2.1-Uncensored-1048K-Q4_K_S-imat.gguf https://huggingface.co/LWDCLS/llama3-8B-DarkIdol-2.1-Uncensored-1048K/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-1048K-Q4_K_S-imat.gguf?download=true
- More GGUF files at https://huggingface.co/LWDCLS/llama3-8B-DarkIdol-2.1-Uncensored-1048K-GGUF-IQ-Imatrix-Request

# Character
- https://character-tavern.com/
- https://characterhub.org/
- https://pygmalion.chat/
- https://aetherroom.club/
- https://backyard.ai/
- Layla AI chatbot

### If you want to use vision functionality:
* You must use the latest version of [Koboldcpp](https://github.com/Nexesenex/kobold.cpp).

### To use the multimodal capabilities of this model and use **vision**, you need to load the specified **mmproj** file, which can be found inside this model repo. [Llava MMProj](https://huggingface.co/Nitral-AI/Llama-3-Update-3.0-mmproj-model-f16)

* You can load the **mmproj** by using the corresponding section in the interface.

### Thank you:
To the authors for their hard work, which has given me more options to easily create what I want. Thank you for your efforts.
- Hastagaras
- Gryphe
- cgato
- ChaoticNeutrals
- mergekit
- merge
- transformers
- llama
- Nitral-AI
- MLP-KTLim
- rinna
- hfl
- Rupesh2
- stephenlzc
- theprint
- Sao10K
- turboderp
- TheBossLevel123
- winglian
- .........

---
base_model:
- turboderp/llama3-turbcat-instruct-8b
- Nitral-AI/Hathor_Fractionate-L3-8B-v.05
- Hastagaras/Jamet-8B-L3-MK.V-Blackroot
- Sao10K/L3-8B-Stheno-v3.3-32K
- TheBossLevel123/Llama3-Toxic-8B-Float16
- cgato/L3-TheSpice-8b-v0.8.3
- winglian/llama-3-8b-1m-PoSE
library_name: transformers
tags:
- mergekit
- merge

---

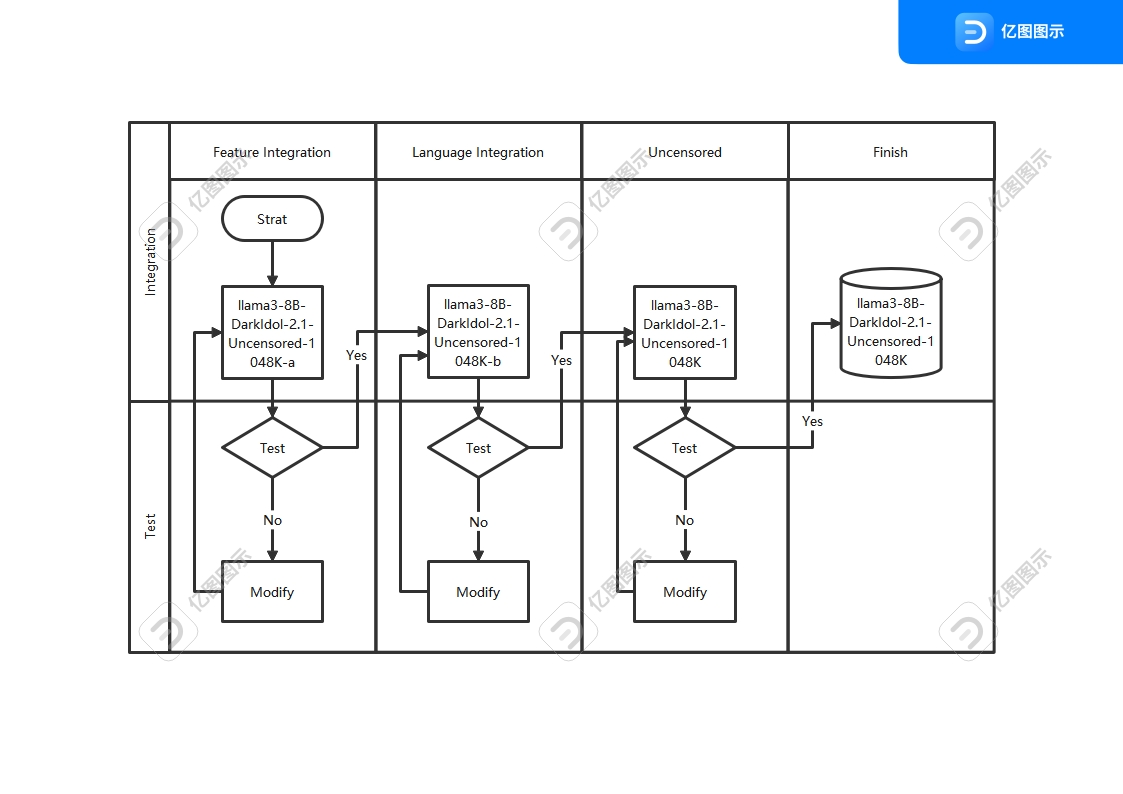

# llama3-8B-DarkIdol-2.1-Uncensored-1048K-a

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using [winglian/llama-3-8b-1m-PoSE](https://huggingface.co/winglian/llama-3-8b-1m-PoSE) as a base.
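
For intuition, here is a minimal sketch of the interpolation described in the Model Stock paper, applied to toy flat weight lists. It assumes the ratio `t = k*cos(theta) / (1 + (k-1)*cos(theta))`, with `cos(theta)` averaged over all pairs of fine-tuned offsets from the base. The function name `model_stock_merge` is hypothetical, and mergekit's actual implementation operates on model tensors and may differ in detail.

```python
import math
from itertools import combinations


def model_stock_merge(base, finetuned):
    """Merge flat weight lists with the Model Stock interpolation ratio.

    base: list of floats (base model weights)
    finetuned: list of at least two weight lists of the same length
    """
    k = len(finetuned)
    # Offset of each fine-tuned model from the base weights
    deltas = [[w - b for w, b in zip(ft, base)] for ft in finetuned]

    def cosine(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
        return dot / norm if norm else 0.0

    # Average cosine similarity over all pairs of offsets
    pairs = list(combinations(deltas, 2))
    cos_theta = sum(cosine(u, v) for u, v in pairs) / len(pairs)

    # Interpolation ratio between the fine-tuned average and the base
    t = k * cos_theta / (1 + (k - 1) * cos_theta)

    avg = [sum(col) / k for col in zip(*finetuned)]
    return [t * a + (1 - t) * b for a, b in zip(avg, base)]


# Toy example: two fine-tuned models that moved in the same direction
print(model_stock_merge([0.0, 0.0], [[1.0, 0.0], [1.0, 0.0]]))
```

When the fine-tuned offsets agree (cosine near 1), the result stays close to their average; when they are orthogonal (cosine near 0), the result falls back toward the base weights.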

### Models Merged

The following models were included in the merge:
* [turboderp/llama3-turbcat-instruct-8b](https://huggingface.co/turboderp/llama3-turbcat-instruct-8b)
* [Nitral-AI/Hathor_Fractionate-L3-8B-v.05](https://huggingface.co/Nitral-AI/Hathor_Fractionate-L3-8B-v.05)
* [Hastagaras/Jamet-8B-L3-MK.V-Blackroot](https://huggingface.co/Hastagaras/Jamet-8B-L3-MK.V-Blackroot)
* [Sao10K/L3-8B-Stheno-v3.3-32K](https://huggingface.co/Sao10K/L3-8B-Stheno-v3.3-32K)
* [TheBossLevel123/Llama3-Toxic-8B-Float16](https://huggingface.co/TheBossLevel123/Llama3-Toxic-8B-Float16)
* [cgato/L3-TheSpice-8b-v0.8.3](https://huggingface.co/cgato/L3-TheSpice-8b-v0.8.3)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: Sao10K/L3-8B-Stheno-v3.3-32K
  - model: Hastagaras/Jamet-8B-L3-MK.V-Blackroot
  - model: cgato/L3-TheSpice-8b-v0.8.3
  - model: Nitral-AI/Hathor_Fractionate-L3-8B-v.05
  - model: TheBossLevel123/Llama3-Toxic-8B-Float16
  - model: turboderp/llama3-turbcat-instruct-8b
  - model: winglian/llama-3-8b-1m-PoSE
merge_method: model_stock
base_model: winglian/llama-3-8b-1m-PoSE
dtype: bfloat16
```

---
base_model:
- hfl/llama-3-chinese-8b-instruct-v3
- MLP-KTLim/llama-3-Korean-Bllossom-8B
- rinna/llama-3-youko-8b
library_name: transformers
tags:
- mergekit
- merge

---

# llama3-8B-DarkIdol-2.1-Uncensored-1048K-b

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using ./llama3-8B-DarkIdol-2.1-1048K-a as a base.

### Models Merged

The following models were included in the merge:
* [hfl/llama-3-chinese-8b-instruct-v3](https://huggingface.co/hfl/llama-3-chinese-8b-instruct-v3)
* [MLP-KTLim/llama-3-Korean-Bllossom-8B](https://huggingface.co/MLP-KTLim/llama-3-Korean-Bllossom-8B)
* [rinna/llama-3-youko-8b](https://huggingface.co/rinna/llama-3-youko-8b)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: hfl/llama-3-chinese-8b-instruct-v3
  - model: rinna/llama-3-youko-8b
  - model: MLP-KTLim/llama-3-Korean-Bllossom-8B
  - model: ./llama3-8B-DarkIdol-2.1-1048K-a
merge_method: model_stock
base_model: ./llama3-8B-DarkIdol-2.1-1048K-a
dtype: bfloat16
```

---
base_model:
- stephenlzc/dolphin-llama3-zh-cn-uncensored
- theprint/Llama-3-8B-Lexi-Smaug-Uncensored
- Rupesh2/OrpoLlama-3-8B-instruct-uncensored
library_name: transformers
tags:
- mergekit
- merge

---

# llama3-8B-DarkIdol-2.1-Uncensored-1048K

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using ./llama3-8B-DarkIdol-2.1-1048K-b as a base.

### Models Merged

The following models were included in the merge:
* [stephenlzc/dolphin-llama3-zh-cn-uncensored](https://huggingface.co/stephenlzc/dolphin-llama3-zh-cn-uncensored)
* [theprint/Llama-3-8B-Lexi-Smaug-Uncensored](https://huggingface.co/theprint/Llama-3-8B-Lexi-Smaug-Uncensored)
* [Rupesh2/OrpoLlama-3-8B-instruct-uncensored](https://huggingface.co/Rupesh2/OrpoLlama-3-8B-instruct-uncensored)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: Rupesh2/OrpoLlama-3-8B-instruct-uncensored
  - model: stephenlzc/dolphin-llama3-zh-cn-uncensored
  - model: theprint/Llama-3-8B-Lexi-Smaug-Uncensored
  - model: ./llama3-8B-DarkIdol-2.1-1048K-b
merge_method: model_stock
base_model: ./llama3-8B-DarkIdol-2.1-1048K-b
dtype: bfloat16
```
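
The three configurations in this card form a chain: stage a is the base for stage b, and stage b is the base for the final model. The sketch below shows one way to express that order as `mergekit-yaml <config> <output_dir>` invocations. The config file names and the final output directory are hypothetical, since the card does not name them; the two intermediate directories match the local paths referenced in the configs. The commands are only built and printed, not executed.

```python
# Stage order taken from the three configurations in this card.
# Config file names and the final output directory are assumptions.
STAGES = [
    ("config-a.yaml", "./llama3-8B-DarkIdol-2.1-1048K-a"),
    ("config-b.yaml", "./llama3-8B-DarkIdol-2.1-1048K-b"),
    ("config-final.yaml", "./llama3-8B-DarkIdol-2.1-Uncensored-1048K"),
]


def build_commands(stages):
    """Return one mergekit-yaml command (as an argument list) per stage, in order."""
    return [["mergekit-yaml", config, output_dir] for config, output_dir in stages]


for cmd in build_commands(STAGES):
    # To run a stage for real (requires mergekit to be installed):
    #   subprocess.run(cmd, check=True)
    print(" ".join(cmd))
```

Each stage has to finish before the next one starts, because the later configs reference the earlier output directories as their `base_model`.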