metadata

language:

- en

base_model:

- Ultralytics/YOLO11

tags:

- yolo

- yolo11

- yolo11n

- yolo11n-seg

- fish

- Segmentation

datasets:

- akridge/MOUSS_fish_segment_dataset_grayscale

pipeline_tag: image-segmentation

Yolo11n-seg Fish Segmentation

Model Overview

This model detects and segments fish in grayscale underwater imagery using the YOLO11n-seg architecture. Its training masks were generated automatically rather than drawn by hand: a fish detector located each fish, and the Segment Anything Model (SAM) turned each detection into a segmentation mask. Training on these SAM-generated masks lets the model outline fish boundaries instead of only placing boxes around them.

- Model Architecture: YOLO11n-seg

- Task: Fish Segmentation

- Footage Type: Grayscale Underwater Footage

- Classes: 1 (Fish)

Demo Space:

Test Results

Model Weights

Download the model weights here

Auto-Training Process

The segmentation dataset was generated using an automated pipeline:

- Detection Model: A pre-trained YOLO model (https://huggingface.co/akridge/yolo11-fish-detector-grayscale/) was used to detect fish.

- Segmentation: The SAM model (sam_b.pt) was applied to generate precise segmentation masks around detected fish.

- Output: The dataset was saved at /content/sam_dataset/.

Because no masks were drawn by hand, this automated process made dataset creation considerably faster than manual annotation.
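The pipeline above can be sketched with the `auto_annotate` helper that ships with Ultralytics, which runs a detector and then prompts SAM with the resulting boxes. This is a minimal sketch, not necessarily the exact script used for this dataset: `IMAGE_DIR` and `DETECTOR_WEIGHTS` are hypothetical placeholders, since the card names neither the image folder nor the local detector weights file.

```python
from pathlib import Path

# Hypothetical paths (assumptions, not taken from the card): point these at
# your own image folder and at the downloaded detector weights.
IMAGE_DIR = "/content/images"
DETECTOR_WEIGHTS = "yolo11n_fish_detector.pt"


def run_auto_annotation(image_dir, det_weights,
                        sam_weights="sam_b.pt",
                        output_dir="/content/sam_dataset/"):
    """Detect fish with a YOLO detector, then let SAM segment each detection.

    Returns the sorted list of label files written to output_dir.
    """
    # Imported lazily so this file can be loaded without ultralytics installed.
    from ultralytics.data.annotator import auto_annotate

    auto_annotate(
        data=image_dir,          # folder of images to annotate
        det_model=det_weights,   # detector that proposes fish boxes
        sam_model=sam_weights,   # SAM checkpoint named in the card
        output_dir=output_dir,   # one .txt label file per image
    )
    return sorted(Path(output_dir).glob("*.txt"))


# Example call (requires ultralytics and the weights files):
# label_files = run_auto_annotation(IMAGE_DIR, DETECTOR_WEIGHTS)
```

Each label file holds one line per fish in YOLO segmentation format: the class index followed by the mask polygon as normalized x y pairs.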

Intended Use

- Real-time fish detection and segmentation on grayscale underwater imagery.

- Post-processing of video or images for research purposes in marine biology and ecosystem monitoring.

Training Configuration

- Dataset: SAM-assisted segmentation dataset.

- Training/Validation Split: 80% training, 20% validation.

- Number of Epochs: 50

- Learning Rate: 0.001

- Batch Size: 16

- Image Size: 640x640
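As a rough illustration, the configuration above maps onto Ultralytics training arguments as follows. This is a sketch under assumptions: the starting checkpoint `yolo11n-seg.pt`, the `data_yaml` argument, and the `split_train_val` helper are not taken from the card, and the split procedure actually used is not documented.

```python
import random

# Hyperparameters listed in the card, using Ultralytics argument names.
TRAIN_ARGS = {"epochs": 50, "lr0": 0.001, "batch": 16, "imgsz": 640}


def split_train_val(items, val_fraction=0.2, seed=0):
    """Shuffle items reproducibly and split them into (train, val) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


def train_fish_segmenter(data_yaml, weights="yolo11n-seg.pt"):
    """Fine-tune a YOLO11n-seg checkpoint on the SAM-generated dataset.

    data_yaml is a hypothetical dataset config listing the train and val
    image folders and the single class name ("Fish").
    """
    # Imported lazily so the split helper works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

With the default optimizer setting, some Ultralytics versions choose the learning rate automatically and may ignore `lr0`; passing an explicit `optimizer` argument is one way to make the value take effect.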

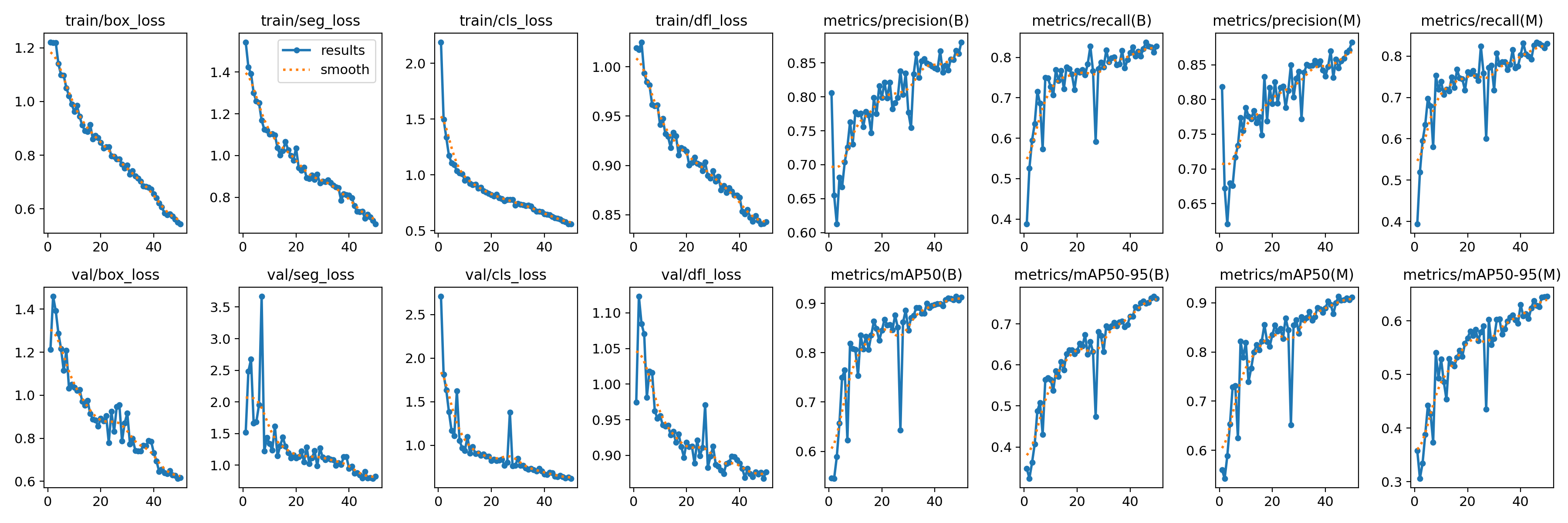

Results and Metrics

The model was trained and evaluated on the generated segmentation dataset with the following results:

Confusion Matrix

How to Use the Model

To use the trained YOLO11n-seg model for fish segmentation:

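- Load the model and run inference:

from ultralytics import YOLO

# Load the trained YOLO11n-seg weights

model = YOLO("yolo11n_fish_seg_trained.pt")

# Perform inference on an image; the call returns a list with one Results object per image

results = model("/content/test_image.jpg")

# Display the first result with the predicted masks overlaid

results[0].show()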
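For post-processing, each element returned by the model call is an Ultralytics Results object whose `masks.xy` attribute holds one pixel-coordinate polygon per detected fish. The sketch below shows one way to turn that into per-fish records; `polygon_area` and `summarize_result` are illustrative helpers, not part of Ultralytics or of this model's release.

```python
def polygon_area(points):
    """Area of a simple polygon (in squared pixels) via the shoelace formula."""
    area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        area += x1 * y2 - x2 * y1
    return abs(area) / 2.0


def summarize_result(result):
    """Return one dict per detected fish for a single Results object."""
    if result.masks is None:  # no fish found in this image
        return []
    confidences = result.boxes.conf.tolist()
    return [
        {"confidence": conf, "area_px": polygon_area(poly.tolist())}
        for conf, poly in zip(confidences, result.masks.xy)
    ]
```

Calling `summarize_result(results[0])` on the inference output gives a list that can be written to CSV or filtered by confidence before further analysis.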