|

--- |

|

license: creativeml-openrail-m |

|

tags: |

|

- text-to-image |

|

- stable-diffusion |

|

- anime |

|

- aiart |

|

--- |

|

|

|

|

|

**This model is trained on 33 different concepts from Bofuri: I Don't Want to Get Hurt, so I'll Max Out My Defense (防振り: 痛いのは嫌なので防御力に極振りしたいと思います。)** |

|

|

|

### Example Generations |

|

|

|

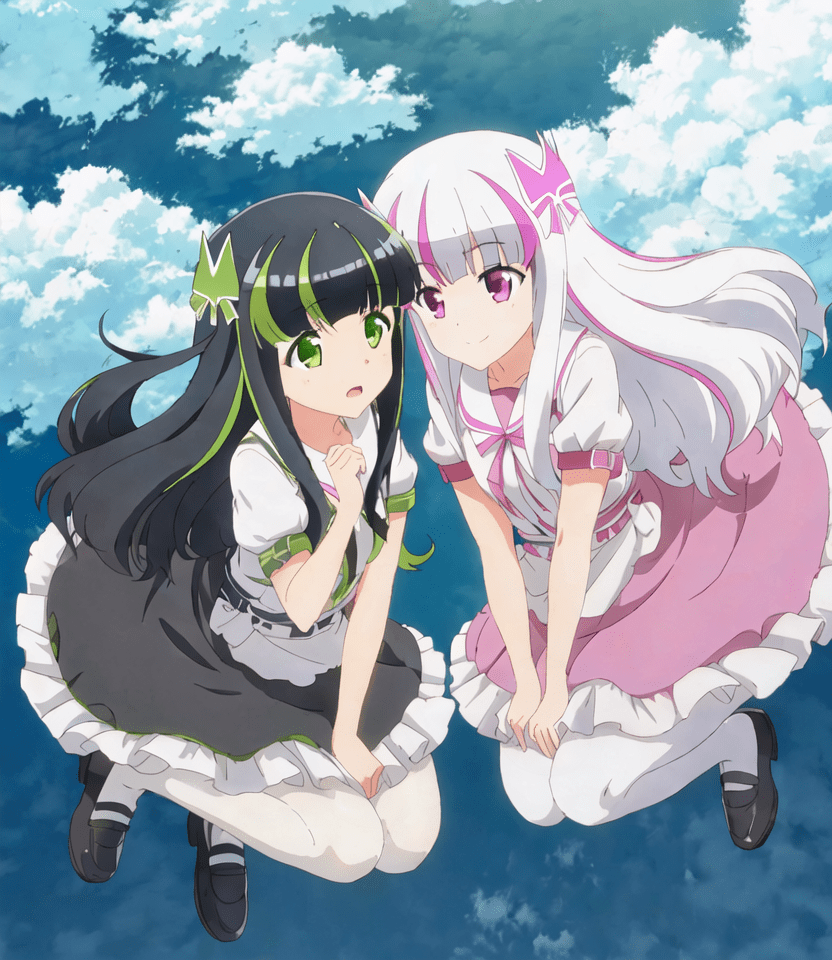

Prompt: `BoMaple uniform BoSally unfirom, yuri, in classroom, 4K wallpaper, beautiful eyes` |

|

|

|

|

|

Prompt: `2girls, BoMay BoYui, yuri, half body, floating in the sky, cloud, sparkling eyes, 4K wallpaer, anime coloring, official art` |

|

|

|

|

|

Prompt: `BoKanade casting magic, 4K wallpaper, outdoors` |

|

|

|

|

|

(Negative is mostly variations of: `bad hands, lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry`) |

|

|

|

### Usage |

|

|

|

The model is shared in both diffuser and safetensors format. Intermediate checkpoints are also shared in ckpt format in the directory `checkpoints`. |

|

|

|

### Concepts |

|

|

|

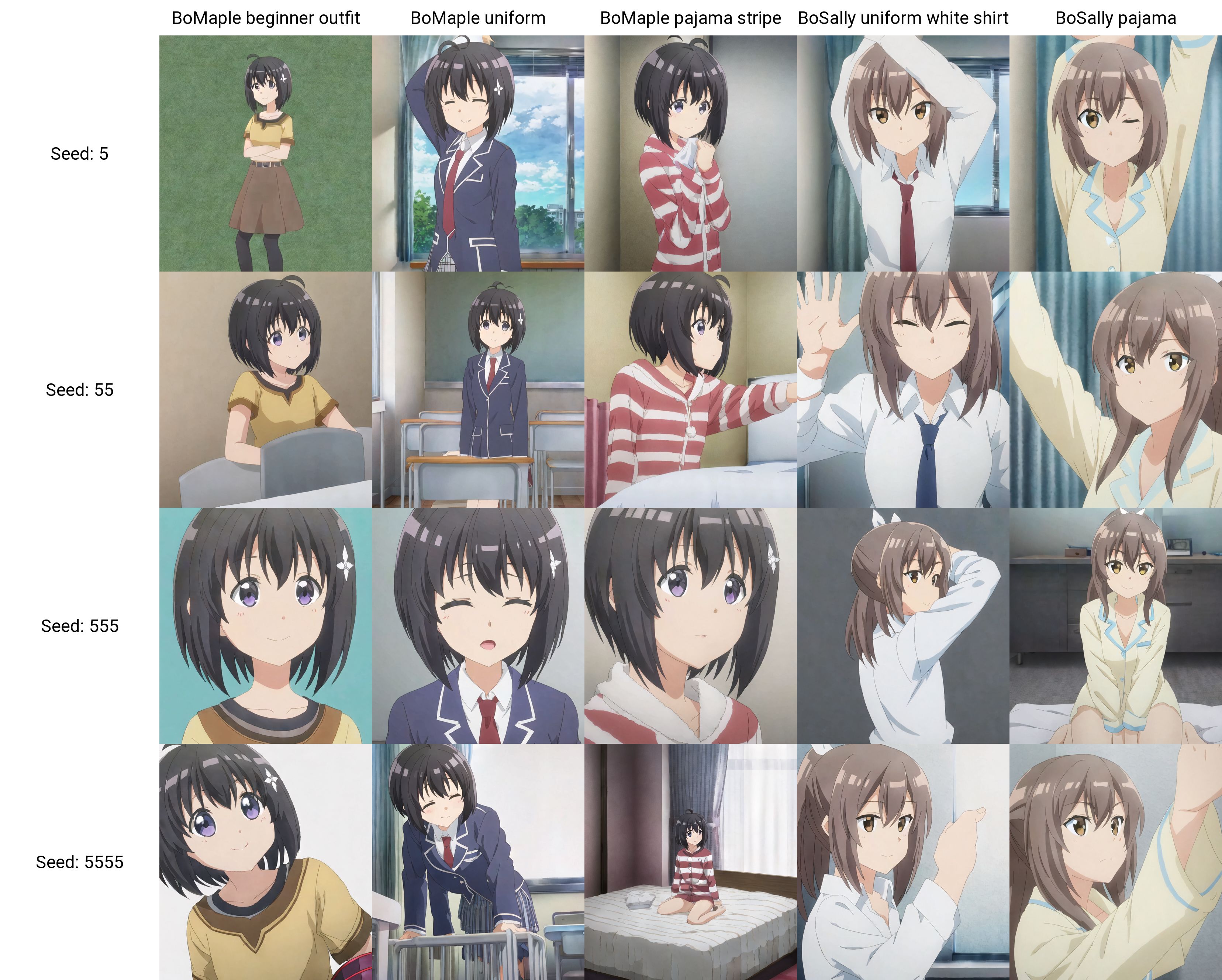

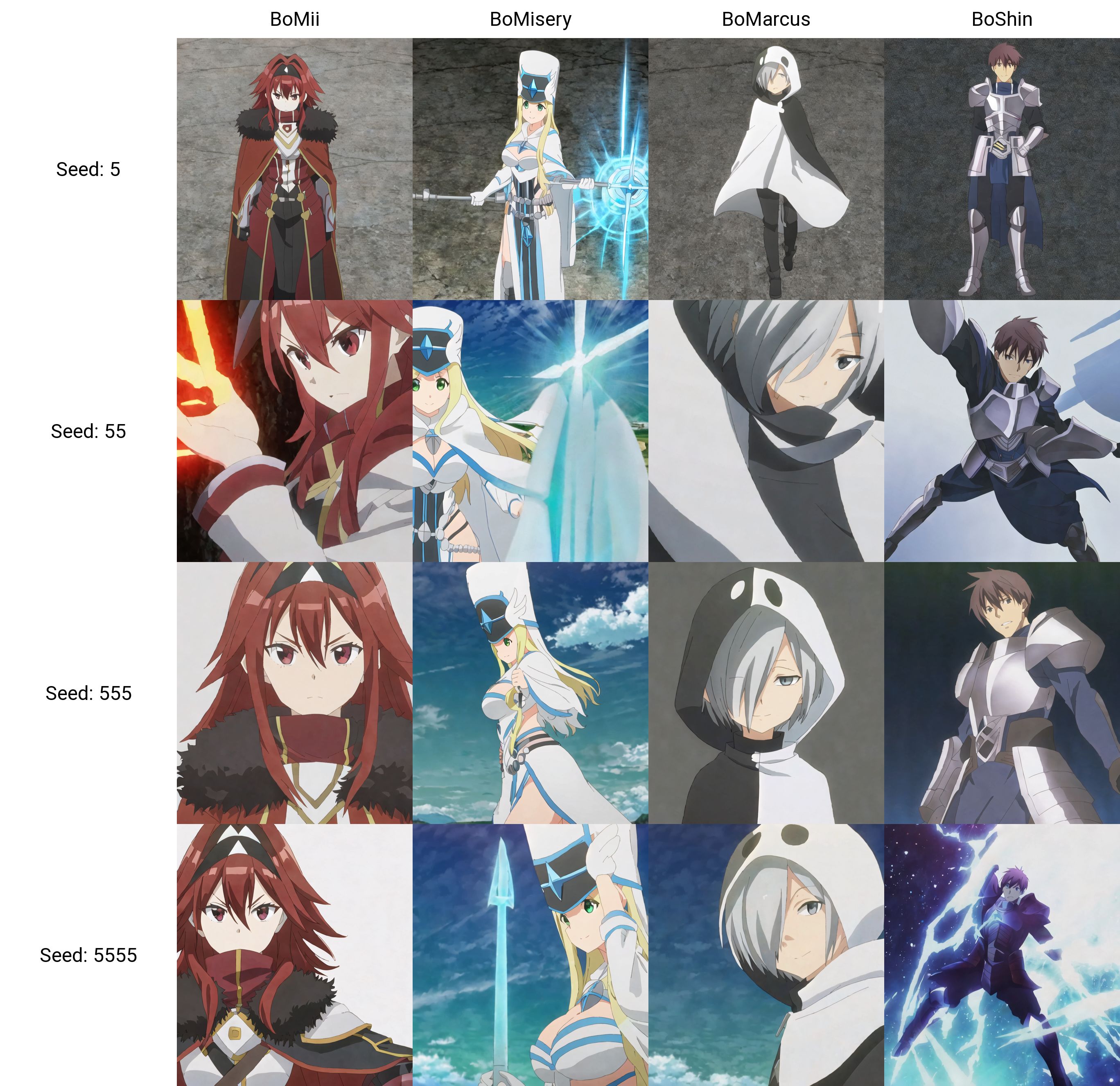

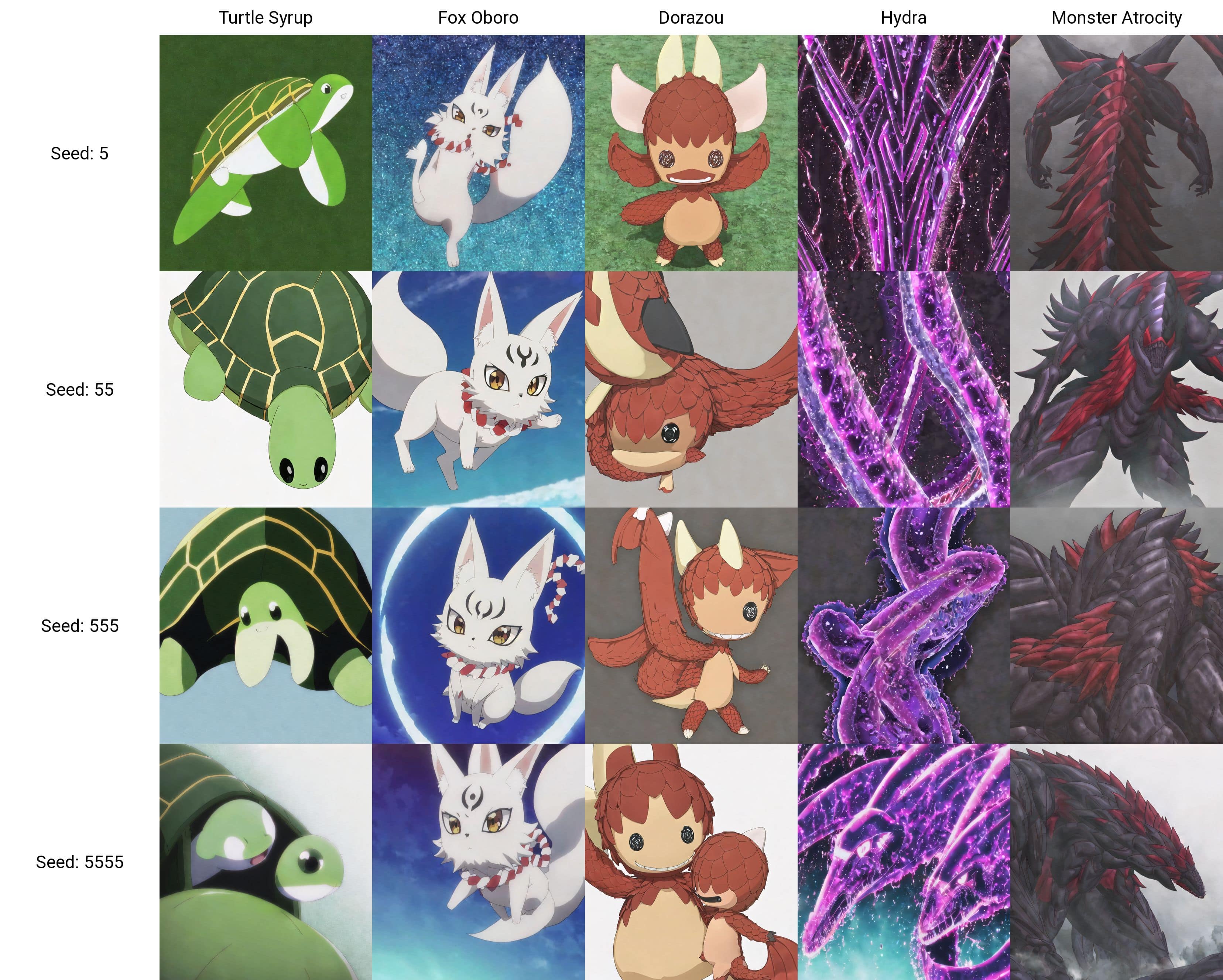

The 33 concepts are listed in `concept_list` and demonstrated below. |

|

|

|

BoMaple + |

|

|

|

BoSally + |

|

|

|

The following use the full name of the concepts |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Expect bad results for `BoMaple sheep form` and non-human concepts. Espeically the model clearly does not understand the anatomy and the size of syrup (well it is true it's size is not fixed). |

|

For `BoKasumi sarashi` adding `bandages` seems to help. For `BoMaple pajama` you can add `stripe` for more similarity to the pajama appearing in anime. |

|

The remaining concepts should go through smoothly. |

|

|

|

#### Prompt format |

|

During training the concept names are put at the beginning of the images separated only by spaces, but not doing so seems to work as well. |

|

Put `aniscreen` after the concept names would reinfoce the anime style. |

|

Having two concepts in a same image is fairly doable as demonstrated above. |

|

However, expect weird blending to happen most of the time starting from three concepts. |

|

This is partially because this model is not trained too much on multi-concept scenes. |

|

Below is roughly the best we can get after multiply tries (there is still clothes blending). |

|

|

|

Prompt: `(BoMaple black armor) BoSally turtleneck BoKasumi, 3girls, 4K wallpaper, ahoge, black hair, brown hair, outdoors, long hair` |

|

|

|

|

|

|

|

### More Generations |

|

|

|

Prompt: `BoMaple black armors aniscreen, 1girl solo, Hydra in the sky, light purple eyes, 4K wallpaper` |

|

|

|

|

|

Prompt: `BoMaple black armors near small turtle syrup, sitting with knees up on rock looking at viewer, turtle shell, beautiful hand in glove, in front of trees , outdoors, close-up, 4K wallpaper` |

|

|

|

|

|

Prompt: `BoMaple pajama stripe, sitting on bed with barefoot, in girl's room, detailed and fancy background, sparkling purple eyes, hand on bed, 4K wallpaper` |

|

|

|

|

|

Prompt: `BoFrederica, cowboy shot, in rubble ruins, ((under blue sky)), cinematic angle, dynamic pose, oblique angle, 4K wallpaer, anime coloring, official art` |

|

|

|

|

|

Prompt: `Turtle Syrup Fox Oboro next to each other simple background white background, animals` |

|

|

|

|

|

Failures are of course unavoidable |

|

|

|

|

|

|

|

Finally, you can always get different styles via model merging |

|

|

|

|

|

|

|

### Dataset Description |

|

|

|

The dataset is prepared via the workflow detailed here: https://github.com/cyber-meow/anime_screenshot_pipeline |

|

|

|

It contains 27031 images with the following composition |

|

|

|

- 7752 bofuri images mainly composed of screenshots from the first season and of the first three episods of the second season |

|

- 19279 regularization images which intend to be as various as possible while being in anime style (i.e. no photorealistic image is used) |

|

|

|

Note that the model is trained with a specific weighting scheme to balance between different concepts so that every image does not weight equally. |

|

After applying the per-image repeat we get around 200K images per epoch. |

|

|

|

|

|

### Training |

|

|

|

Training is done with [EveryDream2](https://github.com/victorchall/EveryDream2trainer) trainer with [ACertainty](https://huggingface.co/JosephusCheung/ACertainty) as base model. |

|

I use the following configuration thanks to the suggestion of 金Goldkoron |

|

|

|

- resolution 512 |

|

- cosine learning rate scheduler, lr 2.5e-6 |

|

- batch size 4 |

|

- conditional dropout 0.05 |

|

- change beta scheduler from `scaler_linear` to `linear` in `config.json` of the scheduler of the model |

|

|

|

The released model is trained for 57751 steps, but among the provided checkpoints all the three starting from 34172 steps seem to work reasonably well. |