|

|

--- |

|

|

license: agpl-3.0 |

|

|

language: |

|

|

- ja |

|

|

base_model: |

|

|

- google/gemma-2-2b-it |

|

|

--- |

|

|

|

|

|

|

|

|

Gemma2 2b Japanese for Embedding generation. |

|

|

|

|

|

Base model is Gemma2B JPN-IT published by Google in October 2024 to general public. |

|

|

|

|

|

Gemma2 2B JPN is the smallest Japanese LLM, |

|

|

so this is very useful for real world practical topics. |

|

|

|

|

|

(all other Japanese 7B LLM cannot be used easily at high volume |

|

|

for embedding purposes due high inference cost). |

|

|

|

|

|

|

|

|

This version has been lightly fine tuned on Japanese triplet dataset |

|

|

and with triplet loss and quantized into 4bit GGUF format. |

|

|

This is still Work In progress. |

|

|

|

|

|

|

|

|

Sample using llama-cpp |

|

|

|

|

|

|

|

|

``` |

|

|

class GemmaSentenceEmbeddingGGUF: |

|

|

def init(self, model_path="agguf/gemma-2-2b-jpn-it-embedding.gguf"): |

|

|

self.model = Llama(model_path=model_path, embedding=True) |

|

|

|

|

|

def encode(self, sentences: list[str], **kwargs) -> list[np.ndarray]: |

|

|

out = [] |

|

|

for sentence in sentences: |

|

|

embedding_result = self.model.create_embedding([sentence]) |

|

|

embedding = embedding_result['data'][0]['embedding'][-1] |

|

|

out.append(np.array(embedding)) |

|

|

|

|

|

return out |

|

|

|

|

|

|

|

|

se = GemmaSentenceEmbeddingGGUF() |

|

|

se.encode(['こんにちは、ケビンです。よろしくおねがいします'])[0] |

|

|

``` |

|

|

|

|

|

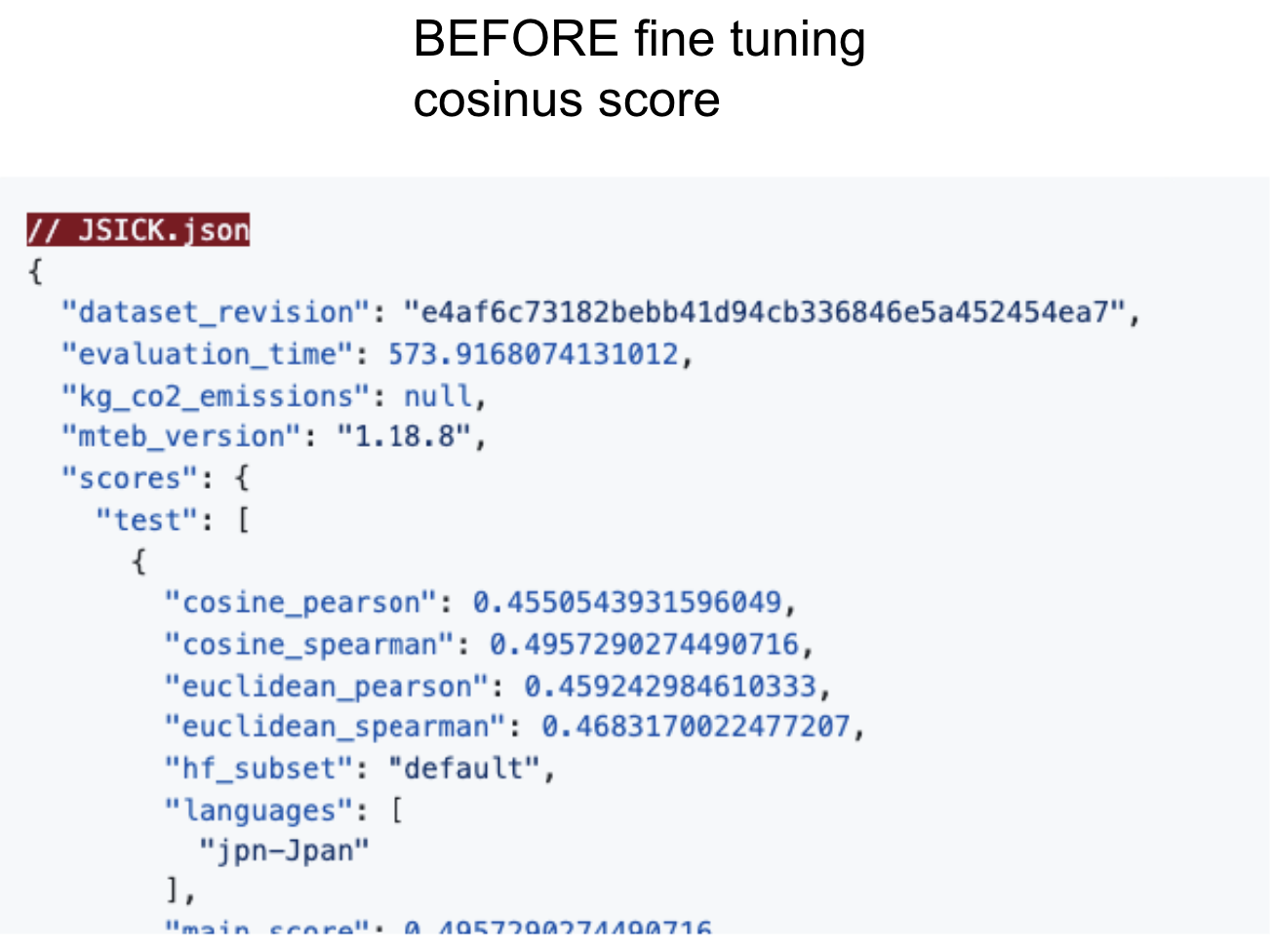

Sample bench (ie partial): |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Model/Full code is accessible for research discussion purpose. |

|

|

|

|

|

To access to this version |

|

|

please contact : kevin.noel at uzabase.com |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|