Liangrj5 committed 5019d3f

Parent(s): 876e08a

init

- .gitattributes +3 -2
- .gitignore +139 -0
- LICENSE +121 -0
- README.md +81 -3
- figures/taskComparisonV.png +0 -0
- infer.py +33 -0
- infer_top20.sh +17 -0
- modules/ReLoCLNet.py +362 -0
- modules/contrastive.py +167 -0
- modules/dataset_init.py +82 -0
- modules/dataset_tvrr.py +208 -0
- modules/infer_lib.py +101 -0
- modules/model_components.py +317 -0
- modules/ndcg_iou.py +64 -0
- modules/optimization.py +343 -0
- run_top20.sh +14 -0
- train.py +69 -0
- utils/__init__.py +0 -0
- utils/basic_utils.py +270 -0
- utils/run_utils.py +112 -0
- utils/setup.py +101 -0
- utils/temporal_nms.py +74 -0
- utils/tensor_utils.py +141 -0

.gitattributes

CHANGED

```diff
@@ -1,3 +1,6 @@
+*.json filter=lfs diff=lfs merge=lfs -text
+*.h5 filter=lfs diff=lfs merge=lfs -text
+*.csv filter=lfs diff=lfs merge=lfs -text
 *.7z filter=lfs diff=lfs merge=lfs -text
 *.arrow filter=lfs diff=lfs merge=lfs -text
 *.bin filter=lfs diff=lfs merge=lfs -text
@@ -33,5 +36,3 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
-*.json filter=lfs diff=lfs merge=lfs -text
-*.csv filter=lfs diff=lfs merge=lfs -text
```
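
After this change, `*.json`, `*.h5` and `*.csv` files go through Git LFS, so the dataset annotations and `.h5` feature files are stored as LFS objects. The sketch below checks which paths would be LFS-tracked. It assumes only the patterns visible in this diff (new lines 7 to 35 of the file are not shown) and approximates git's matching for slash-free patterns by comparing the basename with `fnmatch`. This is not git's own matcher.

```python
import fnmatch
import posixpath

# Only the LFS patterns visible in the diff above; the rest of .gitattributes is not shown.
LFS_PATTERNS = ["*.json", "*.h5", "*.csv", "*.7z", "*.arrow", "*.bin",
                "*.zip", "*.zst", "*tfevents*"]


def tracked_by_lfs(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate git's rule for patterns without a slash: match the file's basename."""
    name = posixpath.basename(path)
    return any(fnmatch.fnmatchcase(name, pat) for pat in patterns)


if __name__ == "__main__":
    for p in ["data/TVR_Ranking/val.json",
              "data/TVR_Ranking/features/query_bert.h5",
              "infer.py",
              "results/events.out.tfevents.1700000000"]:
        print(p, tracked_by_lfs(p))
```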

.gitignore

ADDED

```gitignore
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

unused

results
# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
pip-wheel-metadata/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
.python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# PEP 582; used by e.g. github.com/David-OConnor/pyflow
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# custom
.idea/
.vscode/
data/tvr_feature_release/
```

LICENSE

ADDED

```text
Creative Commons Legal Code

CC0 1.0 Universal

    CREATIVE COMMONS CORPORATION IS NOT A LAW FIRM AND DOES NOT PROVIDE
    LEGAL SERVICES. DISTRIBUTION OF THIS DOCUMENT DOES NOT CREATE AN
    ATTORNEY-CLIENT RELATIONSHIP. CREATIVE COMMONS PROVIDES THIS
    INFORMATION ON AN "AS-IS" BASIS. CREATIVE COMMONS MAKES NO WARRANTIES
    REGARDING THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS
    PROVIDED HEREUNDER, AND DISCLAIMS LIABILITY FOR DAMAGES RESULTING FROM
    THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS PROVIDED
    HEREUNDER.

Statement of Purpose

The laws of most jurisdictions throughout the world automatically confer
exclusive Copyright and Related Rights (defined below) upon the creator
and subsequent owner(s) (each and all, an "owner") of an original work of
authorship and/or a database (each, a "Work").

Certain owners wish to permanently relinquish those rights to a Work for
the purpose of contributing to a commons of creative, cultural and
scientific works ("Commons") that the public can reliably and without fear
of later claims of infringement build upon, modify, incorporate in other
works, reuse and redistribute as freely as possible in any form whatsoever
and for any purposes, including without limitation commercial purposes.
These owners may contribute to the Commons to promote the ideal of a free
culture and the further production of creative, cultural and scientific
works, or to gain reputation or greater distribution for their Work in
part through the use and efforts of others.

For these and/or other purposes and motivations, and without any
expectation of additional consideration or compensation, the person
associating CC0 with a Work (the "Affirmer"), to the extent that he or she
is an owner of Copyright and Related Rights in the Work, voluntarily
elects to apply CC0 to the Work and publicly distribute the Work under its
terms, with knowledge of his or her Copyright and Related Rights in the
Work and the meaning and intended legal effect of CC0 on those rights.

1. Copyright and Related Rights. A Work made available under CC0 may be
protected by copyright and related or neighboring rights ("Copyright and
Related Rights"). Copyright and Related Rights include, but are not
limited to, the following:

  i. the right to reproduce, adapt, distribute, perform, display,
     communicate, and translate a Work;
 ii. moral rights retained by the original author(s) and/or performer(s);
iii. publicity and privacy rights pertaining to a person's image or
     likeness depicted in a Work;
 iv. rights protecting against unfair competition in regards to a Work,
     subject to the limitations in paragraph 4(a), below;
  v. rights protecting the extraction, dissemination, use and reuse of data
     in a Work;
 vi. database rights (such as those arising under Directive 96/9/EC of the
     European Parliament and of the Council of 11 March 1996 on the legal
     protection of databases, and under any national implementation
     thereof, including any amended or successor version of such
     directive); and
vii. other similar, equivalent or corresponding rights throughout the
     world based on applicable law or treaty, and any national
     implementations thereof.

2. Waiver. To the greatest extent permitted by, but not in contravention
of, applicable law, Affirmer hereby overtly, fully, permanently,
irrevocably and unconditionally waives, abandons, and surrenders all of
Affirmer's Copyright and Related Rights and associated claims and causes
of action, whether now known or unknown (including existing as well as
future claims and causes of action), in the Work (i) in all territories
worldwide, (ii) for the maximum duration provided by applicable law or
treaty (including future time extensions), (iii) in any current or future
medium and for any number of copies, and (iv) for any purpose whatsoever,
including without limitation commercial, advertising or promotional
purposes (the "Waiver"). Affirmer makes the Waiver for the benefit of each
member of the public at large and to the detriment of Affirmer's heirs and
successors, fully intending that such Waiver shall not be subject to
revocation, rescission, cancellation, termination, or any other legal or
equitable action to disrupt the quiet enjoyment of the Work by the public
as contemplated by Affirmer's express Statement of Purpose.

3. Public License Fallback. Should any part of the Waiver for any reason
be judged legally invalid or ineffective under applicable law, then the
Waiver shall be preserved to the maximum extent permitted taking into
account Affirmer's express Statement of Purpose. In addition, to the
extent the Waiver is so judged Affirmer hereby grants to each affected
person a royalty-free, non transferable, non sublicensable, non exclusive,
irrevocable and unconditional license to exercise Affirmer's Copyright and
Related Rights in the Work (i) in all territories worldwide, (ii) for the
maximum duration provided by applicable law or treaty (including future
time extensions), (iii) in any current or future medium and for any number
of copies, and (iv) for any purpose whatsoever, including without
limitation commercial, advertising or promotional purposes (the
"License"). The License shall be deemed effective as of the date CC0 was
applied by Affirmer to the Work. Should any part of the License for any
reason be judged legally invalid or ineffective under applicable law, such
partial invalidity or ineffectiveness shall not invalidate the remainder
of the License, and in such case Affirmer hereby affirms that he or she
will not (i) exercise any of his or her remaining Copyright and Related
Rights in the Work or (ii) assert any associated claims and causes of
action with respect to the Work, in either case contrary to Affirmer's
express Statement of Purpose.

4. Limitations and Disclaimers.

 a. No trademark or patent rights held by Affirmer are waived, abandoned,
    surrendered, licensed or otherwise affected by this document.
 b. Affirmer offers the Work as-is and makes no representations or
    warranties of any kind concerning the Work, express, implied,
    statutory or otherwise, including without limitation warranties of
    title, merchantability, fitness for a particular purpose, non
    infringement, or the absence of latent or other defects, accuracy, or
    the present or absence of errors, whether or not discoverable, all to
    the greatest extent permissible under applicable law.
 c. Affirmer disclaims responsibility for clearing rights of other persons
    that may apply to the Work or any use thereof, including without
    limitation any person's Copyright and Related Rights in the Work.
    Further, Affirmer disclaims responsibility for obtaining any necessary
    consents, permissions or other rights required for any use of the
    Work.
 d. Affirmer understands and acknowledges that Creative Commons is not a
    party to this document and has no duty or obligation with respect to
    this CC0 or use of the Work.
```

README.md

CHANGED

@@ -1,3 +1,81 @@

| 1 |

+

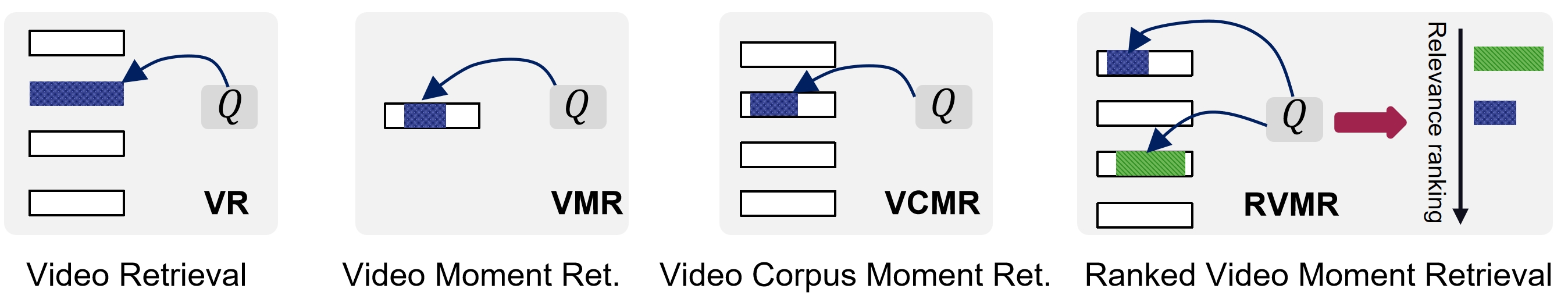

# Video Moment Retrieval in Practical Setting: A Dataset of Ranked Moments for Imprecise Queries

|

| 2 |

+

|

| 3 |

+

The benchmark and dataset for the paper "Video Moment Retrieval in Practical Settings: A Dataset of Ranked Moments for Imprecise Queries" is coming soon.

|

| 4 |

+

|

| 5 |

+

We recommend cloning the code, data, and feature files from the Hugging Face repository at [TVR-Ranking](https://huggingface.co/axgroup/TVR-Ranking).

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

## Getting started

### 1. Install the prerequisites

The Python packages we used are listed below. In general, the most recent versions should work.

```shell
conda create --name tvr_ranking python=3.11
conda activate tvr_ranking
pip install torch  # 2.2.1+cu121
pip install tensorboard
pip install h5py pandas tqdm easydict pyyaml
```
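
To confirm that the packages above are present in the active environment, a small check like the following may help. It reads installed distribution metadata with the standard library only, so it runs even when a package is missing. The package list is copied from the commands above; the helper name `installed_versions` is hypothetical.

```python
from importlib import metadata

# Distribution names installed by the commands above.
PACKAGES = ["torch", "tensorboard", "h5py", "pandas", "tqdm", "easydict", "pyyaml"]


def installed_versions(packages=PACKAGES):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:12s} {version or 'MISSING'}")
```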

### 2. Download the full dataset

Please download the full dataset from Hugging Face [TVR-Ranking](https://huggingface.co/axgroup/TVR-Ranking). \
A detailed introduction and the raw annotations are available in the [Dataset Introduction](data/TVR_Ranking/readme.md).

```
TVR_Ranking/
  -val.json
  -test.json
  -train_top01.json
  -train_top20.json
  -train_top40.json
  -video_corpus.json
```
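
Before training, it can be worth verifying this layout. With `*.json` tracked by Git LFS, a clone made without `git lfs` contains small pointer files in place of the annotations. The sketch below assumes the files sit in `data/TVR_Ranking`, the directory used in `infer_top20.sh`. The helper names are hypothetical. The pointer check relies on the Git LFS pointer format, whose first line starts with `version https://git-lfs.github.com/spec/`.

```python
from pathlib import Path
from typing import List

# File names from the TVR_Ranking/ listing above.
EXPECTED_FILES = ["val.json", "test.json", "train_top01.json",
                  "train_top20.json", "train_top40.json", "video_corpus.json"]
# Un-fetched Git LFS pointer files start with this line instead of the real content.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/"


def missing_files(root: str, expected: List[str] = EXPECTED_FILES) -> List[str]:
    """Return the expected annotation files that are absent under root."""
    return [name for name in expected if not (Path(root) / name).is_file()]


def looks_like_lfs_pointer(path: str) -> bool:
    """True if the file is a Git LFS pointer rather than the data itself."""
    with open(path, "rb") as fh:
        return fh.read(len(LFS_POINTER_PREFIX)) == LFS_POINTER_PREFIX


if __name__ == "__main__":
    root = "data/TVR_Ranking"  # directory used in infer_top20.sh
    missing = missing_files(root)
    pointers = [n for n in EXPECTED_FILES
                if n not in missing and looks_like_lfs_pointer(str(Path(root) / n))]
    print("missing:", missing or "none")
    print("LFS pointers (run `git lfs pull`):", pointers or "none")
```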

### 3. Download features

The query BERT features can be downloaded from Hugging Face [TVR-Ranking](https://huggingface.co/axgroup/TVR-Ranking). \
For the video and subtitle features, please request them from [TVR](https://tvr.cs.unc.edu/).

```shell
mkdir -p data/TVR_Ranking/feature
tar -xf tvr_feature_release.tar.gz -C data/TVR_Ranking/feature
```

### 4. Training

```shell
# edit the data paths in run_top20.sh first
sh run_top20.sh
```

## Baseline

(ToDo: running the new version...) \
The baseline $NDCG@20$ results are shown below. For each query, the pseudo training set consists of the top $N$ moments ranked by query-caption similarity.

| Model | $N$ | IoU = 0.3, val | IoU = 0.3, test | IoU = 0.5, val | IoU = 0.5, test | IoU = 0.7, val | IoU = 0.7, test |
|----------------|-----|----------------|-----------------|----------------|-----------------|----------------|-----------------|
| **XML** | 1 | 0.1050 | 0.1047 | 0.0767 | 0.0751 | 0.0287 | 0.0314 |
| | 20 | 0.1948 | 0.1964 | 0.1417 | 0.1434 | 0.0519 | 0.0583 |
| | 40 | 0.2101 | 0.2110 | 0.1525 | 0.1533 | 0.0613 | 0.0617 |
| **CONQUER** | 1 | 0.0979 | 0.0830 | 0.0817 | 0.0686 | 0.0547 | 0.0479 |
| | 20 | 0.2007 | 0.1935 | 0.1844 | 0.1803 | 0.1391 | 0.1341 |
| | 40 | 0.2094 | 0.1943 | 0.1930 | 0.1825 | 0.1481 | 0.1334 |
| **ReLoCLNet** | 1 | 0.1306 | 0.1299 | 0.1169 | 0.1154 | 0.0738 | 0.0789 |
| | 20 | 0.3264 | 0.3214 | 0.3007 | 0.2956 | 0.2074 | 0.2084 |
| | 40 | 0.3479 | 0.3473 | 0.3221 | 0.3217 | 0.2218 | 0.2275 |
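
The table reports NDCG@20 at several IoU thresholds. The exact rule lives in `modules/ndcg_iou.py`, which is not shown in this diff. The sketch below is one common formulation and rests on three assumptions. First, a predicted moment counts only if it lies in the same video as a ground-truth moment and overlaps it with IoU at or above the threshold. Second, it earns that moment's graded relevance, and each ground-truth moment can be matched only once. Third, the ideal DCG uses the top-k ground-truth relevances.

```python
import math
from typing import List, Tuple

# A moment is (video_id, start_sec, end_sec); ground truth pairs a moment with a graded relevance.
Moment = Tuple[str, float, float]


def temporal_iou(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """IoU of two [start, end] intervals on the time axis."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = max(a[1], b[1]) - min(a[0], b[0])
    return inter / union if union > 0 else 0.0


def dcg(gains: List[float]) -> float:
    """Discounted cumulative gain with a log2(rank + 1) discount, ranks starting at 1."""
    return sum(g / math.log2(rank + 1) for rank, g in enumerate(gains, start=1))


def ndcg_iou_at_k(preds: List[Moment], gts: List[Tuple[Moment, float]],
                  k: int = 20, iou_thr: float = 0.5) -> float:
    """NDCG@k where a prediction earns the relevance of the most relevant,
    not yet matched ground-truth moment in the same video with IoU >= iou_thr."""
    matched = set()
    gains = []
    for vid, st, ed in preds[:k]:
        best = None
        for j, ((g_vid, g_st, g_ed), rel) in enumerate(gts):
            if j in matched or g_vid != vid:
                continue
            if temporal_iou((st, ed), (g_st, g_ed)) >= iou_thr and (best is None or rel > gts[best][1]):
                best = j
        if best is None:
            gains.append(0.0)
        else:
            matched.add(best)
            gains.append(gts[best][1])
    ideal_dcg = dcg(sorted((rel for _, rel in gts), reverse=True)[:k])
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


if __name__ == "__main__":
    gts = [(("v1", 10.0, 20.0), 3.0), (("v2", 0.0, 5.0), 1.0)]
    preds = [("v1", 11.0, 20.0), ("v3", 0.0, 5.0), ("v2", 0.0, 4.0)]
    print(ndcg_iou_at_k(preds, gts, k=20, iou_thr=0.5))  # ≈ 0.964
```

In this toy case, the miss at rank 2 (a moment from an unrelated video) costs about 4% relative to the ideal ranking. Raising `iou_thr` above 0.9 drops both hits and gives 0, which matches the downward trend across IoU columns in the table.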

### 5. Inference

[ToDo] All checkpoints can be accessed from Hugging Face [TVR-Ranking](https://huggingface.co/axgroup/TVR-Ranking).

## Citation

If you find this project helpful for your research, please cite our work.

```

```

figures/taskComparisonV.png

ADDED

infer.py

ADDED

```python
import os, json
import torch
from tqdm import tqdm

from modules.dataset_init import prepare_dataset
from modules.infer_lib import grab_corpus_feature, eval_epoch

from utils.basic_utils import AverageMeter, get_logger
from utils.setup import set_seed, get_args
from utils.run_utils import prepare_optimizer, prepare_model, logger_ndcg_iou

def main():
    opt = get_args()
    logger = get_logger(opt.results_path, opt.exp_id)
    set_seed(opt.seed)
    logger.info("Arguments:\n%s", json.dumps(vars(opt), indent=4))
    opt.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    logger.info(f"device: {opt.device}")

    train_loader, corpus_loader, corpus_video_list, val_loader, test_loader, val_gt, test_gt = prepare_dataset(opt)

    model = prepare_model(opt, logger)
    # optimizer = prepare_optimizer(model, opt, len(train_loader) * opt.n_epoch)

    corpus_feature = grab_corpus_feature(model, corpus_loader, opt.device)
    val_ndcg_iou = eval_epoch(model, corpus_feature, val_loader, val_gt, opt, corpus_video_list)
    test_ndcg_iou = eval_epoch(model, corpus_feature, test_loader, test_gt, opt, corpus_video_list)

    logger_ndcg_iou(val_ndcg_iou, logger, "VAL")
    logger_ndcg_iou(test_ndcg_iou, logger, "TEST")

if __name__ == '__main__':
    main()
```
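
`infer.py` calls `grab_corpus_feature` once and then reuses `corpus_feature` for both the validation and the test split. The sketch below shows that encode-once, score-many pattern, under loudly stated assumptions. It uses video-level dot-product scoring, toy 2-d embeddings, and an identity stand-in for the encoder, and all helper names are hypothetical. The real `eval_epoch` in `modules/infer_lib.py` is not shown here. It must also produce moment boundaries, since its output is scored with NDCG at IoU thresholds.

```python
import heapq
from typing import Callable, Dict, List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def encode_corpus(videos: Dict[str, Sequence[float]],
                  encoder: Callable[[Sequence[float]], Sequence[float]]) -> Dict[str, List[float]]:
    """Run the (hypothetical) video encoder once per video and cache the embeddings."""
    return {vid: list(encoder(feat)) for vid, feat in videos.items()}


def top_k_videos(query_emb: Sequence[float], corpus_emb: Dict[str, List[float]],
                 k: int = 20) -> List[Tuple[str, float]]:
    """Score one query against every cached video embedding and keep the k best."""
    scores = ((vid, dot(query_emb, emb)) for vid, emb in corpus_emb.items())
    return heapq.nlargest(k, scores, key=lambda item: item[1])


if __name__ == "__main__":
    corpus = encode_corpus({"v1": [1.0, 0.0], "v2": [0.0, 1.0], "v3": [0.7, 0.7]},
                           encoder=lambda feat: feat)  # identity encoder for the demo
    # The same cached corpus serves both splits, as in infer.py.
    for split, query in [("val", [1.0, 0.0]), ("test", [0.0, 1.0])]:
        print(split, top_k_videos(query, corpus, k=2))
```

Caching the corpus means the expensive video and subtitle encoders run once per video rather than once per query and split. Only the comparatively cheap query encoding and scoring are repeated.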

infer_top20.sh

ADDED

```shell
python infer.py \
    --results_path results/tvr_ranking \
    --checkpoint results/tvr_ranking/best_model.pt \
    --train_path data/TVR_Ranking/train_top20.json \
    --val_path data/TVR_Ranking/val.json \
    --test_path data/TVR_Ranking/test.json \
    --corpus_path data/TVR_Ranking/video_corpus.json \
    --desc_bert_path /home/renjie.liang/datasets/TVR_Ranking/features/query_bert.h5 \
    --video_feat_path /home/share/czzhang/Dataset/TVR/TVR_feature/video_feature/tvr_i3d_rgb600_avg_cl-1.5.h5 \
    --sub_bert_path /home/share/czzhang/Dataset/TVR/TVR_feature/bert_feature/sub_query/tvr_sub_pretrained_w_sub_query_max_cl-1.5.h5 \
    --exp_id infer

# qsub -I -l select=1:ngpus=1 -P gs_slab -q slab_gpu8
# cd /home/renjie.liang/11_TVR-Ranking/ReLoCLNet; conda activate py11; sh infer_top20.sh
# --hard_negative_start_epoch 0 \
# --no_norm_vfeat \
# --use_hard_negative
```
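
Several paths in `infer_top20.sh` are absolute paths on the authors' machines, so they must be edited before running. The sketch below parses the same flags and reports input paths that do not exist. It is a hypothetical stand-in for `get_args()` in `utils/setup.py`, which is not shown in this diff and defines more options (for example `seed`). Only the flag names come from the script; the types, defaults and the `required=True` choice are assumptions.

```python
import argparse
import os

# Path flags passed by infer_top20.sh; everything except the flag names is an assumption.
PATH_FLAGS = ["results_path", "checkpoint", "train_path", "val_path", "test_path",
              "corpus_path", "desc_bert_path", "video_feat_path", "sub_bert_path"]


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical stand-in for get_args() in utils/setup.py."""
    parser = argparse.ArgumentParser(description="TVR-Ranking inference (sketch)")
    for flag in PATH_FLAGS:
        parser.add_argument(f"--{flag}", type=str, required=True)
    parser.add_argument("--exp_id", type=str, default="infer")
    # Options that appear only as comments at the end of infer_top20.sh.
    parser.add_argument("--hard_negative_start_epoch", type=int, default=0)
    parser.add_argument("--no_norm_vfeat", action="store_true")
    parser.add_argument("--use_hard_negative", action="store_true")
    return parser


def missing_inputs(args: argparse.Namespace) -> list:
    """List input flags whose path does not exist; results_path is an output directory."""
    return [flag for flag in PATH_FLAGS
            if flag != "results_path" and not os.path.exists(getattr(args, flag))]
```

For example, `missing_inputs(build_parser().parse_args(sys.argv[1:]))` called with the script's arguments returns every flag whose path still needs to be changed.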

modules/ReLoCLNet.py

ADDED

|

@@ -0,0 +1,362 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import copy

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn as nn

|

| 4 |

+

import torch.nn.functional as F

|

| 5 |

+

from easydict import EasyDict as edict

|

| 6 |

+

from modules.model_components import BertAttention, LinearLayer, BertSelfAttention, TrainablePositionalEncoding

|

| 7 |

+

from modules.model_components import MILNCELoss

|

| 8 |

+

from modules.contrastive import batch_video_query_loss

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

class ReLoCLNet(nn.Module):

|

| 12 |

+

def __init__(self, config):

|

| 13 |

+

super(ReLoCLNet, self).__init__()

|

| 14 |

+

self.config = config

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

self.query_pos_embed = TrainablePositionalEncoding(max_position_embeddings=config.max_desc_l,

|

| 18 |

+

hidden_size=config.hidden_size, dropout=config.input_drop)

|

| 19 |

+

self.ctx_pos_embed = TrainablePositionalEncoding(max_position_embeddings=config.max_ctx_l,

|

| 20 |

+

hidden_size=config.hidden_size, dropout=config.input_drop)

|

| 21 |

+

|

| 22 |

+

self.query_input_proj = LinearLayer(config.query_input_size, config.hidden_size, layer_norm=True,

|

| 23 |

+

dropout=config.input_drop, relu=True)

|

| 24 |

+

|

| 25 |

+

self.query_encoder = BertAttention(edict(hidden_size=config.hidden_size, intermediate_size=config.hidden_size,

|

| 26 |

+

hidden_dropout_prob=config.drop, num_attention_heads=config.n_heads,

|

| 27 |

+

attention_probs_dropout_prob=config.drop))

|

| 28 |

+

self.query_encoder1 = copy.deepcopy(self.query_encoder)

|

| 29 |

+

|

        cross_att_cfg = edict(hidden_size=config.hidden_size, num_attention_heads=config.n_heads,
                              attention_probs_dropout_prob=config.drop)
        # use_video
        self.video_input_proj = LinearLayer(config.visual_input_size, config.hidden_size, layer_norm=True,
                                            dropout=config.input_drop, relu=True)
        self.video_encoder1 = copy.deepcopy(self.query_encoder)
        self.video_encoder2 = copy.deepcopy(self.query_encoder)
        self.video_encoder3 = copy.deepcopy(self.query_encoder)
        self.video_cross_att = BertSelfAttention(cross_att_cfg)
        self.video_cross_layernorm = nn.LayerNorm(config.hidden_size)
        self.video_query_linear = nn.Linear(config.hidden_size, config.hidden_size)

        # use_sub
        self.sub_input_proj = LinearLayer(config.sub_input_size, config.hidden_size, layer_norm=True,
                                          dropout=config.input_drop, relu=True)
        self.sub_encoder1 = copy.deepcopy(self.query_encoder)
        self.sub_encoder2 = copy.deepcopy(self.query_encoder)
        self.sub_encoder3 = copy.deepcopy(self.query_encoder)
        self.sub_cross_att = BertSelfAttention(cross_att_cfg)
        self.sub_cross_layernorm = nn.LayerNorm(config.hidden_size)
        self.sub_query_linear = nn.Linear(config.hidden_size, config.hidden_size)

        # scores every query token for the two modular queries (video, subtitle)
        self.modular_vector_mapping = nn.Linear(in_features=config.hidden_size, out_features=2, bias=False)

        # 1-D convolutions over the merged query-clip similarity curve give start/end logits;
        # padding = kernel_size // 2 keeps the temporal length unchanged for odd kernel sizes with stride 1
        conv_cfg = dict(in_channels=1, out_channels=1, kernel_size=config.conv_kernel_size,
                        stride=config.conv_stride, padding=config.conv_kernel_size // 2, bias=False)
        self.merged_st_predictor = nn.Conv1d(**conv_cfg)
        self.merged_ed_predictor = nn.Conv1d(**conv_cfg)

        # reduction="none" keeps per-sample losses so forward() can weight them by `simi`
        # self.temporal_criterion = nn.CrossEntropyLoss(reduction="mean")
        self.temporal_criterion = nn.CrossEntropyLoss(reduction="none")
        self.nce_criterion = MILNCELoss(reduction=False)
        # self.nce_criterion = MILNCELoss(reduction='mean')

        self.reset_parameters()
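The start/end predictors rely on `padding = kernel_size // 2` to keep exactly one logit per clip. That only holds for odd kernel sizes with stride 1, and in cross mode `get_merged_st_ed_prob` reshapes the output with `.view(n_q, n_c, length)`, which fails if the length changes. The sketch below checks this with the output-length formula from the PyTorch `nn.Conv1d` documentation. Dilation is left at its default of 1, as in `conv_cfg`. The helper name `conv1d_out_len` is illustrative.

```python
def conv1d_out_len(length, kernel_size, stride=1, dilation=1):
    """Output length of nn.Conv1d when padding = kernel_size // 2, as in conv_cfg."""
    padding = kernel_size // 2
    return (length + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(conv1d_out_len(100, 3))            # 100: odd kernel, one logit per clip
print(conv1d_out_len(100, 4))            # 101: an even kernel adds one position
print(conv1d_out_len(100, 3, stride=2))  # 50: stride > 1 shrinks the sequence
```

In practice this means `config.conv_kernel_size` should be odd and `config.conv_stride` should be 1 for the predictors to line up with the clip mask.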

    def reset_parameters(self):
        """ Initialize the weights."""
        def re_init(module):
            if isinstance(module, (nn.Linear, nn.Embedding)):
                # Slightly different from the TF version which uses truncated_normal for initialization
                # cf https://github.com/pytorch/pytorch/pull/5617
                module.weight.data.normal_(mean=0.0, std=self.config.initializer_range)
            elif isinstance(module, nn.LayerNorm):
                module.bias.data.zero_()
                module.weight.data.fill_(1.0)
            elif isinstance(module, nn.Conv1d):
                module.reset_parameters()
            if isinstance(module, nn.Linear) and module.bias is not None:
                module.bias.data.zero_()

        self.apply(re_init)

    def set_hard_negative(self, use_hard_negative, hard_pool_size):
        """use_hard_negative: bool, sample negatives only from the top-ranked candidates;
        hard_pool_size: int, number of top-ranked negatives to sample from when hard mining is on."""
        self.config.use_hard_negative = use_hard_negative
        self.config.hard_pool_size = hard_pool_size


    def forward(self, query_feat, query_mask, video_feat, video_mask, sub_feat, sub_mask, st_ed_indices, match_labels, simi):
        """
        Args:
            query_feat: (N, Lq, Dq)
            query_mask: (N, Lq)
            video_feat: (N, Lv, Dv)
            video_mask: (N, Lv)
            sub_feat: (N, Lv, Ds)
            sub_mask: (N, Lv)
            st_ed_indices: (N, 2), torch.LongTensor, 1st, 2nd columns are st, ed labels respectively.
            match_labels: (N, Lv), torch.LongTensor, matching labels for detecting foreground and background,
                used by the frame-level contrastive loss
            simi: (N, ), per-sample weights applied to the FrameCL, VideoCL and moment localization losses
        """
        video_feat, sub_feat, mid_x_video_feat, mid_x_sub_feat, x_video_feat, x_sub_feat = self.encode_context(
            video_feat, video_mask, sub_feat, sub_mask, return_mid_output=True)
        video_query, sub_query, query_context_scores, st_prob, ed_prob = self.get_pred_from_raw_query(
            query_feat, query_mask, x_video_feat, video_mask, x_sub_feat, sub_mask, cross=False,
            return_query_feats=True)
        # frame level contrastive learning loss (FrameCL)
        loss_fcl = 0
        if self.config.lw_fcl != 0:
            loss_fcl_vq = batch_video_query_loss(mid_x_video_feat, video_query, match_labels, video_mask, measure='JSD')
            loss_fcl_sq = batch_video_query_loss(mid_x_sub_feat, sub_query, match_labels, sub_mask, measure='JSD')
            loss_fcl = (loss_fcl_vq + loss_fcl_sq) / 2.0
            loss_fcl = self.config.lw_fcl * loss_fcl
        # video level contrastive learning loss (VideoCL)
        loss_vcl = 0
        if self.config.lw_vcl != 0:
            mid_video_q2ctx_scores = self.get_unnormalized_video_level_scores(video_query, mid_x_video_feat, video_mask)
            mid_sub_q2ctx_scores = self.get_unnormalized_video_level_scores(sub_query, mid_x_sub_feat, sub_mask)
            mid_video_q2ctx_scores, _ = torch.max(mid_video_q2ctx_scores, dim=1)  # (N, N)
            mid_sub_q2ctx_scores, _ = torch.max(mid_sub_q2ctx_scores, dim=1)  # (N, N)
            # exclude the contrastive loss for the same query
            mid_q2ctx_scores = (mid_video_q2ctx_scores + mid_sub_q2ctx_scores) / 2.0  # * video_contrastive_mask
            loss_vcl = self.nce_criterion(mid_q2ctx_scores)
            loss_vcl = self.config.lw_vcl * loss_vcl
        # moment localization loss
        loss_st_ed = 0
        if self.config.lw_st_ed != 0:
            loss_st = self.temporal_criterion(st_prob, st_ed_indices[:, 0])
            loss_ed = self.temporal_criterion(ed_prob, st_ed_indices[:, 1])
            loss_st_ed = loss_st + loss_ed
            loss_st_ed = self.config.lw_st_ed * loss_st_ed
        # video level retrieval loss
        loss_neg_ctx, loss_neg_q = 0, 0
        if self.config.lw_neg_ctx != 0 or self.config.lw_neg_q != 0:
            loss_neg_ctx, loss_neg_q = self.get_video_level_loss(query_context_scores)
            loss_neg_ctx = self.config.lw_neg_ctx * loss_neg_ctx
            loss_neg_q = self.config.lw_neg_q * loss_neg_q
        # sum loss: per-sample terms are weighted by simi and averaged; the ranking losses are added unweighted
        # loss = loss_fcl + loss_vcl + loss_st_ed + loss_neg_ctx + loss_neg_q
        # simi = torch.exp(10*(simi-0.7))
        loss = ((loss_fcl + loss_vcl + loss_st_ed) * simi).mean() + loss_neg_ctx + loss_neg_q
        return loss
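The total loss weights only the per-sample terms by `simi`. The in-batch ranking losses are added afterwards without any weighting. The NumPy sketch below shows that combination for the localization term alone. FrameCL and VideoCL are left out because they depend on `batch_video_query_loss` and `MILNCELoss`, which are not shown here. `combine_losses` and `cross_entropy_none` are illustrative names, not functions from the repository.

```python
import numpy as np


def cross_entropy_none(logits, targets):
    """Per-sample cross entropy, mirroring nn.CrossEntropyLoss(reduction="none")."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets]


def combine_losses(st_logits, ed_logits, st_ed_indices, simi, loss_neg_ctx=0.0, loss_neg_q=0.0, lw_st_ed=1.0):
    loss_st_ed = lw_st_ed * (cross_entropy_none(st_logits, st_ed_indices[:, 0])
                             + cross_entropy_none(ed_logits, st_ed_indices[:, 1]))  # (N,)
    # Weight each sample by simi, average over the batch, then add the unweighted ranking losses.
    return (loss_st_ed * simi).mean() + loss_neg_ctx + loss_neg_q


logits = np.zeros((2, 4))  # uniform logits over 4 clips, so each CE term equals log(4)
st_ed = np.array([[0, 1], [2, 3]])
print(combine_losses(logits, logits, st_ed, simi=np.array([1.0, 0.0])))  # log(4), about 1.386
```

A sample with `simi = 0` contributes nothing to the localization loss, but its query still takes part in the batch-level ranking losses.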

    def encode_query(self, query_feat, query_mask):
        encoded_query = self.encode_input(query_feat, query_mask, self.query_input_proj, self.query_encoder,
                                          self.query_pos_embed)  # (N, Lq, D)
        encoded_query = self.query_encoder1(encoded_query, query_mask.unsqueeze(1))
        video_query, sub_query = self.get_modularized_queries(encoded_query, query_mask)  # (N, D) * 2
        return video_query, sub_query


    def encode_context(self, video_feat, video_mask, sub_feat, sub_mask, return_mid_output=False):
        # encoding video and subtitle features, respectively
        encoded_video_feat = self.encode_input(video_feat, video_mask, self.video_input_proj, self.video_encoder1,
                                               self.ctx_pos_embed)
        encoded_sub_feat = self.encode_input(sub_feat, sub_mask, self.sub_input_proj, self.sub_encoder1,
                                             self.ctx_pos_embed)
        # cross-encode video features: video positions attend to subtitle positions
        x_encoded_video_feat = self.cross_context_encoder(encoded_video_feat, video_mask, encoded_sub_feat, sub_mask,
                                                          self.video_cross_att, self.video_cross_layernorm)  # (N, L, D)
        x_encoded_video_feat_ = self.video_encoder2(x_encoded_video_feat, video_mask.unsqueeze(1))
        # cross-encode subtitle features: subtitle positions attend to video positions
        x_encoded_sub_feat = self.cross_context_encoder(encoded_sub_feat, sub_mask, encoded_video_feat, video_mask,
                                                        self.sub_cross_att, self.sub_cross_layernorm)  # (N, L, D)
        x_encoded_sub_feat_ = self.sub_encoder2(x_encoded_sub_feat, sub_mask.unsqueeze(1))
        # additional self-encoding of the cross-encoded features
        x_encoded_video_feat = self.video_encoder3(x_encoded_video_feat_, video_mask.unsqueeze(1))
        x_encoded_sub_feat = self.sub_encoder3(x_encoded_sub_feat_, sub_mask.unsqueeze(1))
        if return_mid_output:
            return (encoded_video_feat, encoded_sub_feat, x_encoded_video_feat_, x_encoded_sub_feat_,
                    x_encoded_video_feat, x_encoded_sub_feat)
        else:
            return x_encoded_video_feat, x_encoded_sub_feat


    @staticmethod
    def cross_context_encoder(main_context_feat, main_context_mask, side_context_feat, side_context_mask,
                              cross_att_layer, norm_layer):
        """
        Args:
            main_context_feat: (N, Lq, D)
            main_context_mask: (N, Lq)
            side_context_feat: (N, Lk, D)
            side_context_mask: (N, Lk)
            cross_att_layer: cross attention layer
            norm_layer: layer norm layer
        """
        cross_mask = torch.einsum("bm,bn->bmn", main_context_mask, side_context_mask)  # (N, Lq, Lk)
        cross_out = cross_att_layer(main_context_feat, side_context_feat, side_context_feat, cross_mask)  # (N, Lq, D)
        residual_out = norm_layer(cross_out + main_context_feat)
        return residual_out

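`cross_context_encoder` builds its attention mask as the outer product of the two padding masks. A (main, side) pair is therefore attended to only when both positions are valid. The cross-attention output then goes through a residual connection and layer norm. The NumPy sketch below re-expresses that flow under simplifying assumptions:

- A single head with no learned query, key or value projections stands in for `BertSelfAttention`.
- The layer norm has no learnable scale or shift.

`cross_attend` is a hypothetical name.

```python
import numpy as np


def cross_attend(main_feat, main_mask, side_feat, side_mask, eps=1e-5):
    """main_feat: (N, Lq, D), side_feat: (N, Lk, D); masks are 1 for valid and 0 for padding."""
    cross_mask = np.einsum("bm,bn->bmn", main_mask, side_mask)  # (N, Lq, Lk), same outer product
    scores = np.einsum("bmd,bnd->bmn", main_feat, side_feat) / np.sqrt(main_feat.shape[-1])
    scores = np.where(cross_mask > 0, scores, -1e10)  # masked pairs drop to -1e10 before the softmax
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights = weights / weights.sum(axis=-1, keepdims=True)
    cross_out = np.einsum("bmn,bnd->bmd", weights, side_feat)  # (N, Lq, D)
    x = cross_out + main_feat  # residual connection
    return (x - x.mean(axis=-1, keepdims=True)) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)  # layer norm


rng = np.random.default_rng(0)
main, side = rng.normal(size=(1, 3, 4)), rng.normal(size=(1, 2, 4))
main_mask, side_mask = np.array([[1.0, 1.0, 0.0]]), np.array([[1.0, 0.0]])
out = cross_attend(main, main_mask, side, side_mask)
print(out.shape)  # (1, 3, 4)
```

For valid main positions, a padded side position receives exactly zero attention weight. Changing its features therefore leaves those rows unchanged.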

    @staticmethod
    def encode_input(feat, mask, input_proj_layer, encoder_layer, pos_embed_layer):
        """
        Args:
            feat: (N, L, D_input), torch.float32
            mask: (N, L), torch.float32, 1 indicates a valid position, 0 a padded (masked) position
            input_proj_layer: projects the input down to the hidden size
            encoder_layer: encoder layer
            pos_embed_layer: positional embedding layer
        """
        feat = input_proj_layer(feat)
        feat = pos_embed_layer(feat)
        mask = mask.unsqueeze(1)  # (N, 1, L), torch.FloatTensor
        return encoder_layer(feat, mask)  # (N, L, D_hidden)


    def get_modularized_queries(self, encoded_query, query_mask, return_modular_att=False):
        """
        Args:
            encoded_query: (N, L, D)
            query_mask: (N, L)
            return_modular_att: bool, if True also return the token attention weights
        Returns:
            video_query: (N, D), sub_query: (N, D), and optionally modular_attention_scores: (N, L, 2)
        """
        modular_attention_scores = self.modular_vector_mapping(encoded_query)  # (N, L, 2)
        # softmax over the tokens (dim=1), with padded tokens masked out
        modular_attention_scores = F.softmax(mask_logits(modular_attention_scores, query_mask.unsqueeze(2)), dim=1)
        modular_queries = torch.einsum("blm,bld->bmd", modular_attention_scores, encoded_query)  # (N, 2, D)
        assert modular_queries.shape[1] == 2
        if return_modular_att:
            return modular_queries[:, 0], modular_queries[:, 1], modular_attention_scores
        return modular_queries[:, 0], modular_queries[:, 1]  # (N, D) * 2

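`get_modularized_queries` pools the query tokens twice, once for the video query and once for the subtitle query. Each pool uses its own softmax over the token axis, so every column of the attention sums to 1 over the valid tokens. The NumPy sketch below assumes that the repository's `mask_logits` keeps valid logits and pushes padded ones to a large negative value. The `mask_logits` defined here is only a hypothetical stand-in for that helper. A plain `(D, 2)` weight matrix replaces the bias-free `modular_vector_mapping`.

```python
import numpy as np


def mask_logits(target, mask):
    # Hypothetical stand-in for the repo's helper: keep valid logits, push padded ones to -1e10.
    return target * mask + (1 - mask) * -1e10


def modular_queries(encoded_query, query_mask, weight):
    """encoded_query: (N, L, D); query_mask: (N, L); weight: (D, 2), a bias-free stand-in
    for modular_vector_mapping. Returns video query (N, D), subtitle query (N, D), attention (N, L, 2)."""
    logits = mask_logits(encoded_query @ weight, query_mask[:, :, None])  # (N, L, 2)
    logits = logits - logits.max(axis=1, keepdims=True)  # stabilise the softmax over tokens
    att = np.exp(logits)
    att = att / att.sum(axis=1, keepdims=True)  # softmax over the token axis (dim=1)
    pooled = np.einsum("blm,bld->bmd", att, encoded_query)  # (N, 2, D)
    return pooled[:, 0], pooled[:, 1], att


rng = np.random.default_rng(0)
tokens = rng.normal(size=(1, 3, 4))
mask = np.array([[1.0, 1.0, 0.0]])  # the third token is padding
video_q, sub_q, att = modular_queries(tokens, mask, rng.normal(size=(4, 2)))
print(att[0, 2])  # the padded token gets zero weight in both columns
```

With an all-zero weight matrix both queries reduce to the mean of the valid token features. The learned mapping is what lets the two queries emphasise different words.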

    @staticmethod
    def get_video_level_scores(modularied_query, context_feat, context_mask):
        """ Calculate video-level scores for each pair of query and video inside the batch.
        Args:
            modularied_query: (N, D)
            context_feat: (N, L, D), encoded context features
            context_mask: (N, L)
        Returns:
            query_context_scores: (N, N) cosine score of each query w.r.t. each video inside the batch
                (maximum over the video's valid clips); diagonal positions are positive pairs and the
                off-diagonal ones are used as negative samples.
        """
        modularied_query = F.normalize(modularied_query, dim=-1)
        context_feat = F.normalize(context_feat, dim=-1)
        query_context_scores = torch.einsum("md,nld->mln", modularied_query, context_feat)  # (N, L, N)
        context_mask = context_mask.transpose(0, 1).unsqueeze(0)  # (1, L, N)
        query_context_scores = mask_logits(query_context_scores, context_mask)  # (N, L, N)
        query_context_scores, _ = torch.max(query_context_scores, dim=1)  # (N, N) diagonal positions are positive pairs
        return query_context_scores

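`get_video_level_scores` works in four steps:

1. Cosine-normalize the queries and the clip features.
2. Score every query against every clip of every video.
3. Mask out padded clips.
4. Keep the best clip for each (query, video) pair.

The NumPy sketch below follows those steps with hand-picked 2-D vectors. Masking uses `np.where` in place of `mask_logits`, which is equivalent for 0/1 masks. Unlike `F.normalize`, the sketch does not guard against zero-length vectors. `video_level_scores` is a hypothetical name.

```python
import numpy as np


def video_level_scores(query, context, context_mask):
    """query: (N, D); context: (N, L, D); context_mask: (N, L). Returns (N, N) with queries on rows."""
    query = query / np.linalg.norm(query, axis=-1, keepdims=True)
    context = context / np.linalg.norm(context, axis=-1, keepdims=True)
    scores = np.einsum("md,nld->mln", query, context)  # (N, L, N)
    mask = context_mask.T[None]  # (1, L, N), same transpose as in the method
    scores = np.where(mask > 0, scores, -1e10)  # padded clips can never be the maximum
    return scores.max(axis=1)  # best clip per (query, video) pair


context = np.array([[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],    # video 0
                    [[-1.0, 0.0], [0.0, -1.0], [1.0, 0.0]]])  # video 1, last clip is padding
context_mask = np.array([[1.0, 1.0, 1.0], [1.0, 1.0, 0.0]])
query = np.array([[1.0, 0.0], [-1.0, 0.0]])
print(video_level_scores(query, context, context_mask))  # approximately [[1, 0], [0, 1]]
```

Without the mask, query 0 would score 1 against video 1 through that video's padded third clip. This shows why the masking step comes before the max.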

    @staticmethod
    def get_unnormalized_video_level_scores(modularied_query, context_feat, context_mask):
        """ Calculate raw (not cosine-normalized) query-clip scores for each pair of query and video in the batch.
        Args:
            modularied_query: (N, D)
            context_feat: (N, L, D), context features (the intermediate outputs in forward())
            context_mask: (N, L)
        Returns:
            query_context_scores: (N, L, N) masked dot-product score of each query w.r.t. each clip of each
                video inside the batch; the caller takes the max over L to obtain (N, N) scores whose
                diagonal positions are positive.
        """
        query_context_scores = torch.einsum("md,nld->mln", modularied_query, context_feat)  # (N, L, N)
        context_mask = context_mask.transpose(0, 1).unsqueeze(0)  # (1, L, N)
        query_context_scores = mask_logits(query_context_scores, context_mask)  # (N, L, N)
        return query_context_scores


    def get_merged_score(self, video_query, video_feat, sub_query, sub_feat, cross=False):
        video_query = self.video_query_linear(video_query)
        sub_query = self.sub_query_linear(sub_query)
        if cross:
            video_similarity = torch.einsum("md,nld->mnl", video_query, video_feat)
            sub_similarity = torch.einsum("md,nld->mnl", sub_query, sub_feat)
            similarity = (video_similarity + sub_similarity) / 2  # (Nq, Nv, L) from query to all videos.
        else:
            video_similarity = torch.einsum("bd,bld->bl", video_query, video_feat)  # (N, L)
            sub_similarity = torch.einsum("bd,bld->bl", sub_query, sub_feat)  # (N, L)
            similarity = (video_similarity + sub_similarity) / 2  # (N, L)
        return similarity


    def get_merged_st_ed_prob(self, similarity, context_mask, cross=False):
        if cross:
            n_q, n_c, length = similarity.shape
            similarity = similarity.view(n_q * n_c, 1, length)
            st_prob = self.merged_st_predictor(similarity).view(n_q, n_c, length)  # (Nq, Nv, L)
            ed_prob = self.merged_ed_predictor(similarity).view(n_q, n_c, length)  # (Nq, Nv, L)
        else:
            # squeeze only the channel dim; a bare squeeze() would also drop a size-1 batch or time dim
            st_prob = self.merged_st_predictor(similarity.unsqueeze(1)).squeeze(1)  # (N, L)
            ed_prob = self.merged_ed_predictor(similarity.unsqueeze(1)).squeeze(1)  # (N, L)
        st_prob = mask_logits(st_prob, context_mask)  # (N, L)
        ed_prob = mask_logits(ed_prob, context_mask)
        return st_prob, ed_prob

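The choice between `squeeze()` and `squeeze(1)` matters because the convolution output has layout `(N, 1, L)`. A bare `squeeze()` drops every size-1 axis, including the batch axis when N is 1 and the time axis when L is 1. `squeeze(1)` removes only the channel axis. `torch.Tensor.squeeze` follows the same rules as NumPy here, so the sketch below uses NumPy arrays. `channel_squeeze_shapes` is an illustrative helper.

```python
import numpy as np


def channel_squeeze_shapes(n, length):
    conv_out = np.zeros((n, 1, length))  # Conv1d output layout: (N, C=1, L)
    return conv_out.squeeze().shape, conv_out.squeeze(1).shape


print(channel_squeeze_shapes(4, 7))  # ((4, 7), (4, 7)): no difference for typical shapes
print(channel_squeeze_shapes(1, 7))  # ((7,), (1, 7)): bare squeeze loses the batch axis
print(channel_squeeze_shapes(4, 1))  # ((4,), (4, 1)): bare squeeze loses the time axis
```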

    def get_pred_from_raw_query(self, query_feat, query_mask, video_feat, video_mask, sub_feat, sub_mask, cross=False,
                                return_query_feats=False):
        """
        Args:
            query_feat: (N, Lq, Dq)
            query_mask: (N, Lq)
            video_feat: (N, Lv, D)
            video_mask: (N, Lv)
            sub_feat: (N, Lv, D)
            sub_mask: (N, Lv)
            cross: bool, if True score every query against every video in the batch, so st_prob/ed_prob
                are (Nq, Nv, Lv); otherwise only the paired query-video inputs are scored, giving (N, Lv)
            return_query_feats: bool, if True also return the modular video and subtitle queries
        """
        video_query, sub_query = self.encode_query(query_feat, query_mask)
        # get video-level retrieval scores
        video_q2ctx_scores = self.get_video_level_scores(video_query, video_feat, video_mask)
        sub_q2ctx_scores = self.get_video_level_scores(sub_query, sub_feat, sub_mask)
        q2ctx_scores = (video_q2ctx_scores + sub_q2ctx_scores) / 2  # (N, N)
        # compute start and end probs
        similarity = self.get_merged_score(video_query, video_feat, sub_query, sub_feat, cross=cross)
        st_prob, ed_prob = self.get_merged_st_ed_prob(similarity, video_mask, cross=cross)
        if return_query_feats:
            return video_query, sub_query, q2ctx_scores, st_prob, ed_prob
        else:
            return q2ctx_scores, st_prob, ed_prob  # st_prob/ed_prob are unnormalized, masked logits (no softmax)


    def get_video_level_loss(self, query_context_scores):
        """ ranking loss between (pos. query + pos. video) and (pos. query + neg. video) or (neg. query + pos. video)
        Args:
            query_context_scores: (N, N), cosine similarity [-1, 1],
                Each row contains the scores between the query and each of the videos inside the batch.
        """
        bsz = len(query_context_scores)
        diagonal_indices = torch.arange(bsz).to(query_context_scores.device)
        pos_scores = query_context_scores[diagonal_indices, diagonal_indices]  # (N, )
        # 999 is impossibly large for a cosine similarity, so each positive sorts first and is skipped;
        # a detached copy is modified because writing into the original in place would break autograd
        query_context_scores_masked = query_context_scores.detach().clone()
        query_context_scores_masked[diagonal_indices, diagonal_indices] = 999
        pos_query_neg_context_scores = self.get_neg_scores(query_context_scores, query_context_scores_masked)
        neg_query_pos_context_scores = self.get_neg_scores(query_context_scores.transpose(0, 1),
                                                           query_context_scores_masked.transpose(0, 1))
        loss_neg_ctx = self.get_ranking_loss(pos_scores, pos_query_neg_context_scores)
        loss_neg_q = self.get_ranking_loss(pos_scores, neg_query_pos_context_scores)
        return loss_neg_ctx, loss_neg_q

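`get_video_level_loss` compares each positive (diagonal) score against one sampled negative per row. It does this in both directions: query to video, and video to query via the transpose. The rest of `get_neg_scores` and all of `get_ranking_loss` fall outside this excerpt, so the NumPy sketch below rests on two assumptions:

- One negative is sampled uniformly per row from the allowed rank window `[sample_min_idx, sample_max_idx)`.
- The ranking loss is a hinge of the form `max(0, margin + neg - pos)`.

`sample_neg_scores`, `ranking_loss` and the margin value are illustrative assumptions, not the repository's code.

```python
import numpy as np


def sample_neg_scores(scores, use_hard_negative, hard_pool_size, rng):
    """scores: (N, N) with positives on the diagonal. Returns one sampled negative score per row."""
    bsz = len(scores)
    rows = np.arange(bsz)
    masked = scores.copy()
    masked[rows, rows] = 999  # positives sort first, so rank 0 is always skipped
    order = np.argsort(-masked, axis=1)  # column indices, highest score first
    sample_min_idx = 1
    sample_max_idx = min(sample_min_idx + hard_pool_size, bsz) if use_hard_negative else bsz
    ranks = rng.integers(sample_min_idx, sample_max_idx, size=bsz)  # upper bound is exclusive
    return scores[rows, order[rows, ranks]]


def ranking_loss(pos, neg, margin=0.1):
    # Assumed hinge: penalise a negative that is not at least `margin` below its positive.
    return np.maximum(0.0, margin + neg - pos).mean()


scores = np.array([[0.9, 0.5, 0.1],
                   [0.2, 0.8, 0.7],
                   [0.3, 0.6, 0.4]])
rng = np.random.default_rng(0)
pos = np.diag(scores)
neg_ctx = sample_neg_scores(scores, True, 1, rng)  # hardest negative video per query
neg_q = sample_neg_scores(scores.T, True, 1, rng)  # hardest negative query per video
print(neg_ctx, ranking_loss(pos, neg_ctx))
```

With `hard_pool_size = 1` the sampling becomes deterministic hardest-negative mining. Larger pools trade some of that pressure for more varied negatives.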

    def get_neg_scores(self, scores, scores_masked):
        """
        scores: (N, N), cosine similarity [-1, 1],
            Each row holds the scores query --> all videos; in the transposed version, video --> all queries.
        scores_masked: (N, N) the same as scores, except that the diagonal (positive) positions
            are masked with a large value.
        """
        bsz = len(scores)
        batch_indices = torch.arange(bsz).to(scores.device)
        _, sorted_scores_indices = torch.sort(scores_masked, descending=True, dim=1)
        sample_min_idx = 1  # skip the masked positive
        sample_max_idx = min(sample_min_idx + self.config.hard_pool_size, bsz) if self.config.use_hard_negative else bsz
        # (N, )

| 342 |