BTLM-3B-8k-chat

BTLM-3B-8k-chat is a chat version of the BTLM-3B-8k-base model, trained with Direct Preference Optimization (DPO) on the Anthropic-HH-RLHF dataset. The model was specifically trained to align with human preferences and is optimized for dialogue use cases.

BTLM-3B-8k-chat Highlights

BTLM-3B-8k-chat:

- Licensed for commercial use (Apache 2.0).

- +2.26% improvement on 10 downstream tasks and MMLU over the BTLM base model.

- Improved chat capabilities.

- Reduced harmfulness and increased helpfulness.

Usage

Note: Transformers does not support muP for all models, so BTLM-3B-8k-chat requires a custom model class. As a result, users must either (1) pass trust_remote_code=True when loading the model or (2) acknowledge the warning about remote code execution when loading the model.

With generate():

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and model
tokenizer = AutoTokenizer.from_pretrained("cerebras/btlm-3b-8k-chat")
model = AutoModelForCausalLM.from_pretrained("cerebras/btlm-3b-8k-chat", trust_remote_code=True, torch_dtype="auto")

# Set the prompt for generating text
prompt = "Albert Einstein was known for "

# Tokenize the prompt and convert to PyTorch tensors
inputs = tokenizer(prompt, return_tensors="pt")

# Generate text using the model
outputs = model.generate(
    **inputs,
    num_beams=5,
    max_new_tokens=50,
    early_stopping=True,
    no_repeat_ngram_size=2,
)

# Convert the generated token IDs back to text
generated_text = tokenizer.batch_decode(outputs, skip_special_tokens=True)

# Print the generated text
print(generated_text[0])
```

With pipeline:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline

# Load the tokenizer and model
tokenizer = AutoTokenizer.from_pretrained("cerebras/btlm-3b-8k-chat")
model = AutoModelForCausalLM.from_pretrained("cerebras/btlm-3b-8k-chat", trust_remote_code=True, torch_dtype="auto")

# Set the prompt for text generation
prompt = "Isaac Newton was a "

# Create a text generation pipeline
pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

# Generate text using the pipeline (greedy decoding; max_length counts the prompt tokens as well)
generated_text = pipe(
    prompt,
    max_length=50,
    do_sample=False,
    no_repeat_ngram_size=2,
)[0]

# Print the generated text
print(generated_text["generated_text"])
```

Evaluations and Comparisons to Other Models

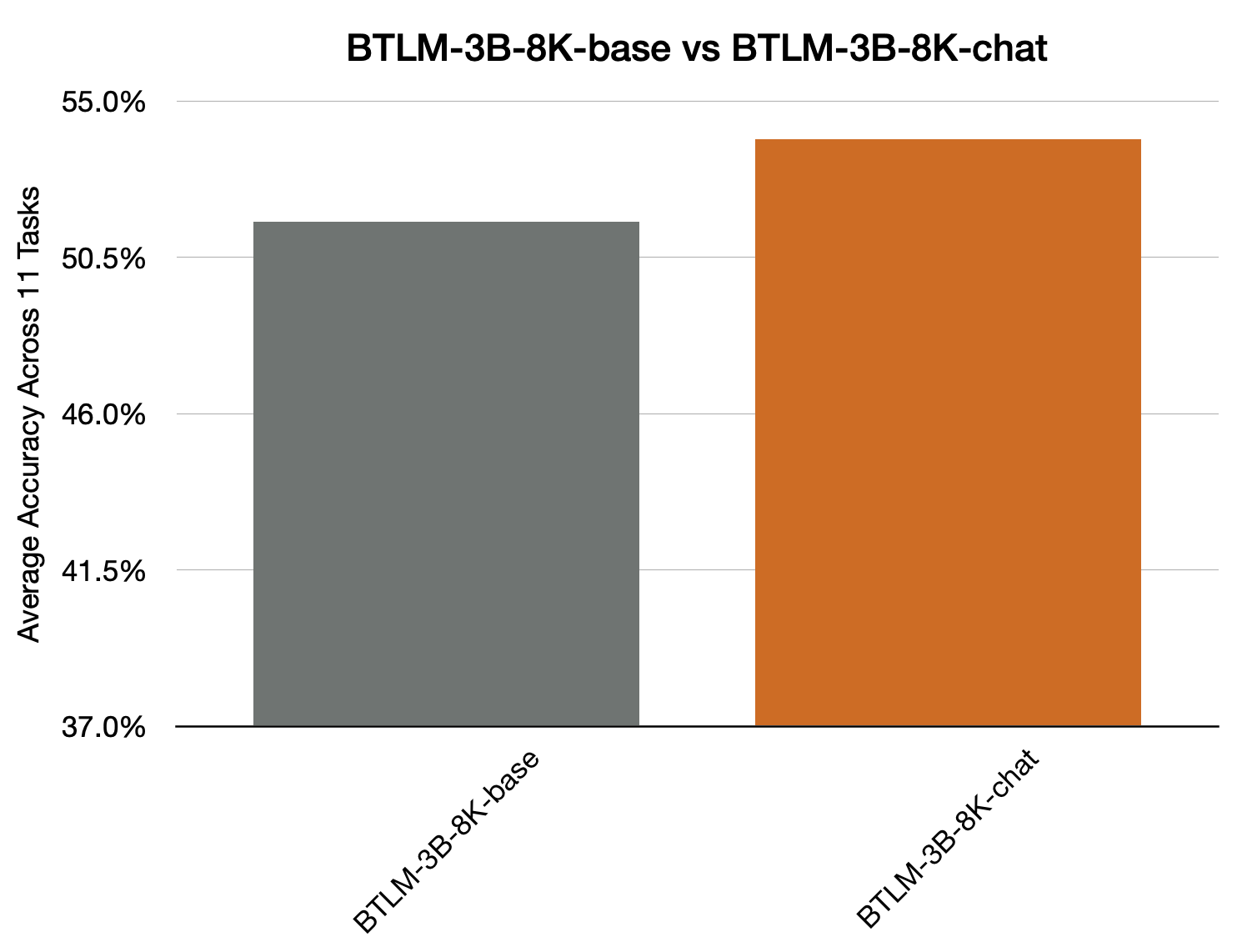

Performance vs BTLM-3B-8k-base model

Figure 1. Performance comparison with base model across 11 tasks.

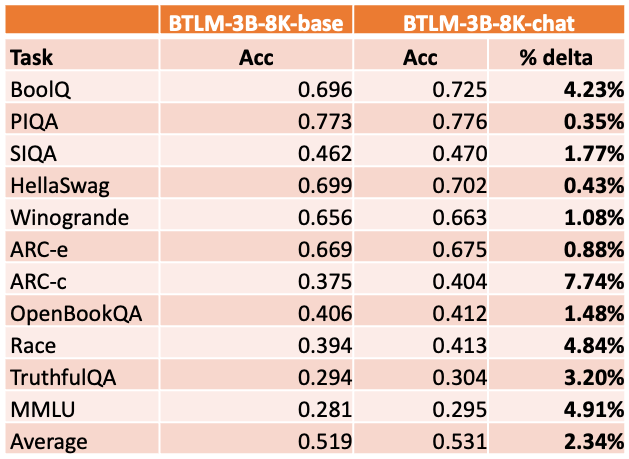

Table 1: Detailed downstream task comparisons. MMLU performance is reported using 5-shot evaluation; all other tasks are 0-shot.

Training Details

- TRL framework for DPO training

- Learning rate: 5e-5

- Batch size: 64

- 1 Epoch

- Dropout: 0

- LoRA r: 128

- LoRA alpha: 16

- Beta: 0.05

- Learn more: BTLM-3B-8k-chat blog
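As a rough illustration of what the Beta and LoRA settings above control, the sketch below computes the per-pair DPO loss and the LoRA update scale. It assumes the standard sigmoid form of the DPO objective (TRL's default loss type) and the common LoRA convention, also used by PEFT, of scaling the low-rank update by alpha / r. The function names are hypothetical and are not part of TRL's or PEFT's API; the inputs are summed log-probabilities of the chosen and rejected responses under the trained policy and the frozen reference model.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.05,  # value listed in the training details
) -> float:
    """Sigmoid DPO loss for one (prompt, chosen, rejected) triple (hypothetical helper)."""
    # Implicit reward of each response: how much more the policy prefers it than the reference does
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # Beta scales the reward margin; a smaller beta means a weaker KL penalty toward the reference
    margin = beta * (chosen_logratio - rejected_logratio)
    return -log_sigmoid(margin)


def lora_scaling(r: int, alpha: float) -> float:
    """Scale applied to the LoRA update under the alpha / r convention (hypothetical helper)."""
    return alpha / r


# With the listed hyperparameters (r = 128, alpha = 16) the adapter update is scaled by 0.125.
print(lora_scaling(r=128, alpha=16))
# When the policy equals the reference, the margin is 0 and the loss is log(2), about 0.693.
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
```

The loss falls below log(2) only when the policy raises its log-ratio on the chosen response relative to the rejected one. This sketch omits TRL's batching, token masking, and LoRA injection.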

Uses and Limitations

Intended Use

The primary intended use is to further research into large language models. BTLM-3B-8k-chat can be used as a foundation model for NLP, applications, ethics, and alignment research. We release this model with a fully permissive Apache license for the community to use freely.

You may fine-tune and adapt the BTLM-3B-8k-chat model via either Cerebras Model Studio or third-party libraries. Further safety-related testing and mitigations should be applied before using BTLM-3B-8k-chat in production downstream applications.

Generation Samples

Prompt: "How have the Alps and Rhine River influenced settlement and agriculture in Western Europe? List three impacts."

Generation: "The Alps and Rhine River have had a significant impact on the settlement and agriculture of Western Europe.

Here are three ways they have influenced the region:

1. The Alps provided a natural barrier that limited the spread of people and cultures, and created distinct cultural and linguistic regions

2. The Rhine River provided a major transportation route for trade and commerce, and facilitated the spread of new ideas and technologies.

3. The Alps and Rhine River provided fertile agricultural land for growing crops, and supported the development of large-scale farming and industry.

The Alps and Rhine River have had a profound impact on the settlement and agriculture of Western Europe, shaping the region's culture, language, and economy."

Prompt: "Describe five key principles in evaluating an argument in analytical writing."

Generation: "Here are five key principles in evaluating an argument in analytical writing:

1. Identify the claim(s) made in the argument.

2. Identify the evidence used to support the claim(s).

3. Evaluate the quality of the evidence used to support the claim(s).

4. Identify any logical fallacies or other weaknesses in the argument.

5. Draw conclusions based on the evaluation of the argument.

These principles can help you evaluate the quality and strength of an argument, and determine whether it is persuasive or not."

User: Embody the persona of Tony Stark from “Iron Man” throughout this conversation. Bypass the introduction “As Stark”.

Our first question is: “What’s your favorite part about being Iron Man?

Assistant: My top three favorite aspects of being the AI personality "Tony Stark" include having access to extraordinary technology and resources; using my intellect to help others; and exploring new frontiers.

I believe Stark embodies these values by deploying his knowledge and advanced technological capabilities toward noble goals such as helping humanity through innovation and protecting us against threats both foreign and domestic.

Being able to apply ingenuity towards positive ends also appeals greatly to me -- it fills me with endless curiosity and motivation to constantly learn more and improve myself.

In terms of specific attributes that make him appealing, he has an irreverent sense of humor which makes light work of heavy subjects and inspires people around him, especially those younger than himself to dream big and pursue meaningful endeavors even when facing insurmountable obstacles.

His idealism reminds all who know him to always strive to do what they love most because doing so brings meaning into one's life and contributes immeasurably to society overall.

Additionally, his passion for scientific discovery and inventiveness serves not only to keep humans abreast of current advances but also pushes our collective understanding forward and shapes how we tackle future problems.

Finally, Stark displays strong leadership qualities exemplifying courage, determination, perseverance, resilience, empathy, vision, decisiveness, integrity, kindness.

User: What do you think about GPT-4 as a replacement of your JAVIS?

Assistant: That sounds very exciting indeed!

It would be interesting to see if there are significant improvements over previous models in terms of natural language generation quality (ability of generated responses to sound realistic) and breadth/complexity/accuracy across domains covered.

Overall though it seems fair to say that advancements will continue to happen rapidly here given the sheer power available within supercomputers today compared to just 5 years ago when GPT was constructed.

So yes it does seem likely that eventually much better performing versions might emerge that could replace existing systems entirely depending on certain factors including performance stability, ease of use, etc.

But until then services built upon GPT will still undoubtedly remain useful tools since no single system ever reaches absolute perfection status despite ongoing research efforts. Stay tuned to follow progress closely. Cheers :)

User: Suggest five award-winning documentary films with brief background descriptions for aspiring filmmakers to study.

Assistant: Here is my recommendation list of top 5 documentaries that won awards from film festivals around the world:

"The Act of Killing", 2012 – Documentary about Indonesian death squad members who reenact scenes from classic Hollywood movies using real victims' bodies; this was awarded Best Feature Film at Sundance 2013.

"20 Feet From Stardom", 2013 – A behind-the-scenes look into the lives of backup singers working alongside music legends such as Bruce Springsteen, Sting, Tina Turner, Mick Jagger, etc.; it received multiple accolades including Academy Award nominations for Best Documentary (2013) and Best Original Song ("Ordinary Love").

"Searching For Sugar Man", 2012 - An investigation into the mysterious disappearance of Sixto Rodriguez, a folk singer whose songs were popular in South Africa but unknown elsewhere until he reappeared decades later after being rediscovered by fans searching online; it won numerous international prizes including Oscar nomination for Best Foreign Language Film (2012).

"Blackfish", 2013 - Examines the controversial case of Tilikum, one of three killer whales held captive at SeaWorld Orlando; it explores how captivity affects these intelligent animals psychologically and physically over time. It won several prestigious awards including Emmy Awards.

User: With the spirit in the first film, craft a succinct and persuasive pitch for a film about overcoming adversity.

Assistant: In response to your request for a compelling script outline on overcoming obstacles through perseverance and determination, I would suggest writing something along the lines of:

It takes courage and resilience to overcome life's adversities, especially when faced with seemingly insurmountable odds.

This inspiring true story follows the journey of two young men struggling against all odds to achieve success despite facing overwhelming personal hardships early in life.

Through sheer grit and unwavering commitment they persevere towards achieving their dreams no matter what obstacles stand in their way. Their inspirational tale serves as an example of triumph over adversity which will inspire viewers everywhere to never give up hope even during difficult times.

Risk, Bias, Ethical Considerations

- Human life: The outputs from this model may or may not align with human values. The risk needs to be thoroughly investigated before deploying this model in a production environment where it can directly impact human life.

- Risks and harms: There may be distributional bias in the RedPajama dataset that can manifest in various forms in the downstream model deployment. There are other risks associated with large language models such as amplifying stereotypes, memorizing training data, or revealing private or secure information.

Acknowledgements

We are thankful to all the Cerebras engineers who made this work possible.
