url

stringlengths 61

61

| repository_url

stringclasses 1

value | labels_url

stringlengths 75

75

| comments_url

stringlengths 70

70

| events_url

stringlengths 68

68

| html_url

stringlengths 49

51

| id

int64 2.28B

2.65B

| node_id

stringlengths 18

19

| number

int64 6.87k

7.29k

| title

stringlengths 2

159

| user

dict | labels

listlengths 0

2

| state

stringclasses 2

values | locked

bool 1

class | assignee

dict | assignees

listlengths 0

1

| milestone

dict | comments

sequencelengths 0

21

| created_at

unknown | updated_at

unknown | closed_at

unknown | author_association

stringclasses 4

values | active_lock_reason

float64 | body

stringlengths 10

47.9k

⌀ | closed_by

dict | reactions

dict | timeline_url

stringlengths 70

70

| performed_via_github_app

float64 | state_reason

stringclasses 3

values | draft

float64 0

1

⌀ | pull_request

dict | is_pull_request

bool 2

classes |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/7180 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7180/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7180/comments | https://api.github.com/repos/huggingface/datasets/issues/7180/events | https://github.com/huggingface/datasets/issues/7180 | 2,554,244,750 | I_kwDODunzps6YPq6O | 7,180 | Memory leak when wrapping datasets into PyTorch Dataset without explicit deletion | {

"avatar_url": "https://avatars.githubusercontent.com/u/38123329?v=4",

"events_url": "https://api.github.com/users/iamwangyabin/events{/privacy}",

"followers_url": "https://api.github.com/users/iamwangyabin/followers",

"following_url": "https://api.github.com/users/iamwangyabin/following{/other_user}",

"gists_url": "https://api.github.com/users/iamwangyabin/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/iamwangyabin",

"id": 38123329,

"login": "iamwangyabin",

"node_id": "MDQ6VXNlcjM4MTIzMzI5",

"organizations_url": "https://api.github.com/users/iamwangyabin/orgs",

"received_events_url": "https://api.github.com/users/iamwangyabin/received_events",

"repos_url": "https://api.github.com/users/iamwangyabin/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/iamwangyabin/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/iamwangyabin/subscriptions",

"type": "User",

"url": "https://api.github.com/users/iamwangyabin",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"> I've encountered a memory leak when wrapping the HuggingFace dataset into a PyTorch Dataset. The RAM usage constantly increases during iteration if items are not explicitly deleted after use.\r\n\r\nDatasets are memory mapped so they work like SWAP memory. In particular as long as you have RAM available the data will stay in RAM, and get paged out once your system needs RAM for something else (no OOM).\r\n\r\nrelated: https://github.com/huggingface/datasets/issues/4883"

] | "2024-09-28T14:00:47Z" | "2024-09-30T12:07:56Z" | "2024-09-30T12:07:56Z" | NONE | null | ### Describe the bug

I've encountered a memory leak when wrapping the HuggingFace dataset into a PyTorch Dataset. The RAM usage constantly increases during iteration if items are not explicitly deleted after use.

### Steps to reproduce the bug

Steps to reproduce:

Create a PyTorch Dataset wrapper for 'nebula/cc12m':

````
import io

from PIL import Image
from torch.utils.data import Dataset
from tqdm import tqdm
from datasets import load_dataset
from torchvision import transforms

# Disable Pillow's decompression-bomb size check for very large images.
Image.MAX_IMAGE_PIXELS = None

class CC12M(Dataset):
    def __init__(self, path_or_name='nebula/cc12m', split='train', transform=None, single_caption=True):
        self.raw_dataset = load_dataset(path_or_name)[split]
        if transform is None:
            # Default preprocessing: resize, crop, convert to tensor, normalize.
            self.transform = transforms.Compose([
                transforms.Resize((224, 224)),
                transforms.CenterCrop(224),
                transforms.ToTensor(),
                transforms.Normalize(
                    mean=[0.48145466, 0.4578275, 0.40821073],
                    std=[0.26862954, 0.26130258, 0.27577711]
                )
            ])
        else:
            self.transform = transforms.Compose(transform)
        self.single_caption = single_caption
        self.length = len(self.raw_dataset)

    def __len__(self):
        return self.length

    def __getitem__(self, index):
        item = self.raw_dataset[index]
        caption = item['txt']
        # Decode the raw WebP bytes into an RGB image.
        with io.BytesIO(item['webp']) as buffer:
            image = Image.open(buffer).convert('RGB')
        if self.transform:
            image = self.transform(image)
        # del item # Uncomment this line to prevent the memory leak
        return image, caption
````

Iterate through the dataset without the `del item` line in `__getitem__`.

Observe RAM usage increasing constantly.

Add `del item` at the end of `__getitem__`:

```
def __getitem__(self, index):
    item = self.raw_dataset[index]
    caption = item['txt']
    with io.BytesIO(item['webp']) as buffer:
        image = Image.open(buffer).convert('RGB')
    if self.transform:
        image = self.transform(image)
    del item # This line prevents the memory leak
    return image, caption
```

Iterate through the dataset again and observe that RAM usage remains stable.
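
One possible way to make the "observe RAM usage" steps measurable is sketched below. It is not part of the original report: the helper names `current_rss_mb` and `track_rss` are hypothetical, and the sketch assumes a Linux host (as in the environment info) where `/proc/self/statm` is available.

```python
import os


def current_rss_mb():
    """Return this process's resident set size in MiB, or NaN if unavailable."""
    try:
        # Linux only: the second field of /proc/self/statm is resident pages.
        with open("/proc/self/statm") as f:
            resident_pages = int(f.read().split()[1])
        return resident_pages * os.sysconf("SC_PAGE_SIZE") / 2**20
    except (OSError, ValueError, IndexError):
        # No /proc filesystem (e.g. macOS, Windows): report "unknown".
        return float("nan")


def track_rss(dataset, every=1000):
    """Index through `dataset` and record (index, rss_mb) every `every` items."""
    samples = []
    for i in range(len(dataset)):
        _ = dataset[i]  # fetch the item and drop it immediately
        if i % every == 0:
            samples.append((i, current_rss_mb()))
    return samples
```

Calling `track_rss(CC12M(), every=10_000)` with and without the `del item` line would give two series to compare. Resident set size also counts memory-mapped pages that the operating system can page out again, so a rising number here may not indicate a leak on its own.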

### Expected behavior

Expected behavior:

RAM usage should remain stable during iteration without needing to explicitly delete items.

Actual behavior:

RAM usage constantly increases unless items are explicitly deleted after use.

### Environment info

- `datasets` version: 2.21.0

- Platform: Linux-4.18.0-513.5.1.el8_9.x86_64-x86_64-with-glibc2.28

- Python version: 3.12.4

- `huggingface_hub` version: 0.24.6

- PyArrow version: 17.0.0

- Pandas version: 2.2.2

- `fsspec` version: 2024.6.1

| {

"avatar_url": "https://avatars.githubusercontent.com/u/38123329?v=4",

"events_url": "https://api.github.com/users/iamwangyabin/events{/privacy}",

"followers_url": "https://api.github.com/users/iamwangyabin/followers",

"following_url": "https://api.github.com/users/iamwangyabin/following{/other_user}",

"gists_url": "https://api.github.com/users/iamwangyabin/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/iamwangyabin",

"id": 38123329,

"login": "iamwangyabin",

"node_id": "MDQ6VXNlcjM4MTIzMzI5",

"organizations_url": "https://api.github.com/users/iamwangyabin/orgs",

"received_events_url": "https://api.github.com/users/iamwangyabin/received_events",

"repos_url": "https://api.github.com/users/iamwangyabin/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/iamwangyabin/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/iamwangyabin/subscriptions",

"type": "User",

"url": "https://api.github.com/users/iamwangyabin",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7180/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7180/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/7179 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7179/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7179/comments | https://api.github.com/repos/huggingface/datasets/issues/7179/events | https://github.com/huggingface/datasets/pull/7179 | 2,552,387,980 | PR_kwDODunzps585Jcd | 7,179 | Support Python 3.11 | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7179). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | "2024-09-27T08:55:44Z" | "2024-10-08T16:21:06Z" | "2024-10-08T16:21:03Z" | MEMBER | null | Support Python 3.11.

Fix #7178. | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7179/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7179/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7179.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7179",

"merged_at": "2024-10-08T16:21:03Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7179.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7179"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7178 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7178/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7178/comments | https://api.github.com/repos/huggingface/datasets/issues/7178/events | https://github.com/huggingface/datasets/issues/7178 | 2,552,378,330 | I_kwDODunzps6YIjPa | 7,178 | Support Python 3.11 | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] | closed | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null | [] | "2024-09-27T08:50:47Z" | "2024-10-08T16:21:04Z" | "2024-10-08T16:21:04Z" | MEMBER | null | Support Python 3.11: https://peps.python.org/pep-0664/ | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7178/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7178/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/7177 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7177/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7177/comments | https://api.github.com/repos/huggingface/datasets/issues/7177/events | https://github.com/huggingface/datasets/pull/7177 | 2,552,371,082 | PR_kwDODunzps585Fx2 | 7,177 | Fix release instructions | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7177). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | "2024-09-27T08:47:01Z" | "2024-09-27T08:57:35Z" | "2024-09-27T08:57:32Z" | MEMBER | null | Fix release instructions.

During last release, I had to make this additional update. | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7177/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7177/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7177.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7177",

"merged_at": "2024-09-27T08:57:32Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7177.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7177"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7176 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7176/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7176/comments | https://api.github.com/repos/huggingface/datasets/issues/7176/events | https://github.com/huggingface/datasets/pull/7176 | 2,551,025,564 | PR_kwDODunzps580hTn | 7,176 | fix grammar in fingerprint.py | {

"avatar_url": "https://avatars.githubusercontent.com/u/13238952?v=4",

"events_url": "https://api.github.com/users/jxmorris12/events{/privacy}",

"followers_url": "https://api.github.com/users/jxmorris12/followers",

"following_url": "https://api.github.com/users/jxmorris12/following{/other_user}",

"gists_url": "https://api.github.com/users/jxmorris12/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/jxmorris12",

"id": 13238952,

"login": "jxmorris12",

"node_id": "MDQ6VXNlcjEzMjM4OTUy",

"organizations_url": "https://api.github.com/users/jxmorris12/orgs",

"received_events_url": "https://api.github.com/users/jxmorris12/received_events",

"repos_url": "https://api.github.com/users/jxmorris12/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/jxmorris12/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jxmorris12/subscriptions",

"type": "User",

"url": "https://api.github.com/users/jxmorris12",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [] | "2024-09-26T16:13:42Z" | "2024-09-26T16:13:42Z" | null | CONTRIBUTOR | null | I see this error all the time and it was starting to get to me. | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7176/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7176/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7176.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7176",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/7176.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7176"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7175 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7175/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7175/comments | https://api.github.com/repos/huggingface/datasets/issues/7175/events | https://github.com/huggingface/datasets/issues/7175 | 2,550,957,337 | I_kwDODunzps6YDIUZ | 7,175 | [FSTimeoutError] load_dataset | {

"avatar_url": "https://avatars.githubusercontent.com/u/53268607?v=4",

"events_url": "https://api.github.com/users/cosmo3769/events{/privacy}",

"followers_url": "https://api.github.com/users/cosmo3769/followers",

"following_url": "https://api.github.com/users/cosmo3769/following{/other_user}",

"gists_url": "https://api.github.com/users/cosmo3769/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/cosmo3769",

"id": 53268607,

"login": "cosmo3769",

"node_id": "MDQ6VXNlcjUzMjY4NjA3",

"organizations_url": "https://api.github.com/users/cosmo3769/orgs",

"received_events_url": "https://api.github.com/users/cosmo3769/received_events",

"repos_url": "https://api.github.com/users/cosmo3769/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/cosmo3769/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cosmo3769/subscriptions",

"type": "User",

"url": "https://api.github.com/users/cosmo3769",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"Is this `FSTimeoutError` due to download network issue from remote resource (from where it is being accessed)?",

"It seems to happen for all datasets, not just a specific one, and especially for versions after 3.0. (3.0.0, 3.0.1 have this problem)\r\n\r\nI had the same error on a different dataset, but after downgrading to datasets==2.21.0, the problem was solved.",

"Same as https://github.com/huggingface/datasets/issues/7164\r\n\r\nThis dataset is made of a python script that downloads data from elsewhere than HF, so availability depends on the original host. Ultimately it would be nice to host the files of this dataset on HF\r\n\r\nin `datasets` <3.0 there were lots of mechanisms that got removed after the decision to make datasets with python loading scripts legacy for security and maintenance reasons (we only do very basic support now)",

"@lhoestq Thank you for the clarification! Closing the issue.",

"I'm getting this too, and also at 5 minutes. But for `CSTR-Edinburgh/vctk`, so it's not just this dataset, it seems to be a timeout that was introduced and needs to be raised. The progress bar was moving along just fine before the timeout, and I get more or less of it depending on how fast the network is.",

"You can change the `aiohttp` timeout from 5min to 1h like this:\r\n\r\n```python\r\nimport datasets, aiohttp\r\ndataset = datasets.load_dataset(\r\n dataset_name,\r\n storage_options={'client_kwargs': {'timeout': aiohttp.ClientTimeout(total=3600)}}\r\n)\r\n```"

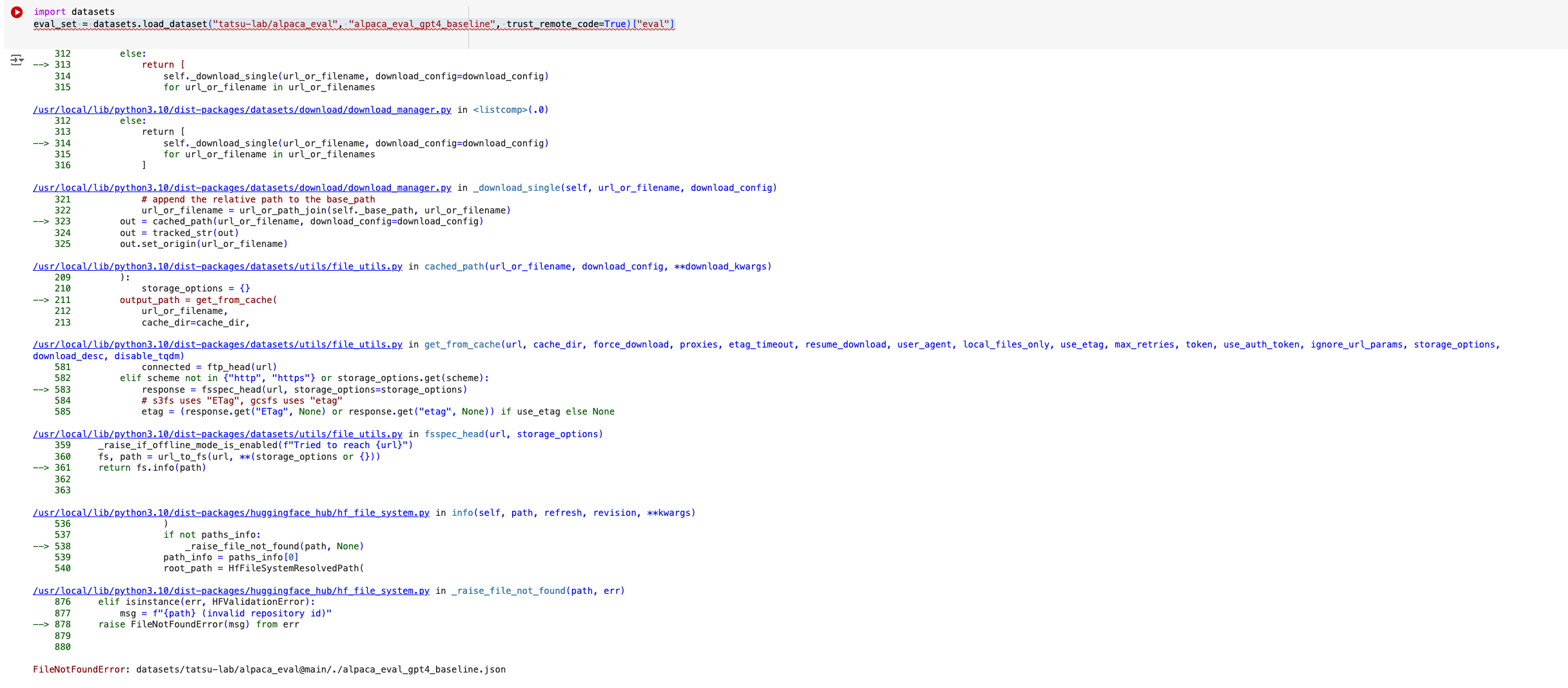

] | "2024-09-26T15:42:29Z" | "2024-10-26T16:12:57Z" | "2024-09-30T17:28:35Z" | NONE | null | ### Describe the bug

When using `load_dataset` to load [HuggingFaceM4/VQAv2](https://huggingface.co/datasets/HuggingFaceM4/VQAv2), I am getting `FSTimeoutError`.

### Error

```

TimeoutError:

The above exception was the direct cause of the following exception:

FSTimeoutError Traceback (most recent call last)

[/usr/local/lib/python3.10/dist-packages/fsspec/asyn.py](https://klh9mr78js-496ff2e9c6d22116-0-colab.googleusercontent.com/outputframe.html?vrz=colab_20240924-060116_RC00_678132060#) in sync(loop, func, timeout, *args, **kwargs)

99 if isinstance(return_result, asyncio.TimeoutError):

100 # suppress asyncio.TimeoutError, raise FSTimeoutError

--> 101 raise FSTimeoutError from return_result

102 elif isinstance(return_result, BaseException):

103 raise return_result

FSTimeoutError:

```

It usually fails around 5-6 GB.

<img width="847" alt="Screenshot 2024-09-26 at 9 10 19 PM" src="https://github.com/user-attachments/assets/ff91995a-fb55-4de6-8214-94025d6c8470">

### Steps to reproduce the bug

To reproduce it, run this in a Colab notebook:

```

!pip install -q -U datasets

from datasets import load_dataset

ds = load_dataset('HuggingFaceM4/VQAv2', split="train[:10%]")

```

### Expected behavior

It should download properly.

### Environment info

Using Colab Notebook. | {

"avatar_url": "https://avatars.githubusercontent.com/u/53268607?v=4",

"events_url": "https://api.github.com/users/cosmo3769/events{/privacy}",

"followers_url": "https://api.github.com/users/cosmo3769/followers",

"following_url": "https://api.github.com/users/cosmo3769/following{/other_user}",

"gists_url": "https://api.github.com/users/cosmo3769/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/cosmo3769",

"id": 53268607,

"login": "cosmo3769",

"node_id": "MDQ6VXNlcjUzMjY4NjA3",

"organizations_url": "https://api.github.com/users/cosmo3769/orgs",

"received_events_url": "https://api.github.com/users/cosmo3769/received_events",

"repos_url": "https://api.github.com/users/cosmo3769/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/cosmo3769/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cosmo3769/subscriptions",

"type": "User",

"url": "https://api.github.com/users/cosmo3769",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7175/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7175/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/7174 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7174/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7174/comments | https://api.github.com/repos/huggingface/datasets/issues/7174/events | https://github.com/huggingface/datasets/pull/7174 | 2,549,892,315 | PR_kwDODunzps58wluR | 7,174 | Set dev version | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7174). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | "2024-09-26T08:30:11Z" | "2024-09-26T08:32:39Z" | "2024-09-26T08:30:21Z" | MEMBER | null | null | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7174/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7174/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7174.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7174",

"merged_at": "2024-09-26T08:30:21Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7174.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7174"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7173 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7173/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7173/comments | https://api.github.com/repos/huggingface/datasets/issues/7173/events | https://github.com/huggingface/datasets/pull/7173 | 2,549,882,529 | PR_kwDODunzps58wjjc | 7,173 | Release: 3.0.1 | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7173). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | "2024-09-26T08:25:54Z" | "2024-09-26T08:28:29Z" | "2024-09-26T08:26:03Z" | MEMBER | null | null | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7173/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7173/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7173.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7173",

"merged_at": "2024-09-26T08:26:03Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7173.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7173"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7172 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7172/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7172/comments | https://api.github.com/repos/huggingface/datasets/issues/7172/events | https://github.com/huggingface/datasets/pull/7172 | 2,549,781,691 | PR_kwDODunzps58wNQ7 | 7,172 | Add torchdata as a regular test dependency | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7172). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | "2024-09-26T07:45:55Z" | "2024-09-26T08:12:12Z" | "2024-09-26T08:05:40Z" | MEMBER | null | Add `torchdata` as a regular test dependency.

Note that `torchdata` was previously installed from its repository, and the current main branch there (0.10.0.dev) requires Python>=3.9.

Also note they made a recent release: 0.8.0 on Jul 31, 2024.

Fix #7171. | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7172/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7172/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7172.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7172",

"merged_at": "2024-09-26T08:05:40Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7172.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7172"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7171 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7171/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7171/comments | https://api.github.com/repos/huggingface/datasets/issues/7171/events | https://github.com/huggingface/datasets/issues/7171 | 2,549,738,919 | I_kwDODunzps6X-e2n | 7,171 | CI is broken: No solution found when resolving dependencies | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null | [] | "2024-09-26T07:24:58Z" | "2024-09-26T08:05:41Z" | "2024-09-26T08:05:41Z" | MEMBER | null | See: https://github.com/huggingface/datasets/actions/runs/11046967444/job/30687294297

```

Run uv pip install --system -r additional-tests-requirements.txt --no-deps

× No solution found when resolving dependencies:

╰─▶ Because the current Python version (3.8.18) does not satisfy Python>=3.9

and torchdata==0.10.0a0+1a98f21 depends on Python>=3.9, we can conclude

that torchdata==0.10.0a0+1a98f21 cannot be used.

And because only torchdata==0.10.0a0+1a98f21 is available and

you require torchdata, we can conclude that your requirements are

unsatisfiable.

Error: Process completed with exit code 1.

``` | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7171/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7171/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/7170 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7170/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7170/comments | https://api.github.com/repos/huggingface/datasets/issues/7170/events | https://github.com/huggingface/datasets/pull/7170 | 2,546,944,016 | PR_kwDODunzps58mfF5 | 7,170 | Support JSON lines with missing columns | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7170). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | "2024-09-25T05:08:15Z" | "2024-09-26T06:42:09Z" | "2024-09-26T06:42:07Z" | MEMBER | null | Support JSON lines with missing columns.

Fix #7169.

The implemented test raised:

```

datasets.table.CastError: Couldn't cast

age: int64

to

{'age': Value(dtype='int32', id=None), 'name': Value(dtype='string', id=None)}

because column names don't match

```

Related to:

- #7160

- #7162 | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7170/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7170/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7170.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7170",

"merged_at": "2024-09-26T06:42:07Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7170.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7170"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7169 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7169/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7169/comments | https://api.github.com/repos/huggingface/datasets/issues/7169/events | https://github.com/huggingface/datasets/issues/7169 | 2,546,894,076 | I_kwDODunzps6XzoT8 | 7,169 | JSON lines with missing columns raise CastError | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [

{

"color": "d73a4a",

"default": true,

"description": "Something isn't working",

"id": 1935892857,

"name": "bug",

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug"

}

] | closed | false | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

}

] | null | [] | "2024-09-25T04:43:28Z" | "2024-09-26T06:42:08Z" | "2024-09-26T06:42:08Z" | MEMBER | null | JSON lines with missing columns raise CastError:

> CastError: Couldn't cast ... to ... because column names don't match
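
To make the failing input concrete, here is a minimal stdlib-only sketch of JSON lines where one record lacks a column, plus a normalization step that fills the gap with `None`. This is an illustration of the input shape, not the fix implemented in the library; `normalize_json_lines` and the sample records are hypothetical names and data.

```python
import json


def normalize_json_lines(lines, columns):
    """Parse JSON lines and give every row the same set of columns.

    Keys missing from a record are filled with None, so all rows
    share one schema. Hypothetical helper, for illustration only.
    """
    rows = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        rows.append({name: record.get(name) for name in columns})
    return rows


# The second record has no "name" key, which is the situation described above.
sample = ['{"age": 30, "name": "Alice"}', '{"age": 25}']
rows = normalize_json_lines(sample, ["age", "name"])
```

After normalization both rows expose `age` and `name`, so a column-name comparison between rows no longer differs.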

Related to:

- #7159

- #7161 | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7169/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7169/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/7168 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7168/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7168/comments | https://api.github.com/repos/huggingface/datasets/issues/7168/events | https://github.com/huggingface/datasets/issues/7168 | 2,546,710,631 | I_kwDODunzps6Xy7hn | 7,168 | sd1.5 diffusers controlnet training script gives new error | {

"avatar_url": "https://avatars.githubusercontent.com/u/90132896?v=4",

"events_url": "https://api.github.com/users/Night1099/events{/privacy}",

"followers_url": "https://api.github.com/users/Night1099/followers",

"following_url": "https://api.github.com/users/Night1099/following{/other_user}",

"gists_url": "https://api.github.com/users/Night1099/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Night1099",

"id": 90132896,

"login": "Night1099",

"node_id": "MDQ6VXNlcjkwMTMyODk2",

"organizations_url": "https://api.github.com/users/Night1099/orgs",

"received_events_url": "https://api.github.com/users/Night1099/received_events",

"repos_url": "https://api.github.com/users/Night1099/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Night1099/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Night1099/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Night1099",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"not sure why the issue is formatting oddly",

"I guess this is a dupe of\r\n\r\nhttps://github.com/huggingface/datasets/issues/7071",

"this turned out to be because of a bad image in dataset"

] | "2024-09-25T01:42:49Z" | "2024-09-30T05:24:03Z" | "2024-09-30T05:24:02Z" | NONE | null | ### Describe the bug

This will randomly pop up during training now

```

Traceback (most recent call last):

File "/workspace/diffusers/examples/controlnet/train_controlnet.py", line 1192, in <module>

main(args)

File "/workspace/diffusers/examples/controlnet/train_controlnet.py", line 1041, in main

for step, batch in enumerate(train_dataloader):

File "/usr/local/lib/python3.11/dist-packages/accelerate/data_loader.py", line 561, in __iter__

next_batch = next(dataloader_iter)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/torch/utils/data/dataloader.py", line 630, in __next__

data = self._next_data()

^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/torch/utils/data/dataloader.py", line 673, in _next_data

data = self._dataset_fetcher.fetch(index) # may raise StopIteration

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/torch/utils/data/_utils/fetch.py", line 50, in fetch

data = self.dataset.__getitems__(possibly_batched_index)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 2746, in __getitems__

batch = self.__getitem__(keys)

^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 2742, in __getitem__

return self._getitem(key)

^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 2727, in _getitem

formatted_output = format_table(

^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 639, in format_table

return formatter(pa_table, query_type=query_type)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 407, in __call__

return self.format_batch(pa_table)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 521, in format_batch

batch = self.python_features_decoder.decode_batch(batch)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/formatting/formatting.py", line 228, in decode_batch

return self.features.decode_batch(batch) if self.features else batch

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/features/features.py", line 2084, in decode_batch

[

File "/usr/local/lib/python3.11/dist-packages/datasets/features/features.py", line 2085, in <listcomp>

decode_nested_example(self[column_name], value, token_per_repo_id=token_per_repo_id)

File "/usr/local/lib/python3.11/dist-packages/datasets/features/features.py", line 1403, in decode_nested_example

return schema.decode_example(obj, token_per_repo_id=token_per_repo_id)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/features/image.py", line 188, in decode_example

image.load() # to avoid "Too many open files" errors

```

### Steps to reproduce the bug

Train with the diffusers SD 1.5 ControlNet example script.

The error appears at random; the wandb chart below shows where I manually resumed the run each time it occurred.

### Expected behavior

Training continues without the error above.
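
Since a later comment in this thread traced the failure to a bad image in the dataset, one way to locate such an example is to index every item once and record which indices raise. The sketch below is a generic, stdlib-only version of that idea. `find_failing_indices` and `FakeImageDataset` are hypothetical names; the fake dataset only simulates a decode failure and is not the real `datasets.Dataset`.

```python
def find_failing_indices(dataset):
    """Return (index, error description) pairs for examples that fail to load.

    Works with any object that supports len() and integer indexing.
    """
    failures = []
    for index in range(len(dataset)):
        try:
            dataset[index]  # accessing the item triggers decoding
        except Exception as error:
            failures.append((index, f"{type(error).__name__}: {error}"))
    return failures


class FakeImageDataset:
    """Hypothetical stand-in: index 2 simulates a corrupt image file."""

    def __len__(self):
        return 4

    def __getitem__(self, index):
        if index == 2:
            raise OSError("image file is truncated")
        return {"image": f"decoded-{index}"}


failures = find_failing_indices(FakeImageDataset())
```

Running such a scan once before training gives the indices to drop or repair, instead of discovering them through random crashes mid-run.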

### Environment info

- datasets version: 3.0.0

- Platform: Linux-6.5.0-44-generic-x86_64-with-glibc2.35

- Python version: 3.11.9

- huggingface_hub version: 0.25.1

- PyArrow version: 17.0.0

- Pandas version: 2.2.3

- fsspec version: 2024.6.1

Training on 4090 | {

"avatar_url": "https://avatars.githubusercontent.com/u/90132896?v=4",

"events_url": "https://api.github.com/users/Night1099/events{/privacy}",

"followers_url": "https://api.github.com/users/Night1099/followers",

"following_url": "https://api.github.com/users/Night1099/following{/other_user}",

"gists_url": "https://api.github.com/users/Night1099/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Night1099",

"id": 90132896,

"login": "Night1099",

"node_id": "MDQ6VXNlcjkwMTMyODk2",

"organizations_url": "https://api.github.com/users/Night1099/orgs",

"received_events_url": "https://api.github.com/users/Night1099/received_events",

"repos_url": "https://api.github.com/users/Night1099/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Night1099/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Night1099/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Night1099",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7168/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7168/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/7167 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7167/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7167/comments | https://api.github.com/repos/huggingface/datasets/issues/7167/events | https://github.com/huggingface/datasets/issues/7167 | 2,546,708,014 | I_kwDODunzps6Xy64u | 7,167 | Error Mapping on sd3, sdxl and upcoming flux controlnet training scripts in diffusers | {

"avatar_url": "https://avatars.githubusercontent.com/u/90132896?v=4",

"events_url": "https://api.github.com/users/Night1099/events{/privacy}",

"followers_url": "https://api.github.com/users/Night1099/followers",

"following_url": "https://api.github.com/users/Night1099/following{/other_user}",

"gists_url": "https://api.github.com/users/Night1099/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Night1099",

"id": 90132896,

"login": "Night1099",

"node_id": "MDQ6VXNlcjkwMTMyODk2",

"organizations_url": "https://api.github.com/users/Night1099/orgs",

"received_events_url": "https://api.github.com/users/Night1099/received_events",

"repos_url": "https://api.github.com/users/Night1099/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Night1099/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Night1099/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Night1099",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"this is happening on large datasets, if anyone happens upon this i was able to fix by changing\r\n\r\n```\r\ntrain_dataset = train_dataset.map(compute_embeddings_fn, batched=True, new_fingerprint=new_fingerprint)\r\n```\r\n\r\nto\r\n\r\n```\r\ntrain_dataset = train_dataset.map(compute_embeddings_fn, batched=True, batch_size=16, new_fingerprint=new_fingerprint)\r\n```"

] | "2024-09-25T01:39:51Z" | "2024-09-30T05:28:15Z" | "2024-09-30T05:28:04Z" | NONE | null | ### Describe the bug

```

Map: 6%|██████ | 8000/138120 [19:27<5:16:36, 6.85 examples/s]

Traceback (most recent call last):

File "/workspace/diffusers/examples/controlnet/train_controlnet_sd3.py", line 1416, in <module>

main(args)

File "/workspace/diffusers/examples/controlnet/train_controlnet_sd3.py", line 1132, in main

train_dataset = train_dataset.map(compute_embeddings_fn, batched=True, new_fingerprint=new_fingerprint)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 560, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 3035, in map

for rank, done, content in Dataset._map_single(**dataset_kwargs):

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_dataset.py", line 3461, in _map_single

writer.write_batch(batch)

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_writer.py", line 567, in write_batch

self.write_table(pa_table, writer_batch_size)

File "/usr/local/lib/python3.11/dist-packages/datasets/arrow_writer.py", line 579, in write_table

pa_table = pa_table.combine_chunks()

^^^^^^^^^^^^^^^^^^^^^^^^^

File "pyarrow/table.pxi", line 4387, in pyarrow.lib.Table.combine_chunks

File "pyarrow/error.pxi", line 155, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 92, in pyarrow.lib.check_status

pyarrow.lib.ArrowInvalid: offset overflow while concatenating arrays

Traceback (most recent call last):

File "/usr/local/bin/accelerate", line 8, in <module>

sys.exit(main())

^^^^^^

File "/usr/local/lib/python3.11/dist-packages/accelerate/commands/accelerate_cli.py", line 48, in main

args.func(args)

File "/usr/local/lib/python3.11/dist-packages/accelerate/commands/launch.py", line 1174, in launch_command

simple_launcher(args)

File "/usr/local/lib/python3.11/dist-packages/accelerate/commands/launch.py", line 769, in simple_launcher

```

### Steps to reproduce the bug

The same dataset trains without problems using the SD 1.5 ControlNet training script.

### Expected behavior

The script should not randomly fail with the error above.
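
A rough sketch of why a smaller `batch_size` may avoid the `offset overflow` error, assuming the mapped column is stored with 32-bit offsets (as Arrow's default list, string, and binary types are) and that the overflow happens when one batch's values are concatenated. The helper names and the per-example value count below are hypothetical and are not part of `datasets` or `pyarrow`.

```python
# Hypothetical helpers for illustration only; not a `datasets`/`pyarrow` API.

# Largest offset a 32-bit Arrow list/string/binary array can address.
INT32_MAX = 2**31 - 1


def fits_in_int32_offsets(values_per_example: int, batch_size: int) -> bool:
    """Return True if one batch's flattened values stay within 32-bit offsets."""
    return values_per_example * batch_size <= INT32_MAX


def largest_safe_batch_size(values_per_example: int) -> int:
    """Largest batch size whose flattened values still fit (at least 1)."""
    return max(1, INT32_MAX // values_per_example)


# Assumed size: 4,000,000 flattened values per example (made-up number).
values_per_example = 4_000_000

# `Dataset.map` uses batch_size=1000 by default when batched=True.
print(fits_in_int32_offsets(values_per_example, 1000))
print(fits_in_int32_offsets(values_per_example, 16))
print(largest_safe_batch_size(values_per_example))
```

If the assumption holds, any `batch_size` at or below the computed bound should keep a single batch under the limit; the actual per-example size depends on what `compute_embeddings_fn` returns.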

### Environment info

- `datasets` version: 3.0.0

- Platform: Linux-6.5.0-44-generic-x86_64-with-glibc2.35

- Python version: 3.11.9

- `huggingface_hub` version: 0.25.1

- PyArrow version: 17.0.0

- Pandas version: 2.2.3

- `fsspec` version: 2024.6.1

Training on an A100 | {

"avatar_url": "https://avatars.githubusercontent.com/u/90132896?v=4",

"events_url": "https://api.github.com/users/Night1099/events{/privacy}",

"followers_url": "https://api.github.com/users/Night1099/followers",

"following_url": "https://api.github.com/users/Night1099/following{/other_user}",

"gists_url": "https://api.github.com/users/Night1099/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Night1099",

"id": 90132896,

"login": "Night1099",

"node_id": "MDQ6VXNlcjkwMTMyODk2",

"organizations_url": "https://api.github.com/users/Night1099/orgs",

"received_events_url": "https://api.github.com/users/Night1099/received_events",

"repos_url": "https://api.github.com/users/Night1099/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Night1099/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Night1099/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Night1099",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7167/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7167/timeline | null | completed | null | null | false |

https://api.github.com/repos/huggingface/datasets/issues/7166 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7166/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7166/comments | https://api.github.com/repos/huggingface/datasets/issues/7166/events | https://github.com/huggingface/datasets/pull/7166 | 2,545,608,736 | PR_kwDODunzps58h8pd | 7,166 | fix docstring code example for distributed shuffle | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7166). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | "2024-09-24T14:39:54Z" | "2024-09-24T14:42:41Z" | "2024-09-24T14:40:14Z" | MEMBER | null | close https://github.com/huggingface/datasets/issues/7163 | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7166/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7166/timeline | null | null | 0 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7166.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7166",

"merged_at": "2024-09-24T14:40:14Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7166.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7166"

} | true |

https://api.github.com/repos/huggingface/datasets/issues/7165 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7165/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7165/comments | https://api.github.com/repos/huggingface/datasets/issues/7165/events | https://github.com/huggingface/datasets/pull/7165 | 2,544,972,541 | PR_kwDODunzps58fva1 | 7,165 | fix increase_load_count | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7165). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update.",

"I tested a few `load_dataset` calls and they now show up in the download stats",

"Thanks for noticing and fixing this."

] | "2024-09-24T10:14:40Z" | "2024-09-24T17:31:07Z" | "2024-09-24T13:48:00Z" | MEMBER | null | It has been failing since 3.0, so download counts were not being updated on HF or in our dashboard | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",