---
license: apache-2.0
datasets:
- dongsheng/DTA-Tool
base_model:
- meta-llama/Llama-2-7b
---

Model Description

DTA_llama2_7b is the model introduced in the paper "Divide-Then-Aggregate: An Efficient Tool Learning Method via Parallel Tool Invocation". It is a large language model that can invoke tools, including invoking multiple tools in parallel within a single round. Its tool format is similar to OpenAI's function calling.
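To make the idea of parallel invocation within one round concrete, here is a minimal sketch of how a caller might execute several function-call-style requests concurrently and collect their results. It is an illustration under assumptions, not the code from the paper or the repository: the tools `get_weather` and `get_time`, the `name`/`arguments` field layout, and the helpers `run_tool_call` and `run_round` are all hypothetical.

```python
import json
from concurrent.futures import ThreadPoolExecutor


# Hypothetical tools, for illustration only (not part of DTA-Tool or ToolBench).
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}


def get_time(timezone: str) -> dict:
    return {"timezone": timezone, "time": "12:00"}


TOOLS = {"get_weather": get_weather, "get_time": get_time}


def run_tool_call(call: dict) -> dict:
    """Execute one function-call-style request and wrap its result."""
    func = TOOLS[call["name"]]
    args = json.loads(call["arguments"])  # arguments are assumed to be a JSON string
    return {"name": call["name"], "content": func(**args)}


def run_round(calls: list[dict]) -> list[dict]:
    """Run all calls of one round concurrently; results keep the input order."""
    if not calls:
        return []
    with ThreadPoolExecutor(max_workers=len(calls)) as pool:
        return list(pool.map(run_tool_call, calls))


# One round containing two independent tool calls (assumed format).
round_calls = [
    {"name": "get_weather", "arguments": json.dumps({"city": "Paris"})},
    {"name": "get_time", "arguments": json.dumps({"timezone": "UTC"})},
]
results = run_round(round_calls)
```

In this sketch the aggregated `results` list would be passed back to the model as the observations for that round. Threads are used because real tool calls are usually I/O-bound API requests; the actual dispatch logic used with DTA-Llama may differ.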

Uses

The related code can be found in our GitHub repository.

Training Data

The training data comes from our specially constructed DTA-Tool, which is derived from ToolBench.

Evaluation

Testing Data

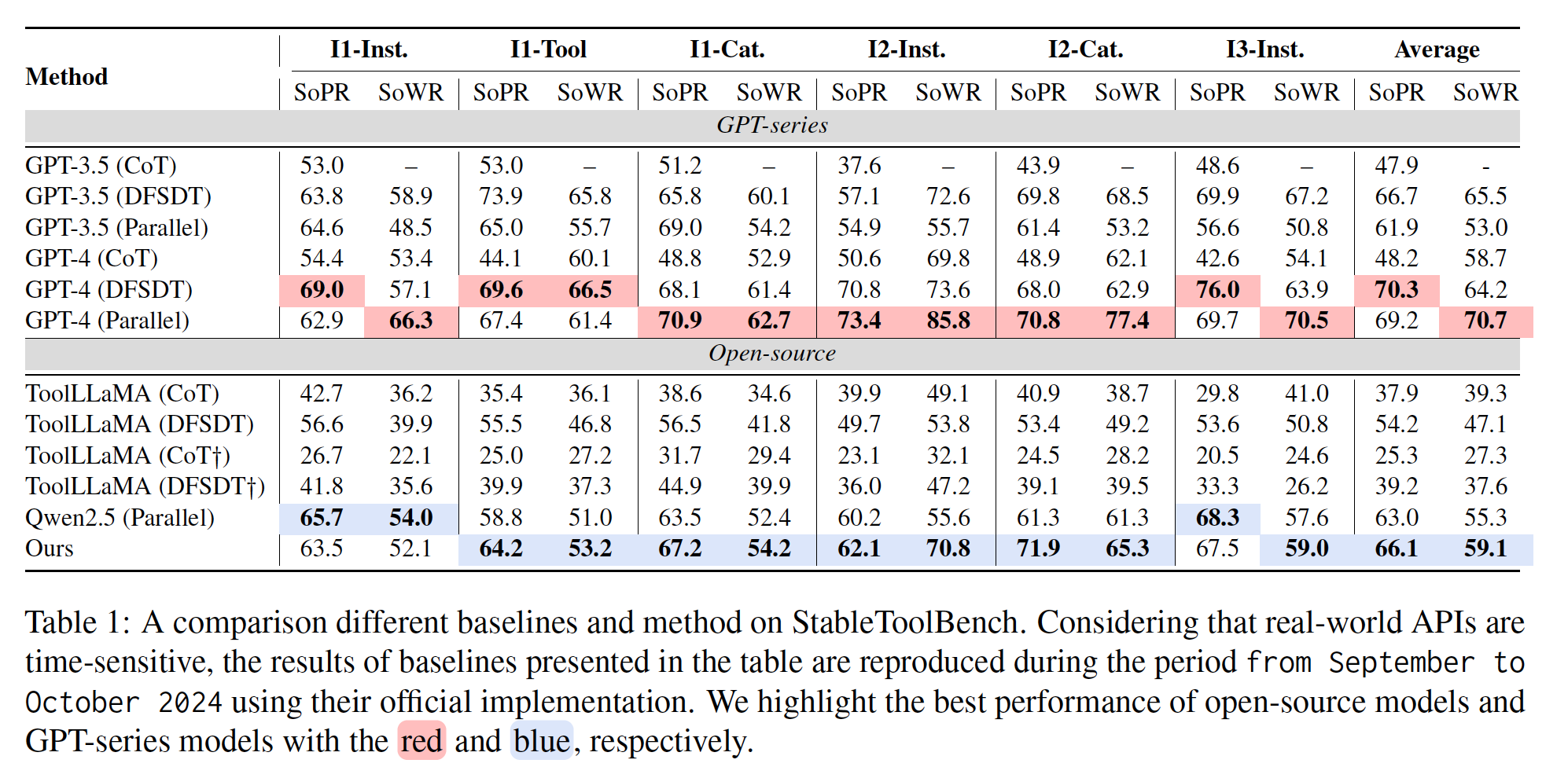

We evaluated the performance of DTA-Llama on StableToolBench.

Results

Citation

@misc{zhu2025dividethenaggregateefficienttoollearning,
      title={Divide-Then-Aggregate: An Efficient Tool Learning Method via Parallel Tool Invocation},
      author={Dongsheng Zhu and Weixian Shi and Zhengliang Shi and Zhaochun Ren and Shuaiqiang Wang and Lingyong Yan and Dawei Yin},
      year={2025},
      eprint={2501.12432},
      archivePrefix={arXiv},
      primaryClass={cs.LG},
      url={https://arxiv.org/abs/2501.12432},
}