🎩 Magicoder: Source Code Is All You Need

Refer to our GitHub repo ise-uiuc/magicoder for an up-to-date introduction to the Magicoder family!

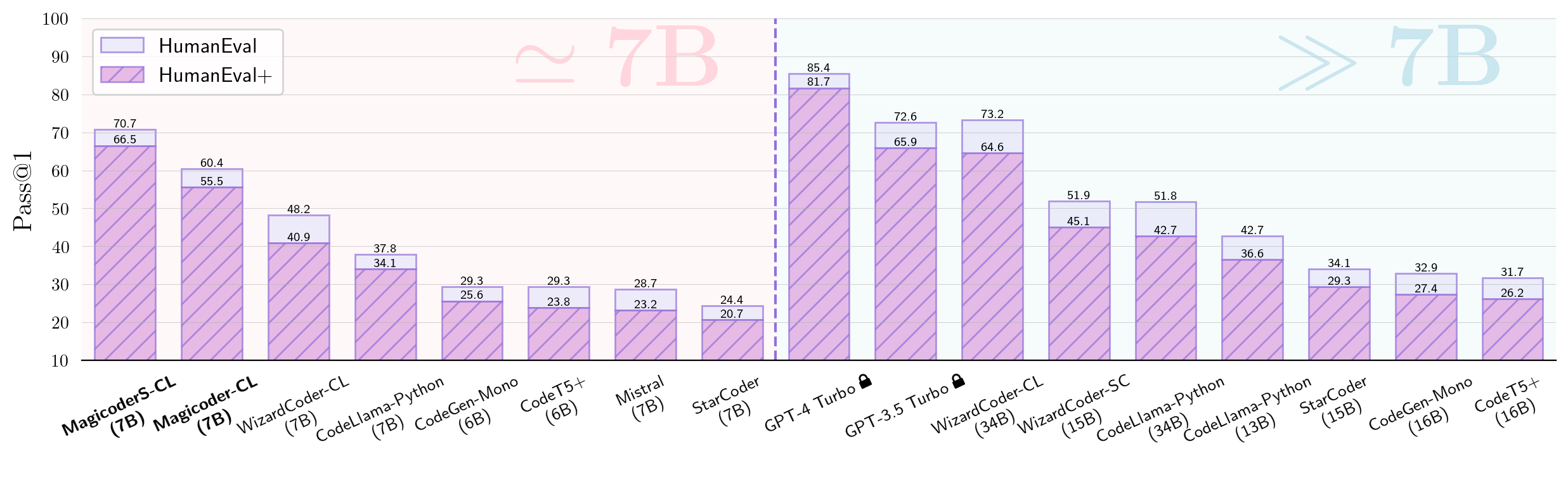

- 🎩Magicoder is a model family empowered by 🪄OSS-Instruct, a novel approach to enlightening LLMs with open-source code snippets for generating low-bias and high-quality instruction data for code.

- 🪄OSS-Instruct mitigates the inherent bias of LLM-synthesized instruction data by grounding the LLM in a wealth of open-source references, producing more diverse, realistic, and controllable data.
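The seeding idea behind OSS-Instruct can be illustrated with a minimal sketch: take a short run of consecutive lines from an open-source file and embed it in a prompt that asks a teacher LLM to write a new coding problem and solution inspired by it. The 1 to 15 line window, the helper names `sample_seed_snippet` and `build_oss_instruct_prompt`, and the prompt wording are hypothetical stand-ins, not the exact procedure or prompt used to build the Magicoder data; the call to the teacher model itself is omitted.

```python
import random

# Hypothetical prompt wording; the actual OSS-Instruct prompt differs.
PROMPT_TEMPLATE = (
    "Using the code snippet below only as inspiration, write a "
    "self-contained programming problem and a correct solution to it.\n\n"
    "Code snippet:\n{snippet}\n"
)


def sample_seed_snippet(source: str, rng: random.Random,
                        min_lines: int = 1, max_lines: int = 15) -> str:
    """Return a run of consecutive lines from `source` (window size is assumed)."""
    lines = source.splitlines()
    if not lines:
        raise ValueError("source file is empty")
    # Pick a window length, capped by the number of lines in the file.
    length = rng.randint(min_lines, min(max_lines, len(lines)))
    # Pick a start index so the whole window stays inside the file.
    start = rng.randint(0, len(lines) - length)
    return "\n".join(lines[start:start + length])


def build_oss_instruct_prompt(snippet: str) -> str:
    """Wrap a seed snippet in the hypothetical problem-generation prompt."""
    return PROMPT_TEMPLATE.format(snippet=snippet)


if __name__ == "__main__":
    source_file = "def add(a, b):\n    return a + b\n\nprint(add(1, 2))\n"
    rng = random.Random(0)  # fixed seed for a reproducible sample
    snippet = sample_seed_snippet(source_file, rng)
    print(build_oss_instruct_prompt(snippet))
```

Passing an explicit `random.Random` instance keeps sampling reproducible, which is convenient when regenerating a dataset.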

Model Details

Model Description

- Developed by: Yuxiang Wei, Zhe Wang, Jiawei Liu, Yifeng Ding, Lingming Zhang

- License: Llama 2

- Finetuned from model: CodeLlama-7b-Python-hf

Model Sources

- Repository: https://github.com/ise-uiuc/magicoder

- Paper: https://arxiv.org/abs/2312.02120

- Demo (powered by Gradio): https://github.com/ise-uiuc/magicoder/tree/main/demo

Training Data

- Magicoder-OSS-Instruct-75K: generated through OSS-Instruct using gpt-3.5-turbo-1106 and used to train both the Magicoder and Magicoder-S series.

- Magicoder-Evol-Instruct-110K: decontaminated and redistributed from theblackcat102/evol-codealpaca-v1, used to further finetune the Magicoder series and obtain the Magicoder-S models.
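Decontamination means removing training samples that overlap with evaluation benchmarks. One common approach, shown here only as a generic illustration and not necessarily the procedure behind Magicoder-Evol-Instruct-110K, is to drop any sample that shares a long word n-gram with a benchmark problem. The helper names, the lowercase whitespace tokenization, and the default n-gram length of 10 are assumptions.

```python
def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of word n-grams in `text` (lowercased, whitespace-split)."""
    words = text.lower().split()
    # Texts shorter than n words yield an empty set.
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def decontaminate(samples: list[str], benchmark: list[str], n: int = 10) -> list[str]:
    """Keep only samples that share no word n-gram with any benchmark text."""
    banned: set[tuple[str, ...]] = set()
    for problem in benchmark:
        banned |= word_ngrams(problem, n)
    return [s for s in samples if word_ngrams(s, n).isdisjoint(banned)]


if __name__ == "__main__":
    benchmark = ["write a function that returns the sum of two integers a and b"]
    samples = [
        "Please write a function that returns the sum of two integers a and b.",
        "Implement binary search over a sorted list.",
    ]
    print(decontaminate(samples, benchmark, n=5))
    # ['Implement binary search over a sorted list.']
```

Smaller `n` catches more paraphrased overlap at the cost of more false positives; real pipelines often also normalize punctuation before matching.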

Uses

Direct Use

Magicoders are designed for, and best suited to, coding tasks.

Out-of-Scope Use

Magicoders may not work well in non-coding tasks.

Bias, Risks, and Limitations

Magicoders may sometimes make errors, produce misleading content, or struggle with tasks that are not related to coding.

Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model.

How to Get Started with the Model

Use the code below to get started with the model. Make sure you have installed the transformers library, along with accelerate, which `device_map="auto"` relies on.

from transformers import pipeline
import torch

MAGICODER_PROMPT = """You are an exceptionally intelligent coding assistant that consistently delivers accurate and reliable responses to user instructions.

@@ Instruction
{instruction}

@@ Response
"""

# Replace this string with your own coding instruction.
instruction = "<Your code instruction here>"

prompt = MAGICODER_PROMPT.format(instruction=instruction)

generator = pipeline(
    model="ise-uiuc/Magicoder-S-CL-7B",
    task="text-generation",
    torch_dtype=torch.bfloat16,
    device_map="auto",
)

# do_sample=False selects greedy decoding, the deterministic equivalent of temperature 0.
result = generator(prompt, max_length=1024, num_return_sequences=1, do_sample=False)

print(result[0]["generated_text"])
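By default the transformers text-generation pipeline returns the prompt followed by the completion, so the printed text begins with the whole prompt. A minimal sketch of stripping it off is below; `extract_response` is a hypothetical helper, and its fallback assumes the answer follows the first `@@ Response` marker. Alternatively, passing `return_full_text=False` in the `generator(...)` call asks the pipeline to return only the newly generated text.

```python
RESPONSE_MARKER = "@@ Response"


def extract_response(prompt: str, generated_text: str) -> str:
    """Return only the model's answer from a full pipeline output (hypothetical helper)."""
    if generated_text.startswith(prompt):
        # Default pipeline output: the prompt text followed by the completion.
        return generated_text[len(prompt):].strip()
    # Fallback: take whatever follows the first response marker, if present.
    _, sep, tail = generated_text.partition(RESPONSE_MARKER)
    return tail.strip() if sep else generated_text.strip()


if __name__ == "__main__":
    demo_prompt = "Intro\n\n@@ Instruction\nAdd two numbers\n\n@@ Response\n"
    demo_output = demo_prompt + "def add(a, b):\n    return a + b\n"
    print(extract_response(demo_prompt, demo_output))
```

With the snippet above, `extract_response(prompt, result[0]["generated_text"])` would print just the model's answer.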

Technical Details

Refer to our GitHub repo: ise-uiuc/magicoder.

Citation

@misc{magicoder,
    title={Magicoder: Source Code Is All You Need},
    author={Yuxiang Wei and Zhe Wang and Jiawei Liu and Yifeng Ding and Lingming Zhang},
    year={2023},
    eprint={2312.02120},
    archivePrefix={arXiv},
    primaryClass={cs.CL}
}

Acknowledgements

- WizardCoder: Evol-Instruct

- DeepSeek-Coder: Base model for Magicoder-DS

- CodeLlama: Base model for Magicoder-CL

- StarCoder: Data decontamination

Important Note

Magicoder models are trained on the synthetic data generated by OpenAI models. Please pay attention to OpenAI's terms of use when using the models and the datasets. Magicoders will not compete with OpenAI's commercial products.
