mwpt5/t5-mawps-pen

0.2B

•

Updated

•

1

•

1

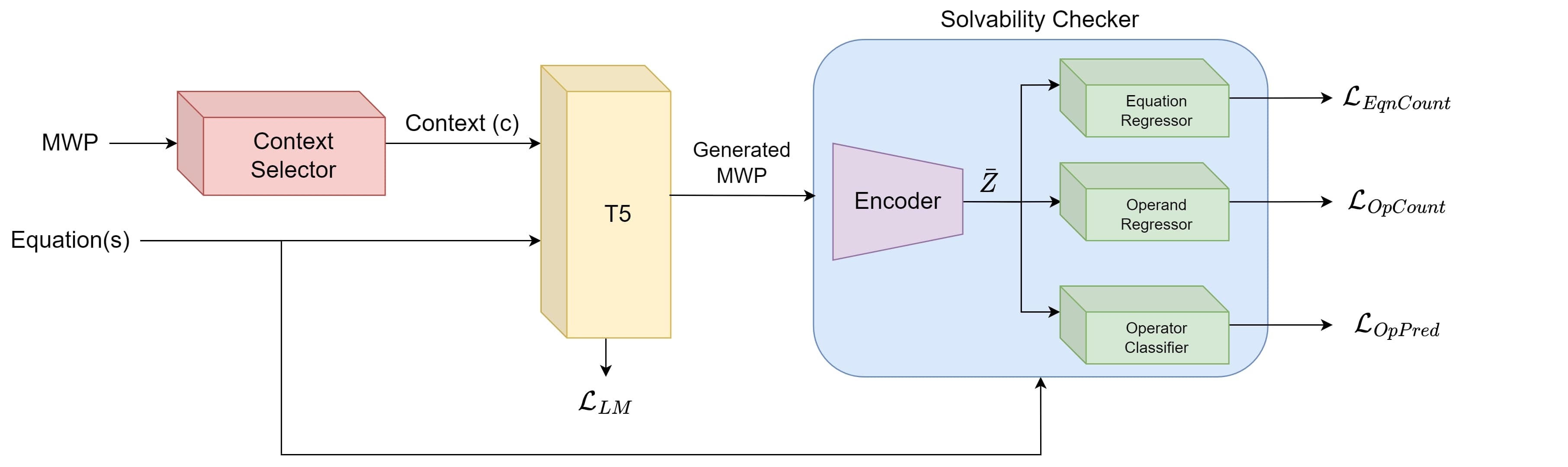

MWP generation, language modelling, representation learning

This repository contains the code for the paper "Fine-tuning with Numeracy for Math Word Problem Generation", published at IEEE T4E 2023.

You can access our source code here. If you would like to cite our work, please use the following BibTeX entry:

MWP-T5 achieves state-of-the-art performance on the MAWPS and PEN datasets. The tables below report BLEU-4, ROUGE-L, and METEOR scores for MWP-T5 and other models on these datasets. For more information, please refer to the paper.

| Model Name | BLEU-4 | ROUGE-L | METEOR |
|---|---|---|---|
| seq2seq-rnn | 0.153 | 0.362 | 0.175 |
| seq2seq-rnn + GLoVe | 0.592 | 0.705 | 0.412 |
| seq2seq-tf | 0.554 | 0.663 | 0.387 |
| GPT | 0.368 | 0.538 | 0.294 |
| GPT-pre | 0.504 | 0.664 | 0.391 |
| GPT2-mwp2eq | 0.596 | 0.715 | 0.427 |
| MWP-T5 | 0.885 | 0.930 | 0.930 |

| Model Name | BLEU-4 | ROUGE-L | METEOR |
|---|---|---|---|
| MWP-T5 | 0.669 | 0.768 | 0.772 |
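For readers unfamiliar with the ROUGE-L column, the following is a minimal sketch of how a sentence-level ROUGE-L score can be computed from the longest common subsequence (LCS) of a reference and a generated problem. It is not the evaluation script used in the paper: the function names `lcs_length` and `rouge_l_f1`, the lowercase whitespace tokenization, and the use of the balanced F1 form are all assumptions made for illustration, so the numbers it produces may differ from those in the tables.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                # Tokens match: extend the LCS found without them.
                curr.append(prev[j - 1] + 1)
            else:
                # No match: carry over the best of the two neighbours.
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """Sentence-level ROUGE-L F1 (assumption: lowercase whitespace tokens)."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of generated tokens in the LCS
    recall = lcs / len(ref)      # share of reference tokens in the LCS
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical example pair, not taken from MAWPS or PEN.
    reference = "Tom has 5 apples and buys 3 more . How many apples does he have ?"
    generated = "Tom has 5 apples and gets 3 more . How many apples does Tom have ?"
    print(round(rouge_l_f1(reference, generated), 3))
```

A corpus-level score would typically average this value over all reference and generation pairs; established packages also apply stemming and other normalization that this sketch omits.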

Please feel free to email us to report issues, make suggestions, or ask any further questions.

Contact: Yashi Chawla

You can also contact the other maintainers listed below.