SetFit with sentence-transformers/all-mpnet-base-v2

This is a SetFit model for text classification. It uses sentence-transformers/all-mpnet-base-v2 as the Sentence Transformer embedding model and a LogisticRegression instance as the classification head.

The model has been trained using an efficient few-shot learning technique that involves:

- Fine-tuning a Sentence Transformer with contrastive learning.

- Training a classification head with features from the fine-tuned Sentence Transformer.
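The first step above relies on turning a small labeled set into many sentence pairs. The following is a minimal sketch of that idea, not SetFit's actual sampler: the function name `generate_pairs`, the toy texts, and the choice of one positive and one negative pair per sample per iteration are assumptions made for illustration.

```python
import random


def generate_pairs(texts, labels, num_iterations, seed=42):
    """Build (text_a, text_b, target) triples for contrastive fine-tuning.

    target is 1.0 when both texts share a label and 0.0 otherwise, which is
    the kind of target a cosine-similarity loss can be trained against.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_iterations):
        for i, (text, label) in enumerate(zip(texts, labels)):
            # Candidates with the same label (excluding the sample itself).
            same = [t for j, (t, l) in enumerate(zip(texts, labels)) if l == label and j != i]
            # Candidates with any other label.
            diff = [t for t, l in zip(texts, labels) if l != label]
            if same:
                pairs.append((text, rng.choice(same), 1.0))
            if diff:
                pairs.append((text, rng.choice(diff), 0.0))
    return pairs


# Hypothetical toy data using the three labels of this model.
texts = [
    "App crashes on start",
    "Segfault in resize()",
    "Add dark mode",
    "Support WebP export",
    "How do I build on Windows?",
    "Which Python versions work?",
]
labels = ["bug", "bug", "feature", "feature", "question", "question"]

pairs = generate_pairs(texts, labels, num_iterations=2)
print(len(pairs))  # 6 samples * 2 iterations * 2 pairs each
```

The embedding model is then fine-tuned on such pairs, and the classification head is fit on embeddings of the original labeled texts.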

Model Details

Model Description

- Model Type: SetFit

- Sentence Transformer body: sentence-transformers/all-mpnet-base-v2

- Classification head: a LogisticRegression instance

- Maximum Sequence Length: 384 tokens

- Number of Classes: 3

Model Sources

- Repository: SetFit on GitHub

- Paper: Efficient Few-Shot Learning Without Prompts

- Blogpost: SetFit: Efficient Few-Shot Learning Without Prompts

Model Labels

| Label | Examples |
|---|---|
| feature | |
| bug | |
| question | |

Uses

Direct Use for Inference

First install the SetFit library:

pip install setfit

Then you can load this model and run inference.

from setfit import SetFitModel

# Download from the 🤗 Hub

model = SetFitModel.from_pretrained("setfit_model_id")

# Run inference

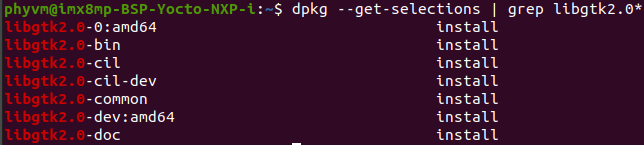

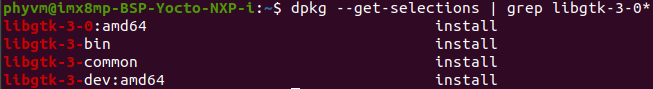

# The issue text spans several lines, so it is passed as a triple-quoted string.
preds = model("""OpenCV build - cmake don't recognize GTK2 or GTK3 ##### System information (version)

- OpenCV => 4.7.0

- Operating System / Platform => Ubuntu 20.04

- Compiler => g++ (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

- C++ Standard: 11

##### Detailed description

I am trying to create OpenCV with the GTK GUI. I have `GTK2` and `GTK3` installed on the system, but neither version is recognised by cmake.

The output after executing cmake remains

**GUI: NONE**

**GTK+:NO**

I execute the cmake command as follows:

`cmake -DPYTHON_DEFAULT_EXECUTABLE=$(which python3) -DWITH_GTK=ON ../opencv`

or

`cmake -DPYTHON_DEFAULT_EXECUTABLE=$(which python3) -DWITH_GTK=ON -DWITH_GTK_2_X=ON ../opencv`

##### Steps to reproduce

git clone https://github.com/opencv/opencv.git

mkdir -p build && cd build

cmake ../opencv (default, custom look above)

Thank you for your help

""")

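To classify several issues at once, a small wrapper around the model's `predict` method can help. This is a sketch under assumptions: the helper names `format_issue`, `classify_issues`, and `load_model` are hypothetical, and joining title and body with a single space is inferred from the example input above rather than documented.

```python
def format_issue(title, body):
    """Join an issue title and body into one string, mirroring the example input."""
    return f"{title.strip()} {body.strip()}"


def classify_issues(model, issues):
    """Return one prediction per (title, body) pair.

    `model` is any object with a `predict(list_of_str)` method,
    for example a loaded SetFitModel.
    """
    texts = [format_issue(title, body) for title, body in issues]
    return list(model.predict(texts))


def load_model(model_id):
    """Load a SetFit model from the Hub (requires `pip install setfit`)."""
    # Imported lazily so the helpers above work without setfit installed.
    from setfit import SetFitModel

    return SetFitModel.from_pretrained(model_id)
```

With a loaded model, `classify_issues(load_model("setfit_model_id"), [(title, body), ...])` would return one prediction per issue.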
Training Details

Training Set Metrics

| Training set | Min | Mean | Max |
|---|---|---|---|
| Word count | 2 | 393.0933 | 13648 |

| Label | Training Sample Count |
|---|---|
| bug | 200 |
| feature | 200 |
| question | 200 |
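Summary statistics of the kind reported above can be reproduced for any list of texts. The sketch below assumes that words are counted by splitting on whitespace, which may differ from how the original numbers were computed; `word_count_stats` is a hypothetical helper name.

```python
from statistics import mean, median


def word_count_stats(texts):
    """Return min, median, mean, and max whitespace-separated word counts."""
    counts = [len(text.split()) for text in texts]
    return {
        "min": min(counts),
        "median": median(counts),
        "mean": mean(counts),
        "max": max(counts),
    }


# Hypothetical toy inputs with 2, 4, and 6 words.
stats = word_count_stats(["a b", "a b c d", "a b c d e f"])
print(stats)
```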

Training Hyperparameters

- batch_size: (16, 2)

- num_epochs: (1, 1)

- max_steps: -1

- sampling_strategy: oversampling

- num_iterations: 20

- body_learning_rate: (2e-05, 1e-05)

- head_learning_rate: 0.01

- loss: CosineSimilarityLoss

- distance_metric: cosine_distance

- margin: 0.25

- end_to_end: False

- use_amp: False

- warmup_proportion: 0.1

- seed: 42

- eval_max_steps: -1

- load_best_model_at_end: False
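The hyperparameters above correspond to fields of SetFit's `TrainingArguments`. The following sketch shows one way to express them in code. The names `HYPERPARAMETERS` and `build_training_arguments` are hypothetical, and `loss` and `distance_metric` are left out on the assumption that the listed values (CosineSimilarityLoss and cosine_distance) are the library defaults.

```python
# Values copied from the hyperparameter list above.
# Tuples hold (embedding fine-tuning phase, classifier training phase) values.
HYPERPARAMETERS = {
    "batch_size": (16, 2),
    "num_epochs": (1, 1),
    "max_steps": -1,
    "sampling_strategy": "oversampling",
    "num_iterations": 20,
    "body_learning_rate": (2e-05, 1e-05),
    "head_learning_rate": 0.01,
    "margin": 0.25,
    "end_to_end": False,
    "use_amp": False,
    "warmup_proportion": 0.1,
    "seed": 42,
    "eval_max_steps": -1,
    "load_best_model_at_end": False,
}


def build_training_arguments():
    """Create a setfit.TrainingArguments object from the values above."""
    # Imported lazily so the dictionary can be inspected without setfit installed.
    from setfit import TrainingArguments

    return TrainingArguments(**HYPERPARAMETERS)
```

The resulting object can be passed to a SetFit `Trainer` together with a model and a training dataset.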

Training Results

| Epoch | Step | Training Loss | Validation Loss |
|---|---|---|---|
| 0.0007 | 1 | 0.2963 | - |
| 0.0067 | 10 | 0.2694 | - |
| 0.0133 | 20 | 0.2443 | - |
| 0.02 | 30 | 0.2872 | - |
| 0.0267 | 40 | 0.2828 | - |
| 0.0333 | 50 | 0.2279 | - |
| 0.04 | 60 | 0.1852 | - |
| 0.0467 | 70 | 0.1983 | - |
| 0.0533 | 80 | 0.224 | - |
| 0.06 | 90 | 0.2315 | - |
| 0.0667 | 100 | 0.2698 | - |
| 0.0733 | 110 | 0.1178 | - |
| 0.08 | 120 | 0.1216 | - |
| 0.0867 | 130 | 0.1065 | - |
| 0.0933 | 140 | 0.1519 | - |
| 0.1 | 150 | 0.073 | - |
| 0.1067 | 160 | 0.0858 | - |
| 0.1133 | 170 | 0.1697 | - |
| 0.12 | 180 | 0.1062 | - |
| 0.1267 | 190 | 0.0546 | - |
| 0.1333 | 200 | 0.139 | - |
| 0.14 | 210 | 0.0513 | - |
| 0.1467 | 220 | 0.1168 | - |
| 0.1533 | 230 | 0.0455 | - |
| 0.16 | 240 | 0.0521 | - |
| 0.1667 | 250 | 0.0307 | - |
| 0.1733 | 260 | 0.0618 | - |
| 0.18 | 270 | 0.0964 | - |
| 0.1867 | 280 | 0.0707 | - |
| 0.1933 | 290 | 0.0524 | - |
| 0.2 | 300 | 0.0358 | - |
| 0.2067 | 310 | 0.0238 | - |
| 0.2133 | 320 | 0.0759 | - |
| 0.22 | 330 | 0.0197 | - |
| 0.2267 | 340 | 0.0053 | - |
| 0.2333 | 350 | 0.0035 | - |
| 0.24 | 360 | 0.0036 | - |
| 0.2467 | 370 | 0.0079 | - |
| 0.2533 | 380 | 0.0033 | - |
| 0.26 | 390 | 0.0021 | - |
| 0.2667 | 400 | 0.0026 | - |
| 0.2733 | 410 | 0.0018 | - |
| 0.28 | 420 | 0.0014 | - |
| 0.2867 | 430 | 0.0019 | - |
| 0.2933 | 440 | 0.0027 | - |
| 0.3 | 450 | 0.0016 | - |
| 0.3067 | 460 | 0.0027 | - |
| 0.3133 | 470 | 0.0095 | - |
| 0.32 | 480 | 0.0005 | - |
| 0.3267 | 490 | 0.0006 | - |
| 0.3333 | 500 | 0.0006 | - |
| 0.34 | 510 | 0.0634 | - |
| 0.3467 | 520 | 0.0025 | - |
| 0.3533 | 530 | 0.0013 | - |
| 0.36 | 540 | 0.0007 | - |
| 0.3667 | 550 | 0.0007 | - |
| 0.3733 | 560 | 0.0003 | - |
| 0.38 | 570 | 0.0006 | - |
| 0.3867 | 580 | 0.0007 | - |
| 0.3933 | 590 | 0.0004 | - |
| 0.4 | 600 | 0.0006 | - |
| 0.4067 | 610 | 0.0007 | - |
| 0.4133 | 620 | 0.0005 | - |
| 0.42 | 630 | 0.0004 | - |
| 0.4267 | 640 | 0.0005 | - |
| 0.4333 | 650 | 0.0013 | - |
| 0.44 | 660 | 0.0005 | - |
| 0.4467 | 670 | 0.0007 | - |
| 0.4533 | 680 | 0.0008 | - |
| 0.46 | 690 | 0.0018 | - |
| 0.4667 | 700 | 0.0007 | - |
| 0.4733 | 710 | 0.0008 | - |
| 0.48 | 720 | 0.0007 | - |
| 0.4867 | 730 | 0.0007 | - |
| 0.4933 | 740 | 0.0002 | - |
| 0.5 | 750 | 0.0002 | - |
| 0.5067 | 760 | 0.0002 | - |
| 0.5133 | 770 | 0.0007 | - |
| 0.52 | 780 | 0.0004 | - |
| 0.5267 | 790 | 0.0003 | - |
| 0.5333 | 800 | 0.0007 | - |
| 0.54 | 810 | 0.0004 | - |
| 0.5467 | 820 | 0.0003 | - |
| 0.5533 | 830 | 0.0002 | - |
| 0.56 | 840 | 0.001 | - |
| 0.5667 | 850 | 0.008 | - |
| 0.5733 | 860 | 0.0003 | - |
| 0.58 | 870 | 0.0002 | - |
| 0.5867 | 880 | 0.0011 | - |
| 0.5933 | 890 | 0.0005 | - |
| 0.6 | 900 | 0.0004 | - |
| 0.6067 | 910 | 0.0003 | - |
| 0.6133 | 920 | 0.0002 | - |
| 0.62 | 930 | 0.0002 | - |
| 0.6267 | 940 | 0.0002 | - |
| 0.6333 | 950 | 0.0001 | - |
| 0.64 | 960 | 0.0002 | - |
| 0.6467 | 970 | 0.0003 | - |
| 0.6533 | 980 | 0.0002 | - |
| 0.66 | 990 | 0.0005 | - |
| 0.6667 | 1000 | 0.0003 | - |
| 0.6733 | 1010 | 0.0002 | - |
| 0.68 | 1020 | 0.0003 | - |
| 0.6867 | 1030 | 0.0008 | - |
| 0.6933 | 1040 | 0.0003 | - |
| 0.7 | 1050 | 0.0005 | - |
| 0.7067 | 1060 | 0.0012 | - |
| 0.7133 | 1070 | 0.0001 | - |
| 0.72 | 1080 | 0.0003 | - |
| 0.7267 | 1090 | 0.0002 | - |
| 0.7333 | 1100 | 0.0001 | - |
| 0.74 | 1110 | 0.0003 | - |
| 0.7467 | 1120 | 0.0002 | - |
| 0.7533 | 1130 | 0.0003 | - |
| 0.76 | 1140 | 0.0596 | - |
| 0.7667 | 1150 | 0.0012 | - |
| 0.7733 | 1160 | 0.0004 | - |
| 0.78 | 1170 | 0.0003 | - |
| 0.7867 | 1180 | 0.0003 | - |
| 0.7933 | 1190 | 0.0002 | - |
| 0.8 | 1200 | 0.0015 | - |
| 0.8067 | 1210 | 0.0002 | - |
| 0.8133 | 1220 | 0.0001 | - |
| 0.82 | 1230 | 0.0002 | - |
| 0.8267 | 1240 | 0.0002 | - |
| 0.8333 | 1250 | 0.0002 | - |
| 0.84 | 1260 | 0.0002 | - |
| 0.8467 | 1270 | 0.0003 | - |
| 0.8533 | 1280 | 0.0001 | - |
| 0.86 | 1290 | 0.0001 | - |
| 0.8667 | 1300 | 0.0002 | - |
| 0.8733 | 1310 | 0.0004 | - |
| 0.88 | 1320 | 0.0004 | - |
| 0.8867 | 1330 | 0.0004 | - |
| 0.8933 | 1340 | 0.0001 | - |
| 0.9 | 1350 | 0.0002 | - |
| 0.9067 | 1360 | 0.055 | - |
| 0.9133 | 1370 | 0.0002 | - |
| 0.92 | 1380 | 0.0004 | - |
| 0.9267 | 1390 | 0.0001 | - |
| 0.9333 | 1400 | 0.0002 | - |
| 0.94 | 1410 | 0.0002 | - |
| 0.9467 | 1420 | 0.0004 | - |
| 0.9533 | 1430 | 0.0009 | - |
| 0.96 | 1440 | 0.0003 | - |
| 0.9667 | 1450 | 0.0427 | - |
| 0.9733 | 1460 | 0.0004 | - |
| 0.98 | 1470 | 0.0001 | - |
| 0.9867 | 1480 | 0.0002 | - |
| 0.9933 | 1490 | 0.0002 | - |
| 1.0 | 1500 | 0.0002 | - |
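The 1500 steps per epoch in the table are consistent with the training set size and hyperparameters reported in this card. The arithmetic can be sketched as follows, assuming that one positive and one negative pair are drawn per sample per iteration; `contrastive_steps` is a hypothetical helper, not a SetFit function.

```python
import math


def contrastive_steps(num_samples, num_iterations, batch_size, num_epochs=1):
    """Estimate optimizer steps for the contrastive fine-tuning phase."""
    # Assumption: each iteration yields one positive and one negative pair per sample.
    pairs_per_epoch = 2 * num_iterations * num_samples
    return math.ceil(pairs_per_epoch / batch_size) * num_epochs


# 600 training samples (200 per label), num_iterations=20, embedding batch size 16.
steps = contrastive_steps(num_samples=600, num_iterations=20, batch_size=16)
print(steps)                 # 1500
print(round(10 / steps, 4))  # 0.0067, the epoch fraction logged at step 10
```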

Framework Versions

- Python: 3.10.12

- SetFit: 1.0.3

- Sentence Transformers: 3.0.1

- Transformers: 4.39.0

- PyTorch: 2.3.0+cu121

- Datasets: 2.20.0

- Tokenizers: 0.15.2

Citation

BibTeX

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}


Model tree for nilcars/opencv_opencv_model

Base model

sentence-transformers/all-mpnet-base-v2