Update README.md

README.md

CHANGED

|

@@ -14,13 +14,14 @@ metrics:

|

|

| 14 |

- code_eval

|

| 15 |

pipeline_tag: text-generation

|

| 16 |

---

|

| 17 |

-

|

| 18 |

-

---

|

| 19 |

|

| 20 |

Vimarckoso is a component of Lamarck with a recipe based on [CultriX/Qwen2.5-14B-Wernicke](https://huggingface.co/CultriX/Qwen2.5-14B-Wernicke). I set out to fix the initial version's instruction following without any great loss to reasoning. The results have been surprisingly good; model mergers are now building atop very strong finetunes!

|

| 21 |

|

| 22 |

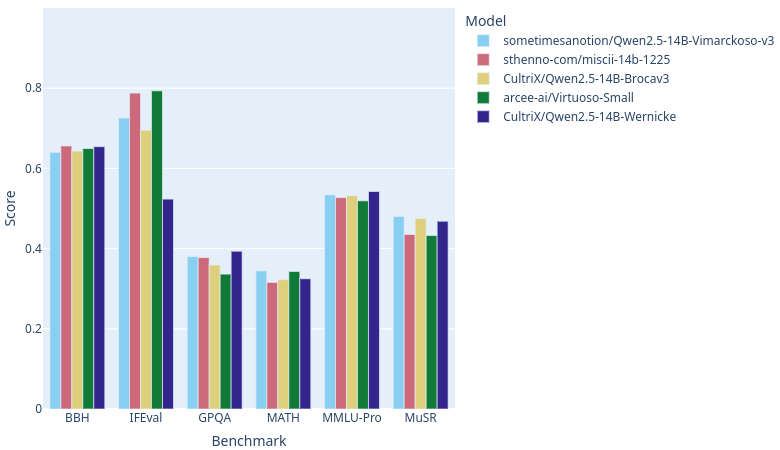

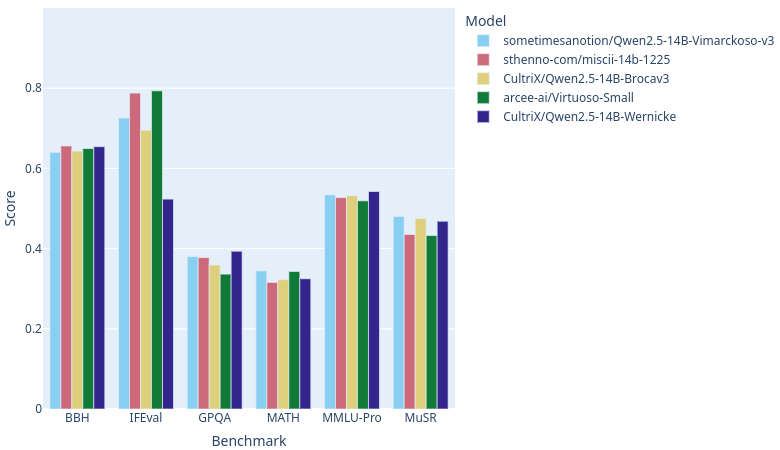

As of this writing, with [open-llm-leaderboard](https://huggingface.co/open-llm-leaderboard) catching up on rankings, Vimarckoso v3 should join Arcee AI's [Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small), Sthenno's [miscii-14b-1225](https://huggingface.co/sthenno-com/miscii-14b-1225) and CultriX's [Qwen2.5-14B-Brocav3](https://huggingface.co/CultriX/Qwen2.5-14B-Brocav3) at the top of the 14B parameter LLM category on this site. As the recipe below will show, their models contribute strongly to Vimarckoso - CultriX's through a strong influence on Lamarck v0.3. Congratulations to everyone whose work went into this!

|

| 23 |

|

| 24 |

### Configuration

|

| 25 |

|

| 26 |

The following YAML configuration was used to produce this model:

|

| 14 |

- code_eval

|

| 15 |

pipeline_tag: text-generation

|

| 16 |

---

|

| 17 |

|

| 18 |

Vimarckoso is a component of Lamarck with a recipe based on [CultriX/Qwen2.5-14B-Wernicke](https://huggingface.co/CultriX/Qwen2.5-14B-Wernicke). I set out to fix the initial version's instruction following without any great loss to reasoning. The results have been surprisingly good; model mergers are now building atop very strong finetunes!

|

| 19 |

|

| 20 |

As of this writing, with [open-llm-leaderboard](https://huggingface.co/open-llm-leaderboard) catching up on rankings, Vimarckoso v3 should join Arcee AI's [Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small), Sthenno's [miscii-14b-1225](https://huggingface.co/sthenno-com/miscii-14b-1225) and CultriX's [Qwen2.5-14B-Brocav3](https://huggingface.co/CultriX/Qwen2.5-14B-Brocav3) at the top of the 14B parameter LLM category on this site. As the recipe below will show, their models contribute strongly to Vimarckoso - CultriX's through a strong influence on Lamarck v0.3. Congratulations to everyone whose work went into this!

|

| 21 |

|

| 22 |

+

|

| 23 |

+

---

|

| 24 |

+

|

| 25 |

### Configuration

|

| 26 |

|

| 27 |

The following YAML configuration was used to produce this model:

|