title: QuantumLLMInstruct

emoji: 🦀

colorFrom: green

colorTo: indigo

sdk: docker

pinned: false

short_description: 'QuantumLLMInstruct: A 500k LLM Instruction-Tuning Dataset'

QuantumLLMInstruct: A 500k LLM Instruction-Tuning Dataset with Problem-Solution Pairs for Quantum Computing

Dataset Overview

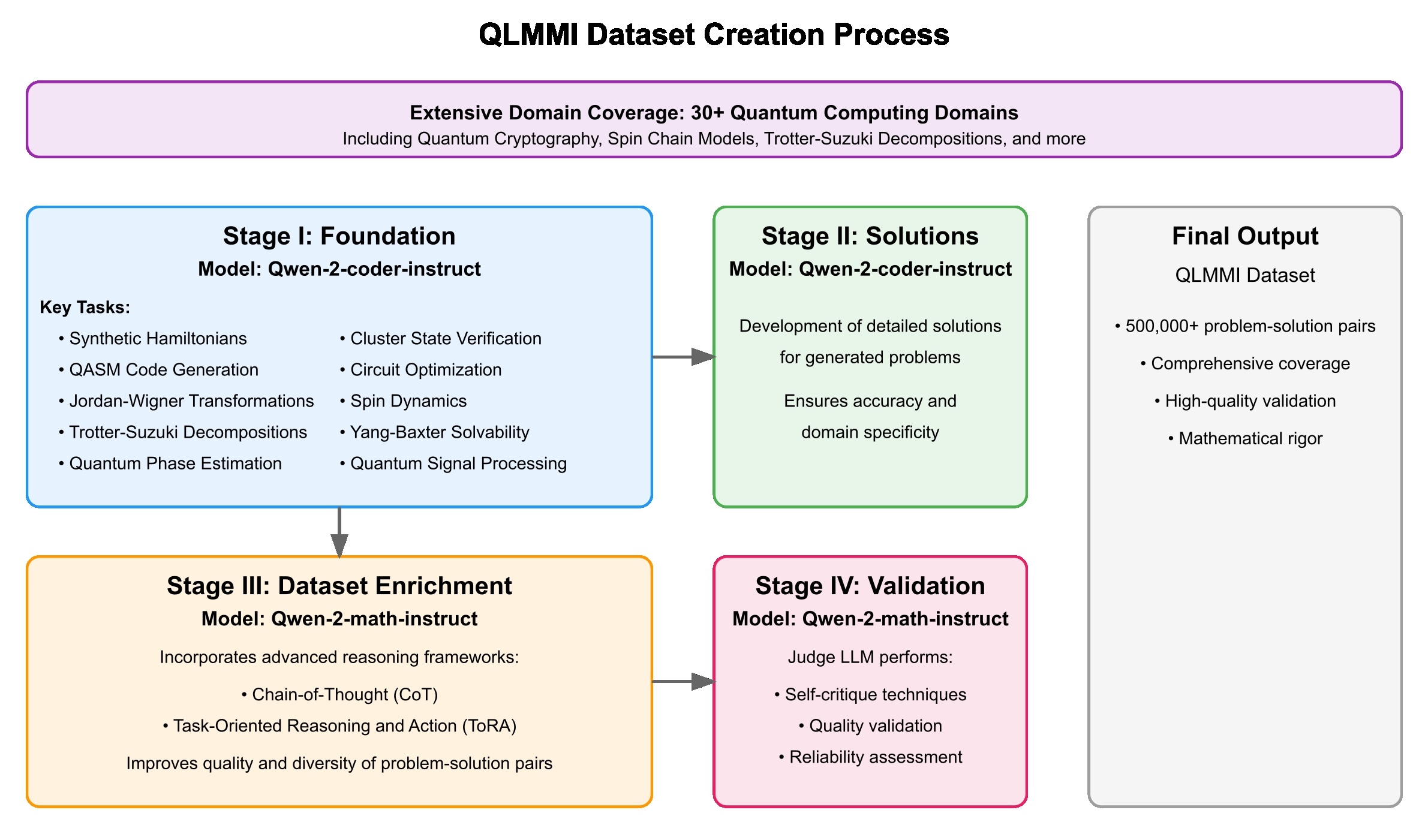

QuantumLLMInstruct (QLMMI) is a dataset for fine-tuning and evaluating Large Language Models (LLMs) in the domain of quantum computing. It spans 90 primary quantum computing domains and contains over 500,000 curated instruction-following problem-solution pairs.

The dataset focuses on enhancing reasoning capabilities in LLMs for quantum-specific tasks, including Hamiltonian dynamics, quantum circuit optimization, and Yang-Baxter solvability.

Each entry consists of:

- A quantum computing problem expressed in natural language and/or LaTeX.

- A detailed step-by-step solution, designed for precision and clarity.

- Domain-specific metadata, such as the problem's main domain, sub-domain, and associated tags.

Data Sources

Problems and solutions are generated using the following methods:

- Predefined Templates: Problems crafted using robust templates to ensure domain specificity and mathematical rigor.

- LLM-Generated Problems: Models such as Qwen-2.5-Coder autonomously generate complex problems across diverse quantum topics, including:

  - Synthetic Hamiltonians

  - QASM code

  - Jordan-Wigner transformations

  - Trotter-Suzuki decompositions

  - Quantum phase estimation

  - Variational Quantum Eigensolvers (VQE)

  - Gibbs state preparation

- Advanced Reasoning Techniques: Leveraging Chain-of-Thought (CoT) and Task-Oriented Reasoning and Action (ToRA) frameworks to refine problem-solution pairs.

Structure

The dataset contains the following fields:

- images: Optional multimodal inputs, such as visualizations of quantum circuits or spin models.

- problem_text: The quantum computing problem, formatted in plain text or LaTeX.

- solution: A detailed solution generated by state-of-the-art LLMs.

- main_domain: The primary quantum domain, e.g., "Quantum Spin Chains" or "Hamiltonian Dynamics."

- sub_domain: Specific subtopics, e.g., "Ising Models" or "Trotterization."

- tags: Relevant tags for classification and retrieval.

- model_name: The name of the model used to generate the problem or solution.

- timestamp: The date and time of creation.
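As a rough illustration of the fields listed above, a single entry could be modeled as a typed record. This is a minimal sketch, not the dataset's official schema: the field names come from the list above, but the Python types, the `QLMMIRecord` and `missing_fields` names, and the example values are assumptions made for illustration.

```python
from typing import List, Optional, TypedDict


class QLMMIRecord(TypedDict):
    """One dataset entry. Field names follow the README; the types are assumed."""
    images: Optional[List[str]]  # assumed: paths or URLs, or None when absent
    problem_text: str            # plain text or LaTeX
    solution: str
    main_domain: str
    sub_domain: str
    tags: List[str]
    model_name: str
    timestamp: str               # assumed: ISO 8601 string

# Text fields that this sketch treats as mandatory (an assumption).
REQUIRED_TEXT_FIELDS = (
    "problem_text", "solution", "main_domain",
    "sub_domain", "model_name", "timestamp",
)


def missing_fields(record: dict) -> List[str]:
    """Return the required text fields that are absent, None, or blank."""
    return [
        name for name in REQUIRED_TEXT_FIELDS
        if not str(record.get(name) or "").strip()
    ]


# Made-up example entry, for illustration only (not taken from the dataset).
example: QLMMIRecord = {
    "images": None,
    "problem_text": "Write the Hamiltonian of the transverse-field Ising chain on N sites.",
    "solution": r"H = -J \sum_i Z_i Z_{i+1} - h \sum_i X_i",
    "main_domain": "Quantum Spin Chains",
    "sub_domain": "Ising Models",
    "tags": ["ising", "spin-chain"],
    "model_name": "Qwen-2.5-Coder",
    "timestamp": "2025-01-01T00:00:00Z",
}

problems = missing_fields(example)
```

A check like `missing_fields` can be run over a batch of entries before training to catch records with empty problems or solutions.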

Key Features

- Comprehensive Coverage: Spanning 90 primary domains and hundreds of subdomains.

- High Quality: Problems and solutions validated through advanced reasoning frameworks and Judge LLMs.

- Open Access: Designed to support researchers, educators, and developers in the field of quantum computing.

- Scalable Infrastructure: Metadata and structure optimized for efficient querying and usage.
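The metadata fields make it straightforward to group or filter entries. The following is a minimal sketch using plain Python dictionaries; the helper names `index_by_domain` and `select`, the sample records, and the "Heisenberg Models" sub-domain and tag values are hypothetical and only illustrate the idea.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional


def index_by_domain(records: Iterable[dict]) -> Dict[str, List[dict]]:
    """Group records by their main_domain value."""
    index: Dict[str, List[dict]] = defaultdict(list)
    for record in records:
        index[record["main_domain"]].append(record)
    return dict(index)


def select(
    records: Iterable[dict],
    sub_domain: Optional[str] = None,
    tag: Optional[str] = None,
) -> List[dict]:
    """Keep records matching sub_domain and/or tag (None means no constraint)."""
    return [
        r for r in records
        if (sub_domain is None or r["sub_domain"] == sub_domain)
        and (tag is None or tag in r["tags"])
    ]


# Made-up records, for illustration only.
records = [
    {"main_domain": "Quantum Spin Chains", "sub_domain": "Ising Models", "tags": ["ising"]},
    {"main_domain": "Hamiltonian Dynamics", "sub_domain": "Trotterization", "tags": ["trotter", "simulation"]},
    {"main_domain": "Quantum Spin Chains", "sub_domain": "Heisenberg Models", "tags": ["heisenberg"]},
]

by_domain = index_by_domain(records)
spin_chain_count = len(by_domain["Quantum Spin Chains"])
trotter_records = select(records, tag="trotter")
```

For the full dataset, the same grouping would more likely be done with a dataframe or dataset library; the dictionary version is shown only because it has no dependencies.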

Example Domains

Some of the key domains covered in the dataset include:

- Synthetic Hamiltonians: Energy computations and time evolution.

- Quantum Spin Chains: Ising, Heisenberg, and advanced integrable models.

- Yang-Baxter Solvability: Solving for quantum integrable models.

- Trotter-Suzuki Decompositions: Efficient simulation of Hamiltonian dynamics.

- Quantum Phase Estimation: Foundational in quantum algorithms.

- Variational Quantum Eigensolvers (VQE): Optimization for quantum chemistry.

- Randomized Circuit Optimization: Enhancing algorithm robustness in noisy conditions.

- Quantum Thermodynamics: Gibbs state preparation and entropy calculations.

Contributions

This dataset represents a collaborative effort to advance quantum computing research through the use of large-scale LLMs. It offers:

- A scalable and comprehensive dataset for fine-tuning LLMs.

- Rigorous methodologies for generating and validating quantum problem-solving tasks.

- Open-access resources to foster collaboration and innovation in the quantum computing community.
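For fine-tuning, each problem-solution pair has to be converted into whatever format the training framework expects. The sketch below maps the `problem_text` and `solution` fields onto a chat-style `messages` list, which is a common convention but not something this dataset prescribes. The system prompt, the function names, and the sample record are assumptions.

```python
import json
from typing import Dict, List

# Hypothetical system prompt; adjust to the target model.
SYSTEM_PROMPT = "You are an expert in quantum computing. Solve the problem step by step."


def to_chat_example(record: dict, system_prompt: str = SYSTEM_PROMPT) -> Dict[str, List[Dict[str, str]]]:
    """Turn one entry into a system/user/assistant message triple."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": record["problem_text"].strip()},
            {"role": "assistant", "content": record["solution"].strip()},
        ]
    }


def to_jsonl_line(record: dict) -> str:
    """Serialize one entry as a single JSONL line, keeping non-ASCII symbols."""
    return json.dumps(to_chat_example(record), ensure_ascii=False)


# Made-up record, for illustration only.
record = {
    "problem_text": "  State the first-order Trotter-Suzuki approximation for exp(-i(A+B)t).  ",
    "solution": "exp(-i(A+B)t) ≈ (exp(-iAt/n) exp(-iBt/n))^n for large n.",
}

chat_example = to_chat_example(record)
jsonl_line = to_jsonl_line(record)
```

Writing one such line per entry produces a JSONL file that many instruction-tuning pipelines can read directly.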

Cite:

@dataset{quantumllm_instruct,
  title={QuantumLLMInstruct: A 500k LLM Instruction-Tuning Dataset with Problem-Solution Pairs for Quantum Computing},
  author={Shlomo Kashani},
  year={2025},
  url={https://huggingface.co/datasets/QuantumLLMInstruct}
}