title: AudioNLtoSQL

app_file: app.py

sdk: gradio

sdk_version: 4.37.2

NLToSQL

- This project converts natural-language input, given as text or audio, into SQL queries using LLMs and prompt engineering, runs those queries automatically, and returns the results from the database. The implementation uses ChatGPT (GPT-3.5 Turbo), LangChain, and Hugging Face Transformers.

- We have also tuned performance by selecting only the tables relevant to each prompt. A CSV file holds a description of each table in the database, and a vector database performs semantic matching against these descriptions to choose the relevant tables. Refer to the database_table_descriptions.csv file.

- We have also used the few-shot learning method that LangChain provides for OpenAI models to steer the model toward the specific kinds of queries we need answered. Refer to the few_shot_samples.json file.

- For the audio module, I trained an Automatic Speech Recognition model using Hugging Face Transformers and saved it to the Hugging Face Hub. It converts recorded audio into text, which is then passed as input to the LangChain LLM setup to be converted into SQL queries. These queries are run on the database and the results are returned. Model link on Hugging Face: https://huggingface.co/avnishkanungo/whisper-small-dv
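The table-selection step described above can be illustrated with a minimal sketch. It is not the project's implementation: a toy bag-of-words similarity stands in for the vector database and embedding model, and the CSV column names (`table`, `description`), the sample rows, and the function names are all assumptions made for illustration.

```python
import csv
import io
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_tables(question, descriptions, top_k=2):
    """Return the top_k table names whose descriptions best match the question."""
    q = embed(question)
    ranked = sorted(descriptions, key=lambda name: cosine(q, embed(descriptions[name])), reverse=True)
    return ranked[:top_k]


# Hypothetical CSV content; the real database_table_descriptions.csv may use other columns.
SAMPLE_CSV = """table,description
customers,Stores customer names and contact details and credit limit
orders,Stores sales orders placed by customers with order date and status
products,Stores the list of scale model products with price and stock
"""

descriptions = {row["table"]: row["description"] for row in csv.DictReader(io.StringIO(SAMPLE_CSV))}
selected = select_tables("What is the price of each product in stock?", descriptions, top_k=1)
print(selected)
```

Only the schemas of the selected tables would then be included in the prompt, which keeps the prompt short on databases with many tables.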

This script can be run against any local MySQL database, provided you supply the correct username, password, host name, and database name. All of these can be passed as arguments to the command that runs the script.
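As a rough sketch of how such arguments might be parsed and turned into a database connection string: the flag names below are the ones used in the commands in this README, but the default file paths, the helper names, the `pymysql` driver, and the sample values are assumptions rather than the script's actual code.

```python
import argparse
from urllib.parse import quote_plus


def build_parser():
    """Command-line parser using the flag names shown in this README."""
    parser = argparse.ArgumentParser(description="Convert natural language to SQL and run it.")
    parser.add_argument("--db_user", required=True, help="MySQL username")
    parser.add_argument("--db_password", required=True, help="MySQL password")
    parser.add_argument("--db_host", required=True, help="MySQL host name")
    parser.add_argument("--db_name", required=True, help="Database name")
    parser.add_argument("--open_ai_key", required=True, help="OpenAI API key")
    # Default paths are assumed here; the real script may use different defaults.
    parser.add_argument("--desc_path", default="database_table_descriptions.csv")
    parser.add_argument("--example_path", default="few_shot_samples.json")
    return parser


def mysql_uri(args, driver="pymysql"):
    """Build a SQLAlchemy-style MySQL URI; credentials are URL-escaped."""
    user = quote_plus(args.db_user)
    password = quote_plus(args.db_password)
    return f"mysql+{driver}://{user}:{password}@{args.db_host}/{args.db_name}"


# Hypothetical values, passed as a list so the sketch runs without a real command line.
demo_args = build_parser().parse_args([
    "--db_user", "root",
    "--db_password", "p@ss",
    "--db_host", "localhost",
    "--db_name", "my_database",
    "--open_ai_key", "YOUR_OPEN_AI_API_KEY",
])
uri = mysql_uri(demo_args)
print(uri)
```

Escaping the password matters because characters such as `@` or `/` would otherwise be read as part of the URI structure.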

To install required libraries:

pip install -r requirements.txt

To run the code on your own database, change into the directory that contains the NLToSQL.py script and run the command below with your OpenAI API key:

python3 NLToSQL.py --db_user "SQL_DB_Username" --db_password "PASSWORD" --db_host "HOSTNAME" --db_name "DATABASE_NAME" --open_ai_key "YOUR_OPEN_AI_API_KEY"

You can edit the database_table_descriptions.csv and few_shot_samples.json files to suit your requirements. If needed, you can also supply your own files, as in the example command below:

python3 NLToSQL.py --desc_path "PATH_FOR_DATABASE_DESCRIPTION" --example_path "few_shot_examples_path" --db_user "SQL_DB_Username" --db_password "PASSWORD" --db_host "HOSTNAME" --db_name "DATABASE_NAME" --open_ai_key "YOUR_OPEN_AI_API_KEY"
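If you write your own few-shot examples file, it may help to see how such examples typically end up in a prompt. The sketch below is an illustration only: the JSON keys (`input`, `query`), the sample entries, the prompt wording, and the `build_prompt` helper are assumptions, so check the bundled few_shot_samples.json for the structure the script actually expects.

```python
import json

# Hypothetical file content; the key names "input" and "query" are assumed.
SAMPLE_JSON = """
[
  {"input": "How many customers are there?", "query": "SELECT COUNT(*) FROM customers;"},
  {"input": "List all product names.", "query": "SELECT productName FROM products;"}
]
"""


def build_prompt(question, examples, table_info):
    """Assemble a few-shot prompt: instructions, table info, worked examples, then the question."""
    lines = [
        "You are a MySQL expert. Write one query that answers the question.",
        "Only use these tables:",
        table_info,
        "",
    ]
    for example in examples:
        lines.append(f"Question: {example['input']}")
        lines.append(f"SQL: {example['query']}")
        lines.append("")
    # The prompt ends with an open "SQL:" slot for the model to complete.
    lines.append(f"Question: {question}")
    lines.append("SQL:")
    return "\n".join(lines)


examples = json.loads(SAMPLE_JSON)
prompt = build_prompt("How many orders were shipped?", examples, "orders(orderNumber, status)")
print(prompt)
```

Examples that resemble the questions you expect users to ask tend to help the most, since the model imitates their style and table usage.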

Setting up the dummy DB for testing (do this before running the above commands if you intend to test on a dummy database):

sudo apt-get -y install mysql-server

sudo service mysql start

sudo mysql -e "ALTER USER 'root'@'localhost' IDENTIFIED WITH 'mysql_native_password' BY 'root';FLUSH PRIVILEGES;"

mysql -u root -p (then enter the password, i.e. root)

mysql> source ~/database/mysqlsampledatabase.sql

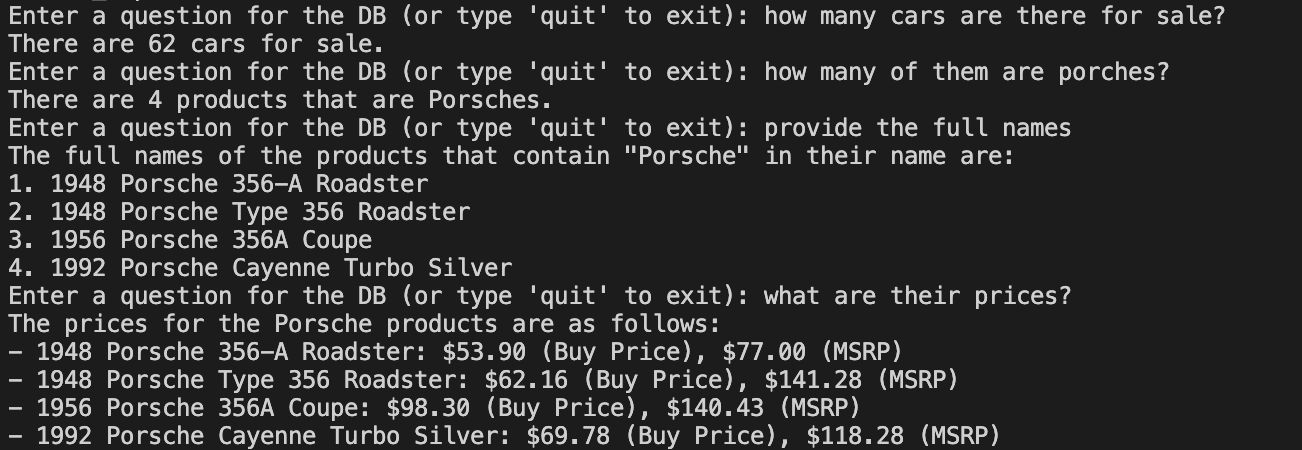

Example run on the dummy database after running the code:

References: