title: AI Content Detector

emoji: 📊

colorFrom: purple

colorTo: red

sdk: streamlit

sdk_version: 1.19.0

app_file: app.py

pinned: false

Introduction

This app is based on the devloverumar/chatgpt-content-detector model, which extends PirateXX/AI-Content-Detector-V2 and is fine-tuned on Hello-SimpleAI/HC3 to improve performance. The project introduces a novel tool that can determine whether a given text was created by a large language model (LLM) such as ChatGPT. The tool uses machine learning models to analyze language patterns and sentence structures that distinguish human-written content from LLM output. Its output is a prediction with corresponding confidence scores, and it also displays the key factors that influenced the decision. These factors are displayed as percentiles based on the model's previous encounters with text. Note that this tool is not infallible and may occasionally misclassify human-written or AI-generated text, so it should not be relied on as the sole basis for cheating detection.

For this app, the AI-Content-Detector-V2 model is fine-tuned on answers from Hello-SimpleAI/HC3 that have been split into individual sentences.

For more details, refer to arXiv:2301.07597 and the GitHub project Hello-SimpleAI/chatgpt-comparison-detection.

Usage

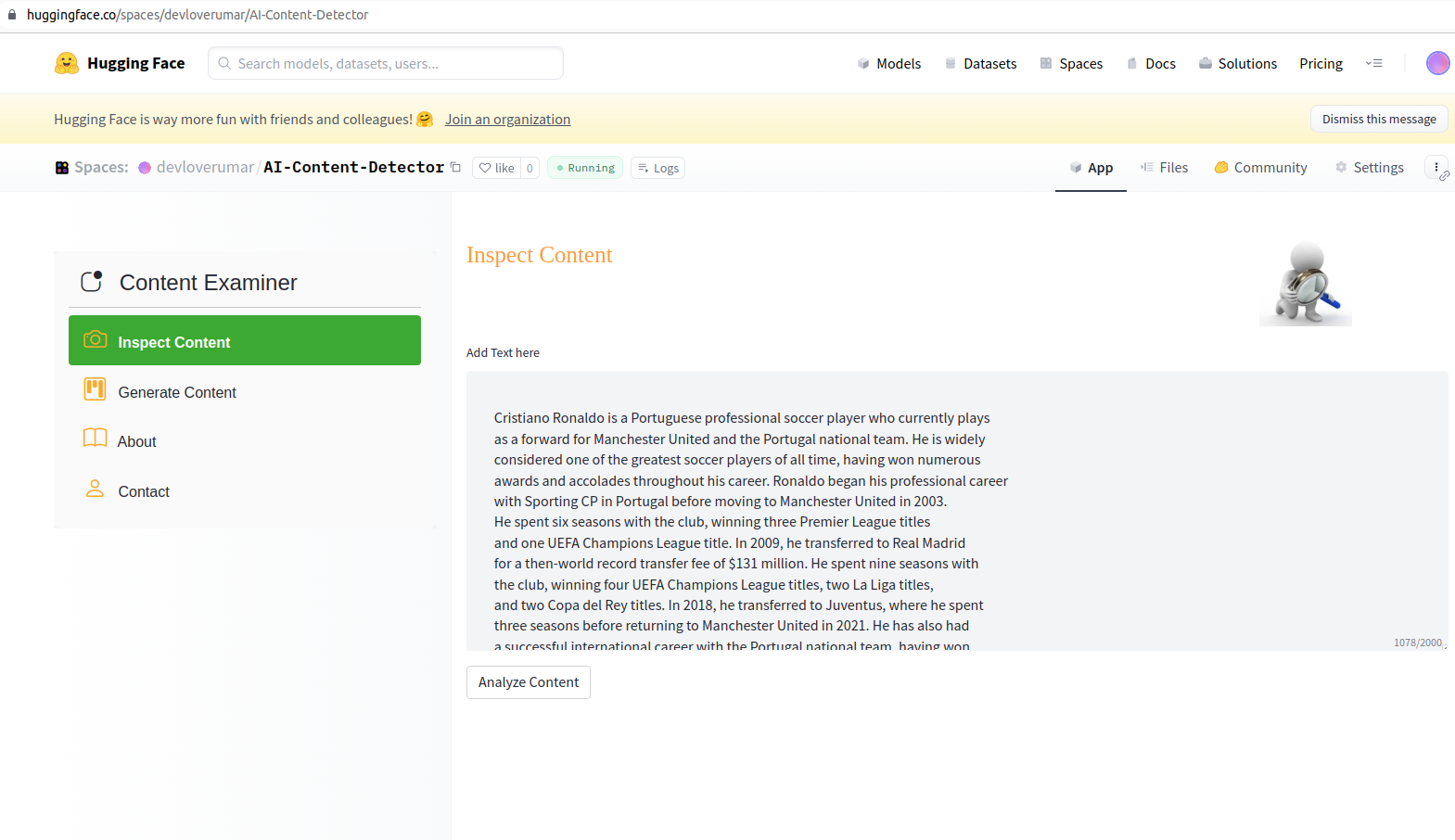

To run this project, visit https://huggingface.co/spaces/devloverumar/AI-Content-Detector. The interface allows you to enter any text and click "Analyze Content" to evaluate it. On the right, you will see the output as Real or Fake content.

The app first loads with a ChatGPT-generated text. When you click the "Analyze Content" button, the detection model is loaded and the text is split into a sequence of sentences. Each sentence is tokenized and passed through the model to predict whether it is real or AI-generated. At the end, the probabilities from all sentences are combined into an overall prediction. If the probability is above 90%, the content is predicted as Fake; otherwise it is predicted as human-written.
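The flow described above can be sketched roughly as follows. This is a minimal illustration, not the app's actual code: the regex sentence splitter, the `score_sentence` callable (a stand-in for the model call), and the use of a simple mean to combine per-sentence probabilities are all assumptions. Only the 90% threshold comes from the description.

```python
import re
from typing import Callable, List, Tuple

FAKE_THRESHOLD = 0.90  # from the description: above 90% is reported as Fake


def split_sentences(text: str) -> List[str]:
    """Naive splitter (assumption): break after '.', '!' or '?' plus whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def classify_text(
    text: str, score_sentence: Callable[[str], float]
) -> Tuple[str, float]:
    """Score each sentence, then combine the scores into one prediction.

    `score_sentence` is a hypothetical callable that returns the probability
    that a single sentence is AI-generated.
    """
    sentences = split_sentences(text)
    if not sentences:
        raise ValueError("no sentences to analyze")
    probs = [score_sentence(s) for s in sentences]
    overall = sum(probs) / len(probs)  # assumption: simple mean of the scores
    label = "Fake" if overall > FAKE_THRESHOLD else "Real"
    return label, overall
```

In the deployed app, `score_sentence` would wrap the fine-tuned detector. Here it can be any function, which makes the aggregation step easy to test on its own.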

Documentation

I started from the existing model PirateXX/AI-Content-Detector-V2 and fine-tuned it on the Hello-SimpleAI/HC3 dataset (https://huggingface.co/datasets/Hello-SimpleAI/HC3). The author of PirateXX/AI-Content-Detector-V2 doesn't provide many details about the base model, but judging by its performance and my own exploration, it is based on https://huggingface.co/roberta-base and trained on some dataset other than HC3. RoBERTa builds on BERT and modifies key hyperparameters, removing the next-sentence pretraining objective and training with much larger mini-batches and learning rates. The performance of AI-Content-Detector-V2 is lower than that of https://huggingface.co/Hello-SimpleAI/chatgpt-detector-roberta, which is trained on the HC3 dataset. My intention was to achieve the same results as chatgpt-detector-roberta by training on the HC3 dataset.

HC3 was released in 2023 specifically for AI content detection. The dataset has three columns: question, human_answers, and chatgpt_answers. I explored many other large text datasets (OpenWebText, Reddit, and many others) but finally decided to train in a binary-class fashion, treating the problem as an anomaly detection task. So I trained only on HC3, taking chatgpt_answers with the label AI-generated content. Because HC3 was released recently, it contains some empty values. I filtered those out so that tokenization and preprocessing run without errors. For tokenization, I truncated and padded the text input to a fixed length of 512, as the tokenizer for AI-Content-Detector-V2 expects input of size 512.

For the UI, I selected Streamlit, as it offers more flexibility and a wider variety of UI widgets than Gradio and is more modular.
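The preprocessing described above (dropping empty values, labeling chatgpt_answers, and fixing the input length at 512) might look something like the sketch below. It is not the training code used for this project. The row layout with a list of strings under `chatgpt_answers`, the label id `AI_LABEL`, and both helper names are assumptions. The `tokenizer` argument is expected to behave like a Hugging Face tokenizer, which accepts `truncation`, `padding`, and `max_length`.

```python
from typing import Any, Callable, Dict, Iterable, List

MAX_LENGTH = 512  # fixed input length stated in the text
AI_LABEL = 1      # hypothetical label id for AI-generated content


def build_examples(rows: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Collect non-empty ChatGPT answers and label them as AI-generated.

    Rows with a missing or empty `chatgpt_answers` field are skipped, as are
    individual answers that are None or whitespace only.
    """
    examples: List[Dict[str, Any]] = []
    for row in rows:
        for answer in row.get("chatgpt_answers") or []:
            if isinstance(answer, str) and answer.strip():
                examples.append({"text": answer.strip(), "label": AI_LABEL})
    return examples


def encode_fixed_length(tokenizer: Callable[..., Any], texts: List[str]) -> Any:
    """Truncate and pad every text to exactly MAX_LENGTH tokens."""
    return tokenizer(
        texts,
        truncation=True,        # cut inputs longer than MAX_LENGTH
        padding="max_length",   # pad shorter inputs up to MAX_LENGTH
        max_length=MAX_LENGTH,
    )
```

With the `transformers` library installed, a tokenizer loaded through `AutoTokenizer.from_pretrained` for the chosen checkpoint could be passed as `tokenizer`.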

Contributions

The core contributions I made include fine-tuning the model to improve accuracy (from 98% to 99%) and preprocessing a dataset that contained some corrupted data.

The tutorial shows how to train a binary anomaly detection model using the HC3 dataset and the PirateXX/AI-Content-Detector-V2 model. It covers data preparation, fine-tuning, evaluation, and deployment, with code snippets and examples to illustrate each step. I also provided tips and best practices for optimizing the model's performance and avoiding common pitfalls.

I conducted a benchmarking study to compare the performance of the PirateXX/AI-Content-Detector-V2 model trained on the HC3 dataset with other state-of-the-art AI content detection models. I used several public datasets and metrics to evaluate the models' accuracy, precision, recall, and F1 score, and analyzed the results to identify their strengths and weaknesses. I also discussed the implications of the findings for real-world applications and future research.

I developed a web-based AI content detector using the PirateXX/AI-Content-Detector-V2 model and Streamlit. The detector allows users to input text and get a prediction on whether it was generated by an AI model, with a user-friendly interface and interactive features such as visualizations and explanations. I also integrated the model with other AI tools and services to enhance its functionality and usability.

I fine-tuned the PirateXX/AI-Content-Detector-V2 model on several large text datasets, including OpenWebText and Reddit, and compared its performance with the HC3-trained version. The experiments showed that the model's ability to detect AI-generated content varied depending on the dataset's characteristics and the pre-training objectives of the base model. I also discussed the trade-offs between model complexity, dataset size, and computational resources, and proposed strategies for optimizing the model's performance on different datasets.
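For reference, the four metrics named above can be computed from binary labels as in the sketch below. It only illustrates the standard definitions and is not the benchmarking code used for this project. The function name and the convention that label 1 means AI-generated are assumptions.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for labels in {0, 1}.

    Label 1 is treated as the positive class (assumed here to mean
    AI-generated). Undefined ratios (zero denominator) are reported as 0.0.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    total = len(pairs)
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

A false positive in this convention is a human-written text flagged as AI-generated, so precision is the metric most directly tied to that failure mode.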

Limitations

The main limitation is false positives: because I trained on ChatGPT text only, the model sometimes flags human-written text as fake. Additionally, it can't process long inputs as a whole; they have to be split into individual sentences. It also can't yet highlight individual sentences. The backend already scores each sentence, but I still have to mark the individual sentences and highlight them on the frontend, which I plan to address in the next version.