Spaces:

Runtime error

Runtime error

Upload folder using huggingface_hub

Browse files- .gitattributes +9 -0

- .gitignore +168 -0

- LICENSE +407 -0

- PROMPT_GUIDE.md +91 -0

- README.md +169 -14

- assets/cond_and_image.jpg +3 -0

- assets/examples/id_customization/chenhao/image_0.png +0 -0

- assets/examples/id_customization/chenhao/image_1.png +0 -0

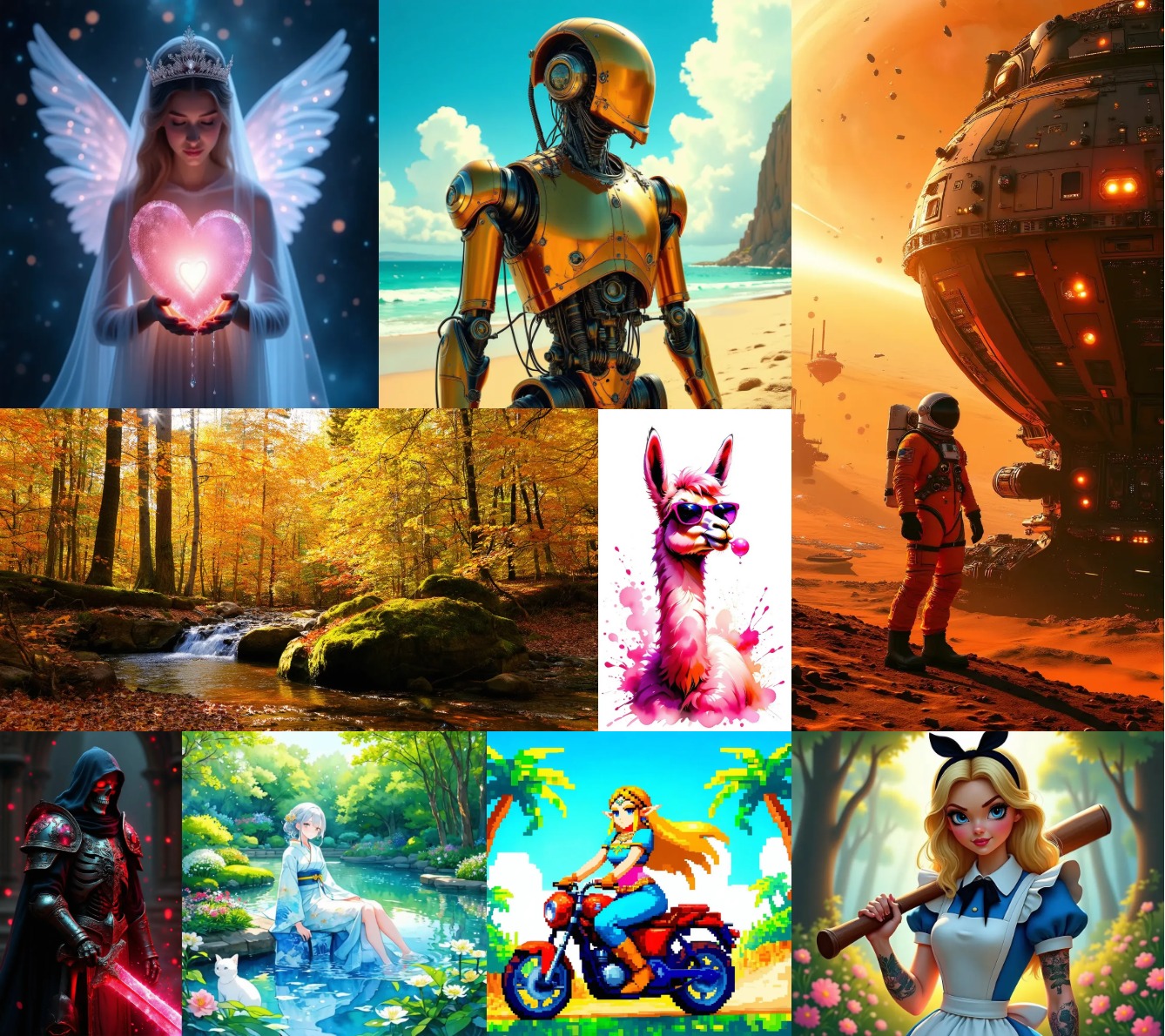

- assets/examples/id_customization/chenhao/image_2.png +0 -0

- assets/onediffusion_appendix_faceid.jpg +3 -0

- assets/onediffusion_appendix_faceid_3.jpg +3 -0

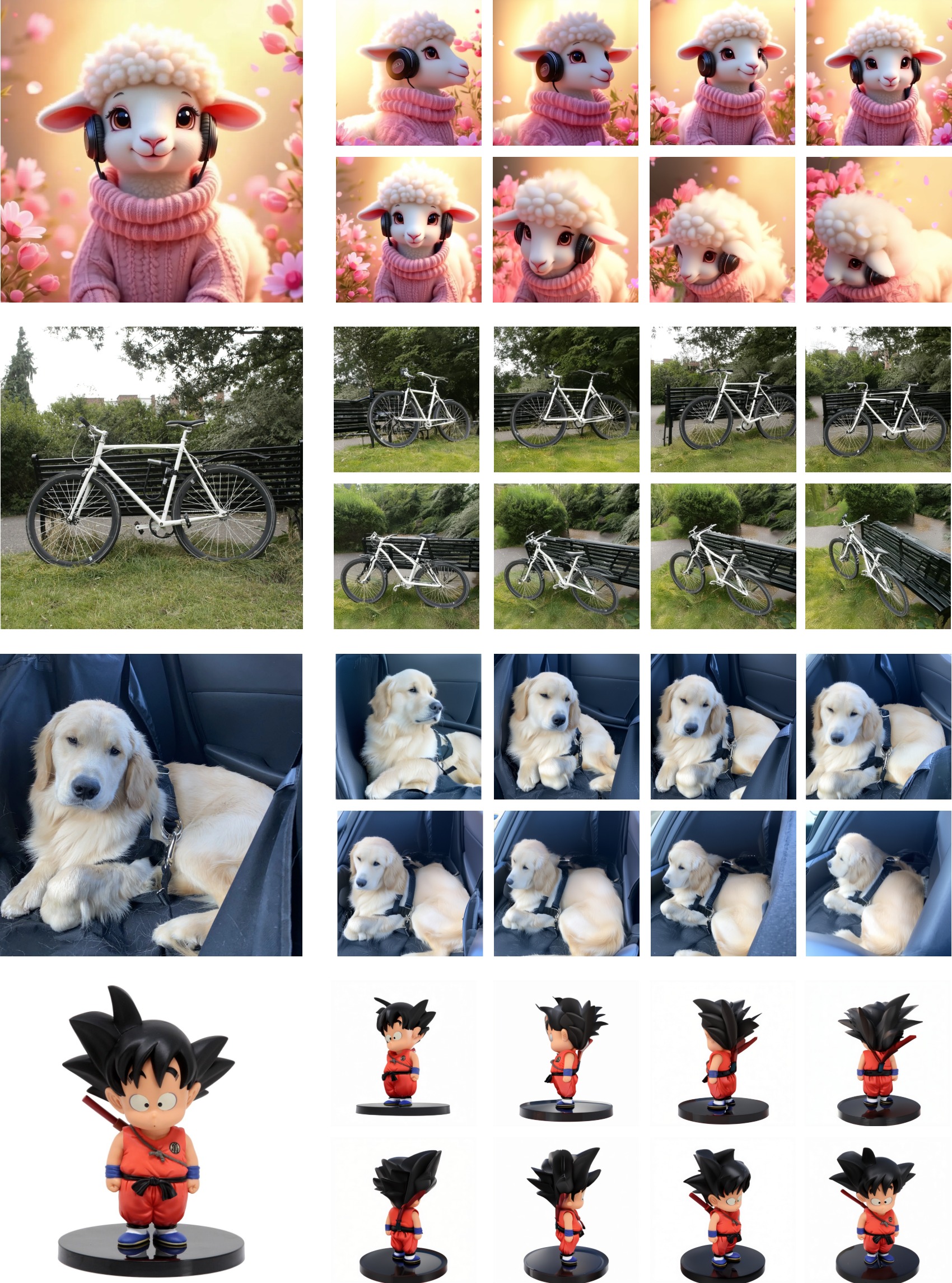

- assets/onediffusion_appendix_multiview.jpg +3 -0

- assets/onediffusion_appendix_multiview_2.jpg +0 -0

- assets/onediffusion_appendix_text2multiview.pdf +3 -0

- assets/onediffusion_editing.jpg +0 -0

- assets/onediffusion_zeroshot.jpg +3 -0

- assets/promptguide_complex.jpg +3 -0

- assets/promptguide_idtask.jpg +0 -0

- assets/subject_driven.jpg +0 -0

- assets/teaser.png +3 -0

- assets/text2image.jpg +0 -0

- assets/text2multiview.jpg +3 -0

- docker/Dockerfile +119 -0

- gradio_demo.py +715 -0

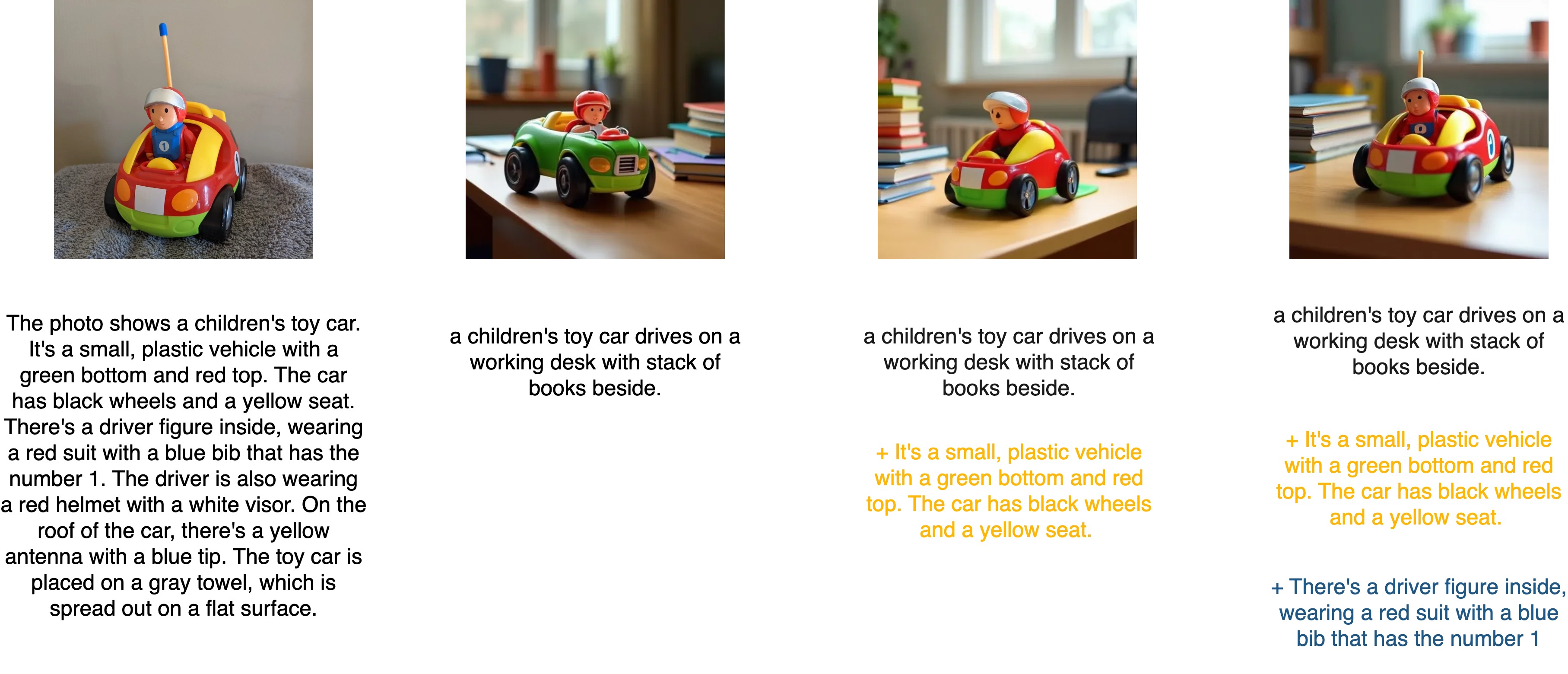

- inference.py +37 -0

- onediffusion/dataset/multitask/multiview.py +277 -0

- onediffusion/dataset/raydiff_utils.py +739 -0

- onediffusion/dataset/transforms.py +133 -0

- onediffusion/dataset/utils.py +175 -0

- onediffusion/diffusion/pipelines/image_processor.py +674 -0

- onediffusion/diffusion/pipelines/onediffusion.py +1080 -0

- onediffusion/models/denoiser/__init__.py +3 -0

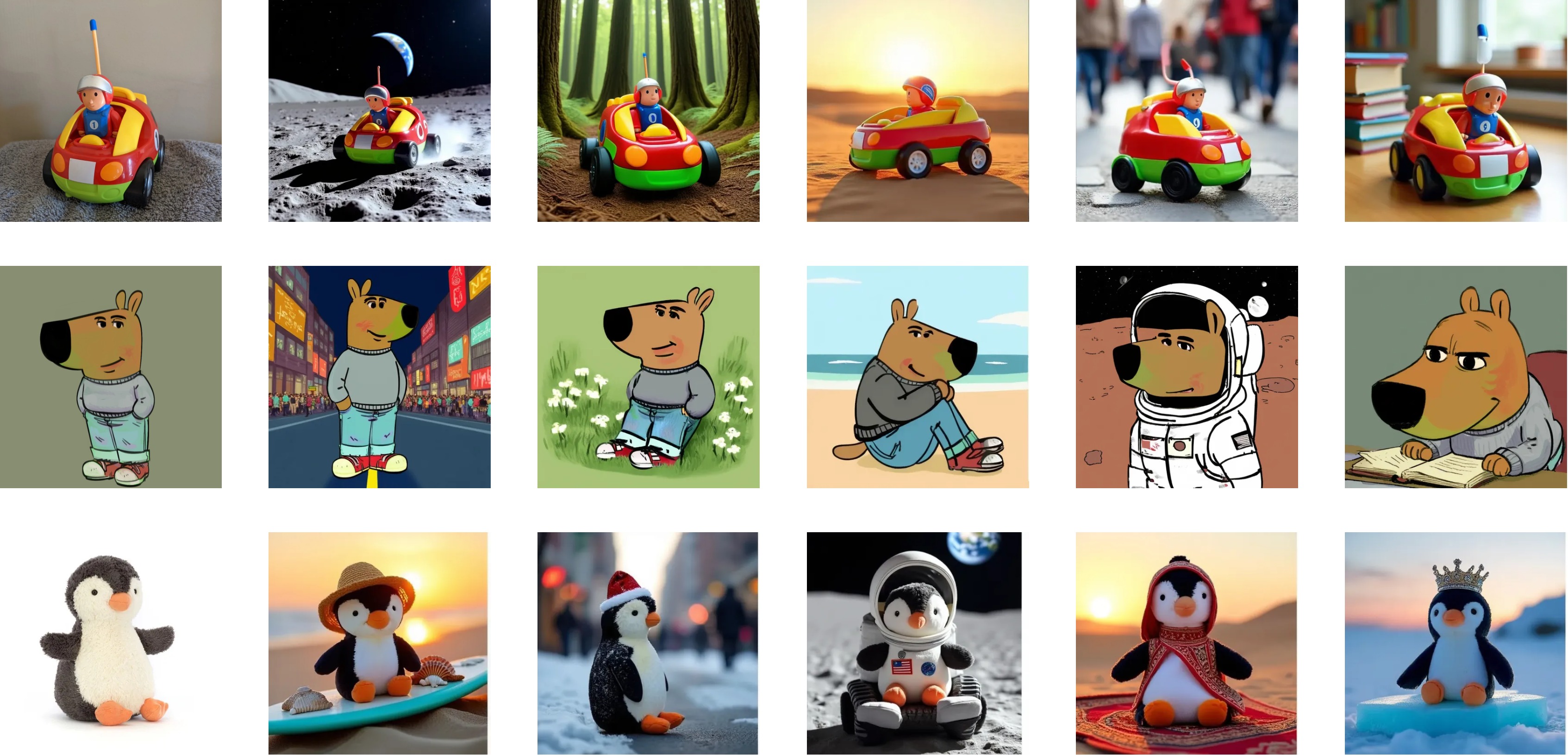

- onediffusion/models/denoiser/nextdit/__init__.py +1 -0

- onediffusion/models/denoiser/nextdit/layers.py +132 -0

- onediffusion/models/denoiser/nextdit/modeling_nextdit.py +571 -0

- requirements.txt +27 -6

.gitattributes

CHANGED

|

@@ -33,3 +33,12 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

assets/cond_and_image.jpg filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

assets/onediffusion_appendix_faceid.jpg filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

assets/onediffusion_appendix_faceid_3.jpg filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

assets/onediffusion_appendix_multiview.jpg filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

assets/onediffusion_appendix_text2multiview.pdf filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

assets/onediffusion_zeroshot.jpg filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

assets/promptguide_complex.jpg filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

assets/teaser.png filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

assets/text2multiview.jpg filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,168 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

share/python-wheels/

|

| 24 |

+

*.egg-info/

|

| 25 |

+

.installed.cfg

|

| 26 |

+

*.egg

|

| 27 |

+

MANIFEST

|

| 28 |

+

|

| 29 |

+

# PyInstaller

|

| 30 |

+

# Usually these files are written by a python script from a template

|

| 31 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 32 |

+

*.manifest

|

| 33 |

+

*.spec

|

| 34 |

+

|

| 35 |

+

# Installer logs

|

| 36 |

+

pip-log.txt

|

| 37 |

+

pip-delete-this-directory.txt

|

| 38 |

+

|

| 39 |

+

# Unit test / coverage reports

|

| 40 |

+

htmlcov/

|

| 41 |

+

.tox/

|

| 42 |

+

.nox/

|

| 43 |

+

.coverage

|

| 44 |

+

.coverage.*

|

| 45 |

+

.cache

|

| 46 |

+

nosetests.xml

|

| 47 |

+

coverage.xml

|

| 48 |

+

*.cover

|

| 49 |

+

*.py,cover

|

| 50 |

+

.hypothesis/

|

| 51 |

+

.pytest_cache/

|

| 52 |

+

cover/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

.pybuilder/

|

| 76 |

+

target/

|

| 77 |

+

|

| 78 |

+

# Jupyter Notebook

|

| 79 |

+

.ipynb_checkpoints

|

| 80 |

+

|

| 81 |

+

# IPython

|

| 82 |

+

profile_default/

|

| 83 |

+

ipython_config.py

|

| 84 |

+

|

| 85 |

+

# pyenv

|

| 86 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 87 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 88 |

+

# .python-version

|

| 89 |

+

|

| 90 |

+

# pipenv

|

| 91 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 92 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 93 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 94 |

+

# install all needed dependencies.

|

| 95 |

+

#Pipfile.lock

|

| 96 |

+

|

| 97 |

+

# UV

|

| 98 |

+

# Similar to Pipfile.lock, it is generally recommended to include uv.lock in version control.

|

| 99 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 100 |

+

# commonly ignored for libraries.

|

| 101 |

+

#uv.lock

|

| 102 |

+

|

| 103 |

+

# poetry

|

| 104 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 105 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 106 |

+

# commonly ignored for libraries.

|

| 107 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 108 |

+

#poetry.lock

|

| 109 |

+

|

| 110 |

+

# pdm

|

| 111 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 112 |

+

#pdm.lock

|

| 113 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 114 |

+

# in version control.

|

| 115 |

+

# https://pdm.fming.dev/latest/usage/project/#working-with-version-control

|

| 116 |

+

.pdm.toml

|

| 117 |

+

.pdm-python

|

| 118 |

+

.pdm-build/

|

| 119 |

+

|

| 120 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 121 |

+

__pypackages__/

|

| 122 |

+

|

| 123 |

+

# Celery stuff

|

| 124 |

+

celerybeat-schedule

|

| 125 |

+

celerybeat.pid

|

| 126 |

+

|

| 127 |

+

# SageMath parsed files

|

| 128 |

+

*.sage.py

|

| 129 |

+

|

| 130 |

+

# Environments

|

| 131 |

+

.env

|

| 132 |

+

.venv

|

| 133 |

+

env/

|

| 134 |

+

venv/

|

| 135 |

+

ENV/

|

| 136 |

+

env.bak/

|

| 137 |

+

venv.bak/

|

| 138 |

+

|

| 139 |

+

# Spyder project settings

|

| 140 |

+

.spyderproject

|

| 141 |

+

.spyproject

|

| 142 |

+

|

| 143 |

+

# Rope project settings

|

| 144 |

+

.ropeproject

|

| 145 |

+

|

| 146 |

+

# mkdocs documentation

|

| 147 |

+

/site

|

| 148 |

+

|

| 149 |

+

# mypy

|

| 150 |

+

.mypy_cache/

|

| 151 |

+

.dmypy.json

|

| 152 |

+

dmypy.json

|

| 153 |

+

|

| 154 |

+

# Pyre type checker

|

| 155 |

+

.pyre/

|

| 156 |

+

|

| 157 |

+

# pytype static type analyzer

|

| 158 |

+

.pytype/

|

| 159 |

+

|

| 160 |

+

# Cython debug symbols

|

| 161 |

+

cython_debug/

|

| 162 |

+

|

| 163 |

+

# PyCharm

|

| 164 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 165 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 166 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 167 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 168 |

+

#.idea/

|

LICENSE

ADDED

|

@@ -0,0 +1,407 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Attribution-NonCommercial 4.0 International

|

| 2 |

+

|

| 3 |

+

=======================================================================

|

| 4 |

+

|

| 5 |

+

Creative Commons Corporation ("Creative Commons") is not a law firm and

|

| 6 |

+

does not provide legal services or legal advice. Distribution of

|

| 7 |

+

Creative Commons public licenses does not create a lawyer-client or

|

| 8 |

+

other relationship. Creative Commons makes its licenses and related

|

| 9 |

+

information available on an "as-is" basis. Creative Commons gives no

|

| 10 |

+

warranties regarding its licenses, any material licensed under their

|

| 11 |

+

terms and conditions, or any related information. Creative Commons

|

| 12 |

+

disclaims all liability for damages resulting from their use to the

|

| 13 |

+

fullest extent possible.

|

| 14 |

+

|

| 15 |

+

Using Creative Commons Public Licenses

|

| 16 |

+

|

| 17 |

+

Creative Commons public licenses provide a standard set of terms and

|

| 18 |

+

conditions that creators and other rights holders may use to share

|

| 19 |

+

original works of authorship and other material subject to copyright

|

| 20 |

+

and certain other rights specified in the public license below. The

|

| 21 |

+

following considerations are for informational purposes only, are not

|

| 22 |

+

exhaustive, and do not form part of our licenses.

|

| 23 |

+

|

| 24 |

+

Considerations for licensors: Our public licenses are

|

| 25 |

+

intended for use by those authorized to give the public

|

| 26 |

+

permission to use material in ways otherwise restricted by

|

| 27 |

+

copyright and certain other rights. Our licenses are

|

| 28 |

+

irrevocable. Licensors should read and understand the terms

|

| 29 |

+

and conditions of the license they choose before applying it.

|

| 30 |

+

Licensors should also secure all rights necessary before

|

| 31 |

+

applying our licenses so that the public can reuse the

|

| 32 |

+

material as expected. Licensors should clearly mark any

|

| 33 |

+

material not subject to the license. This includes other CC-

|

| 34 |

+

licensed material, or material used under an exception or

|

| 35 |

+

limitation to copyright. More considerations for licensors:

|

| 36 |

+

wiki.creativecommons.org/Considerations_for_licensors

|

| 37 |

+

|

| 38 |

+

Considerations for the public: By using one of our public

|

| 39 |

+

licenses, a licensor grants the public permission to use the

|

| 40 |

+

licensed material under specified terms and conditions. If

|

| 41 |

+

the licensor's permission is not necessary for any reason--for

|

| 42 |

+

example, because of any applicable exception or limitation to

|

| 43 |

+

copyright--then that use is not regulated by the license. Our

|

| 44 |

+

licenses grant only permissions under copyright and certain

|

| 45 |

+

other rights that a licensor has authority to grant. Use of

|

| 46 |

+

the licensed material may still be restricted for other

|

| 47 |

+

reasons, including because others have copyright or other

|

| 48 |

+

rights in the material. A licensor may make special requests,

|

| 49 |

+

such as asking that all changes be marked or described.

|

| 50 |

+

Although not required by our licenses, you are encouraged to

|

| 51 |

+

respect those requests where reasonable. More considerations

|

| 52 |

+

for the public:

|

| 53 |

+

wiki.creativecommons.org/Considerations_for_licensees

|

| 54 |

+

|

| 55 |

+

=======================================================================

|

| 56 |

+

|

| 57 |

+

Creative Commons Attribution-NonCommercial 4.0 International Public

|

| 58 |

+

License

|

| 59 |

+

|

| 60 |

+

By exercising the Licensed Rights (defined below), You accept and agree

|

| 61 |

+

to be bound by the terms and conditions of this Creative Commons

|

| 62 |

+

Attribution-NonCommercial 4.0 International Public License ("Public

|

| 63 |

+

License"). To the extent this Public License may be interpreted as a

|

| 64 |

+

contract, You are granted the Licensed Rights in consideration of Your

|

| 65 |

+

acceptance of these terms and conditions, and the Licensor grants You

|

| 66 |

+

such rights in consideration of benefits the Licensor receives from

|

| 67 |

+

making the Licensed Material available under these terms and

|

| 68 |

+

conditions.

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

Section 1 -- Definitions.

|

| 72 |

+

|

| 73 |

+

a. Adapted Material means material subject to Copyright and Similar

|

| 74 |

+

Rights that is derived from or based upon the Licensed Material

|

| 75 |

+

and in which the Licensed Material is translated, altered,

|

| 76 |

+

arranged, transformed, or otherwise modified in a manner requiring

|

| 77 |

+

permission under the Copyright and Similar Rights held by the

|

| 78 |

+

Licensor. For purposes of this Public License, where the Licensed

|

| 79 |

+

Material is a musical work, performance, or sound recording,

|

| 80 |

+

Adapted Material is always produced where the Licensed Material is

|

| 81 |

+

synched in timed relation with a moving image.

|

| 82 |

+

|

| 83 |

+

b. Adapter's License means the license You apply to Your Copyright

|

| 84 |

+

and Similar Rights in Your contributions to Adapted Material in

|

| 85 |

+

accordance with the terms and conditions of this Public License.

|

| 86 |

+

|

| 87 |

+

c. Copyright and Similar Rights means copyright and/or similar rights

|

| 88 |

+

closely related to copyright including, without limitation,

|

| 89 |

+

performance, broadcast, sound recording, and Sui Generis Database

|

| 90 |

+

Rights, without regard to how the rights are labeled or

|

| 91 |

+

categorized. For purposes of this Public License, the rights

|

| 92 |

+

specified in Section 2(b)(1)-(2) are not Copyright and Similar

|

| 93 |

+

Rights.

|

| 94 |

+

d. Effective Technological Measures means those measures that, in the

|

| 95 |

+

absence of proper authority, may not be circumvented under laws

|

| 96 |

+

fulfilling obligations under Article 11 of the WIPO Copyright

|

| 97 |

+

Treaty adopted on December 20, 1996, and/or similar international

|

| 98 |

+

agreements.

|

| 99 |

+

|

| 100 |

+

e. Exceptions and Limitations means fair use, fair dealing, and/or

|

| 101 |

+

any other exception or limitation to Copyright and Similar Rights

|

| 102 |

+

that applies to Your use of the Licensed Material.

|

| 103 |

+

|

| 104 |

+

f. Licensed Material means the artistic or literary work, database,

|

| 105 |

+

or other material to which the Licensor applied this Public

|

| 106 |

+

License.

|

| 107 |

+

|

| 108 |

+

g. Licensed Rights means the rights granted to You subject to the

|

| 109 |

+

terms and conditions of this Public License, which are limited to

|

| 110 |

+

all Copyright and Similar Rights that apply to Your use of the

|

| 111 |

+

Licensed Material and that the Licensor has authority to license.

|

| 112 |

+

|

| 113 |

+

h. Licensor means the individual(s) or entity(ies) granting rights

|

| 114 |

+

under this Public License.

|

| 115 |

+

|

| 116 |

+

i. NonCommercial means not primarily intended for or directed towards

|

| 117 |

+

commercial advantage or monetary compensation. For purposes of

|

| 118 |

+

this Public License, the exchange of the Licensed Material for

|

| 119 |

+

other material subject to Copyright and Similar Rights by digital

|

| 120 |

+

file-sharing or similar means is NonCommercial provided there is

|

| 121 |

+

no payment of monetary compensation in connection with the

|

| 122 |

+

exchange.

|

| 123 |

+

|

| 124 |

+

j. Share means to provide material to the public by any means or

|

| 125 |

+

process that requires permission under the Licensed Rights, such

|

| 126 |

+

as reproduction, public display, public performance, distribution,

|

| 127 |

+

dissemination, communication, or importation, and to make material

|

| 128 |

+

available to the public including in ways that members of the

|

| 129 |

+

public may access the material from a place and at a time

|

| 130 |

+

individually chosen by them.

|

| 131 |

+

|

| 132 |

+

k. Sui Generis Database Rights means rights other than copyright

|

| 133 |

+

resulting from Directive 96/9/EC of the European Parliament and of

|

| 134 |

+

the Council of 11 March 1996 on the legal protection of databases,

|

| 135 |

+

as amended and/or succeeded, as well as other essentially

|

| 136 |

+

equivalent rights anywhere in the world.

|

| 137 |

+

|

| 138 |

+

l. You means the individual or entity exercising the Licensed Rights

|

| 139 |

+

under this Public License. Your has a corresponding meaning.

|

| 140 |

+

|

| 141 |

+

|

| 142 |

+

Section 2 -- Scope.

|

| 143 |

+

|

| 144 |

+

a. License grant.

|

| 145 |

+

|

| 146 |

+

1. Subject to the terms and conditions of this Public License,

|

| 147 |

+

the Licensor hereby grants You a worldwide, royalty-free,

|

| 148 |

+

non-sublicensable, non-exclusive, irrevocable license to

|

| 149 |

+

exercise the Licensed Rights in the Licensed Material to:

|

| 150 |

+

|

| 151 |

+

a. reproduce and Share the Licensed Material, in whole or

|

| 152 |

+

in part, for NonCommercial purposes only; and

|

| 153 |

+

|

| 154 |

+

b. produce, reproduce, and Share Adapted Material for

|

| 155 |

+

NonCommercial purposes only.

|

| 156 |

+

|

| 157 |

+

2. Exceptions and Limitations. For the avoidance of doubt, where

|

| 158 |

+

Exceptions and Limitations apply to Your use, this Public

|

| 159 |

+

License does not apply, and You do not need to comply with

|

| 160 |

+

its terms and conditions.

|

| 161 |

+

|

| 162 |

+

3. Term. The term of this Public License is specified in Section

|

| 163 |

+

6(a).

|

| 164 |

+

|

| 165 |

+

4. Media and formats; technical modifications allowed. The

|

| 166 |

+

Licensor authorizes You to exercise the Licensed Rights in

|

| 167 |

+

all media and formats whether now known or hereafter created,

|

| 168 |

+

and to make technical modifications necessary to do so. The

|

| 169 |

+

Licensor waives and/or agrees not to assert any right or

|

| 170 |

+

authority to forbid You from making technical modifications

|

| 171 |

+

necessary to exercise the Licensed Rights, including

|

| 172 |

+

technical modifications necessary to circumvent Effective

|

| 173 |

+

Technological Measures. For purposes of this Public License,

|

| 174 |

+

simply making modifications authorized by this Section 2(a)

|

| 175 |

+

(4) never produces Adapted Material.

|

| 176 |

+

|

| 177 |

+

5. Downstream recipients.

|

| 178 |

+

|

| 179 |

+

a. Offer from the Licensor -- Licensed Material. Every

|

| 180 |

+

recipient of the Licensed Material automatically

|

| 181 |

+

receives an offer from the Licensor to exercise the

|

| 182 |

+

Licensed Rights under the terms and conditions of this

|

| 183 |

+

Public License.

|

| 184 |

+

|

| 185 |

+

b. No downstream restrictions. You may not offer or impose

|

| 186 |

+

any additional or different terms or conditions on, or

|

| 187 |

+

apply any Effective Technological Measures to, the

|

| 188 |

+

Licensed Material if doing so restricts exercise of the

|

| 189 |

+

Licensed Rights by any recipient of the Licensed

|

| 190 |

+

Material.

|

| 191 |

+

|

| 192 |

+

6. No endorsement. Nothing in this Public License constitutes or

|

| 193 |

+

may be construed as permission to assert or imply that You

|

| 194 |

+

are, or that Your use of the Licensed Material is, connected

|

| 195 |

+

with, or sponsored, endorsed, or granted official status by,

|

| 196 |

+

the Licensor or others designated to receive attribution as

|

| 197 |

+

provided in Section 3(a)(1)(A)(i).

|

| 198 |

+

|

| 199 |

+

b. Other rights.

|

| 200 |

+

|

| 201 |

+

1. Moral rights, such as the right of integrity, are not

|

| 202 |

+

licensed under this Public License, nor are publicity,

|

| 203 |

+

privacy, and/or other similar personality rights; however, to

|

| 204 |

+

the extent possible, the Licensor waives and/or agrees not to

|

| 205 |

+

assert any such rights held by the Licensor to the limited

|

| 206 |

+

extent necessary to allow You to exercise the Licensed

|

| 207 |

+

Rights, but not otherwise.

|

| 208 |

+

|

| 209 |

+

2. Patent and trademark rights are not licensed under this

|

| 210 |

+

Public License.

|

| 211 |

+

|

| 212 |

+

3. To the extent possible, the Licensor waives any right to

|

| 213 |

+

collect royalties from You for the exercise of the Licensed

|

| 214 |

+

Rights, whether directly or through a collecting society

|

| 215 |

+

under any voluntary or waivable statutory or compulsory

|

| 216 |

+

licensing scheme. In all other cases the Licensor expressly

|

| 217 |

+

reserves any right to collect such royalties, including when

|

| 218 |

+

the Licensed Material is used other than for NonCommercial

|

| 219 |

+

purposes.

|

| 220 |

+

|

| 221 |

+

|

| 222 |

+

Section 3 -- License Conditions.

|

| 223 |

+

|

| 224 |

+

Your exercise of the Licensed Rights is expressly made subject to the

|

| 225 |

+

following conditions.

|

| 226 |

+

|

| 227 |

+

a. Attribution.

|

| 228 |

+

|

| 229 |

+

1. If You Share the Licensed Material (including in modified

|

| 230 |

+

form), You must:

|

| 231 |

+

|

| 232 |

+

a. retain the following if it is supplied by the Licensor

|

| 233 |

+

with the Licensed Material:

|

| 234 |

+

|

| 235 |

+

i. identification of the creator(s) of the Licensed

|

| 236 |

+

Material and any others designated to receive

|

| 237 |

+

attribution, in any reasonable manner requested by

|

| 238 |

+

the Licensor (including by pseudonym if

|

| 239 |

+

designated);

|

| 240 |

+

|

| 241 |

+

ii. a copyright notice;

|

| 242 |

+

|

| 243 |

+

iii. a notice that refers to this Public License;

|

| 244 |

+

|

| 245 |

+

iv. a notice that refers to the disclaimer of

|

| 246 |

+

warranties;

|

| 247 |

+

|

| 248 |

+

v. a URI or hyperlink to the Licensed Material to the

|

| 249 |

+

extent reasonably practicable;

|

| 250 |

+

|

| 251 |

+

b. indicate if You modified the Licensed Material and

|

| 252 |

+

retain an indication of any previous modifications; and

|

| 253 |

+

|

| 254 |

+

c. indicate the Licensed Material is licensed under this

|

| 255 |

+

Public License, and include the text of, or the URI or

|

| 256 |

+

hyperlink to, this Public License.

|

| 257 |

+

|

| 258 |

+

2. You may satisfy the conditions in Section 3(a)(1) in any

|

| 259 |

+

reasonable manner based on the medium, means, and context in

|

| 260 |

+

which You Share the Licensed Material. For example, it may be

|

| 261 |

+

reasonable to satisfy the conditions by providing a URI or

|

| 262 |

+

hyperlink to a resource that includes the required

|

| 263 |

+

information.

|

| 264 |

+

|

| 265 |

+

3. If requested by the Licensor, You must remove any of the

|

| 266 |

+

information required by Section 3(a)(1)(A) to the extent

|

| 267 |

+

reasonably practicable.

|

| 268 |

+

|

| 269 |

+

4. If You Share Adapted Material You produce, the Adapter's

|

| 270 |

+

License You apply must not prevent recipients of the Adapted

|

| 271 |

+

Material from complying with this Public License.

|

| 272 |

+

|

| 273 |

+

|

| 274 |

+

Section 4 -- Sui Generis Database Rights.

|

| 275 |

+

|

| 276 |

+

Where the Licensed Rights include Sui Generis Database Rights that

|

| 277 |

+

apply to Your use of the Licensed Material:

|

| 278 |

+

|

| 279 |

+

a. for the avoidance of doubt, Section 2(a)(1) grants You the right

|

| 280 |

+

to extract, reuse, reproduce, and Share all or a substantial

|

| 281 |

+

portion of the contents of the database for NonCommercial purposes

|

| 282 |

+

only;

|

| 283 |

+

|

| 284 |

+

b. if You include all or a substantial portion of the database

|

| 285 |

+

contents in a database in which You have Sui Generis Database

|

| 286 |

+

Rights, then the database in which You have Sui Generis Database

|

| 287 |

+

Rights (but not its individual contents) is Adapted Material; and

|

| 288 |

+

|

| 289 |

+

c. You must comply with the conditions in Section 3(a) if You Share

|

| 290 |

+

all or a substantial portion of the contents of the database.

|

| 291 |

+

|

| 292 |

+

For the avoidance of doubt, this Section 4 supplements and does not

|

| 293 |

+

replace Your obligations under this Public License where the Licensed

|

| 294 |

+

Rights include other Copyright and Similar Rights.

|

| 295 |

+

|

| 296 |

+

|

| 297 |

+

Section 5 -- Disclaimer of Warranties and Limitation of Liability.

|

| 298 |

+

|

| 299 |

+

a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE

|

| 300 |

+

EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS

|

| 301 |

+

AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF

|

| 302 |

+

ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,

|

| 303 |

+

IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,

|

| 304 |

+

WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR

|

| 305 |

+

PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,

|

| 306 |

+

ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT

|

| 307 |

+

KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT

|

| 308 |

+

ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.

|

| 309 |

+

|

| 310 |

+

b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE

|

| 311 |

+

TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,

|

| 312 |

+

NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,

|

| 313 |

+

INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,

|

| 314 |

+

COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR

|

| 315 |

+

USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN

|

| 316 |

+

ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR

|

| 317 |

+

DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR

|

| 318 |

+

IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.

|

| 319 |

+

|

| 320 |

+

c. The disclaimer of warranties and limitation of liability provided

|

| 321 |

+

above shall be interpreted in a manner that, to the extent

|

| 322 |

+

possible, most closely approximates an absolute disclaimer and

|

| 323 |

+

waiver of all liability.

|

| 324 |

+

|

| 325 |

+

|

| 326 |

+

Section 6 -- Term and Termination.

|

| 327 |

+

|

| 328 |

+

a. This Public License applies for the term of the Copyright and

|

| 329 |

+

Similar Rights licensed here. However, if You fail to comply with

|

| 330 |

+

this Public License, then Your rights under this Public License

|

| 331 |

+

terminate automatically.

|

| 332 |

+

|

| 333 |

+

b. Where Your right to use the Licensed Material has terminated under

|

| 334 |

+

Section 6(a), it reinstates:

|

| 335 |

+

|

| 336 |

+

1. automatically as of the date the violation is cured, provided

|

| 337 |

+

it is cured within 30 days of Your discovery of the

|

| 338 |

+

violation; or

|

| 339 |

+

|

| 340 |

+

2. upon express reinstatement by the Licensor.

|

| 341 |

+

|

| 342 |

+

For the avoidance of doubt, this Section 6(b) does not affect any

|

| 343 |

+

right the Licensor may have to seek remedies for Your violations

|

| 344 |

+

of this Public License.

|

| 345 |

+

|

| 346 |

+

c. For the avoidance of doubt, the Licensor may also offer the

|

| 347 |

+

Licensed Material under separate terms or conditions or stop

|

| 348 |

+

distributing the Licensed Material at any time; however, doing so

|

| 349 |

+

will not terminate this Public License.

|

| 350 |

+

|

| 351 |

+

d. Sections 1, 5, 6, 7, and 8 survive termination of this Public

|

| 352 |

+

License.

|

| 353 |

+

|

| 354 |

+

|

| 355 |

+

Section 7 -- Other Terms and Conditions.

|

| 356 |

+

|

| 357 |

+

a. The Licensor shall not be bound by any additional or different

|

| 358 |

+

terms or conditions communicated by You unless expressly agreed.

|

| 359 |

+

|

| 360 |

+

b. Any arrangements, understandings, or agreements regarding the

|

| 361 |

+

Licensed Material not stated herein are separate from and

|

| 362 |

+

independent of the terms and conditions of this Public License.

|

| 363 |

+

|

| 364 |

+

|

| 365 |

+

Section 8 -- Interpretation.

|

| 366 |

+

|

| 367 |

+

a. For the avoidance of doubt, this Public License does not, and

|

| 368 |

+

shall not be interpreted to, reduce, limit, restrict, or impose

|

| 369 |

+

conditions on any use of the Licensed Material that could lawfully

|

| 370 |

+

be made without permission under this Public License.

|

| 371 |

+

|

| 372 |

+

b. To the extent possible, if any provision of this Public License is

|

| 373 |

+

deemed unenforceable, it shall be automatically reformed to the

|

| 374 |

+

minimum extent necessary to make it enforceable. If the provision

|

| 375 |

+

cannot be reformed, it shall be severed from this Public License

|

| 376 |

+

without affecting the enforceability of the remaining terms and

|

| 377 |

+

conditions.

|

| 378 |

+

|

| 379 |

+

c. No term or condition of this Public License will be waived and no

|

| 380 |

+

failure to comply consented to unless expressly agreed to by the

|

| 381 |

+

Licensor.

|

| 382 |

+

|

| 383 |

+

d. Nothing in this Public License constitutes or may be interpreted

|

| 384 |

+

as a limitation upon, or waiver of, any privileges and immunities

|

| 385 |

+

that apply to the Licensor or You, including from the legal

|

| 386 |

+

processes of any jurisdiction or authority.

|

| 387 |

+

|

| 388 |

+

=======================================================================

|

| 389 |

+

|

| 390 |

+

Creative Commons is not a party to its public

|

| 391 |

+

licenses. Notwithstanding, Creative Commons may elect to apply one of

|

| 392 |

+

its public licenses to material it publishes and in those instances

|

| 393 |

+

will be considered the “Licensor.” The text of the Creative Commons

|

| 394 |

+

public licenses is dedicated to the public domain under the CC0 Public

|

| 395 |

+

Domain Dedication. Except for the limited purpose of indicating that

|

| 396 |

+

material is shared under a Creative Commons public license or as

|

| 397 |

+

otherwise permitted by the Creative Commons policies published at

|

| 398 |

+

creativecommons.org/policies, Creative Commons does not authorize the

|

| 399 |

+

use of the trademark "Creative Commons" or any other trademark or logo

|

| 400 |

+

of Creative Commons without its prior written consent including,

|

| 401 |

+

without limitation, in connection with any unauthorized modifications

|

| 402 |

+

to any of its public licenses or any other arrangements,

|

| 403 |

+

understandings, or agreements concerning use of licensed material. For

|

| 404 |

+

the avoidance of doubt, this paragraph does not form part of the

|

| 405 |

+

public licenses.

|

| 406 |

+

|

| 407 |

+

Creative Commons may be contacted at creativecommons.org.

|

PROMPT_GUIDE.md

ADDED

|

@@ -0,0 +1,91 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Prompt Guide

|

| 2 |

+

|

| 3 |

+

All examples are generated with a CFG of $4.2$, $50$ steps, and are non-cherrypicked unless otherwise stated. Negative prompt is set to:

|

| 4 |

+

```

|

| 5 |

+

monochrome, greyscale, low-res, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name, poorly drawn, bad anatomy, wrong anatomy, extra limb, missing limb, floating limbs, disconnected limbs, mutation, mutated, ugly, disgusting, blurry, amputation

|

| 6 |

+

```

|

| 7 |

+

|

| 8 |

+

## 1. Text-to-Image

|

| 9 |

+

|

| 10 |

+

### 1.1 Long and detailed prompts give (much) better results.

|

| 11 |

+

|

| 12 |

+

Since our training comprised of long and detailed prompts, the model is more likely to generate better images with detailed prompts.

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

The model shows good text adherence with long and complex prompts as in below images. We use the first $20$ prompts from [simoryu's examples](https://cloneofsimo.github.io/compare_aura_sd3/). For detailed prompts, results of other models, refer to the above link.

|

| 16 |

+

|

| 17 |

+

<p align="center">

|

| 18 |

+

<img src="assets/promptguide_complex.jpg" alt="Text-to-Image results" width="800">

|

| 19 |

+

</p>

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

### 1.2 Resolution

|

| 23 |

+

|

| 24 |

+

The model generally works well with height and width in range of $[768; 1280]$ (height/width must be divisible by 16) for text-to-image. For other tasks, it performs best with resolution around $512$.

|

| 25 |

+

|

| 26 |

+

## 2. ID Customization & Subject-driven generation

|

| 27 |

+

|

| 28 |

+

- The expected length of source captions is $30$ to $75$ words. Empirically, we find that longer prompt can help preserve the ID better but it might hinder the text-adherence for target caption.

|

| 29 |

+

|

| 30 |

+

- We find it better to add some descriptions (e.g., from source caption) to target to preserve the identity, especially for complex subjects with delicate details.

|

| 31 |

+

|

| 32 |

+

<p align="center">

|

| 33 |

+

<img src="assets/promptguide_idtask.jpg" alt="ablation id task" width="800">

|

| 34 |

+

</p>

|

| 35 |

+

|

| 36 |

+

## 3. Multiview generation

|

| 37 |

+

|

| 38 |

+

We recommend not use captions, which describe the facial features e.g., looking at the camera, etc, to mitigate multifaced/janus problems.

|

| 39 |

+

|

| 40 |

+

## 4. Image editing

|

| 41 |

+

|

| 42 |

+

We find it's generally better to set the guidance scale to lower value e.g., $[3; 3.5]$ to avoid over-saturation results.

|

| 43 |

+

|

| 44 |

+

## 5. Special tokens and available colors

|

| 45 |

+

|

| 46 |

+

### 5.1 Task Tokens

|

| 47 |

+

|

| 48 |

+

| Task | Token | Additional Tokens |

|

| 49 |

+

|:---------------------|:---------------------------|:------------------|

|

| 50 |

+

| Text to Image | `[[text2image]]` | |

|

| 51 |

+

| Deblurring | `[[deblurring]]` | |

|

| 52 |

+

| Inpainting | `[[image_inpainting]]` | |

|

| 53 |

+

| Canny-edge and Image | `[[canny2image]]` | |

|

| 54 |

+

| Depth and Image | `[[depth2image]]` | |

|

| 55 |

+

| Hed and Image | `[[hed2img]]` | |

|

| 56 |

+

| Pose and Image | `[[pose2image]]` | |

|

| 57 |

+

| Image editing with Instruction | `[[image_editing]]` | |

|

| 58 |

+

| Semantic map and Image| `[[semanticmap2image]]` | `<#00FFFF cyan mask: object/to/segment>` |

|

| 59 |

+

| Boundingbox and Image | `[[boundingbox2image]]` | `<#00FFFF cyan boundingbox: object/to/detect>` |

|

| 60 |

+

| ID customization | `[[faceid]]` | `[[img0]] target/caption [[img1]] caption/of/source/image_1 [[img2]] caption/of/source/image_2 [[img3]] caption/of/source/image_3` |

|

| 61 |

+

| Multiview | `[[multiview]]` | |

|

| 62 |

+

| Subject-Driven | `[[subject_driven]]` | `<item: name/of/subject> [[img0]] target/caption/goes/here [[img1]] insert/source/caption` |

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

Note that you can replace the cyan color above with any from below table and have multiple additional tokens to detect/segment multiple classes.

|

| 66 |

+

|

| 67 |

+

### 5.2 Available colors

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

| Hex Code | Color Name |

|

| 71 |

+

|:---------|:-----------|

|

| 72 |

+

| #FF0000 | <span style="color: #FF0000">red</span> |

|

| 73 |

+

| #00FF00 | <span style="color: #00FF00">lime</span> |

|

| 74 |

+

| #0000FF | <span style="color: #0000FF">blue</span> |

|

| 75 |

+

| #FFFF00 | <span style="color: #FFFF00">yellow</span> |

|

| 76 |

+

| #FF00FF | <span style="color: #FF00FF">magenta</span> |

|

| 77 |

+

| #00FFFF | <span style="color: #00FFFF">cyan</span> |

|

| 78 |

+

| #FFA500 | <span style="color: #FFA500">orange</span> |

|

| 79 |

+

| #800080 | <span style="color: #800080">purple</span> |

|

| 80 |

+

| #A52A2A | <span style="color: #A52A2A">brown</span> |

|

| 81 |

+

| #008000 | <span style="color: #008000">green</span> |

|

| 82 |

+

| #FFC0CB | <span style="color: #FFC0CB">pink</span> |

|

| 83 |

+

| #008080 | <span style="color: #008080">teal</span> |

|

| 84 |

+

| #FF8C00 | <span style="color: #FF8C00">darkorange</span> |

|

| 85 |

+

| #8A2BE2 | <span style="color: #8A2BE2">blueviolet</span> |

|

| 86 |

+

| #006400 | <span style="color: #006400">darkgreen</span> |

|

| 87 |

+

| #FF4500 | <span style="color: #FF4500">orangered</span> |

|

| 88 |

+

| #000080 | <span style="color: #000080">navy</span> |

|

| 89 |

+

| #FFD700 | <span style="color: #FFD700">gold</span> |

|

| 90 |

+

| #40E0D0 | <span style="color: #40E0D0">turquoise</span> |

|

| 91 |

+

| #DA70D6 | <span style="color: #DA70D6">orchid</span> |

|

README.md

CHANGED

|

@@ -1,14 +1,169 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

| 14 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# One Diffusion to Generate Them All

|

| 2 |

+

|

| 3 |

+

<p align="left">

|

| 4 |

+

<a href="https://lehduong.github.io/OneDiffusion-homepage/">

|

| 5 |

+

<img alt="Build" src="https://img.shields.io/badge/Project%20Page-OneDiffusion-yellow">

|

| 6 |

+

</a>

|

| 7 |

+

<a href="https://arxiv.org/abs/2411.16318">

|

| 8 |

+

<img alt="Build" src="https://img.shields.io/badge/arXiv%20paper-2411.16318-b31b1b.svg">

|

| 9 |

+

</a>

|

| 10 |

+

<a href="https://huggingface.co/spaces/lehduong/OneDiffusion">

|

| 11 |

+

<img alt="License" src="https://img.shields.io/badge/HF%20Demo-🤗-lightblue">

|

| 12 |

+

</a>

|

| 13 |

+

<a href="https://huggingface.co/lehduong/OneDiffusion">

|

| 14 |

+

<img alt="Build" src="https://img.shields.io/badge/HF%20Model-🤗-yellow">

|

| 15 |

+

</a>

|

| 16 |

+

</p>

|

| 17 |

+

|

| 18 |

+

<h4 align="left">

|

| 19 |

+

<p>

|

| 20 |

+

<a href=#news>News</a> |

|

| 21 |

+

<a href=#quick-start>Quick start</a> |

|

| 22 |

+

<a href=https://github.com/lehduong/OneDiffusion/blob/main/PROMPT_GUIDE.md>Prompt guide & Supported tasks </a> |

|

| 23 |

+

<a href=#qualitative-results>Qualitative results</a> |

|

| 24 |

+

<a href="#license">License</a> |

|

| 25 |

+

<a href="#citation">Citation</a>

|

| 26 |

+

<p>

|

| 27 |

+

</h4>

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

<p align="center">

|

| 31 |

+

<img src="assets/teaser.png" alt="Teaser Image" width="800">

|

| 32 |

+

</p>

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

This is official repo of OneDiffusion, a versatile, large-scale diffusion model that seamlessly supports bidirectional image synthesis and understanding across diverse tasks.

|

| 36 |

+

|

| 37 |

+

## News

|

| 38 |

+

- 📦 2024/12/10: Released weight.

|

| 39 |

+

- 📝 2024/12/06: Added image editing from instruction.

|

| 40 |

+

- ✨ 2024/12/02: Added subject-driven generation

|

| 41 |

+

|

| 42 |

+

## Installation

|

| 43 |

+

```

|

| 44 |

+

conda create -n onediffusion_env python=3.8 &&

|

| 45 |

+

conda activate onediffusion_env &&

|

| 46 |

+

pip install torch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 --index-url https://download.pytorch.org/whl/cu118 &&

|

| 47 |

+

pip install "git+https://github.com/facebookresearch/pytorch3d.git" &&

|

| 48 |

+

pip install -r requirements.txt

|

| 49 |

+

```

|

| 50 |

+

|

| 51 |

+

## Quick start

|

| 52 |

+

|

| 53 |

+