
---
title: IEBins Depth Estimation
emoji: 🏢
colorFrom: pink
colorTo: pink
sdk: gradio
sdk_version: 4.15.0
app_file: app.py
pinned: false
license: mit
---

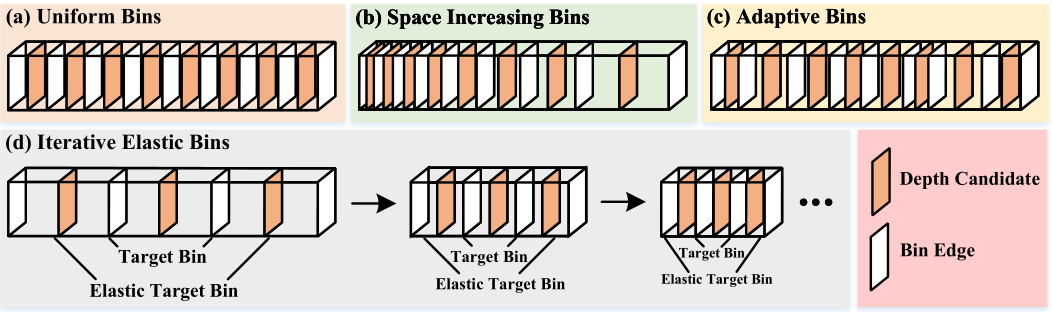

IEBins: Iterative Elastic Bins for Monocular Depth Estimation

• NeurIPS 2023 •

Abstract

Installation

conda create -n iebins python=3.8

conda activate iebins

conda install pytorch=1.10.0 torchvision cudatoolkit=11.1

pip install matplotlib tqdm tensorboardX timm mmcv open3d
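As a quick sanity check after installation, the short Python script below reports which of the required modules cannot be found in the active environment. It is a minimal sketch, not part of the repository; the module list is an assumption based on the commands above (conda's pytorch package is imported as `torch`).

```python
import importlib.util

# Import names for the packages installed above (assumed list; note that the
# conda package "pytorch" is imported as "torch").
REQUIRED_MODULES = ["torch", "torchvision", "matplotlib", "tqdm",
                    "tensorboardX", "timm", "mmcv", "open3d"]


def missing_modules(names):
    """Return the top-level module names that cannot be found.

    find_spec locates a module without importing it, so this does not
    guarantee that the import itself will succeed.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All required modules were found.")
```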

Datasets

Prepare the KITTI and NYUv2 datasets following the instructions here, download the SUN RGB-D dataset from here, and then set the data paths in the config files to your dataset locations.
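The config files are plain-text argument files passed to the training and evaluation scripts. Assuming they follow the BTS-style convention of one `--flag value` pair per line (the `--data_path` flag also appears in the KITTI_Official test command), a small helper like this can update a path without editing the file by hand. `set_config_arg` and `update_config_file` are hypothetical helpers, not part of the repository.

```python
from pathlib import Path


def set_config_arg(text: str, flag: str, value: str) -> str:
    """Return `text` with the line for `flag` set to `value`.

    Assumes one "--flag value" pair per line; appends the flag if absent.
    """
    lines = text.splitlines()
    for i, line in enumerate(lines):
        # Compare the first token exactly, so "--data_path" does not
        # also match a longer flag such as "--data_path_eval".
        if line.split(maxsplit=1)[:1] == [flag]:
            lines[i] = f"{flag} {value}"
            break
    else:
        lines.append(f"{flag} {value}")
    return "\n".join(lines) + "\n"


def update_config_file(path: str, flag: str, value: str) -> None:
    """Rewrite a config file in place with the new flag value."""
    p = Path(path)
    p.write_text(set_config_arg(p.read_text(), flag, value))
```

For example, `update_config_file("configs/arguments_train_nyu.txt", "--data_path", "/your/nyu/path/")`, where the path is a placeholder. The same helper can set the pretrained-encoder path mentioned under Training; check the config file for the exact flag name.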

Training

First download the pretrained encoder backbone from here and set the pretrain path in the config files. For the KITTI_Official model, download the pretrained encoder backbone provided by MIM from here instead.

Training the NYUv2 model:

python iebins/train.py configs/arguments_train_nyu.txt

Training the KITTI_Eigen model:

python iebins/train.py configs/arguments_train_kittieigen.txt

Training the KITTI_Official model:

python iebins_kittiofficial/train.py configs/arguments_train_kittiofficial.txt
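Each training command passes a single .txt config file to the script. In BTS, on which this code is based, such files are read through argparse's `fromfile_prefix_chars` mechanism. The sketch below shows that pattern, assuming IEBins does the same; the flags in `build_parser` are an illustrative subset, and `parse_from_file` is a hypothetical helper.

```python
import argparse


def convert_arg_line_to_args(arg_line):
    """Split one config-file line into argparse tokens; blank lines yield nothing."""
    for arg in arg_line.split():
        yield arg


def build_parser():
    # Illustrative subset of flags; the real scripts define many more.
    parser = argparse.ArgumentParser(fromfile_prefix_chars="@")
    # Override the default one-token-per-line behaviour so that
    # "--flag value" on a single line becomes two tokens.
    parser.convert_arg_line_to_args = convert_arg_line_to_args
    parser.add_argument("--data_path", type=str, required=True)
    parser.add_argument("--max_depth", type=float, default=10.0)
    parser.add_argument("--dataset", type=str, default="nyu")
    return parser


def parse_from_file(config_path, parser=None):
    """Parse arguments from a config file, as in `train.py <config.txt>`."""
    parser = parser or build_parser()
    # The "@" prefix tells argparse to read the arguments from the file.
    return parser.parse_args(["@" + config_path])
```

Keeping every option in a versioned text file makes each run reproducible from one path, which is why the training and evaluation commands take no other arguments.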

Evaluation

Evaluate the NYUv2 model:

python iebins/eval.py configs/arguments_eval_nyu.txt

Evaluate the NYUv2 model on the SUN RGB-D dataset:

python iebins/eval_sun.py configs/arguments_eval_sun.txt

Evaluate the KITTI_Eigen model:

python iebins/eval.py configs/arguments_eval_kittieigen.txt

To generate predictions for the KITTI online evaluation with the KITTI_Official model:

python iebins_kittiofficial/test.py --data_path <path to dataset> --filenames_file ./data_splits/kitti_official_test.txt --max_depth 80 --checkpoint_path <path to pretrained checkpoint> --dataset kitti --do_kb_crop
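The `--do_kb_crop` flag refers to the KITTI benchmark crop. Assuming it matches the BTS convention (a 352 × 1216 window aligned to the bottom edge and centered horizontally), it can be sketched in NumPy as follows; `kb_crop` is a hypothetical name, not the repository's function.

```python
import numpy as np

# KITTI benchmark crop size (height, width), assumed from BTS-style code.
KB_HEIGHT, KB_WIDTH = 352, 1216


def kb_crop(image: np.ndarray) -> np.ndarray:
    """Crop an H x W (x C) array to 352 x 1216, bottom-aligned and horizontally centered."""
    height, width = image.shape[:2]
    top = height - KB_HEIGHT           # keep the bottom rows (road and objects)
    left = (width - KB_WIDTH) // 2     # center the window horizontally
    return image[top:top + KB_HEIGHT, left:left + KB_WIDTH]
```

KITTI frames are roughly 375 × 1242 pixels and vary slightly between drives, so cropping gives every input the same size.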

Gradio Demo

- Install Gradio and the other required libraries

pip install gradio gradio_imageslider timm -q

- Run the demo

python app.py

Qualitative Depth and Point Cloud Results

You can download the qualitative depth results of IEBins, NDDepth, NeWCRFs, PixelFormer, AdaBins and BTS on the NYUv2 and KITTI_Eigen test sets from here. The qualitative point cloud results of the same methods on the NYUv2 test set are available from here.

To generate these results yourself, refer to test.py.
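Point clouds like these are typically obtained by back-projecting each depth map through the camera intrinsics. The pinhole-camera sketch below assumes a metric depth map and known intrinsics `fx`, `fy`, `cx`, `cy` (take them from your dataset's calibration); it is not the exact code used to produce the released results, and `depth_to_point_cloud` is a hypothetical name.

```python
import numpy as np


def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project an H x W metric depth map to an N x 3 array of camera-frame points.

    Pixels with depth <= 0 are treated as invalid and dropped.
    """
    height, width = depth.shape
    # u holds each pixel's column index, v its row index (both H x W).
    u, v = np.meshgrid(np.arange(width), np.arange(height))
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]
```

Since open3d is already in the installation list, the result can be saved with `pcd = open3d.geometry.PointCloud()`, `pcd.points = open3d.utility.Vector3dVector(points)` and `open3d.io.write_point_cloud("cloud.ply", pcd)`.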

If you want to perform inference on a single image, run:

python iebins/inference_single_image.py --dataset <kitti or nyu> --image_path <path to image> --checkpoint_path <path to pretrained checkpoint> --max_depth <80 or 10>

Use a max depth of 80 for KITTI and 10 for NYUv2. The script then produces the qualitative depth result for the image.
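If you want to save your own visualization of a predicted depth array, one simple option is to scale it by the same max depth passed to the script. The helper below is a hypothetical sketch, not the script's own output code.

```python
import numpy as np


def depth_to_uint8(depth, max_depth):
    """Map depth in [0, max_depth] to 0..255 for display (near = dark, far = bright).

    Values outside the range are clipped.
    """
    scaled = np.clip(depth / max_depth, 0.0, 1.0)
    return np.round(scaled * 255).astype(np.uint8)
```

For a colored map, `matplotlib.pyplot.imsave(path, depth, cmap="magma", vmin=0, vmax=max_depth)` does the scaling and colormapping in one call.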

Models

| Model | Abs Rel | Sq Rel | RMSE | a1 | a2 | a3 | Link |
|---|---|---|---|---|---|---|---|
| NYUv2 (Swin-L) | 0.087 | 0.040 | 0.314 | 0.936 | 0.992 | 0.998 | [Google] [Baidu] |
| NYUv2 (Swin-T) | 0.108 | 0.061 | 0.375 | 0.893 | 0.984 | 0.996 | [Google] [Baidu] |
| KITTI_Eigen (Swin-L) | 0.050 | 0.142 | 2.011 | 0.978 | 0.998 | 0.999 | [Google] [Baidu] |
| KITTI_Eigen (Swin-T) | 0.056 | 0.169 | 2.205 | 0.970 | 0.996 | 0.999 | [Google] [Baidu] |

| Model | SILog | Abs Rel | Sq Rel | RMSE | a1 | a2 | a3 | Link |
|---|---|---|---|---|---|---|---|---|
| KITTI_Official (Swinv2-L) | 7.48 | 5.20 | 0.79 | 2.34 | 0.974 | 0.996 | 0.999 | [Google] |
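The columns above are the usual monocular depth metrics: a1, a2 and a3 are the fractions of pixels whose ratio max(gt/pred, pred/gt) is below 1.25, 1.25² and 1.25³. The sketch below computes them with the definitions common in BTS-derived evaluation code. It assumes a ground-truth value of 0 marks invalid pixels and omits any dataset-specific crop or depth cap that eval.py may apply; `compute_depth_metrics` is a hypothetical name.

```python
import numpy as np


def compute_depth_metrics(gt, pred):
    """Standard monocular depth metrics over valid pixels (gt > 0).

    gt, pred: arrays of the same shape in metres; pred must be positive.
    """
    valid = gt > 0
    gt, pred = gt[valid], pred[valid]

    # Threshold accuracies (delta < 1.25, 1.25^2, 1.25^3).
    ratio = np.maximum(gt / pred, pred / gt)
    a1 = np.mean(ratio < 1.25)
    a2 = np.mean(ratio < 1.25 ** 2)
    a3 = np.mean(ratio < 1.25 ** 3)

    abs_rel = np.mean(np.abs(gt - pred) / gt)
    sq_rel = np.mean((gt - pred) ** 2 / gt)
    rmse = np.sqrt(np.mean((gt - pred) ** 2))

    # Scale-invariant log error, scaled by 100; max() guards against tiny
    # negative values caused by floating-point rounding.
    err = np.log(pred) - np.log(gt)
    silog = np.sqrt(max(np.mean(err ** 2) - np.mean(err) ** 2, 0.0)) * 100

    return {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse,
            "a1": a1, "a2": a2, "a3": a3, "silog": silog}
```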

Citation

If you find our work useful in your research, please consider citing our paper:

@inproceedings{shao2023IEBins,
  title={IEBins: Iterative Elastic Bins for Monocular Depth Estimation},
  author={Shao, Shuwei and Pei, Zhongcai and Wu, Xingming and Liu, Zhong and Chen, Weihai and Li, Zhengguo},
  booktitle={Advances in Neural Information Processing Systems (NeurIPS)},
  year={2023}
}

Contact

If you have any questions, please feel free to contact swshao@buaa.edu.cn.

Acknowledgement

Our code is based on the implementations of NeWCRFs and BTS. We thank the authors for their excellent work.