license: cc-by-sa-3.0
language:
- de

xLSTM Model trained on German Wikipedia

Research & development of an xLSTM model trained on German Wikipedia.

The Flair team is currently working on the integration of xLSTM (both LM training and fine-tuning models for downstream tasks).

For pretraining this xLSTM model, we use this fork (from Patrick Haller) of the awesome Helibrunna library.

Initially, we integrated xLSTM model training into Flair - for more information about this, please refer to the archived flair-old branch of this repository.

Changelog

- 28.08.2024: Model training is now done with the Helibrunna fork - find it here.

- 10.06.2024: Initial version. xLSTM was trained with the Flair library, see this old branch.

Training

The current model was trained with commit f66cc55 from the main branch of the forked Helibrunna repo.

The xlstm library needs to be installed manually - also make sure that Ninja is installed (pip3 install Ninja).
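
A quick way to verify both prerequisites before starting a run is a small check script. This is only a sketch: the helper name `check_training_prerequisites` is made up for illustration, and it assumes that the library is importable as `xlstm` and that Ninja is exposed as a `ninja` executable on the `PATH`.

```python
import importlib.util
import shutil


def check_training_prerequisites():
    """Report whether the xlstm package and the ninja build tool can be found.

    Assumptions: the package is importable as `xlstm`, and Ninja is
    available as a `ninja` executable on the PATH.
    """
    return {
        # find_spec returns None when a top-level package is not installed
        "xlstm": importlib.util.find_spec("xlstm") is not None,
        # shutil.which returns None when no matching executable is on the PATH
        "ninja": shutil.which("ninja") is not None,
    }


if __name__ == "__main__":
    for name, found in check_training_prerequisites().items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

If either entry is reported as missing, install it before launching the training.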

The German Wikipedia dump from this repository is used.

The following training configuration is used:

description: "Train a wikipedia xLSTM"

training:
  model_name: "german_wikipedia"
  batch_size: 10
  lr: 6e-4
  lr_warmup_steps: 4584
  lr_decay_until_steps: "auto"
  lr_decay_factor: 0.001
  weight_decay: 0.1
  amp_precision: bfloat16
  weight_precision: float32
  enable_mixed_precision: true
  num_epochs: 1
  output_dir: "./output"
  save_every_step: 2000
  log_every_step: 10
  generate_every_step: 5000
  wandb_project: "xlstm"
  gradient_clipping: "auto"
  # wandb_project: "lovecraftxlstm"

model:
  num_blocks: 24
  embedding_dim: 768
  mlstm_block:
    mlstm:
      num_heads: 4
  slstm_block: {}
  slstm_at: []
  context_length: 512

dataset:
  output_path: "./output/german-wikipedia-dataset"
  hugging_face_id: ["stefan-it/dewiki-20230701"]
  split: "train" # Also subsetting is possible: "train[:100000]"
  shuffle: False
  seed: 42

tokenizer:
  type: "pretrained"
  pretrained_class: "LlamaTokenizer"
  pretrained_id: "meta-llama/Llama-2-7b-hf"
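
To get a feeling for the learning-rate settings above, the schedule can be sketched in a few lines of Python. This is a rough illustration and not the trainer's actual code. It assumes a linear warmup over `lr_warmup_steps`, followed by a cosine decay down to `lr * lr_decay_factor`. It also assumes that `lr_decay_until_steps: "auto"` resolves to the total number of training steps. The function name `lr_at_step` and the value of `TOTAL_STEPS` are hypothetical.

```python
import math


def lr_at_step(step, total_steps, peak_lr=6e-4, warmup_steps=4584, decay_factor=0.001):
    """Learning rate at a given step.

    Assumed shape (not confirmed by the source): linear warmup to peak_lr,
    then cosine decay to peak_lr * decay_factor at total_steps.
    """
    min_lr = peak_lr * decay_factor
    if step < warmup_steps:
        # Linear warmup from 0 up to peak_lr
        return peak_lr * step / warmup_steps
    if step >= total_steps:
        # After the decay phase the rate stays at its floor
        return min_lr
    # Fraction of the decay phase completed, in [0, 1)
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Hypothetical total step count; the real value depends on dataset size and batch size.
TOTAL_STEPS = 100_000

for step in (0, 2292, 4584, 50_000, TOTAL_STEPS):
    print(f"step {step:>7}: lr = {lr_at_step(step, TOTAL_STEPS):.3e}")
```

Under these assumptions the rate peaks at 6e-4 once warmup ends and bottoms out at 6e-7, which is `lr` multiplied by `lr_decay_factor`.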

Caveats

Notice: this model integration is under heavy development, and we are still in the process of finding good hyper-parameters. Downstream experiments are coming very soon.

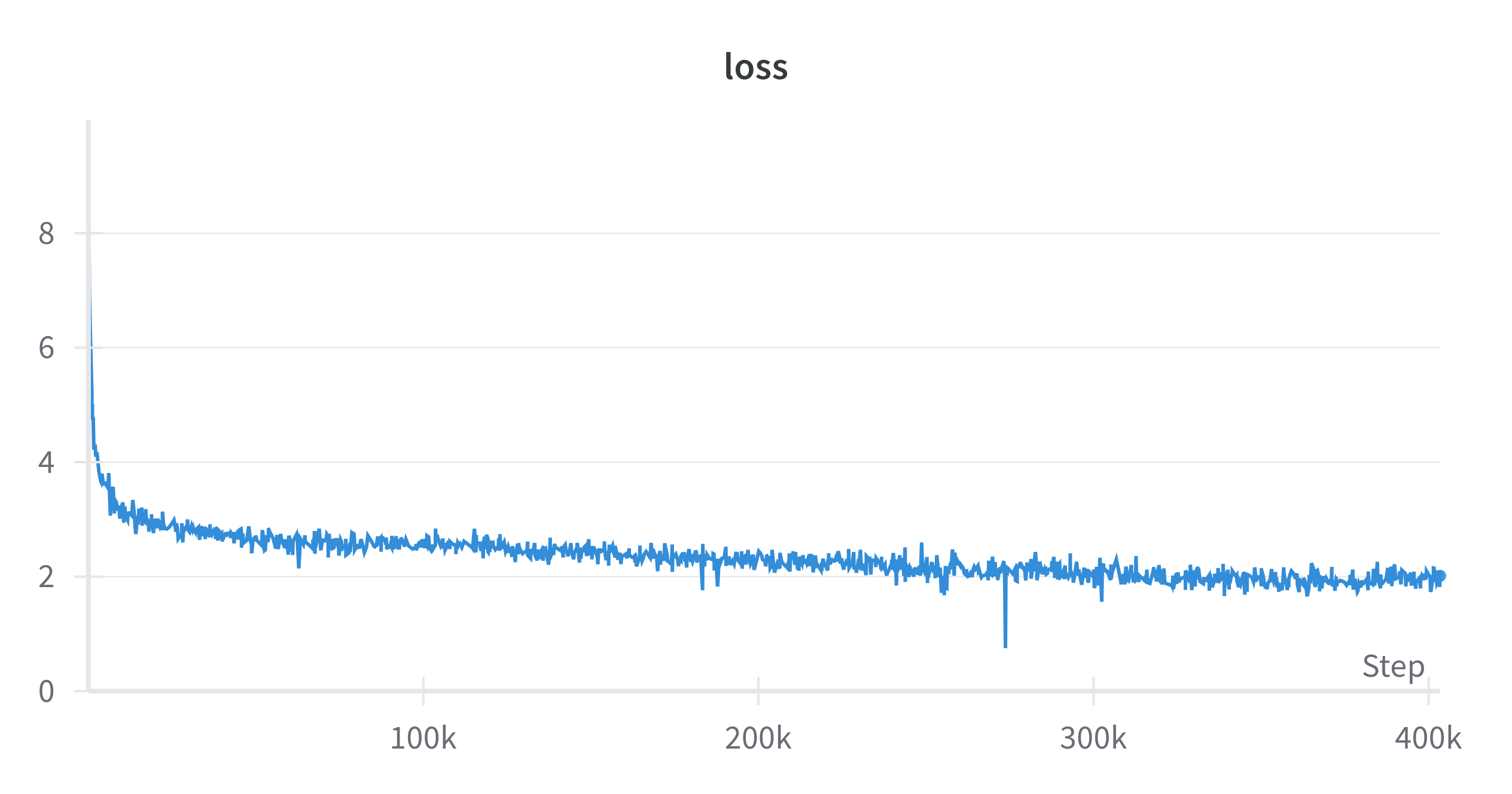

Unfortunately, NaNs are occurring during training: