Marigold Pipelines for Computer Vision Tasks

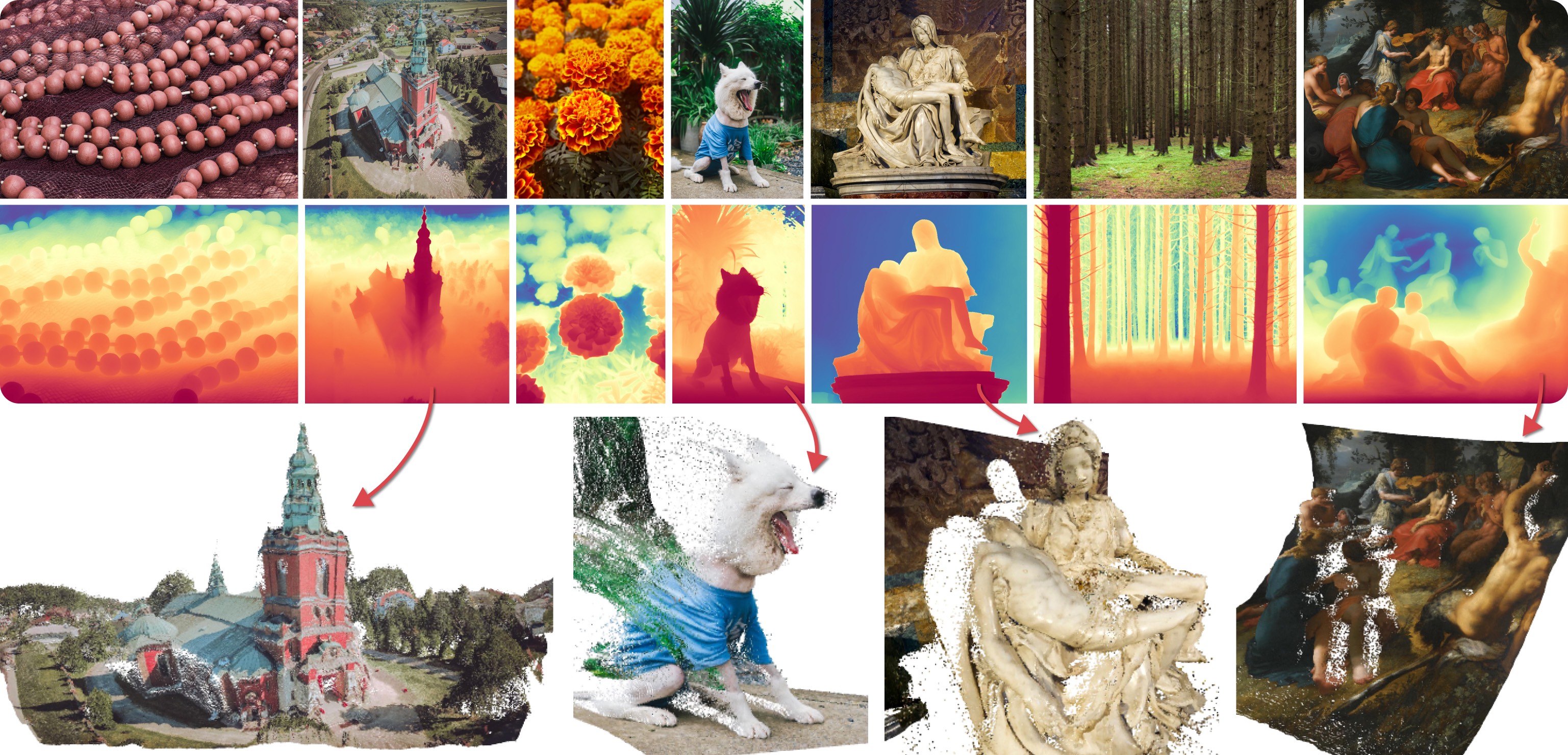

Marigold was proposed in Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation, a CVPR 2024 Oral paper by Bingxin Ke, Anton Obukhov, Shengyu Huang, Nando Metzger, Rodrigo Caye Daudt, and Konrad Schindler. The idea is to repurpose the rich generative prior of Text-to-Image Latent Diffusion Models (LDMs) for traditional computer vision tasks. Initially, this idea was explored to fine-tune Stable Diffusion for Monocular Depth Estimation, as shown in the teaser above. Later,

- Tianfu Wang trained the first Latent Consistency Model (LCM) of Marigold, which unlocked fast single-step inference;

- Kevin Qu extended the approach to Surface Normals Estimation;

- Anton Obukhov contributed the pipelines and documentation to diffusers (enabled and supported by YiYi Xu and Sayak Paul).

The abstract from the paper is:

Monocular depth estimation is a fundamental computer vision task. Recovering 3D depth from a single image is geometrically ill-posed and requires scene understanding, so it is not surprising that the rise of deep learning has led to a breakthrough. The impressive progress of monocular depth estimators has mirrored the growth in model capacity, from relatively modest CNNs to large Transformer architectures. Still, monocular depth estimators tend to struggle when presented with images with unfamiliar content and layout, since their knowledge of the visual world is restricted by the data seen during training, and challenged by zero-shot generalization to new domains. This motivates us to explore whether the extensive priors captured in recent generative diffusion models can enable better, more generalizable depth estimation. We introduce Marigold, a method for affine-invariant monocular depth estimation that is derived from Stable Diffusion and retains its rich prior knowledge. The estimator can be fine-tuned in a couple of days on a single GPU using only synthetic training data. It delivers state-of-the-art performance across a wide range of datasets, including over 20% performance gains in specific cases. Project page: https://marigoldmonodepth.github.io.

Available Pipelines

Each pipeline supports one Computer Vision task: it takes an RGB image as input and produces a prediction of the modality of interest, such as a depth map of the input image. Currently, the following tasks are implemented:

| Pipeline | Predicted Modalities | Demos |
|---|---|---|
| MarigoldDepthPipeline | Depth, Disparity | Fast Demo (LCM), Slow Original Demo (DDIM) |
| MarigoldNormalsPipeline | Surface normals | Fast Demo (LCM) |
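As a minimal sketch of how such a pipeline might be called for depth estimation, the snippet below wraps the call in a small helper. The helper name `estimate_depth`, the checkpoint ID `prs-eth/marigold-depth-lcm-v1-0`, the `fp16` variant, and the use of a CUDA GPU are assumptions made for illustration and are not stated on this page; check the PRS-ETH organization for the checkpoints that are actually available.

```python
def estimate_depth(image_path_or_url, checkpoint="prs-eth/marigold-depth-lcm-v1-0"):
    """Hypothetical helper: run Marigold depth estimation on a single RGB image.

    Assumes a CUDA GPU and an fp16 variant of the checkpoint (both assumptions).
    """
    # Imports are deferred so that defining the helper does not require
    # torch or diffusers to be installed.
    import torch
    from diffusers import MarigoldDepthPipeline
    from diffusers.utils import load_image

    # Load the pipeline in half precision and move it to the GPU.
    pipe = MarigoldDepthPipeline.from_pretrained(
        checkpoint, variant="fp16", torch_dtype=torch.float16
    ).to("cuda")

    # Load the RGB input from a local path or a URL.
    image = load_image(image_path_or_url)

    # Only the image is passed; num_inference_steps is left unset on purpose.
    output = pipe(image)

    # Turn the raw prediction into a list of PIL images for viewing.
    visualization = pipe.image_processor.visualize_depth(output.prediction)
    return output.prediction, visualization[0]
```

Calling `estimate_depth("path/to/image.jpg")` (the path is a placeholder) would return the raw depth prediction together with a colorized image that can be saved with `.save(...)`. A surface normals version would presumably follow the same pattern with MarigoldNormalsPipeline and a normals checkpoint.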

Available Checkpoints

The original checkpoints can be found under the PRS-ETH Hugging Face organization.

Make sure to check out the Schedulers guide to learn how to explore the tradeoff between scheduler speed and quality, and see the reuse components across pipelines section to learn how to efficiently load the same components into multiple pipelines. To learn more about reducing the memory usage of this pipeline, refer to the "Reduce memory usage" section.

Marigold pipelines were designed and tested only with DDIMScheduler and LCMScheduler. Depending on the scheduler, the number of inference steps required to get reliable predictions varies, and there is no universal value that works best across schedulers. Because of that, the default value of num_inference_steps in the __call__ method of the pipeline is set to None (see the API reference). Unless set explicitly, its value will be taken from the checkpoint configuration model_index.json. This is done to ensure high-quality predictions when calling the pipeline with just the image argument.
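The fallback described above can be pictured with a small, library-independent sketch. The function `resolve_num_inference_steps`, the configuration key `default_denoising_steps`, and the step counts used below are illustrative assumptions, not a copy of the pipeline's actual code or of any checkpoint's real configuration.

```python
from typing import Optional


def resolve_num_inference_steps(explicit: Optional[int], checkpoint_config: dict) -> int:
    """Hypothetical helper: pick the number of denoising steps.

    An explicitly passed value wins; otherwise the checkpoint's own default is used.
    """
    if explicit is not None:
        if explicit < 1:
            raise ValueError("num_inference_steps must be a positive integer")
        return explicit

    # Assumed key name for the per-checkpoint default stored in model_index.json.
    default = checkpoint_config.get("default_denoising_steps")
    if default is None:
        raise ValueError("no explicit value given and the checkpoint defines no default")
    return default


# Illustrative configuration for a few-step (LCM-style) checkpoint; the value is made up.
example_config = {"default_denoising_steps": 4}

steps_from_config = resolve_num_inference_steps(None, example_config)
steps_from_caller = resolve_num_inference_steps(10, example_config)
print(steps_from_config, steps_from_caller)
```

The point of this design is that each checkpoint carries a step count suited to the scheduler it was prepared for, so a caller who passes only the image still gets a sensible setting, while an explicit `num_inference_steps` remains available for exploring the speed and quality tradeoff.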

See also Marigold usage examples.

MarigoldDepthPipeline

[[autodoc]] MarigoldDepthPipeline
	- all
	- __call__

MarigoldNormalsPipeline

[[autodoc]] MarigoldNormalsPipeline
	- all
	- __call__

MarigoldDepthOutput

[[autodoc]] pipelines.marigold.pipeline_marigold_depth.MarigoldDepthOutput

MarigoldNormalsOutput

[[autodoc]] pipelines.marigold.pipeline_marigold_normals.MarigoldNormalsOutput