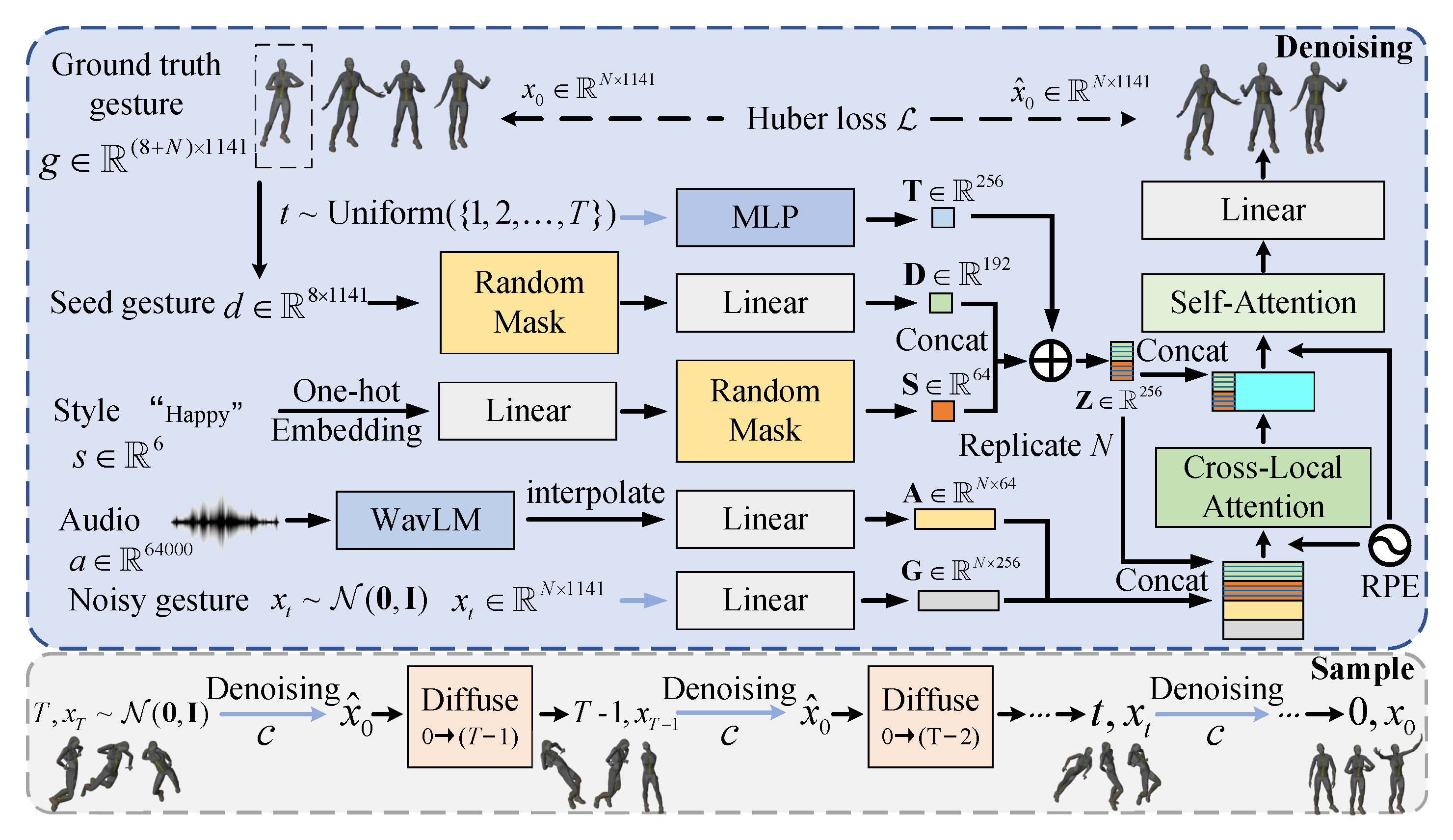

DiffuseStyleGesture: Stylized Audio-Driven Co-Speech Gesture Generation with Diffusion Models

News

📢 9/May/23 - First release: arXiv paper, code, and pre-trained models.

1. Getting started

This code was tested on NVIDIA GeForce RTX 2080 Ti and requires:

- conda3 or miniconda3

conda create -n DiffuseStyleGesture python=3.7

conda activate DiffuseStyleGesture

pip install -r requirements.txt
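Before running anything, it can help to confirm that the interpreter in the activated environment is the expected Python 3.7 and that key packages can be imported. Below is a minimal sketch that uses only the standard library. The module names in `EXPECTED_MODULES` are assumptions, so replace them with the entries actually listed in requirements.txt.

```python
import importlib.util
import sys

# Assumption: illustrative module names only; take the real list from requirements.txt.
EXPECTED_MODULES = ["numpy", "torch", "yaml"]


def check_environment(modules=EXPECTED_MODULES, required=(3, 7)):
    """Report the interpreter version and which top-level modules are not importable."""
    version = tuple(sys.version_info[:2])
    return {
        "python": version,
        "python_matches": version == tuple(required),
        # find_spec returns None when a top-level module cannot be found
        "missing": [m for m in modules if importlib.util.find_spec(m) is None],
    }


if __name__ == "__main__":
    print(check_environment())
```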

2. Quick Start

- Download the pre-trained model from Tsinghua Cloud or Google Cloud and put it into ./main/mydiffusion_zeggs/.
- Download WavLM Large and put it into ./main/mydiffusion_zeggs/WavLM/.
- cd ./main/mydiffusion_zeggs/ and run:

python sample.py --config=./configs/DiffuseStyleGesture.yml --no_cuda 0 --gpu 0 --model_path './model000450000.pt' --audiowavlm_path "./015_Happy_4_x_1_0.wav" --max_len 320
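To sample several audio files in one go, you can build the command above programmatically. The Python sketch below rebuilds the same argument list for a single input and adds a pre-flight check for the checkpoint and the WavLM folder. The helper names `build_sample_cmd` and `missing_inputs` are hypothetical, and the sketch assumes it runs from `./main/mydiffusion_zeggs/`, just like the command itself.

```python
import shlex
from pathlib import Path


def build_sample_cmd(wav_path, model_path="./model000450000.pt",
                     config="./configs/DiffuseStyleGesture.yml",
                     gpu=0, max_len=320):
    """Return the argv list for one sample.py run, using the flags shown above."""
    return [
        "python", "sample.py",
        f"--config={config}",
        "--no_cuda", "0",
        "--gpu", str(gpu),
        "--model_path", str(model_path),
        "--audiowavlm_path", str(wav_path),
        "--max_len", str(max_len),
    ]


def missing_inputs(root="."):
    """Hypothetical pre-flight check: list expected inputs that do not exist under root."""
    root = Path(root)
    expected = [root / "model000450000.pt", root / "WavLM"]
    return [str(p) for p in expected if not p.exists()]


if __name__ == "__main__":
    cmd = build_sample_cmd("./015_Happy_4_x_1_0.wav")
    print("missing:", missing_inputs("."))
    # shlex.quote (rather than shlex.join) keeps this usable on Python 3.7
    print(" ".join(shlex.quote(a) for a in cmd))
```

For example, you could call `subprocess.run(build_sample_cmd(wav), check=True)` for each `wav` in `sorted(Path("my_wavs").glob("*.wav"))`, where `my_wavs` is a placeholder folder name.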

You will get the .bvh file named yyyymmdd_hhmmss_smoothing_SG_minibatch_320_[1, 0, 0, 0, 0, 0]_123456.bvh in the sample_dir folder, which can then be visualized using Blender.
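Before opening the result in Blender, you can inspect the BVH header to confirm how many frames were generated. The sketch below reads the standard `Frames:` and `Frame Time:` lines of a BVH MOTION section. It also parses the output file name with a regular expression derived only from the example name above. Reading the bracketed list as a condition vector is an assumption, and the trailing number is left uninterpreted.

```python
import re


def read_bvh_motion_header(lines):
    """Return (num_frames, frame_time) from the MOTION section of BVH text lines."""
    frames = frame_time = None
    for raw in lines:
        line = raw.strip()
        if line.startswith("Frames:"):
            frames = int(line.split(":", 1)[1])
        elif line.startswith("Frame Time:"):
            frame_time = float(line.split(":", 1)[1])
            break  # per-frame channel data follows; no need to read it
    if frames is None or frame_time is None:
        raise ValueError("no 'Frames:' / 'Frame Time:' header found")
    return frames, frame_time


# Pattern inferred only from the example name in this README (an assumption).
SAMPLE_NAME_RE = re.compile(
    r"^(?P<date>\d{8})_(?P<time>\d{6})_smoothing_SG_minibatch_(?P<max_len>\d+)"
    r"_\[(?P<vector>[\d, ]+)\]_(?P<suffix>\d+)\.bvh$"
)


def parse_sample_name(name):
    """Split a generated file name into its fields, or return None if it does not match."""
    m = SAMPLE_NAME_RE.match(name)
    if m is None:
        return None
    fields = m.groupdict()
    fields["max_len"] = int(fields["max_len"])
    fields["vector"] = [int(v) for v in fields["vector"].split(",")]
    return fields
```

Usage: `with open(path) as f: n, dt = read_bvh_motion_header(f)` gives the clip duration as `n * dt` seconds.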

3. Train your own model

(1) Get ZEGGS dataset

Data preparation is the same as in ZEGGS. An example follows.

Download the original ZEGGS dataset from here and put it in the ./ubisoft-laforge-ZeroEGGS-main/data/ folder.

Then cd ./ubisoft-laforge-ZeroEGGS-main/ZEGGS and run python data_pipeline.py to process the dataset.

You will get ./ubisoft-laforge-ZeroEGGS-main/data/processed_v1/trimmed/train/ and ./ubisoft-laforge-ZeroEGGS-main/data/processed_v1/trimmed/test/ folders.
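To sanity-check the processed output, you can pair each audio clip with its motion clip. The sketch below assumes, without confirmation from this README, that the `train/` and `test/` folders contain `.wav` and `.bvh` files sharing the same file stem. The hypothetical `pair_clips` function accepts any iterable of paths, so you can feed it from `Path.rglob`.

```python
from pathlib import Path


def pair_clips(paths):
    """Map each stem to its (wav, bvh) paths; stems missing either file are skipped."""
    wavs, bvhs = {}, {}
    for p in map(Path, paths):
        suffix = p.suffix.lower()
        if suffix == ".wav":
            wavs[p.stem] = p
        elif suffix == ".bvh":
            bvhs[p.stem] = p
    common = sorted(wavs.keys() & bvhs.keys())
    return {stem: (wavs[stem], bvhs[stem]) for stem in common}
```

Usage: `pair_clips(Path("./ubisoft-laforge-ZeroEGGS-main/data/processed_v1/trimmed/train").rglob("*"))`. If some stems are missing from the result, the audio and motion files are not one-to-one, at least under this naming assumption.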

If you find it difficult to obtain and process the data, you can instead download the data already processed with the ZEGGS pipeline from Tsinghua Cloud or Baidu Cloud and put it in the ./ubisoft-laforge-ZeroEGGS-main/data/processed_v1/trimmed/ folder.

(2) Process ZEGGS dataset

cd ./main/mydiffusion_zeggs/

python zeggs_data_to_lmdb.py
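This README does not describe what `zeggs_data_to_lmdb.py` does internally. As a hypothetical illustration of a step that gesture preprocessing scripts commonly perform, the sketch below cuts a long sequence into fixed-length, overlapping windows. It then maps each motion window to the matching audio sample range. The window length, stride, frame rate, and sample rate are placeholder values, not the script's actual settings.

```python
def sliding_windows(num_frames, window, stride):
    """Return (start, end) frame ranges of all full windows over a sequence."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    # a sequence shorter than one window yields no clips
    return [(s, s + window) for s in range(0, num_frames - window + 1, stride)]


def to_audio_range(start, end, fps, sample_rate):
    """Convert a motion frame range to the corresponding audio sample range."""
    return start * sample_rate // fps, end * sample_rate // fps


if __name__ == "__main__":
    FPS, SR = 20, 16000  # placeholder rates, not taken from the repository
    for start, end in sliding_windows(num_frames=100, window=40, stride=20):
        print((start, end), to_audio_range(start, end, FPS, SR))
```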

(3) Train

python end2end.py --config=./configs/DiffuseStyleGesture.yml --no_cuda 0 --gpu 0
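Because the model is a diffusion model, training corrupts clean motion with noise at a randomly chosen diffusion step. The NumPy sketch below shows the generic DDPM forward process q(x_t | x_0) with a linear beta schedule. Several details are assumptions here rather than values taken from `DiffuseStyleGesture.yml`: the schedule, the number of steps, the tensor shape, and whether the network predicts the noise or the clean sample.

```python
import numpy as np


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products abar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def q_sample(x0, t, alpha_bars, rng):
    """Draw x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) * I); also return the noise used."""
    noise = rng.standard_normal(x0.shape)
    abar = alpha_bars[t]
    return np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * noise, noise


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    alpha_bars = linear_alpha_bars()
    x0 = np.zeros((320, 8))  # placeholder (frames, features) motion clip
    t = int(rng.integers(len(alpha_bars)))
    xt, eps = q_sample(x0, t, alpha_bars, rng)
    print(t, xt.shape)
```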

The model will be saved in the ./main/mydiffusion_zeggs/zeggs_mymodel3_wavlm/ folder.
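Checkpoints appear to follow the `model<step>.pt` naming seen in the Quick Start (`model000450000.pt`). Assuming the files in the output folder follow that pattern, this sketch picks the checkpoint with the highest step number so you can pass it to `--model_path`. The helper name `latest_checkpoint` is hypothetical.

```python
import re
from pathlib import Path

# Assumption: checkpoint names look like model000450000.pt
CKPT_RE = re.compile(r"^model(\d+)\.pt$")


def latest_checkpoint(names):
    """Return the entry with the largest step number, or None if nothing matches."""
    best = None
    for name in names:
        m = CKPT_RE.match(Path(name).name)
        if m and (best is None or int(m.group(1)) > best[0]):
            best = (int(m.group(1)), name)
    return None if best is None else best[1]
```

Usage: `latest_checkpoint(Path("./zeggs_mymodel3_wavlm").iterdir())`, run from `./main/mydiffusion_zeggs/`, returns the `Path` of the newest matching file. Files with other names are ignored.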

Reference

Our work is mainly inspired by: MDM, Text2Gesture, and Listen, denoise, action!

Citation

If you find this code useful in your research, please cite:

@inproceedings{yang2023DiffuseStyleGesture,
  author = {Sicheng Yang and Zhiyong Wu and Minglei Li and Zhensong Zhang and Lei Hao and Weihong Bao and Ming Cheng and Long Xiao},
  title = {DiffuseStyleGesture: Stylized Audio-Driven Co-Speech Gesture Generation with Diffusion Models},
  booktitle = {Proceedings of the 32nd International Joint Conference on Artificial Intelligence, {IJCAI} 2023},
  publisher = {ijcai.org},
  year = {2023},
}

Please feel free to contact us (yangsc21@mails.tsinghua.edu.cn) with any questions or concerns.