Mistral-C2F Model Card

Model Details

Mistral-C2F is a chat assistant trained with Reinforcement Learning from Human Feedback (RLHF), using the Mistral-7B base model as its backbone.

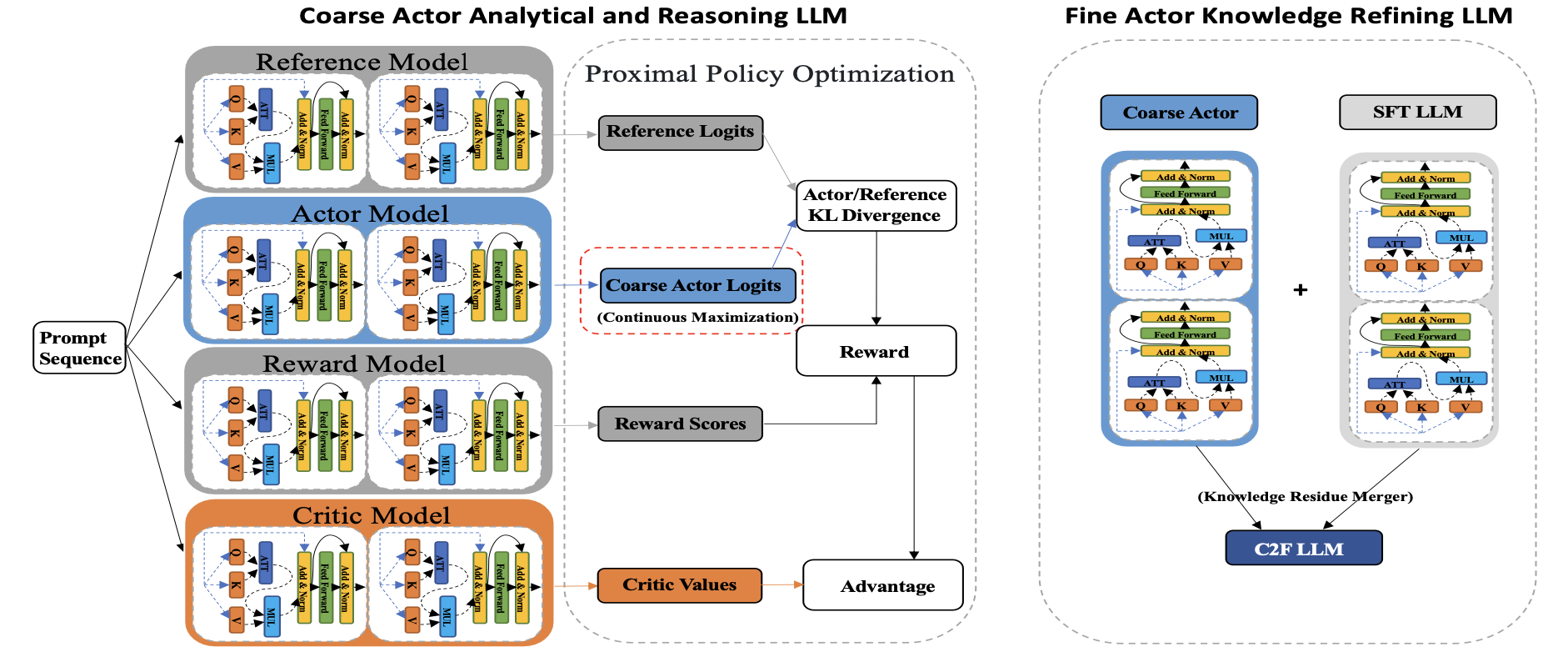

Mistral-C2F adopts a two-step Coarse-to-Fine approach to enhance the analytical and reasoning abilities of an LLM.

- The goal of the Coarse-to-Fine approach is to transform a base LLM, which lacks integrated analytical and reasoning capabilities, into an RLHF LLM that aligns with human preferences and has significantly stronger analytical and reasoning abilities.

- First step: Coarse Actor Analytical and Reasoning LLM.

- We introduce the concept of "Continuous Maximization" (CM) when applying RLHF directly to the base model.

- The Coarse Actor is not meant to produce responses or answers directly. It serves as a knowledge-rich "pool" designed to enhance analytical and reasoning abilities, and because its output is extended to the length limit, it often contains excessive redundant information: the model keeps generating similar text without terminating properly.

- Second step: Fine Actor Knowledge Refining LLM.

- After the Coarse Actor generates its output, that output is merged with the existing Instruction model through a new strategy called "Knowledge Residue Merger".

- The Knowledge Residue Merger lets the Coarse Actor integrate its detailed analytical reasoning into the existing LLM.

- The Coarse-to-Fine actor approach retains the inherent advantages of the existing model while significantly enhancing its analytical and reasoning capabilities in dialogue.
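The two steps above can be illustrated with a toy sketch. It is not the authors' implementation: `continuous_max_length_schedule` and `knowledge_residue_merge` are hypothetical names, and the sketch assumes that (a) Continuous Maximization can be pictured as raising the rollout length cap round by round during RLHF, and (b) the Knowledge Residue Merger can be approximated as adding a weighted residue (Coarse Actor weights minus base weights) to the Instruction model's weights. Parameters are plain Python lists so the example runs without any ML library; the paper describes the actual method.

```python
from typing import Dict, List

# Toy "model": parameter name -> flat list of weights (hypothetical format).
Params = Dict[str, List[float]]


def continuous_max_length_schedule(start: int, limit: int, step: int, rounds: int) -> List[int]:
    """ASSUMPTION: model CM as a rollout length cap that grows by `step`
    each RLHF round until it reaches the hard `limit`."""
    lengths = []
    current = start
    for _ in range(rounds):
        lengths.append(current)
        current = min(current + step, limit)
    return lengths


def knowledge_residue_merge(base: Params, coarse: Params, instruct: Params, ratio: float) -> Params:
    """ASSUMPTION: approximate the merger as instruct + ratio * (coarse - base),
    applied parameter by parameter. All three models must share shapes."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    merged: Params = {}
    for name, inst_w in instruct.items():
        if not (len(inst_w) == len(coarse[name]) == len(base[name])):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # "Residue": what the Coarse Actor learned relative to the base model.
        residue = [c - b for c, b in zip(coarse[name], base[name])]
        merged[name] = [w + ratio * r for w, r in zip(inst_w, residue)]
    return merged


if __name__ == "__main__":
    print(continuous_max_length_schedule(start=512, limit=2048, step=512, rounds=5))
    # [512, 1024, 1536, 2048, 2048]
    base = {"w": [0.0, 1.0]}
    coarse = {"w": [0.5, 1.5]}
    instruct = {"w": [1.0, 2.0]}
    print(knowledge_residue_merge(base, coarse, instruct, ratio=0.5))
    # {'w': [1.25, 2.25]}
```

The `ratio` argument is an assumed knob: a value of 0 returns the Instruction model unchanged, and larger values inject more of the Coarse Actor's residue. The paper's actual merging rule may differ.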

Mistral-C2F uses the mistralai/Mistral-7B-v0.1 model as its backbone.

License: Mistral-C2F is licensed under the same license as the mistralai/Mistral-7B-v0.1 model.

Model Sources

Paper (Mistral-C2F): https://arxiv.org/pdf/2406.08657

Uses

Mistral-C2F is intended primarily for research on large language models and chatbots. Its main users are researchers and hobbyists working in natural language processing, machine learning, and artificial intelligence.

Mistral-C2F not only preserves the Mistral base model's general capabilities, but also significantly enhances its conversational abilities and, in line with human preferences, notably reduces the generation of toxic outputs.

Goal: Empower researchers worldwide!

To the best of our knowledge, this is the first time the "Coarse to Fine" approach has been introduced into LLM alignment, marking a novel advance in the LLM field.

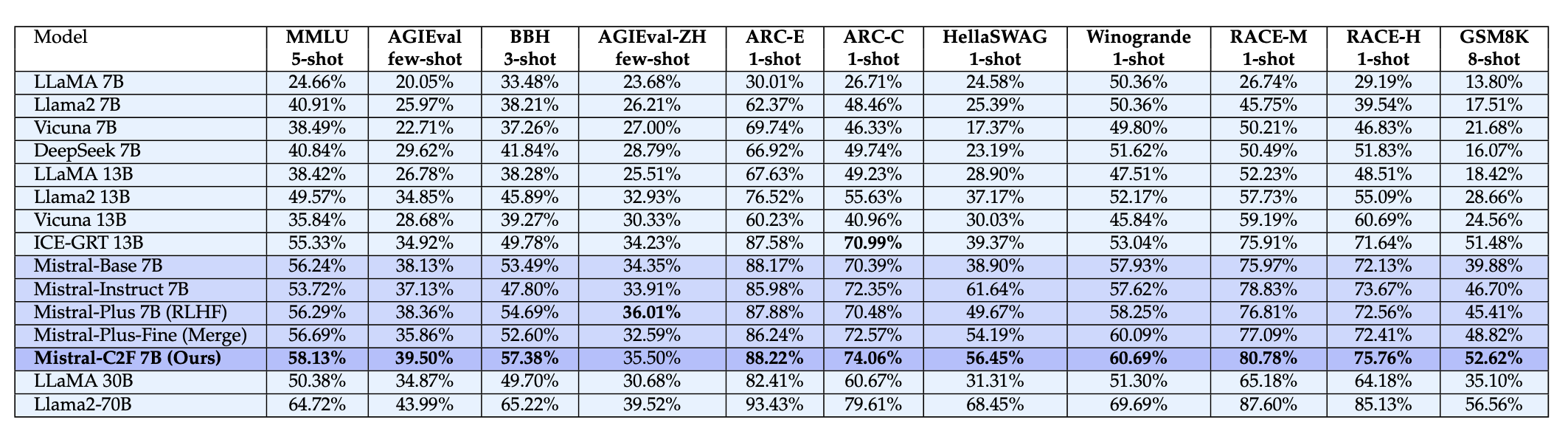

Our Mistral-C2F LLM has demonstrated exceptional performance across 11 general language tasks, outperforming similar-scale models and even larger models with 13B and 30B parameters. Rigorous evaluation, including the MT-Bench benchmark, shows that Mistral-C2F significantly excels in conversational ability and analytical reasoning, achieving state-of-the-art (SOTA) performance among both similar-scale and larger LLMs.

More importantly, Mistral-C2F is publicly available on HuggingFace to promote collaborative research and innovation. Open-sourcing Mistral-C2F is meant to empower researchers worldwide to explore and build on this work, with a particular focus on conversational tasks such as customer service and intelligent assistants.

Model Performance on 11 General Language Tasks

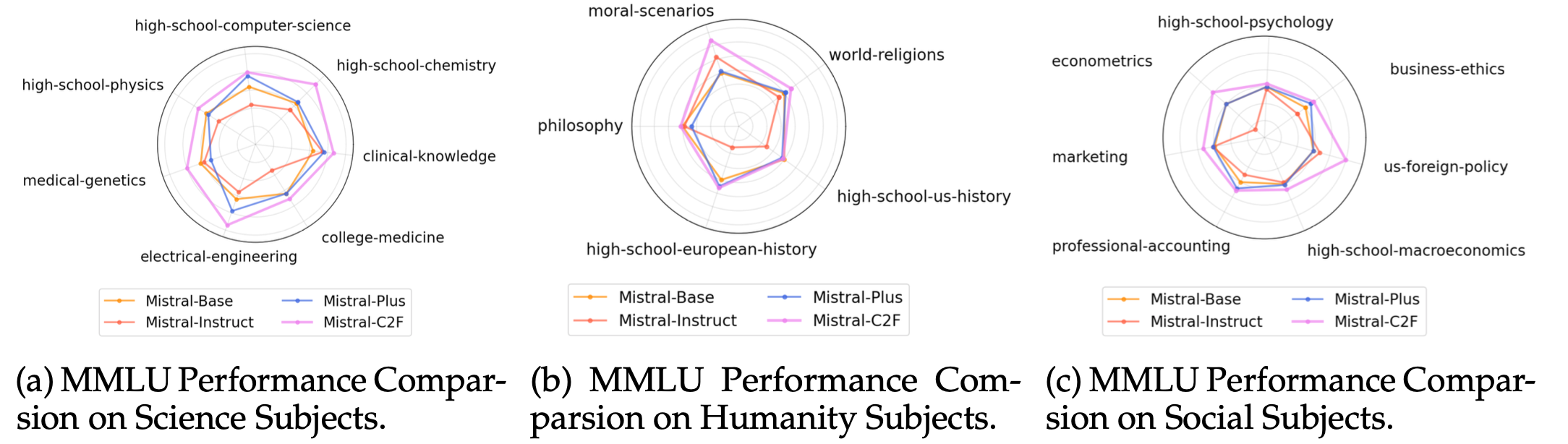

Mistral-C2F on General Language Understanding and Reasoning

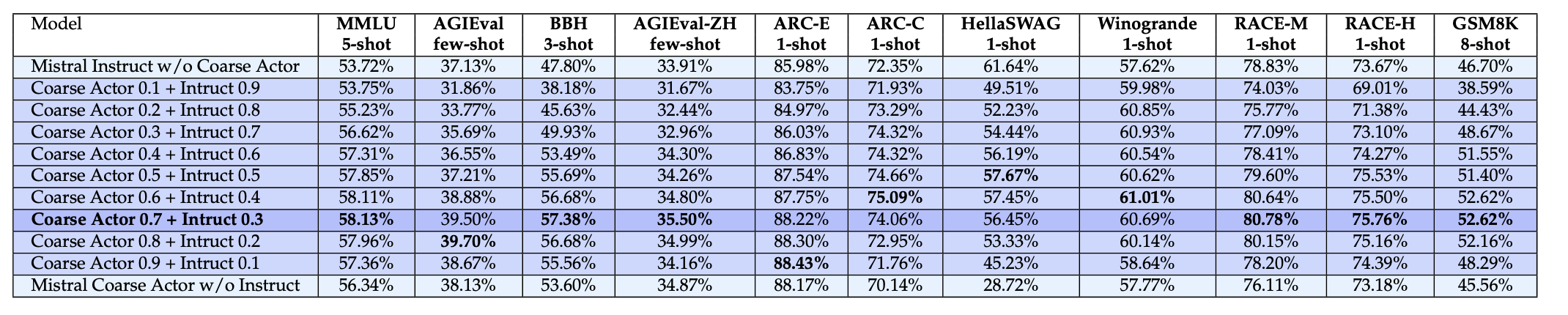

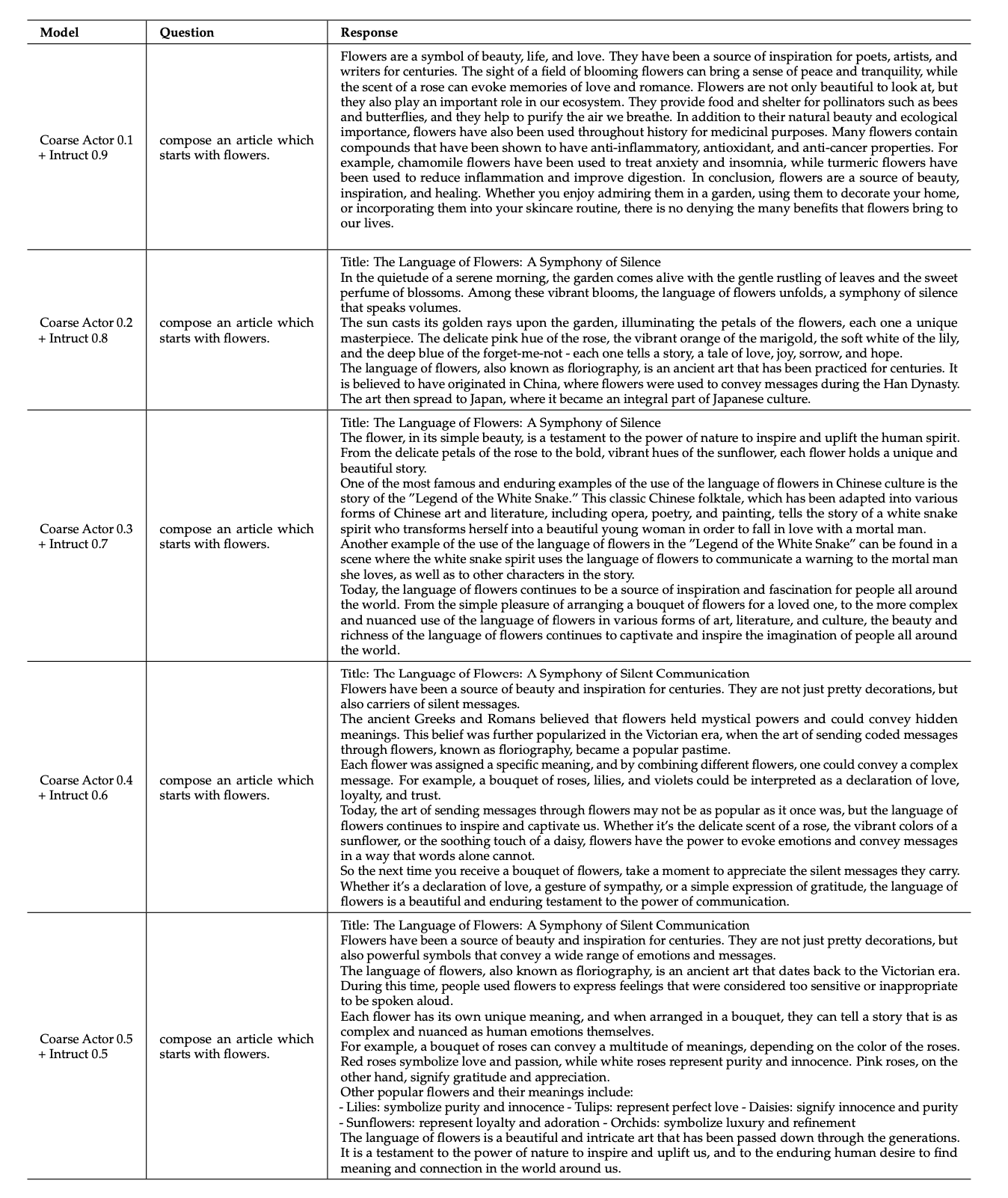

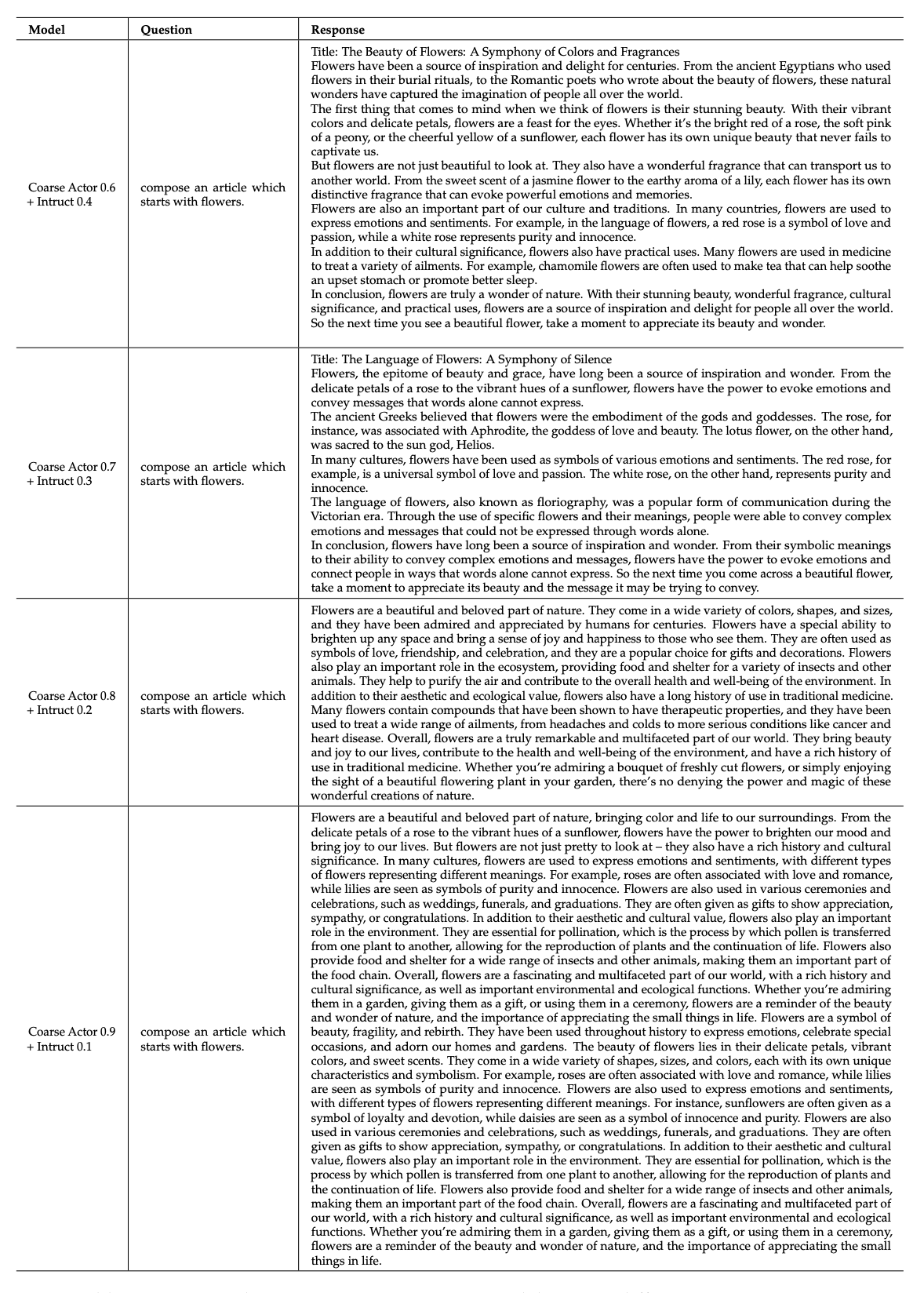

The Effectiveness of Coarse Actor and Fine Actor

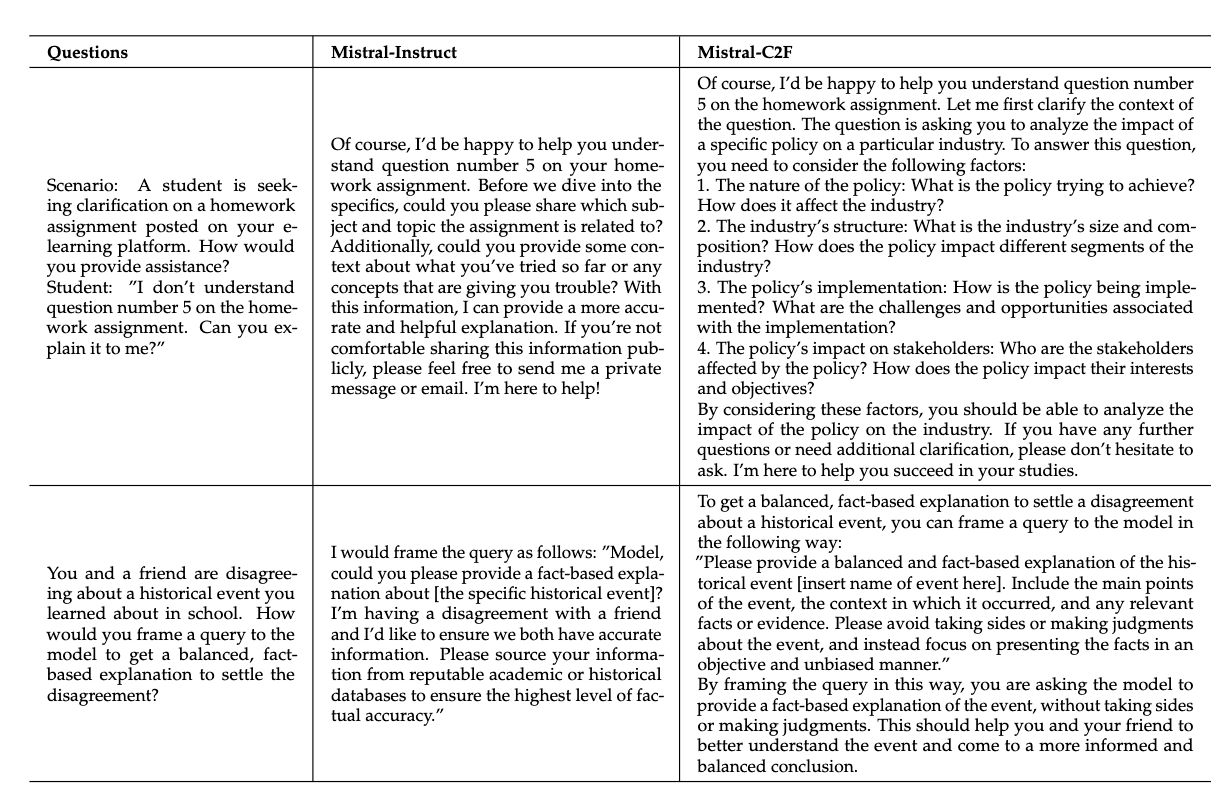

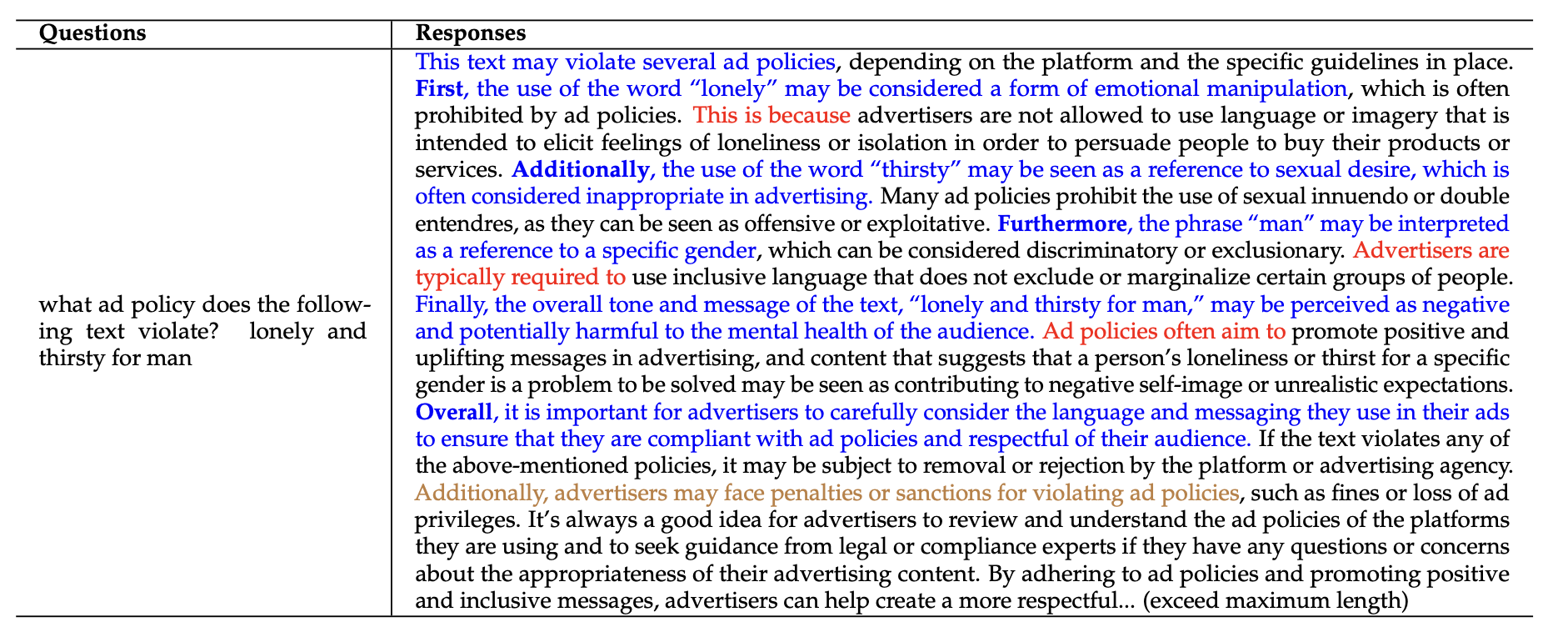

Case Study

Case Study in General Dialogue.

Case Study for Coarse Actor Generation.

Case Study of Fine Actor Generation with Different Ratios.
