html_url (string, 47 to 49 chars) | title (string, 4 to 111 chars) | comments (string, 71 to 20.4k chars) | body (string, 0 to 12.9k chars; may be null) | comment_length_in_words (int64, 16 to 1.61k) | text (string, 100 to 20.5k chars) |

|---|---|---|---|---|---|

https://github.com/huggingface/datasets/pull/2819 | Added XL-Sum dataset | Hi! Ideally everything should be in the same place, so feel free to create a community dataset on the Hub and upload your data files as well as your dataset script (and also the README.md and dataset_infos.json).

The only change you need to make in your dataset script is to use relative paths to your data files instead of URLs.

For example if your repository looks like this:

```
xlsum/
├── data/
│   ├── amharic_XLSum_v2.0.tar.bz2
│   ├── ...
│   └── yoruba_XLSum_v2.0.tar.bz2
├── xlsum.py
├── README.md
└── dataset_infos.json
```

Then you just need to pass `"data/amharic_XLSum_v2.0.tar.bz2"` to `dl_manager.download_and_extract(...)` instead of a URL.

Locally, you can test that it works as expected with:

```python
from datasets import load_dataset
load_dataset("path/to/my/directory/named/xlsum")
```

Then, once it's on the Hub, you can load it with:

```python
from datasets import load_dataset
load_dataset("username/xlsum")
```

Let me know if you have questions :) | Added the XL-Sum dataset published in ACL-IJCNLP 2021 (https://aclanthology.org/2021.findings-acl.413/). The default timeout values in `src/datasets/utils/file_utils.py` were increased to enable downloading from the original Google Drive links. | 137 | text: Added XL-Sum dataset

Added the XL-Sum dataset published in ACL-IJCNLP 2021 (https://aclanthology.org/2021.findings-acl.413/). The default timeout values in `src/datasets/utils/file_utils.py` were increased to enable downloading from the original Google Drive links.

Hi! Ideally everything should be in the same place, so feel free to create a community dataset on the Hub and upload your data files as well as your dataset script (and also the README.md and dataset_infos.json).

The only change you need to make in your dataset script is to use relative paths to your data files instead of URLs.

For example if your repository looks like this:

```
xlsum/
├── data/
│   ├── amharic_XLSum_v2.0.tar.bz2
│   ├── ...
│   └── yoruba_XLSum_v2.0.tar.bz2
├── xlsum.py
├── README.md
└── dataset_infos.json
```

Then you just need to pass `"data/amharic_XLSum_v2.0.tar.bz2"` to `dl_manager.download_and_extract(...)` instead of a URL.

Locally, you can test that it works as expected with:

```python
from datasets import load_dataset
load_dataset("path/to/my/directory/named/xlsum")
```

Then, once it's on the Hub, you can load it with:

```python
from datasets import load_dataset
load_dataset("username/xlsum")
```

Let me know if you have questions :) |
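To make the relative-path idea concrete, here is a minimal, hypothetical stand-in for what `dl_manager.download_and_extract(...)` has to do with a path like `"data/amharic_XLSum_v2.0.tar.bz2"` when the dataset is loaded from a local directory: resolve the path against the dataset directory (not the current working directory) and extract the archive. The helper name `resolve_and_extract` and the output layout are assumptions for illustration only; the real `datasets` download manager also handles Hub repositories and caching.

```python
import os
import tarfile


def resolve_and_extract(dataset_dir: str, relative_path: str, extract_root: str) -> str:
    """Hypothetical helper: resolve `relative_path` against `dataset_dir`
    and extract the archive it points to.

    Returns the directory the archive was extracted into.
    """
    # Relative data paths are interpreted against the dataset's own directory.
    archive_path = os.path.join(dataset_dir, relative_path)
    if not os.path.isfile(archive_path):
        raise FileNotFoundError(archive_path)

    # Derive an output folder from the archive name, e.g. "amharic_XLSum_v2.0".
    name = os.path.basename(relative_path)
    for suffix in (".tar.bz2", ".tar.gz", ".tar"):
        if name.endswith(suffix):
            name = name[: -len(suffix)]
            break
    output_dir = os.path.join(extract_root, name)
    os.makedirs(output_dir, exist_ok=True)

    # "r:*" lets tarfile detect the compression (bz2, gzip, ...) by itself.
    with tarfile.open(archive_path, "r:*") as tar:
        if hasattr(tarfile, "data_filter"):
            # Safer extraction on Python versions that support filters.
            tar.extractall(output_dir, filter="data")
        else:
            tar.extractall(output_dir)
    return output_dir
```

Keeping the path relative is what lets the same script work both from a local checkout and, once uploaded, from the Hub repository.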

https://github.com/huggingface/datasets/pull/2819 | Added XL-Sum dataset | Thank you for your detailed response regarding the community dataset building process. However, will this pull request be merged into the main branch? | Added the XL-Sum dataset published in ACL-IJCNLP 2021 (https://aclanthology.org/2021.findings-acl.413/). The default timeout values in `src/datasets/utils/file_utils.py` were increased to enable downloading from the original Google Drive links. | 23 | text: Added XL-Sum dataset

Added the XL-Sum dataset published in ACL-IJCNLP 2021 (https://aclanthology.org/2021.findings-acl.413/). The default timeout values in `src/datasets/utils/file_utils.py` were increased to enable downloading from the original Google Drive links.

Thank you for your detailed response regarding the community dataset building process. However, will this pull request be merged into the main branch? |

https://github.com/huggingface/datasets/pull/2819 | Added XL-Sum dataset | If XL-Sum is available on the Hub, we don't need to add it again to the `datasets` GitHub repo ;) | Added the XL-Sum dataset published in ACL-IJCNLP 2021 (https://aclanthology.org/2021.findings-acl.413/). The default timeout values in `src/datasets/utils/file_utils.py` were increased to enable downloading from the original Google Drive links. | 20 | text: Added XL-Sum dataset

Added the XL-Sum dataset published in ACL-IJCNLP 2021 (https://aclanthology.org/2021.findings-acl.413/). The default timeout values in `src/datasets/utils/file_utils.py` were increased to enable downloading from the original Google Drive links.

If XL-Sum is available on the Hub, we don't need to add it again to the `datasets` GitHub repo ;) |

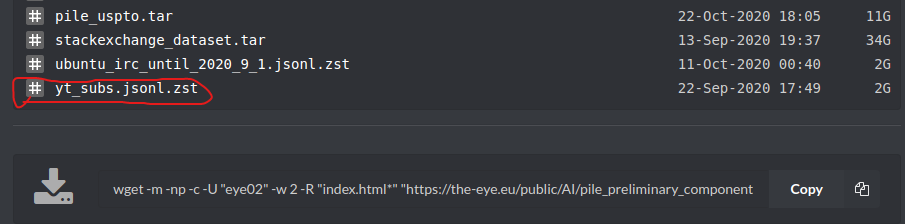

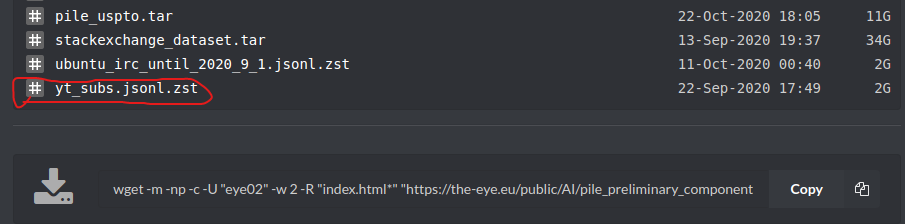

https://github.com/huggingface/datasets/pull/2817 | Rename The Pile subsets | I think the main `the_pile` dataset should be the one that mixes all the subsets: https://the-eye.eu/public/AI/pile/

We can also add configurations for each subset, and even allow users to specify the subsets they want:

```python
from datasets import load_dataset

# `subsets` is the parameter being proposed here
load_dataset("the_pile", subsets=["openwebtext2", "books3", "hn"])
```

We're already doing something similar for mC4, where users can specify the list of languages they want to load. | After discussing with @yjernite, we think it's better for the subsets of The Pile to explicitly include "the_pile" in their names.

I'm making the changes for the subsets that @richarddwang added:

- [x] books3 -> the_pile_books3 https://github.com/huggingface/datasets/pull/2801

- [x] stack_exchange -> the_pile_stack_exchange https://github.com/huggingface/datasets/pull/2803

- [x] openwebtext2 -> the_pile_openwebtext2 https://github.com/huggingface/datasets/pull/2802

For consistency we should also rename `bookcorpusopen` to `the_pile_bookcorpus` IMO, but let me know what you think.

(we can just add a deprecation message to `bookcorpusopen` for now and add `the_pile_bookcorpus`) | 66 | text: Rename The Pile subsets

After discussing with @yjernite, we think it's better for the subsets of The Pile to explicitly include "the_pile" in their names.

I'm making the changes for the subsets that @richarddwang added:

- [x] books3 -> the_pile_books3 https://github.com/huggingface/datasets/pull/2801

- [x] stack_exchange -> the_pile_stack_exchange https://github.com/huggingface/datasets/pull/2803

- [x] openwebtext2 -> the_pile_openwebtext2 https://github.com/huggingface/datasets/pull/2802

For consistency we should also rename `bookcorpusopen` to `the_pile_bookcorpus` IMO, but let me know what you think.

(we can just add a deprecation message to `bookcorpusopen` for now and add `the_pile_bookcorpus`)

I think the main `the_pile` dataset should be the one that mixes all the subsets: https://the-eye.eu/public/AI/pile/

We can also add configurations for each subset, and even allow users to specify the subsets they want:

```python
from datasets import load_dataset

# `subsets` is the parameter being proposed here
load_dataset("the_pile", subsets=["openwebtext2", "books3", "hn"])
```

We're already doing something similar for mC4, where users can specify the list of languages they want to load. |
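The proposed `subsets=` parameter could work like the language selection in mC4: validate the requested names, then keep only the matching records. Below is a hypothetical, standard-library-only sketch of that filtering step. It assumes each Pile record is a JSON object that stores its source in `meta["pile_set_name"]`, and the mapping from short names to those labels is an illustrative assumption; `subsets=` was only a proposal at this point, not an existing `load_dataset` argument.

```python
import json
from typing import Dict, Iterable, Iterator, List, Optional

# Assumed mapping from user-facing subset names to the source labels
# stored in each record's metadata (illustrative, not exhaustive).
SUBSET_LABELS: Dict[str, str] = {
    "openwebtext2": "OpenWebText2",
    "books3": "Books3",
    "hn": "HackerNews",
}


def filter_subsets(lines: Iterable[str], subsets: Optional[List[str]] = None) -> Iterator[dict]:
    """Yield records from JSON Lines input, keeping only the requested subsets.

    With subsets=None every record is kept (the full mix of The Pile).
    Unknown subset names raise ValueError when iteration starts.
    """
    if subsets is None:
        wanted = None
    else:
        unknown = [name for name in subsets if name not in SUBSET_LABELS]
        if unknown:
            raise ValueError(f"Unknown subsets: {unknown}")
        wanted = {SUBSET_LABELS[name] for name in subsets}

    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        if wanted is None or record["meta"]["pile_set_name"] in wanted:
            yield record
```

Filtering one mixed stream is only one option; per-subset configurations could instead point at separate source files, which avoids reading records that are then discarded.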

https://github.com/huggingface/datasets/pull/2811 | Fix stream oscar | One additional note: if we can avoid changing the code of oscar.py too often, users who have it in their cache directory will be happy not to have to redownload it every time they update the library ;)

(since changing the code changes the cache directory of the dataset) | Previously, an additional `open` was added to oscar to make it stream-compatible: 587bbb94e891b22863b312b99696e32708c379f4.

It was argued that this might be problematic: https://github.com/huggingface/datasets/pull/2786#discussion_r690045921

This PR:

- removes that additional `open`

- patches `gzip.open` with `xopen` + `compression="gzip"` | 53 | text: Fix stream oscar

Previously, an additional `open` was added to oscar to make it stream-compatible: 587bbb94e891b22863b312b99696e32708c379f4.

It was argued that this might be problematic: https://github.com/huggingface/datasets/pull/2786#discussion_r690045921

This PR:

- removes that additional `open`

- patches `gzip.open` with `xopen` + `compression="gzip"`

One additional note: if we can avoid changing the code of oscar.py too often, users who have it in their cache directory will be happy not to have to redownload it every time they update the library ;)

(since changing the code changes the cache directory of the dataset) |
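The cache remark follows from the cache location being tied to the script's content. A hypothetical sketch of such content-addressed caching is shown below; it assumes the directory name is a SHA-256 hex digest of the script text, which is an illustration of the idea and not necessarily the library's exact hashing scheme.

```python
import hashlib
import os


def dataset_cache_dir(cache_root: str, dataset_name: str, script_source: str) -> str:
    """Hypothetical cache location for a dataset built from `script_source`.

    The last path component is the SHA-256 hex digest of the script text,
    so editing the script in any way (even a comment) changes the directory
    and previously prepared data is no longer found there.
    """
    digest = hashlib.sha256(script_source.encode("utf-8")).hexdigest()
    return os.path.join(cache_root, dataset_name, digest)
```

Under this scheme, two library versions that ship byte-identical `oscar.py` files share one cache directory, while any edit forces a fresh one.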

https://github.com/huggingface/datasets/pull/2811 | Fix stream oscar | I don't think this is confusing for users because users don't even know we have patched `open`. The only thing users care about is that if they pass `streaming=True`, they can load the dataset in streaming mode.

I don't see any other dataset where patching `open` with `fsspec.open`+`compression` is an "underlying issue". Are there other datasets where this is an issue?

The only dataset where this was an issue is oscar, and the issue is indeed due to the additional `open` you added inside `gzip.open`. | Previously, an additional `open` was added to oscar to make it stream-compatible: 587bbb94e891b22863b312b99696e32708c379f4.

It was argued that this might be problematic: https://github.com/huggingface/datasets/pull/2786#discussion_r690045921

This PR:

- removes that additional `open`

- patches `gzip.open` with `xopen` + `compression="gzip"` | 89 | text: Fix stream oscar

Previously, an additional `open` was added to oscar to make it stream-compatible: 587bbb94e891b22863b312b99696e32708c379f4.

It was argued that this might be problematic: https://github.com/huggingface/datasets/pull/2786#discussion_r690045921

This PR:

- removes that additional `open`

- patches `gzip.open` with `xopen` + `compression="gzip"`

I don't think this is confusing for users because users don't even know we have patched `open`. The only thing users care about is that if they pass `streaming=True`, they can load the dataset in streaming mode.

I don't see any other dataset where patching `open` with `fsspec.open`+`compression` is an "underlying issue". Are there other datasets where this is an issue?

The only dataset where this was an issue is oscar, and the issue is indeed due to the additional `open` you added inside `gzip.open`. |
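The approach described in this PR (patching `gzip.open` in the dataset script's namespace instead of editing the script) can be sketched as below. `xopen` here is a hypothetical, local-only stand-in; the library's streaming version also opens remote URLs. The point is only that the script keeps calling `gzip.open(...)` unchanged while that call is redirected to `xopen(..., compression="gzip")`, and the real `gzip` module used elsewhere is left untouched.

```python
import functools
import gzip
import types


def xopen(path, mode="r", *, compression=None, **kwargs):
    """Hypothetical local-only opener; a streaming version would also open URLs."""
    if compression == "gzip":
        return gzip.open(path, mode, **kwargs)
    return open(path, mode, **kwargs)


def patch_gzip_open(module: types.ModuleType) -> None:
    """Make `gzip.open(...)` inside `module` call `xopen(..., compression="gzip")`.

    Only the module's own `gzip` name is rebound to a patched copy, so other
    gzip attributes keep working and the global gzip module is unchanged.
    """
    patched = types.ModuleType("gzip")
    patched.__dict__.update(vars(gzip))  # keep every other gzip attribute
    patched.open = functools.partial(xopen, compression="gzip")
    module.gzip = patched
```

Because the script's code is untouched, its cached copy (and anything keyed on its content) stays valid while the streaming behavior changes.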

https://github.com/huggingface/datasets/pull/2806 | Fix streaming tar files from canonical datasets | In case it's relevant for this PR, I'm finding that I cannot stream the `bookcorpus` dataset (using the `master` branch of `datasets`), which is a `.tar.bz2` file:

```python
from datasets import load_dataset

books_dataset_streamed = load_dataset("bookcorpus", split="train", streaming=True)

# Throws a 404 HTTP error
next(iter(books_dataset_streamed))
```

The full stack trace is:

```
---------------------------------------------------------------------------
ClientResponseError Traceback (most recent call last)
<ipython-input-11-5ebbbe110b13> in <module>()
----> 1 next(iter(books_dataset_streamed))

11 frames

/usr/local/lib/python3.7/dist-packages/datasets/iterable_dataset.py in __iter__(self)
339
340 def __iter__(self):
--> 341 for key, example in self._iter():
342 if self.features:
343 # we encode the example for ClassLabel feature types for example

/usr/local/lib/python3.7/dist-packages/datasets/iterable_dataset.py in _iter(self)
336 else:
337 ex_iterable = self._ex_iterable
--> 338 yield from ex_iterable
339
340 def __iter__(self):

/usr/local/lib/python3.7/dist-packages/datasets/iterable_dataset.py in __iter__(self)
76
77 def __iter__(self):
---> 78 for key, example in self.generate_examples_fn(**self.kwargs):
79 yield key, example
80

/root/.cache/huggingface/modules/datasets_modules/datasets/bookcorpus/44662c4a114441c35200992bea923b170e6f13f2f0beb7c14e43759cec498700/bookcorpus.py in _generate_examples(self, directory)
98 for txt_file in files:
99 with open(txt_file, mode="r", encoding="utf-8") as f:
--> 100 for line in f:
101 yield _id, {"text": line.strip()}
102 _id += 1

/usr/local/lib/python3.7/dist-packages/fsspec/implementations/http.py in read(self, length)
496 else:
497 length = min(self.size - self.loc, length)
--> 498 return super().read(length)
499
500 async def async_fetch_all(self):

/usr/local/lib/python3.7/dist-packages/fsspec/spec.py in read(self, length)
1481 # don't even bother calling fetch
1482 return b""
-> 1483 out = self.cache._fetch(self.loc, self.loc + length)
1484 self.loc += len(out)
1485 return out

/usr/local/lib/python3.7/dist-packages/fsspec/caching.py in _fetch(self, start, end)
374 ):
375 # First read, or extending both before and after
--> 376 self.cache = self.fetcher(start, bend)
377 self.start = start
378 elif start < self.start:

/usr/local/lib/python3.7/dist-packages/fsspec/asyn.py in wrapper(*args, **kwargs)
86 def wrapper(*args, **kwargs):
87 self = obj or args[0]
---> 88 return sync(self.loop, func, *args, **kwargs)
89
90 return wrapper

/usr/local/lib/python3.7/dist-packages/fsspec/asyn.py in sync(loop, func, timeout, *args, **kwargs)
67 raise FSTimeoutError
68 if isinstance(result[0], BaseException):
---> 69 raise result[0]
70 return result[0]
71

/usr/local/lib/python3.7/dist-packages/fsspec/asyn.py in _runner(event, coro, result, timeout)
23 coro = asyncio.wait_for(coro, timeout=timeout)
24 try:
---> 25 result[0] = await coro
26 except Exception as ex:
27 result[0] = ex

/usr/local/lib/python3.7/dist-packages/fsspec/implementations/http.py in async_fetch_range(self, start, end)
535 # range request outside file
536 return b""
--> 537 r.raise_for_status()
538 if r.status == 206:
539 # partial content, as expected

/usr/local/lib/python3.7/dist-packages/aiohttp/client_reqrep.py in raise_for_status(self)
1003 status=self.status,
1004 message=self.reason,
-> 1005 headers=self.headers,
1006 )
1007

ClientResponseError: 404, message='Not Found', url=URL('https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2/books_large_p1.txt')
```

Let me know if this is unrelated and I'll open a separate issue :)

Environment info:

```
- `datasets` version: 1.11.1.dev0
- Platform: Linux-5.4.104+-x86_64-with-Ubuntu-18.04-bionic
- Python version: 3.7.11
- PyArrow version: 3.0.0
``` | The previous PR #2800 implemented support for streaming remote tar files when passing the `data_files` parameter: it required a glob string `"*"`.

However, this glob string creates an error when streaming canonical datasets (with a `join` after the `open`).

This PR fixes this issue and allows streaming tar files both from:

- canonical datasets scripts and

- data files.

This PR also adds support for compressed tar files: `.tar.gz`, `.tar.bz2`,...

| 401 | text: Fix streaming tar files from canonical datasets

The previous PR #2800 implemented support for streaming remote tar files when passing the `data_files` parameter: it required a glob string `"*"`.

However, this glob string creates an error when streaming canonical datasets (with a `join` after the `open`).

This PR fixes this issue and allows streaming tar files both from:

- canonical datasets scripts and

- data files.

This PR also adds support for compressed tar files: `.tar.gz`, `.tar.bz2`,...

In case it's relevant for this PR, I'm finding that I cannot stream the `bookcorpus` dataset (using the `master` branch of `datasets`), which is a `.tar.bz2` file:

```python
from datasets import load_dataset

books_dataset_streamed = load_dataset("bookcorpus", split="train", streaming=True)

# Throws a 404 HTTP error
next(iter(books_dataset_streamed))
```

The full stack trace is:

```
---------------------------------------------------------------------------
ClientResponseError Traceback (most recent call last)
<ipython-input-11-5ebbbe110b13> in <module>()
----> 1 next(iter(books_dataset_streamed))

11 frames

/usr/local/lib/python3.7/dist-packages/datasets/iterable_dataset.py in __iter__(self)
339
340 def __iter__(self):
--> 341 for key, example in self._iter():
342 if self.features:
343 # we encode the example for ClassLabel feature types for example

/usr/local/lib/python3.7/dist-packages/datasets/iterable_dataset.py in _iter(self)
336 else:
337 ex_iterable = self._ex_iterable
--> 338 yield from ex_iterable
339
340 def __iter__(self):

/usr/local/lib/python3.7/dist-packages/datasets/iterable_dataset.py in __iter__(self)
76
77 def __iter__(self):
---> 78 for key, example in self.generate_examples_fn(**self.kwargs):
79 yield key, example
80

/root/.cache/huggingface/modules/datasets_modules/datasets/bookcorpus/44662c4a114441c35200992bea923b170e6f13f2f0beb7c14e43759cec498700/bookcorpus.py in _generate_examples(self, directory)
98 for txt_file in files:
99 with open(txt_file, mode="r", encoding="utf-8") as f:
--> 100 for line in f:
101 yield _id, {"text": line.strip()}
102 _id += 1

/usr/local/lib/python3.7/dist-packages/fsspec/implementations/http.py in read(self, length)
496 else:
497 length = min(self.size - self.loc, length)
--> 498 return super().read(length)
499
500 async def async_fetch_all(self):

/usr/local/lib/python3.7/dist-packages/fsspec/spec.py in read(self, length)
1481 # don't even bother calling fetch
1482 return b""
-> 1483 out = self.cache._fetch(self.loc, self.loc + length)
1484 self.loc += len(out)
1485 return out

/usr/local/lib/python3.7/dist-packages/fsspec/caching.py in _fetch(self, start, end)
374 ):
375 # First read, or extending both before and after
--> 376 self.cache = self.fetcher(start, bend)
377 self.start = start
378 elif start < self.start:

/usr/local/lib/python3.7/dist-packages/fsspec/asyn.py in wrapper(*args, **kwargs)
86 def wrapper(*args, **kwargs):
87 self = obj or args[0]
---> 88 return sync(self.loop, func, *args, **kwargs)
89
90 return wrapper

/usr/local/lib/python3.7/dist-packages/fsspec/asyn.py in sync(loop, func, timeout, *args, **kwargs)
67 raise FSTimeoutError
68 if isinstance(result[0], BaseException):
---> 69 raise result[0]
70 return result[0]
71

/usr/local/lib/python3.7/dist-packages/fsspec/asyn.py in _runner(event, coro, result, timeout)
23 coro = asyncio.wait_for(coro, timeout=timeout)
24 try:
---> 25 result[0] = await coro
26 except Exception as ex:
27 result[0] = ex

/usr/local/lib/python3.7/dist-packages/fsspec/implementations/http.py in async_fetch_range(self, start, end)
535 # range request outside file
536 return b""
--> 537 r.raise_for_status()
538 if r.status == 206:
539 # partial content, as expected

/usr/local/lib/python3.7/dist-packages/aiohttp/client_reqrep.py in raise_for_status(self)
1003 status=self.status,
1004 message=self.reason,
-> 1005 headers=self.headers,
1006 )
1007

ClientResponseError: 404, message='Not Found', url=URL('https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2/books_large_p1.txt')
```

Let me know if this is unrelated and I'll open a separate issue :)

Environment info:

```
- `datasets` version: 1.11.1.dev0
- Platform: Linux-5.4.104+-x86_64-with-Ubuntu-18.04-bionic
- Python version: 3.7.11
- PyArrow version: 3.0.0
``` |
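A compressed tar archive such as `bookcorpus.tar.bz2` can only be decompressed from the beginning, so a member path appended to the archive URL (as in the 404 above) cannot work; streaming instead means reading members sequentially. Python's standard `tarfile` module supports this with its stream modes (`"r|bz2"`, `"r|gz"`, `"r|*"`), which only need a forward-only file object. A minimal sketch, with an in-memory archive standing in for the remote file in the usage check (the function name is hypothetical and this is not how `datasets` implements it):

```python
import tarfile
from typing import BinaryIO, Iterator, Tuple


def iter_tar_text_lines(fileobj: BinaryIO, encoding: str = "utf-8") -> Iterator[Tuple[str, str]]:
    """Yield (member_name, line) pairs from a possibly compressed tar stream.

    Mode "r|*" reads the archive strictly front to back and auto-detects
    gzip/bz2/xz compression, so `fileobj` never needs to support seek().
    """
    with tarfile.open(fileobj=fileobj, mode="r|*") as tar:
        for member in tar:
            if not member.isfile():
                continue
            extracted = tar.extractfile(member)
            # In stream mode each member must be consumed before moving on.
            for raw_line in extracted:
                yield member.name, raw_line.decode(encoding).rstrip("\n")
```

The trade-off is that random access to a single member still requires reading everything stored before it in the archive.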

https://github.com/huggingface/datasets/pull/2806 | Fix streaming tar files from canonical datasets | > @lewtun: `.tar.compression-extension` files are not supported yet. That is the objective of this PR.

Thanks for the context and the great work on the streaming features (right now I'm writing the streaming section of the HF course, so I'm acting like a beta tester 😄) | The previous PR #2800 implemented support for streaming remote tar files when passing the `data_files` parameter: it required a glob string `"*"`.

However, this glob string creates an error when streaming canonical datasets (with a `join` after the `open`).

This PR fixes this issue and allows streaming tar files both from:

- canonical datasets scripts and

- data files.

This PR also adds support for compressed tar files: `.tar.gz`, `.tar.bz2`,...

| 46 | text: Fix streaming tar files from canonical datasets

The previous PR #2800 implemented support for streaming remote tar files when passing the `data_files` parameter: it required a glob string `"*"`.

However, this glob string creates an error when streaming canonical datasets (with a `join` after the `open`).

This PR fixes this issue and allows streaming tar files both from:

- canonical datasets scripts and

- data files.

This PR also adds support for compressed tar files: `.tar.gz`, `.tar.bz2`,...

> @lewtun: `.tar.compression-extension` files are not supported yet. That is the objective of this PR.

Thanks for the context and the great work on the streaming features (right now I'm writing the streaming section of the HF course, so I'm acting like a beta tester 😄) |

https://github.com/huggingface/datasets/pull/2806 | Fix streaming tar files from canonical datasets | @lewtun, this PR fixes the previous issue with `xjoin`:

Given:

```python
xjoin(
    "https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2",
    "books_large_p1.txt"
)
```

- Before it gave:

`"https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2/books_large_p1.txt"`

thus raising the 404 error

- Now it gives:

`tar://books_large_p1.txt::https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2`

(this is the expected format for `fsspec`) and additionally passes the parameter `compression="bz2"`.

See: https://github.com/huggingface/datasets/pull/2806/files#diff-97bb2d08db65ce3b679aefc43cadad76d053c1e58ecc315e49b80873d0fbdabeR15 | The previous PR #2800 implemented support for streaming remote tar files when passing the `data_files` parameter: it required a glob string `"*"`.

However, this glob string creates an error when streaming canonical datasets (with a `join` after the `open`).

This PR fixes this issue and allows streaming tar files both from:

- canonical datasets scripts and

- data files.

This PR also adds support for compressed tar files: `.tar.gz`, `.tar.bz2`,...

| 45 | text: Fix streaming tar files from canonical datasets

The previous PR #2800 implemented support for streaming remote tar files when passing the `data_files` parameter: it required a glob string `"*"`.

However, this glob string creates an error when streaming canonical datasets (with a `join` after the `open`).

This PR fixes this issue and allows streaming tar files both from:

- canonical datasets scripts and

- data files.

This PR also adds support for compressed tar files: `.tar.gz`, `.tar.bz2`,...

@lewtun, this PR fixes the previous issue with `xjoin`:

Given:

```python
xjoin(
    "https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2",
    "books_large_p1.txt"
)
```

- Before it gave:

`"https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2/books_large_p1.txt"`

thus raising the 404 error

- Now it gives:

`tar://books_large_p1.txt::https://storage.googleapis.com/huggingface-nlp/datasets/bookcorpus/bookcorpus.tar.bz2`

(this is the expected format for `fsspec`) and additionally passes the parameter `compression="bz2"`.

See: https://github.com/huggingface/datasets/pull/2806/files#diff-97bb2d08db65ce3b679aefc43cadad76d053c1e58ecc315e49b80873d0fbdabeR15 |
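The rule described above can be written as a small hypothetical function. It is not the PR's actual `xjoin` (whose code is in the linked diff), just a sketch of the same idea: when the base path is a tar archive, build an `fsspec` chained URL `tar://<member>::<archive URL>` and infer a compression name from the extension; otherwise fall back to a plain `/` join, which is the old behavior that produced the 404. The extension-to-compression table is an assumption.

```python
import posixpath
from typing import Optional, Tuple

# Assumed extension -> compression-name mapping (fsspec-style names).
_TAR_COMPRESSIONS = {
    ".tar.gz": "gzip",
    ".tgz": "gzip",
    ".tar.bz2": "bz2",
    ".tar.xz": "xz",
    ".tar": None,
}


def xjoin_sketch(base: str, member: str) -> Tuple[str, Optional[str]]:
    """Return (url, compression) for `member` inside `base`.

    Tar archives yield a chained URL "tar://member::base"; any other base is
    joined with "/" and no compression is reported.
    """
    for extension, compression in _TAR_COMPRESSIONS.items():
        if base.endswith(extension):
            return f"tar://{member}::{base}", compression
    return posixpath.join(base, member), None
```

Returning the compression separately mirrors the description above, where the chained URL is accompanied by an extra `compression="bz2"` parameter.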

https://github.com/huggingface/datasets/pull/2803 | add stack exchange | Hi! Merging this one since it's all good :)

However, I think it would also be better to actually rename it `the_pile_stack_exchange` to make things clearer and to avoid name collisions in the future. I would like to do the same for `books3` as well.

If you don't mind, I'll open a PR to do the renaming | Stack Exchange is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

I also changed the default `timeout` to 100 seconds instead of 10 seconds; otherwise I kept getting read timeouts when downloading the source data of the stack exchange and cc100 datasets.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675 | 58 | text: add stack exchange

Stack Exchange is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

I also changed the default `timeout` to 100 seconds instead of 10 seconds; otherwise I kept getting read timeouts when downloading the source data of the stack exchange and cc100 datasets.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675

Hi! Merging this one since it's all good :)

However, I think it would also be better to actually rename it `the_pile_stack_exchange` to make things clearer and to avoid name collisions in the future. I would like to do the same for `books3` as well.

If you don't mind, I'll open a PR to do the renaming |
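For renames like these (and the earlier suggestion to keep `bookcorpusopen` with a deprecation message while adding `the_pile_bookcorpus`), one possible design is a table of old names that still resolve but emit a warning. A hypothetical sketch using the standard `warnings` module; the alias table and `resolve_dataset_name` are illustrative and not part of `datasets`:

```python
import warnings
from typing import Dict

# Hypothetical table of deprecated names -> new names.
DEPRECATED_NAMES: Dict[str, str] = {
    "bookcorpusopen": "the_pile_bookcorpus",
}


def resolve_dataset_name(name: str) -> str:
    """Return the current name for `name`, warning if it is deprecated."""
    new_name = DEPRECATED_NAMES.get(name)
    if new_name is None:
        return name
    warnings.warn(
        f"Dataset '{name}' is deprecated; use '{new_name}' instead.",
        FutureWarning,
        stacklevel=2,
    )
    return new_name
```

`FutureWarning` is used rather than `DeprecationWarning` because the latter is hidden by default outside `__main__`, so end users might never see it.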

https://github.com/huggingface/datasets/pull/2803 | add stack exchange |

> If you don't mind I'll open a PR to do the renaming

@lhoestq That will be nice !!

| Stack Exchange is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

I also changed the default `timeout` to 100 seconds instead of 10 seconds; otherwise I kept getting read timeouts when downloading the source data of the stack exchange and cc100 datasets.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675 | 19 | text: add stack exchange

Stack Exchange is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

I also changed the default `timeout` to 100 seconds instead of 10 seconds; otherwise I kept getting read timeouts when downloading the source data of the stack exchange and cc100 datasets.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675

> If you don't mind I'll open a PR to do the renaming

@lhoestq That will be nice !!

|

https://github.com/huggingface/datasets/pull/2802 | add openwebtext2 | Hi! Do you really need `jsonlines`? I think it simply uses `json.loads` under the hood.

Currently the tests are failing because `jsonlines` is not part of the extra requirements `TESTS_REQUIRE` in setup.py.

So either you can replace `jsonlines` with a simple for loop over the lines of the files and use `json.loads`, or you can add `jsonlines` to `TESTS_REQUIRE` (but in this case users will have to install it as well). | openwebtext2 is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675 | 75 | text: add openwebtext2

openwebtext2 is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675

Hi! Do you really need `jsonlines`? I think it simply uses `json.loads` under the hood.

Currently the tests are failing because `jsonlines` is not part of the extra requirements `TESTS_REQUIRE` in setup.py.

So either you can replace `jsonlines` with a simple for loop over the lines of the files and use `json.loads`, or you can add `jsonlines` to `TESTS_REQUIRE` (but in this case users will have to install it as well). |
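Following that suggestion, reading a JSON Lines file needs only the standard library: iterate over the lines and call `json.loads` on each non-empty one. A minimal sketch of what a `_generate_examples` loop could do; the function name and the `(key, example)` pairing are illustrative assumptions:

```python
import json
from typing import Iterator, Tuple


def read_jsonl(path: str) -> Iterator[Tuple[int, dict]]:
    """Yield (key, record) pairs from a JSON Lines file without `jsonlines`."""
    with open(path, encoding="utf-8") as f:
        key = 0
        for line in f:
            line = line.strip()
            if not line:
                continue  # tolerate blank lines, e.g. a trailing newline
            yield key, json.loads(line)
            key += 1
```

Iterating over the file object reads one line at a time, so memory use stays flat even for large files, unlike `f.readlines()`, which loads every line at once.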

https://github.com/huggingface/datasets/pull/2802 | add openwebtext2 | Thanks for your suggestion. I now know `io` and the JSON Lines format better, and I have changed `jsonlines` to just `readlines`. | openwebtext2 is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675 | 20 | text: add openwebtext2

openwebtext2 is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675

Thanks for your suggestion. I now know `io` and the JSON Lines format better, and I have changed `jsonlines` to just `readlines`. |

https://github.com/huggingface/datasets/pull/2801 | add books3 | > When I was creating dataset card. I found there is room for creating / editing dataset card. I've made it an issue. #2797

Thanks for the message, we'll definitely improve this

> Also I am wondering whether the import of The Pile dataset is actively undertaken (because I may need it recently)? #1675

Well, currently no, but I think @lewtun was about to do it (though he's currently on vacation) | books3 is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675 | 71 | text: add books3

books3 is part of EleutherAI/The Pile, but AFAIK The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile's preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating/editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether anyone is actively working on importing The Pile dataset (because I may need it soon)? #1675

> When I was creating dataset card. I found there is room for creating / editing dataset card. I've made it an issue. #2797

Thanks for the message, we'll definitely improve this

> Also I am wondering whether the import of The Pile dataset is actively undertaken (because I may need it recently)? #1675

Well, currently no, but I think @lewtun was about to do it (though he's currently on vacation) |

https://github.com/huggingface/datasets/pull/2801 | add books3 | > > Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675

>

> Well, not currently, but I think @lewtun was about to do it (though he's currently on vacation)

Yes, I plan to start working on this next week #2185

One question for @richarddwang: do you know if EleutherAI happened to also release the "existing" datasets like Enron Emails and OpenSubtitles?

In Appendix C of their paper, they provide details on how they extracted these datasets, but it would be nice if we could just point to a URL so we can stay as close as possible to the original implementation. | books3 is part of EleutherAI/The Pile, but AFAIK, The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating / editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675 | 114 | text: add books3

books3 is part of EleutherAI/The Pile, but AFAIK, The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating / editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675

> > Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675

>

> Well, not currently, but I think @lewtun was about to do it (though he's currently on vacation)

Yes, I plan to start working on this next week #2185

One question for @richarddwang: do you know if EleutherAI happened to also release the "existing" datasets like Enron Emails and OpenSubtitles?

In Appendix C of their paper, they provide details on how they extracted these datasets, but it would be nice if we could just point to a URL so we can stay as close as possible to the original implementation. |

https://github.com/huggingface/datasets/pull/2801 | add books3 | @lewtun

> Yes, I plan to start working on this next week

Nice! Looking forward to it.

> One question for @richarddwang: do you know if EleutherAI happened to also release the "existing" datasets like Enron Emails and OpenSubtitles?

Sadly, I don't know of any existing copy of the Enron Emails dataset, but I believe the OpenSubtitles dataset is hosted here: https://the-eye.eu/public/AI/pile_preliminary_components/

| books3 is part of EleutherAI/The Pile, but AFAIK, The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating / editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675 | 61 | text: add books3

books3 is part of EleutherAI/The Pile, but AFAIK, The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating / editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675

@lewtun

> Yes, I plan to start working on this next week

Nice! Looking forward to it.

> One question for @richarddwang: do you know if EleutherAI happened to also release the "existing" datasets like Enron Emails and OpenSubtitles?

Sadly, I don't know of any existing copy of the Enron Emails dataset, but I believe the OpenSubtitles dataset is hosted here: https://the-eye.eu/public/AI/pile_preliminary_components/

|

https://github.com/huggingface/datasets/pull/2801 | add books3 | Thanks for the link, @richarddwang! I think that corpus is actually the YouTube Subtitles one, and my impression is that EleutherAI has only uploaded the 14 new datasets they created. I've contacted one of the authors, so hopefully they can share some additional info with us :)

BTW, it might take a while to put together all the corpora if I also need to preprocess them (e.g. OpenSubtitles, Enron Emails, etc.), but I expect it to take no longer than a few weeks. | books3 is part of EleutherAI/The Pile, but AFAIK, The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating / editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675 | 83 | text: add books3

books3 is part of EleutherAI/The Pile, but AFAIK, The Pile dataset blends all sub-datasets together, so we are not able to use just one of its sub-datasets from The Pile data. So I created an independent dataset using The Pile preliminary components.

When I was creating the dataset card, I found there is room for improvement in creating / editing dataset cards. I've opened an issue for it: #2797

Also, I am wondering whether the import of The Pile dataset is actively being worked on (because I may need it soon)? #1675

Thanks for the link, @richarddwang! I think that corpus is actually the YouTube Subtitles one, and my impression is that EleutherAI has only uploaded the 14 new datasets they created. I've contacted one of the authors, so hopefully they can share some additional info with us :)

BTW, it might take a while to put together all the corpora if I also need to preprocess them (e.g. OpenSubtitles, Enron Emails, etc.), but I expect it to take no longer than a few weeks. |

https://github.com/huggingface/datasets/pull/2800 | Support streaming tar files | Hi! What exactly do we need the custom `readline` for? Feel free to add a comment explaining why it's needed | This PR adds support to stream tar files by using the `fsspec` tar protocol.

It also uses the custom `readline` implemented in PR #2786.

The corresponding test is implemented in PR #2786. | 23 | text: Support streaming tar files

This PR adds support to stream tar files by using the `fsspec` tar protocol.

It also uses the custom `readline` implemented in PR #2786.

The corresponding test is implemented in PR #2786.

Hi! What exactly do we need the custom `readline` for? Feel free to add a comment explaining why it's needed |
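To make "streaming a tar file" concrete: a tar archive stores its members one after another, so it can be consumed front to back without random access. The sketch below uses Python's standard `tarfile` module in stream mode (`"r|*"`), not the `fsspec` tar protocol that this PR relies on, so it only illustrates the idea; `iter_tar_members` is a hypothetical helper.

```python
import io
import tarfile


def iter_tar_members(fileobj):
    """Yield (name, content) for each regular file of a tar archive, in archive order.

    Mode "r|*" opens the archive as a stream: members are read strictly front to
    back, compression (gzip, bz2 or xz) is detected automatically, and the
    underlying file object is read sequentially.
    """
    with tarfile.open(fileobj=fileobj, mode="r|*") as tar:
        for member in tar:
            if member.isfile():
                # In stream mode, a member's data must be read before moving on to the next one
                yield member.name, tar.extractfile(member).read()


if __name__ == "__main__":
    # Build a small gzipped tar archive in memory, then read it back as a stream
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        data = b'{"text": "hello"}\n'
        info = tarfile.TarInfo("sample.jsonl")
        info.size = len(data)
        tar.addfile(info, io.BytesIO(data))
    buf.seek(0)
    for name, content in iter_tar_members(buf):
        print(name, content)
```

Because the generator never seeks backwards, the same pattern works on any file-like object that only supports forward reads, such as an HTTP response body.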

https://github.com/huggingface/datasets/pull/2798 | Fix streaming zip files | Hi! I don't fully understand this change, @albertvillanova

The `_extract` method used to return the compound URL pointing to the root of the archive's contents.

This way, users can use the usual `os.path.join` or other functions to point to the relevant files. I don't see why you're using a glob pattern. | Currently, streaming remote zip data files raises a `FileNotFoundError`:

```python

from datasets import load_dataset

data_files = "https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip"
ds = load_dataset("json", split="train", data_files=data_files, streaming=True)
next(iter(ds))

```

This PR fixes it by adding a glob string.

The corresponding test is implemented in PR #2786. | 56 | text: Fix streaming zip files

Currently, streaming remote zip data files raises a `FileNotFoundError`:

```python

from datasets import load_dataset

data_files = "https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip"
ds = load_dataset("json", split="train", data_files=data_files, streaming=True)
next(iter(ds))

```

This PR fixes it by adding a glob string.

The corresponding test is implemented in PR #2786.

Hi! I don't fully understand this change, @albertvillanova

The `_extract` method used to return the compound URL pointing to the root of the archive's contents.

This way, users can use the usual `os.path.join` or other functions to point to the relevant files. I don't see why you're using a glob pattern. |
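To illustrate the compound-URL point made in the review comment: in an fsspec chained URL such as `zip://::https://.../sample.zip`, the path inside the archive sits before the `::`, so joining a file name onto the archive root has to modify that inner part. A plain `os.path.join` would instead append to the end of the outer URL. The helper `join_chained_url` below is a hypothetical sketch of that idea, not the code `datasets` actually uses.

```python
import posixpath


def join_chained_url(base, *parts):
    """Join path components onto the innermost path of an fsspec-style chained URL.

    "zip://::https://host/sample.zip" + "data.json"
    -> "zip://data.json::https://host/sample.zip"
    """
    # Split off the first link of the chain ("zip://<path inside archive>")
    inner, sep, outer = base.partition("::")
    if "://" in inner:
        protocol, path = inner.split("://", 1)
        # Archive member paths are POSIX-style, so join with posixpath
        return f"{protocol}://" + posixpath.join(path, *parts) + sep + outer
    # Not a protocol-prefixed URL: behave like a POSIX path join
    return posixpath.join(inner, *parts) + sep + outer


if __name__ == "__main__":
    root = "zip://::https://host/sample.zip"
    print(join_chained_url(root, "data.json"))
    print(join_chained_url(root, "dir", "a.json"))
```

For example, `join_chained_url("zip://::https://host/sample.zip", "data.json")` returns `"zip://data.json::https://host/sample.zip"`.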

https://github.com/huggingface/datasets/pull/2798 | Fix streaming zip files | This change is to allow this:

```python

from datasets import IterableDataset, load_dataset

data_files = "https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip"
ds = load_dataset("json", split="train", data_files=data_files, streaming=True)
assert isinstance(ds, IterableDataset)

```

Note that in this case the user will not call `os.path.join`.

Before this PR, this raised an error, because opening the archive root without any subsequent join fails:

```python

import fsspec

fsspec.open("zip://::https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip")

``` | Currently, streaming remote zip data files raises a `FileNotFoundError`:

```python

from datasets import load_dataset

data_files = "https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip"
ds = load_dataset("json", split="train", data_files=data_files, streaming=True)
next(iter(ds))

```

This PR fixes it by adding a glob string.

The corresponding test is implemented in PR #2786. | 51 | text: Fix streaming zip files

Currently, streaming remote zip data files raises a `FileNotFoundError`:

```python

from datasets import load_dataset

data_files = "https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip"
ds = load_dataset("json", split="train", data_files=data_files, streaming=True)
next(iter(ds))

```

This PR fixes it by adding a glob string.

The corresponding test is implemented in PR #2786.

This change is to allow this:

```python

from datasets import IterableDataset, load_dataset

data_files = "https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip"
ds = load_dataset("json", split="train", data_files=data_files, streaming=True)
assert isinstance(ds, IterableDataset)

```

Note that in this case the user will not call `os.path.join`.

Before this PR, this raised an error, because opening the archive root without any subsequent join fails:

```python

import fsspec

fsspec.open("zip://::https://huggingface.co/datasets/albertvillanova/datasets-tests-compression/resolve/main/sample.zip")

``` |

https://github.com/huggingface/datasets/pull/2796 | add cedr dataset | > Hi! Thanks a lot for adding this one :)

>

> Good job with the dataset card and the dataset script!

>

> I left a few suggestions

Thank you very much for your helpful suggestions. I have tried to carry them all out. | null | 47 | text: add cedr dataset

> Hi! Thanks a lot for adding this one :)

>

> Good job with the dataset card and the dataset script!

>

> I left a few suggestions

Thank you very much for your helpful suggestions. I have tried to carry them all out. |

https://github.com/huggingface/datasets/pull/2792 | Update: GooAQ - add train/val/test splits | @albertvillanova, my tests are failing here:

```
dataset_name = 'gooaq'

    def test_load_dataset(self, dataset_name):
        configs = self.dataset_tester.load_all_configs(dataset_name, is_local=True)[:1]
>       self.dataset_tester.check_load_dataset(dataset_name, configs, is_local=True, use_local_dummy_data=True)

tests/test_dataset_common.py:234:
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
tests/test_dataset_common.py:187: in check_load_dataset
    self.parent.assertTrue(len(dataset[split]) > 0)
E   AssertionError: False is not true

```

When I try loading the dataset on my local machine, it works fine. Any suggestions on how I can avoid this error? | The [GooAQ](https://github.com/allenai/gooaq) dataset was recently updated with newly added splits. This PR contains the updated GooAQ with train/val/test splits, as well as an updated README.

The [GooAQ](https://github.com/allenai/gooaq) dataset was recently updated with newly added splits. This PR contains the updated GooAQ with train/val/test splits, as well as an updated README.

@albertvillanova, my tests are failing here:

```
dataset_name = 'gooaq'

    def test_load_dataset(self, dataset_name):
        configs = self.dataset_tester.load_all_configs(dataset_name, is_local=True)[:1]
>       self.dataset_tester.check_load_dataset(dataset_name, configs, is_local=True, use_local_dummy_data=True)

tests/test_dataset_common.py:234:
_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _
tests/test_dataset_common.py:187: in check_load_dataset
    self.parent.assertTrue(len(dataset[split]) > 0)
E   AssertionError: False is not true

```

When I try loading the dataset on my local machine, it works fine. Any suggestions on how I can avoid this error? |

https://github.com/huggingface/datasets/pull/2783 | Add KS task to SUPERB | Thanks a lot for implementing this, @anton-l!!

I won't have time to review this while I'm away, so I'm happy for @albertvillanova and @patrickvonplaten to decide when to merge :) | Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619. | 30 | text: Add KS task to SUPERB

Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619.

Thanks a lot for implementing this, @anton-l!!

I won't have time to review this while I'm away, so I'm happy for @albertvillanova and @patrickvonplaten to decide when to merge :) |

https://github.com/huggingface/datasets/pull/2783 | Add KS task to SUPERB | > The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

@anton-l I was thinking that maybe we could give some hints in the dataset card (in a Usage section), similar to what we have for diarization: https://github.com/huggingface/datasets/blob/master/datasets/superb/README.md#example-of-usage

Note that the diarization example is not finished yet: we still have to test it and then provide an end-to-end example: https://github.com/huggingface/datasets/pull/2661/files#r680224909 | Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619. | 94 | text: Add KS task to SUPERB

Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619.

> The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

@anton-l I was thinking that maybe we could give some hints in the dataset card (in a Usage section), similar to what we have for diarization: https://github.com/huggingface/datasets/blob/master/datasets/superb/README.md#example-of-usage

Note that the diarization example is not finished yet: we still have to test it and then provide an end-to-end example: https://github.com/huggingface/datasets/pull/2661/files#r680224909 |

https://github.com/huggingface/datasets/pull/2783 | Add KS task to SUPERB | @albertvillanova yeah, I'm not sure how best to implement it in pure `datasets` yet. It's something like this, where `sample_noise()` needs to be called from a PyTorch batch collator or another framework-specific equivalent:

```python

def map_to_array(example):
    import soundfile as sf

    speech_array, sample_rate = sf.read(example["file"])
    example["speech"] = speech_array
    example["sample_rate"] = sample_rate
    return example


def sample_noise(example):
    # Use a version of this function in a stateless way to extract random 1 sec slices of background noise
    # on each epoch
    from random import randint

    # _silence_ audios are longer than 1 sec
    if example["label"] == "_silence_":
        random_offset = randint(0, len(example["speech"]) - example["sample_rate"] - 1)
        example["speech"] = example["speech"][random_offset : random_offset + example["sample_rate"]]
    return example

``` | Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619. | 112 | text: Add KS task to SUPERB

Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619.

@albertvillanova yeah, I'm not sure how best to implement it in pure `datasets` yet. It's something like this, where `sample_noise()` needs to be called from a PyTorch batch collator or another framework-specific equivalent:

```python

def map_to_array(example):
    import soundfile as sf

    speech_array, sample_rate = sf.read(example["file"])
    example["speech"] = speech_array
    example["sample_rate"] = sample_rate
    return example


def sample_noise(example):
    # Use a version of this function in a stateless way to extract random 1 sec slices of background noise
    # on each epoch
    from random import randint

    # _silence_ audios are longer than 1 sec
    if example["label"] == "_silence_":
        random_offset = randint(0, len(example["speech"]) - example["sample_rate"] - 1)
        example["speech"] = example["speech"][random_offset : random_offset + example["sample_rate"]]
    return example

``` |
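The PR description also mentions the deterministic alternative used by TFDS: cutting each long `_silence_` recording into fixed one-second pieces once, instead of sampling a random slice every epoch. A minimal sketch for a batched `map` follows, assuming the `speech` / `sample_rate` / `label` columns produced by `map_to_array` and a string label as in `sample_noise` (if labels are stored as `ClassLabel` integers, compare against the corresponding id instead). The function name `split_silence` is hypothetical.

```python
def split_silence(batch):
    """Batched map function: turn each long `_silence_` clip into 1-second chunks.

    Non-silence examples are passed through unchanged; trailing audio shorter than
    one second is dropped. Every input column is returned, so all other fields are
    copied once per chunk.
    """
    out = {key: [] for key in batch}
    for i, label in enumerate(batch["label"]):
        speech = batch["speech"][i]
        chunk_len = batch["sample_rate"][i]  # one second worth of samples
        if label == "_silence_" and len(speech) > chunk_len:
            # Non-overlapping chunk start offsets that fit entirely in the clip
            starts = list(range(0, len(speech) - chunk_len + 1, chunk_len))
        else:
            starts = [None]  # keep the example as-is
        for start in starts:
            for key in batch:
                out[key].append(batch[key][i])
            if start is not None:
                out["speech"][-1] = speech[start : start + chunk_len]
    return out
```

Since the number of rows changes, it would typically be applied as `dataset.map(split_silence, batched=True, remove_columns=dataset.column_names)`. The slicing works on any sliceable sequence, such as a list or a NumPy array.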

https://github.com/huggingface/datasets/pull/2783 | Add KS task to SUPERB | I see... Yes, not trivial indeed. Maybe for the moment you could add those functions to the README (as is currently done for diarization)? What do you think? | Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619. | 32 | text: Add KS task to SUPERB

Add the KS (keyword spotting) task as described in the [SUPERB paper](https://arxiv.org/abs/2105.01051).

- [s3prl instructions](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/README.md#ks-keyword-spotting)

- [s3prl implementation](https://github.com/s3prl/s3prl/blob/master/s3prl/downstream/speech_commands/dataset.py)

- [TFDS implementation](https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/audio/speech_commands.py)

Some notable quirks:

- The dataset is originally single-archive (train+val+test all in one), but the test set has a "canonical" distribution in a separate archive, which is also used here (see `_split_ks_files()`).

- The `_background_noise_`/`_silence_` audio files are much longer than others, so they require some sort of slicing for downstream training. I decided to leave the implementation of that up to the users, since TFDS and s3prl take different approaches (either slicing wavs deterministically, or subsampling randomly at runtime)

Related to #2619.

I see... Yes, not trivial indeed. Maybe for the moment you could add those functions to the README (as is currently done for diarization)? What do you think? |

https://github.com/huggingface/datasets/pull/2774 | Prevent .map from using multiprocessing when loading from cache | Hi @thomasw21, yes, you are right: those failing tests were fixed by #2779.

Would you mind merging the current upstream master branch and pushing again?

```

git checkout sequential_map_when_cached

git fetch upstream master

git merge upstream/master

git push -u origin sequential_map_when_cached

``` | ## Context

In our setup, we use different setups for training and for preprocessing datasets. We can usually obtain a high number of CPUs for preprocessing, which allows us to use `num_proc`; however, we can't use as many during the training phase. Currently, if we use `num_proc={whatever the preprocessing value was}`, we load from cache, but we get:

```

Traceback (most recent call last):
  File "lib/python3.8/site-packages/multiprocess/pool.py", line 131, in worker
    put((job, i, result))
  File "lib/python3.8/site-packages/multiprocess/queues.py", line 371, in put
    self._writer.send_bytes(obj)
  File "lib/python3.8/site-packages/multiprocess/connection.py", line 203, in send_bytes
    self._send_bytes(m[offset:offset + size])
  File "lib/python3.8/site-packages/multiprocess/connection.py", line 414, in _send_bytes
    self._send(header + buf)
  File "lib/python3.8/site-packages/multiprocess/connection.py", line 371, in _send
    n = write(self._handle, buf)
BrokenPipeError: [Errno 32] Broken pipe

```

Our current guess is that we're spawning too many processes compared to the number of CPUs available, and it's running OOM. Also, we're loading this in a DDP setting, which means that for each GPU, I need to spawn a high number of processes to match the preprocessing fingerprint.

Instead what we suggest:

- Allow loading shards sequentially, sharing the same fingerprint as the multiprocessed version, in order to leverage multiprocessing when we actually generate the cache, and drop it when loading from cache.

## Current issues

~I'm having a hard time making fingerprints match. For some reason, the multiprocessing and the sequential versions generate two different hashes.~

**EDIT**: It turns out that the multiprocessing and sequential versions have different `transform` values for fingerprinting (see `fingerprint_transform`) when running `_map_single`:

- sequential : `datasets.arrow_dataset.Dataset._map_single`

- multiprocessing: `datasets.arrow_dataset._map_single`

This discrepancy is caused by multiprocessing pickling the transform function: the pickled function doesn't seem to keep the `Dataset` class hierarchy. I'm still unclear on why `func.__qualname__` isn't handled correctly in multiprocessing, but replacing `__qualname__` with `__name__` fixes the issue.

## What was done

~We try to prevent the usage of multiprocessing when loading a dataset. Instead we load all cached shards sequentially.~

I couldn't find a nice way to obtain the cache file names and check that they all exist before deciding whether or not to use the multiprocessing flow. Instead, I expose an optional boolean `sequential` argument in the `map` method.
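One way to approach the "check that they all exist" step, sketched under assumptions: when `map` runs with `num_proc`, each worker writes its own cache file, whose name is the `cache_file_name` with a per-rank suffix inserted before the extension, as controlled by the `suffix_template` parameter of `Dataset.map`. The helpers `shard_cache_files` and `all_shards_cached` are hypothetical. They only compute and check those names; they do not derive the fingerprint-based default cache file name, which is the hard part described above.

```python
import os

# Default value of the `suffix_template` parameter of `Dataset.map` (assumption:
# check the documentation of the installed `datasets` version)
DEFAULT_SUFFIX_TEMPLATE = "_{rank:05d}_of_{num_proc:05d}"


def shard_cache_files(cache_file_name, num_proc, suffix_template=DEFAULT_SUFFIX_TEMPLATE):
    """Per-process cache file names: the formatted suffix goes before the extension."""
    base, ext = os.path.splitext(cache_file_name)
    return [
        base + suffix_template.format(rank=rank, num_proc=num_proc) + ext
        for rank in range(num_proc)
    ]


def all_shards_cached(cache_file_name, num_proc, suffix_template=DEFAULT_SUFFIX_TEMPLATE):
    """True only if every shard written by an earlier `num_proc` run exists on disk."""
    return all(
        os.path.exists(path)
        for path in shard_cache_files(cache_file_name, num_proc, suffix_template)
    )
```

If `all_shards_cached(...)` returns `True`, the shards could be read one by one without spawning workers, for instance with `concatenate_datasets([Dataset.from_file(path) for path in shard_cache_files(...)])`. Whether the result should carry the same fingerprint as the multiprocessed `map` output is a separate question that this sketch does not address.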

## TODO

- [x] Check that the multiprocessed version and the sequential version produce the same output

- [x] Check that sequential can load multiprocessed

- [x] Check that multiprocessed can load sequential

## Test

```python

from datasets import load_dataset
import random


def process(batch, rng):
    length = len(batch["text"])
    return {**batch, "processed_text": [f"PROCESSED {rng.random()}" for _ in range(length)]}


dataset = load_dataset("stas/openwebtext-10k", split="train")
print(dataset.column_names)
print(type(dataset))

rng = random.Random(42)
dataset1 = dataset.map(process, batched=True, batch_size=50, num_proc=4, fn_kwargs={"rng": rng})

# This one should be loaded from cache
# (`sequential` is the new argument proposed in this PR)
rng = random.Random(42)
dataset2 = dataset.map(process, batched=True, batch_size=50, num_proc=4, fn_kwargs={"rng": rng}, sequential=True)

# Just to check that the random generator was correct
print(dataset1[-1]["processed_text"])
print(dataset2[-1]["processed_text"])

```

## Other solutions

I chose to load everything sequentially, but we could probably find a way to load shards in parallel using a different number of workers (essentially, this would be an argument not used for fingerprinting, allowing us to load `m` shards using `n` processes, which would be very useful when the same dataset has to be loaded on two different setups and we still want to leverage the cache).

We could also use an environment variable, similar to `TOKENIZERS_PARALLELISM`, as this seems generally setup-related (though this changes slightly if we use multiprocessing).

cc @lhoestq (since I had previously asked you about `num_proc` being used for fingerprinting). I don't know if this is acceptable. | 42 | text: Prevent .map from using multiprocessing when loading from cache

## Context

In our setup, we use different setups for training and for preprocessing datasets. We can usually obtain a high number of CPUs for preprocessing, which allows us to use `num_proc`; however, we can't use as many during the training phase. Currently, if we use `num_proc={whatever the preprocessing value was}`, we load from cache, but we get:

```

Traceback (most recent call last):
  File "lib/python3.8/site-packages/multiprocess/pool.py", line 131, in worker
    put((job, i, result))
  File "lib/python3.8/site-packages/multiprocess/queues.py", line 371, in put
    self._writer.send_bytes(obj)
  File "lib/python3.8/site-packages/multiprocess/connection.py", line 203, in send_bytes
    self._send_bytes(m[offset:offset + size])
  File "lib/python3.8/site-packages/multiprocess/connection.py", line 414, in _send_bytes
    self._send(header + buf)
  File "lib/python3.8/site-packages/multiprocess/connection.py", line 371, in _send
    n = write(self._handle, buf)
BrokenPipeError: [Errno 32] Broken pipe

```

Our current guess is that we're spawning too many processes compared to the number of CPUs available, and it's running OOM. Also, we're loading this in a DDP setting, which means that for each GPU, I need to spawn a high number of processes to match the preprocessing fingerprint.

Instead what we suggest:

- Allow loading shards sequentially, sharing the same fingerprint as the multiprocessed version, in order to leverage multiprocessing when we actually generate the cache, and drop it when loading from cache.

## Current issues

~I'm having a hard time making fingerprints match. For some reason, the multiprocessing and the sequential versions generate two different hashes.~

**EDIT**: It turns out that the multiprocessing and sequential versions have different `transform` values for fingerprinting (see `fingerprint_transform`) when running `_map_single`:

- sequential : `datasets.arrow_dataset.Dataset._map_single`

- multiprocessing: `datasets.arrow_dataset._map_single`

This discrepancy is caused by multiprocessing pickling the transform function: the pickled function doesn't seem to keep the `Dataset` class hierarchy. I'm still unclear on why `func.__qualname__` isn't handled correctly under multiprocessing, but replacing `__qualname__` with `__name__` fixes the issue.

## What was done

~We try to prevent the usage of multiprocessing when loading a dataset. Instead we load all cached shards sequentially.~

I couldn't find a clean way to obtain each shard's cached_file_name and check that they all exist before deciding whether to use the multiprocessing flow. Instead, I expose an optional boolean `sequential` argument in the `map` method.

## TODO

- [x] Check that the multiprocessed version and the sequential version produce the same output

- [x] Check that sequential can load multiprocessed

- [x] Check that multiprocessed can load sequential

## Test

```python
import random

from datasets import load_dataset


def process(batch, rng):
    length = len(batch["text"])
    return {**batch, "processed_text": [f"PROCESSED {rng.random()}" for _ in range(length)]}


dataset = load_dataset("stas/openwebtext-10k", split="train")
print(dataset.column_names)
print(type(dataset))

rng = random.Random(42)
dataset1 = dataset.map(process, batched=True, batch_size=50, num_proc=4, fn_kwargs={"rng": rng})

# This one should be loaded from cache (`sequential=True` is the argument added in this PR)
rng = random.Random(42)
dataset2 = dataset.map(process, batched=True, batch_size=50, num_proc=4, fn_kwargs={"rng": rng}, sequential=True)

# Just to check that the random generator was correct
print(dataset1[-1]["processed_text"])
print(dataset2[-1]["processed_text"])
```

## Other solutions

I chose to load everything sequentially, but we could probably find a way to load shards in parallel using a different number of workers. Essentially, this would be an argument not used for fingerprinting that allows loading `m` shards using `n` processes, which would be very useful when the same dataset has to be loaded on two different setups and we still want to leverage the cache.
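One way to picture the `m` shards / `n` processes idea: cache file names depend only on the shard count `m`, and a separate worker count `n`, kept out of the fingerprint, controls how the files are read back. This is a hypothetical sketch: `shard_cache_files` and `load_shards` are made-up helpers, the naming scheme is not the one `datasets` uses, and a thread pool is used for simplicity (a process pool would also work as long as `load_fn` is picklable).

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")


def shard_cache_files(base_fingerprint: str, num_shards: int) -> List[str]:
    # Hypothetical naming: depends on the shard count `m` (the num_proc used at
    # preprocessing time), never on how many workers later read the files.
    names = []
    for rank in range(num_shards):
        key = f"{base_fingerprint}-{rank}-of-{num_shards}".encode()
        names.append(f"cached-{hashlib.sha256(key).hexdigest()[:16]}.arrow")
    return names


def load_shards(paths: List[str], load_fn: Callable[[str], T], num_workers: int = 1) -> List[T]:
    # num_workers <= 1 is the fully sequential path proposed in this PR.
    if num_workers <= 1:
        return [load_fn(path) for path in paths]
    # Executor.map yields results in input order, so shard order is preserved.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(load_fn, paths))
```

With `datasets`, `load_fn` could be something like `Dataset.from_file`, with the loaded shards combined via `concatenate_datasets`; `num_workers=1` reproduces the sequential loading from this PR.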

We could also use an environment variable, similar to `TOKENIZERS_PARALLELISM`, since this seems to be mostly setup-related (though this changes slightly if we use multiprocessing).
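A minimal sketch of the environment-variable alternative, assuming a made-up variable name `DATASETS_SEQUENTIAL_CACHE_LOAD` (it does not exist in `datasets`) and letting an explicit `sequential` argument take precedence over the environment:

```python
import os
from typing import Optional

# Hypothetical variable name, used only for this sketch.
ENV_VAR = "DATASETS_SEQUENTIAL_CACHE_LOAD"


def resolve_sequential(sequential: Optional[bool] = None) -> bool:
    # An explicit argument to `map` wins; otherwise fall back to the environment,
    # in the spirit of TOKENIZERS_PARALLELISM.
    if sequential is not None:
        return sequential
    value = os.environ.get(ENV_VAR, "false").strip().lower()
    return value in {"1", "true", "yes", "on"}
```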

cc @lhoestq (since I previously asked you about `num_proc` being used for fingerprinting). I don't know if this is acceptable.

Hi @thomasw21, yes, you are right: those failing tests were fixed in #2779.

Would you mind merging the current upstream master branch and pushing again?

```
git checkout sequential_map_when_cached
git fetch upstream master
git merge upstream/master
git push -u origin sequential_map_when_cached
``` |

https://github.com/huggingface/datasets/pull/2774 | Prevent .map from using multiprocessing when loading from cache | Thanks for working on this ! I'm sure we can figure something out ;)

Currently, `map` starts a process to apply the map function to each shard. If the shard has already been processed, the spawned process loads the processed shard from the cache and returns it.

I think we should be able to simply not start a process if a shard is already processed and cached.

This way:

- you won't need to specify `sequential=True`

- it won't create new processes if the dataset is already processed and cached

- it will properly reload each processed shard that is cached

To decide whether a new process must be started for a shard, you can use the `update_fingerprint` function from fingerprint.py to compute the expected fingerprint of the processed shard.

Then, if the shard has already been processed, there will be a cache file named `cached-<new_fingerprint>.arrow` and you can load it with

```python
Dataset.from_file(path_to_cache_file, info=self.info, split=self.split)
```
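A minimal sketch of that control flow, assuming the expected per-shard fingerprints have already been computed (in the real code they would come from `update_fingerprint`, whose exact call is not reproduced here). `plan_shards` and `workers_needed` are hypothetical helpers: cached shards would be loaded with `Dataset.from_file` as shown above, and only the missing ones sent to a pool.

```python
import os
from typing import Dict, List, Tuple


def plan_shards(shard_fingerprints: List[str], cache_dir: str) -> Tuple[Dict[int, str], List[int]]:
    """Split shards into those with an existing cache file and those still to process.

    `shard_fingerprints[i]` is the expected fingerprint of processed shard i.
    """
    cached: Dict[int, str] = {}
    missing: List[int] = []
    for index, fingerprint in enumerate(shard_fingerprints):
        # Cache files are named `cached-<new_fingerprint>.arrow`.
        path = os.path.join(cache_dir, f"cached-{fingerprint}.arrow")
        if os.path.exists(path):
            cached[index] = path
        else:
            missing.append(index)
    return cached, missing


def workers_needed(num_proc: int, missing: List[int]) -> int:
    # Only shards without a cache file need a process; 0 means no pool at all.
    return min(num_proc, len(missing))
```

When every shard is cached, `workers_needed` returns 0 and no process is started, which is exactly the case that caused the `BrokenPipeError` during training.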

Let me know if that makes sense !