| status | repo_name | repo_url | issue_id | title | body | issue_url | pull_url | before_fix_sha | after_fix_sha | report_datetime | language | commit_datetime | updated_file | file_content |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

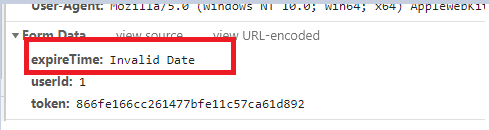

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 5,511 | [Feature][JsonSplit-api]schedule update interface | from #5498

Remove the request parameter workerGroupId, including the front end and controller interface | https://github.com/apache/dolphinscheduler/issues/5511 | https://github.com/apache/dolphinscheduler/pull/5761 | d382a7ba8c454b41944958c6e42692843a765234 | cfa22d7c89bcd8e35b8a286b39b67b9b36b3b4dc | "2021-05-18T13:58:16Z" | java | "2021-07-07T10:15:19Z" | dolphinscheduler-api/src/test/java/org/apache/dolphinscheduler/api/controller/SchedulerControllerTest.java | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.api.controller;

import static org.mockito.ArgumentMatchers.isA;

import static org.springframework.test.web.servlet.request.MockMvcRequestBuilders.get;

import static org.springframework.test.web.servlet.request.MockMvcRequestBuilders.post;

import static org.springframework.test.web.servlet.result.MockMvcResultMatchers.content;

import static org.springframework.test.web.servlet.result.MockMvcResultMatchers.status;

import java.util.HashMap;

import java.util.Map;

import org.apache.dolphinscheduler.api.enums.Status;

import org.apache.dolphinscheduler.api.service.SchedulerService;

import org.apache.dolphinscheduler.api.utils.Result;

import org.apache.dolphinscheduler.common.Constants;

import org.apache.dolphinscheduler.common.enums.FailureStrategy;

import org.apache.dolphinscheduler.common.enums.Priority;

import org.apache.dolphinscheduler.common.enums.WarningType;

import org.apache.dolphinscheduler.common.utils.JSONUtils;

import org.apache.dolphinscheduler.dao.entity.User;

import org.junit.Assert;

import org.junit.Test;

import org.mockito.Mockito;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.boot.test.mock.mockito.MockBean;

import org.springframework.http.MediaType;

import org.springframework.test.web.servlet.MvcResult;

import org.springframework.util.LinkedMultiValueMap;

import org.springframework.util.MultiValueMap;

/**

* scheduler controller test

*/

public class SchedulerControllerTest extends AbstractControllerTest {

private static final Logger logger = LoggerFactory.getLogger(SchedulerControllerTest.class);

@MockBean

private SchedulerService schedulerService;

@Test

public void testCreateSchedule() throws Exception {

MultiValueMap<String, String> paramsMap = new LinkedMultiValueMap<>();

paramsMap.add("processDefinitionCode","40");

paramsMap.add("schedule","{'startTime':'2019-12-16 00:00:00','endTime':'2019-12-17 00:00:00','crontab':'0 0 6 * * ? *'}");

paramsMap.add("warningType",String.valueOf(WarningType.NONE));

paramsMap.add("warningGroupId","1");

paramsMap.add("failureStrategy",String.valueOf(FailureStrategy.CONTINUE));

paramsMap.add("receivers","");

paramsMap.add("receiversCc","");

paramsMap.add("workerGroupId","1");

paramsMap.add("processInstancePriority",String.valueOf(Priority.HIGH));

Map<String, Object> serviceResult = new HashMap<>();

putMsg(serviceResult, Status.SUCCESS);

serviceResult.put(Constants.DATA_LIST, 1);

Mockito.when(schedulerService.insertSchedule(isA(User.class), isA(Long.class), isA(Long.class),

isA(String.class), isA(WarningType.class), isA(int.class), isA(FailureStrategy.class),

isA(Priority.class), isA(String.class))).thenReturn(serviceResult);

MvcResult mvcResult = mockMvc.perform(post("/projects/{projectCode}/schedule/create",123)

.header(SESSION_ID, sessionId)

.params(paramsMap))

.andExpect(status().isCreated())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

@Test

public void testUpdateSchedule() throws Exception {

MultiValueMap<String, String> paramsMap = new LinkedMultiValueMap<>();

paramsMap.add("id","37");

paramsMap.add("schedule","{'startTime':'2019-12-16 00:00:00','endTime':'2019-12-17 00:00:00','crontab':'0 0 7 * * ? *'}");

paramsMap.add("warningType",String.valueOf(WarningType.NONE));

paramsMap.add("warningGroupId","1");

paramsMap.add("failureStrategy",String.valueOf(FailureStrategy.CONTINUE));

paramsMap.add("receivers","");

paramsMap.add("receiversCc","");

paramsMap.add("workerGroupId","1");

paramsMap.add("processInstancePriority",String.valueOf(Priority.HIGH));

MvcResult mvcResult = mockMvc.perform(post("/projects/{projectName}/schedule/update","cxc_1113")

.header(SESSION_ID, sessionId)

.params(paramsMap))

.andExpect(status().isOk())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

@Test

public void testOnline() throws Exception {

MultiValueMap<String, String> paramsMap = new LinkedMultiValueMap<>();

paramsMap.add("id","37");

MvcResult mvcResult = mockMvc.perform(post("/projects/{projectName}/schedule/online","cxc_1113")

.header(SESSION_ID, sessionId)

.params(paramsMap))

.andExpect(status().isOk())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

@Test

public void testOffline() throws Exception {

MultiValueMap<String, String> paramsMap = new LinkedMultiValueMap<>();

paramsMap.add("id","28");

MvcResult mvcResult = mockMvc.perform(post("/projects/{projectName}/schedule/offline","cxc_1113")

.header(SESSION_ID, sessionId)

.params(paramsMap))

.andExpect(status().isOk())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

@Test

public void testQueryScheduleListPaging() throws Exception {

MultiValueMap<String, String> paramsMap = new LinkedMultiValueMap<>();

paramsMap.add("processDefinitionId","40");

paramsMap.add("searchVal","test");

paramsMap.add("pageNo","1");

paramsMap.add("pageSize","30");

MvcResult mvcResult = mockMvc.perform(get("/projects/{projectName}/schedule/list-paging","cxc_1113")

.header(SESSION_ID, sessionId)

.params(paramsMap))

.andExpect(status().isOk())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

@Test

public void testQueryScheduleList() throws Exception {

MvcResult mvcResult = mockMvc.perform(post("/projects/{projectName}/schedule/list","cxc_1113")

.header(SESSION_ID, sessionId))

.andExpect(status().isOk())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

@Test

public void testPreviewSchedule() throws Exception {

MvcResult mvcResult = mockMvc.perform(post("/projects/{projectName}/schedule/preview","cxc_1113")

.header(SESSION_ID, sessionId)

.param("schedule","{'startTime':'2019-06-10 00:00:00','endTime':'2019-06-13 00:00:00','crontab':'0 0 3/6 * * ? *'}"))

.andExpect(status().isCreated())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

@Test

public void testDeleteScheduleById() throws Exception {

MultiValueMap<String, String> paramsMap = new LinkedMultiValueMap<>();

paramsMap.add("scheduleId","37");

MvcResult mvcResult = mockMvc.perform(get("/projects/{projectName}/schedule/delete","cxc_1113")

.header(SESSION_ID, sessionId)

.params(paramsMap))

.andExpect(status().isOk())

.andExpect(content().contentType(MediaType.APPLICATION_JSON_UTF8))

.andReturn();

Result result = JSONUtils.parseObject(mvcResult.getResponse().getContentAsString(), Result.class);

Assert.assertEquals(Status.SUCCESS.getCode(),result.getCode().intValue());

logger.info(mvcResult.getResponse().getContentAsString());

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 5,511 | [Feature][JsonSplit-api]schedule update interface | from #5498

Remove the request parameter workerGroupId, including the front end and controller interface | https://github.com/apache/dolphinscheduler/issues/5511 | https://github.com/apache/dolphinscheduler/pull/5761 | d382a7ba8c454b41944958c6e42692843a765234 | cfa22d7c89bcd8e35b8a286b39b67b9b36b3b4dc | "2021-05-18T13:58:16Z" | java | "2021-07-07T10:15:19Z" | dolphinscheduler-ui/src/js/conf/home/store/dag/actions.js | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import _ from 'lodash'

import io from '@/module/io'

import { tasksState } from '@/conf/home/pages/dag/_source/config'

// delete 'definitionList' from tasks

const deleteDefinitionList = (tasks) => {

const newTasks = []

tasks.forEach(item => {

const newItem = Object.assign({}, item)

if (newItem.dependence && newItem.dependence.dependTaskList) {

newItem.dependence.dependTaskList.forEach(dependTaskItem => {

if (dependTaskItem.dependItemList) {

dependTaskItem.dependItemList.forEach(dependItem => {

Reflect.deleteProperty(dependItem, 'definitionList')

})

}

})

}

newTasks.push(newItem)

})

return newTasks

}

export default {

/**

* Task status acquisition

*/

getTaskState ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/task-list-by-process-id`, {

processInstanceId: payload

}, res => {

const arr = _.map(res.data.taskList, v => {

return _.cloneDeep(_.assign(tasksState[v.state], {

name: v.name,

stateId: v.id,

dependentResult: v.dependentResult

}))

})

resolve({

list: arr,

processInstanceState: res.data.processInstanceState,

taskList: res.data.taskList

})

}).catch(e => {

reject(e)

})

})

},

/**

* Update process definition status

*/

editProcessState ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/process/release`, {

processId: payload.processId,

releaseState: payload.releaseState

}, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* get process definition versions pagination info

*/

getProcessDefinitionVersionsPage ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/versions`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* switch process definition version

*/

switchProcessDefinitionVersion ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/version/switch`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* delete process definition version

*/

deleteProcessDefinitionVersion ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/version/delete`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Update process instance status

*/

editExecutorsState ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/executors/execute`, {

processInstanceId: payload.processInstanceId,

executeType: payload.executeType

}, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Verify that the DAG name exists

*/

verifDAGName ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/verify-name`, {

name: payload

}, res => {

state.name = payload

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Get process definition DAG diagram details

*/

getProcessDetails ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/select-by-id`, {

processId: payload

}, res => {

// process definition code

state.code = res.data.code

// version

state.version = res.data.version

// name

state.name = res.data.name

// description

state.description = res.data.description

// connects

state.connects = JSON.parse(res.data.connects)

// locations

state.locations = JSON.parse(res.data.locations)

// Process definition

const processDefinitionJson = JSON.parse(res.data.processDefinitionJson)

// tasks info

state.tasks = processDefinitionJson.tasks

// tasks cache

state.cacheTasks = {}

processDefinitionJson.tasks.forEach(v => {

state.cacheTasks[v.id] = v

})

// global params

state.globalParams = processDefinitionJson.globalParams

// timeout

state.timeout = processDefinitionJson.timeout

state.tenantId = processDefinitionJson.tenantId

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* Copy process definitions to the target project

*/

copyProcess ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/process/copy`, {

processDefinitionIds: payload.processDefinitionIds,

targetProjectId: payload.targetProjectId

}, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Move process definitions to the target project

*/

moveProcess ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/process/move`, {

processDefinitionIds: payload.processDefinitionIds,

targetProjectId: payload.targetProjectId

}, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Get all the items created by the logged in user

*/

getAllItems ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('projects/created-and-authorized-project', {}, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Get the process instance DAG diagram details

*/

getInstancedetail ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/select-by-id`, {

processInstanceId: payload

}, res => {

// code

state.code = res.data.processDefinitionCode

// version

state.version = res.data.processDefinitionVersion

// name

state.name = res.data.name

// desc

state.description = res.data.description

// connects

state.connects = JSON.parse(res.data.connects)

// locations

state.locations = JSON.parse(res.data.locations)

// process instance

const processInstanceJson = JSON.parse(res.data.processInstanceJson)

// tasks info

state.tasks = processInstanceJson.tasks

// tasks cache

state.cacheTasks = {}

processInstanceJson.tasks.forEach(v => {

state.cacheTasks[v.id] = v

})

// global params

state.globalParams = processInstanceJson.globalParams

// timeout

state.timeout = processInstanceJson.timeout

state.tenantId = processInstanceJson.tenantId

// startup parameters

state.startup = _.assign(state.startup, _.pick(res.data, ['commandType', 'failureStrategy', 'processInstancePriority', 'workerGroup', 'warningType', 'warningGroupId', 'receivers', 'receiversCc']))

state.startup.commandParam = JSON.parse(res.data.commandParam)

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* Create process definition

*/

saveDAGchart ({ state }, payload) {

return new Promise((resolve, reject) => {

const data = {

globalParams: state.globalParams,

tasks: deleteDefinitionList(state.tasks),

tenantId: state.tenantId,

timeout: state.timeout

}

io.post(`projects/${state.projectName}/process/save`, {

processDefinitionJson: JSON.stringify(data),

name: _.trim(state.name),

description: _.trim(state.description),

locations: JSON.stringify(state.locations),

connects: JSON.stringify(state.connects)

}, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Process definition update

*/

updateDefinition ({ state }, payload) {

return new Promise((resolve, reject) => {

const data = {

globalParams: state.globalParams,

tasks: deleteDefinitionList(state.tasks),

tenantId: state.tenantId,

timeout: state.timeout

}

io.post(`projects/${state.projectName}/process/update`, {

processDefinitionJson: JSON.stringify(data),

locations: JSON.stringify(state.locations),

connects: JSON.stringify(state.connects),

name: _.trim(state.name),

description: _.trim(state.description),

id: payload,

releaseState: state.releaseState

}, res => {

resolve(res)

state.isEditDag = false

}).catch(e => {

reject(e)

})

})

},

/**

* Process instance update

*/

updateInstance ({ state }, payload) {

return new Promise((resolve, reject) => {

const data = {

globalParams: state.globalParams,

tasks: state.tasks,

tenantId: state.tenantId,

timeout: state.timeout

}

io.post(`projects/${state.projectName}/instance/update`, {

processInstanceJson: JSON.stringify(data),

locations: JSON.stringify(state.locations),

connects: JSON.stringify(state.connects),

processInstanceId: payload,

syncDefine: state.syncDefine

}, res => {

resolve(res)

state.isEditDag = false

}).catch(e => {

reject(e)

})

})

},

/**

* Get a list of process definitions (sub-workflow usage is not paged)

*/

getProcessList ({ state }, payload) {

return new Promise((resolve, reject) => {

if (state.processListS.length) {

resolve()

return

}

io.get(`projects/${state.projectName}/process/list`, payload, res => {

state.processListS = res.data

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* Get a list of process definitions (list page usage with pagination)

*/

getProcessListP ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/list-paging`, payload, res => {

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* Get a list of projects

*/

getProjectList ({ state }, payload) {

return new Promise((resolve, reject) => {

if (state.projectListS.length) {

resolve()

return

}

io.get('projects/query-project-list', payload, res => {

state.projectListS = res.data

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* Get a list of process definitions by project id

*/

getProcessByProjectId ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/queryProcessDefinitionAllByProjectId`, payload, res => {

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* get datasource

*/

getDatasourceList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('datasources/list', {

type: payload

}, res => {

resolve(res)

}).catch(res => {

reject(res)

})

})

},

/**

* get resources

*/

getResourcesList ({ state }) {

return new Promise((resolve, reject) => {

if (state.resourcesListS.length) {

resolve()

return

}

io.get('resources/list', {

type: 'FILE'

}, res => {

state.resourcesListS = res.data

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* get jar

*/

getResourcesListJar ({ state }) {

return new Promise((resolve, reject) => {

if (state.resourcesListJar.length) {

resolve()

return

}

io.get('resources/list/jar', {

type: 'FILE'

}, res => {

state.resourcesListJar = res.data

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* Get process instance

*/

getProcessInstance ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/list-paging`, payload, res => {

state.instanceListS = res.data.totalList

resolve(res.data)

}).catch(res => {

reject(res)

})

})

},

/**

* Get alert group list

*/

getNotifyGroupList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('alert-group/list', res => {

state.notifyGroupListS = _.map(res.data, v => {

return {

id: v.id,

code: v.groupName,

disabled: false

}

})

resolve(_.cloneDeep(state.notifyGroupListS))

}).catch(res => {

reject(res)

})

})

},

/**

* Process definition startup interface

*/

processStart ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/executors/start-process-instance`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* View log

*/

getLog ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('log/detail', payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Get the process instance id according to the process definition id

* @param taskId

*/

getSubProcessId ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/select-sub-process`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Called before the process definition starts

*/

getStartCheck ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/executors/start-check`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Create timing

*/

createSchedule ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectCode}/schedule/create`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Preview timing

*/

previewSchedule ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/schedule/preview`, payload, res => {

resolve(res.data)

// alert(res.data)

}).catch(e => {

reject(e)

})

})

},

/**

* Timing list paging

*/

getScheduleList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/schedule/list-paging`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Take schedule offline

*/

scheduleOffline ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/schedule/offline`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Bring schedule online

*/

scheduleOnline ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/schedule/online`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Edit timing

*/

updateSchedule ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/schedule/update`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Delete process instance

*/

deleteInstance ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/delete`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Batch delete process instance

*/

batchDeleteInstance ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/batch-delete`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Delete definition

*/

deleteDefinition ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/delete`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Batch delete definition

*/

batchDeleteDefinition ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/batch-delete`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* export definition

*/

exportDefinition ({ state }, payload) {

const downloadBlob = (data, fileNameS = 'json') => {

if (!data) {

return

}

const blob = new Blob([data])

const fileName = `${fileNameS}.json`

if ('download' in document.createElement('a')) { // not an IE browser

const url = window.URL.createObjectURL(blob)

const link = document.createElement('a')

link.style.display = 'none'

link.href = url

link.setAttribute('download', fileName)

document.body.appendChild(link)

link.click()

document.body.removeChild(link) // remove the element once the download has been triggered

window.URL.revokeObjectURL(url) // release the blob object URL

} else { // IE 10+

window.navigator.msSaveBlob(blob, fileName)

}

}

io.get(`projects/${state.projectName}/process/export`, { processDefinitionIds: payload.processDefinitionIds }, res => {

downloadBlob(res, payload.fileName)

}, e => {

}, {

responseType: 'blob'

})

},

/**

* Process instance get variable

*/

getViewvariables ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/view-variables`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Get UDF functions based on data source

*/

getUdfList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('resources/udf-func/list', payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Query task instance list

*/

getTaskInstanceList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/task-instance/list-paging`, payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

},

/**

* Force fail/kill/need_fault_tolerance task success

*/

forceTaskSuccess ({ state }, payload) {

return new Promise((resolve, reject) => {

io.post(`projects/${state.projectName}/task-instance/force-success`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

/**

* Query task record list

*/

getTaskRecordList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('projects/task-record/list-paging', payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

},

/**

* Query history task record list

*/

getHistoryTaskRecordList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('projects/task-record/history-list-paging', payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

},

/**

* tree chart

*/

getViewTree ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/view-tree`, payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

},

/**

* gantt chart

*/

getViewGantt ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/instance/view-gantt`, payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

},

/**

* Query task node list

*/

getProcessTasksList ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/gen-task-list`, payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

},

getTaskListDefIdAll ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/process/get-task-list`, payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

},

/**

* remove timing

*/

deleteTiming ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get(`projects/${state.projectName}/schedule/delete`, payload, res => {

resolve(res)

}).catch(e => {

reject(e)

})

})

},

getResourceId ({ state }, payload) {

return new Promise((resolve, reject) => {

io.get('resources/queryResource', payload, res => {

resolve(res.data)

}).catch(e => {

reject(e)

})

})

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 5,763 | [Feature][JsonSplit-api]schedule online/offline interface | from #5498

Change the request parameter projectName to projectCode, including the front end and controller interface | https://github.com/apache/dolphinscheduler/issues/5763 | https://github.com/apache/dolphinscheduler/pull/5764 | cfa22d7c89bcd8e35b8a286b39b67b9b36b3b4dc | e4f427a8d8bf99754698e054845291a5223c2ea6 | "2021-07-07T11:37:00Z" | java | "2021-07-08T05:59:40Z" | dolphinscheduler-api/src/main/java/org/apache/dolphinscheduler/api/controller/SchedulerController.java | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.api.controller;

import static org.apache.dolphinscheduler.api.enums.Status.CREATE_SCHEDULE_ERROR;

import static org.apache.dolphinscheduler.api.enums.Status.DELETE_SCHEDULE_CRON_BY_ID_ERROR;

import static org.apache.dolphinscheduler.api.enums.Status.OFFLINE_SCHEDULE_ERROR;

import static org.apache.dolphinscheduler.api.enums.Status.PREVIEW_SCHEDULE_ERROR;

import static org.apache.dolphinscheduler.api.enums.Status.PUBLISH_SCHEDULE_ONLINE_ERROR;

import static org.apache.dolphinscheduler.api.enums.Status.QUERY_SCHEDULE_LIST_ERROR;

import static org.apache.dolphinscheduler.api.enums.Status.QUERY_SCHEDULE_LIST_PAGING_ERROR;

import static org.apache.dolphinscheduler.api.enums.Status.UPDATE_SCHEDULE_ERROR;

import static org.apache.dolphinscheduler.common.Constants.SESSION_USER;

import org.apache.dolphinscheduler.api.aspect.AccessLogAnnotation;

import org.apache.dolphinscheduler.api.enums.Status;

import org.apache.dolphinscheduler.api.exceptions.ApiException;

import org.apache.dolphinscheduler.api.service.SchedulerService;

import org.apache.dolphinscheduler.api.utils.Result;

import org.apache.dolphinscheduler.common.Constants;

import org.apache.dolphinscheduler.common.enums.FailureStrategy;

import org.apache.dolphinscheduler.common.enums.Priority;

import org.apache.dolphinscheduler.common.enums.ReleaseState;

import org.apache.dolphinscheduler.common.enums.WarningType;

import org.apache.dolphinscheduler.common.utils.ParameterUtils;

import org.apache.dolphinscheduler.dao.entity.User;

import java.util.Map;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.http.HttpStatus;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.PathVariable;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestAttribute;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.ResponseStatus;

import org.springframework.web.bind.annotation.RestController;

import io.swagger.annotations.Api;

import io.swagger.annotations.ApiImplicitParam;

import io.swagger.annotations.ApiImplicitParams;

import io.swagger.annotations.ApiOperation;

import io.swagger.annotations.ApiParam;

import springfox.documentation.annotations.ApiIgnore;

/**

* scheduler controller

*/

@Api(tags = "SCHEDULER_TAG")

@RestController

@RequestMapping("/projects/{projectCode}/schedule")

public class SchedulerController extends BaseController {

public static final String DEFAULT_WARNING_TYPE = "NONE";

public static final String DEFAULT_NOTIFY_GROUP_ID = "1";

public static final String DEFAULT_FAILURE_POLICY = "CONTINUE";

public static final String DEFAULT_PROCESS_INSTANCE_PRIORITY = "MEDIUM";

@Autowired

private SchedulerService schedulerService;

/**

* create schedule

*

* @param loginUser login user

* @param projectCode project code

* @param processDefinitionCode process definition code

* @param schedule scheduler

* @param warningType warning type

* @param warningGroupId warning group id

* @param failureStrategy failure strategy

* @param processInstancePriority process instance priority

* @param workerGroup worker group

* @return create result code

*/

@ApiOperation(value = "createSchedule", notes = "CREATE_SCHEDULE_NOTES")

@ApiImplicitParams({

@ApiImplicitParam(name = "processDefinitionCode", value = "PROCESS_DEFINITION_CODE", required = true, dataType = "Long", example = "100"),

@ApiImplicitParam(name = "schedule", value = "SCHEDULE", dataType = "String",

example = "{'startTime':'2019-06-10 00:00:00','endTime':'2019-06-13 00:00:00','timezoneId':'America/Phoenix','crontab':'0 0 3/6 * * ? *'}"),

@ApiImplicitParam(name = "warningType", value = "WARNING_TYPE", type = "WarningType"),

@ApiImplicitParam(name = "warningGroupId", value = "WARNING_GROUP_ID", dataType = "Int", example = "100"),

@ApiImplicitParam(name = "failureStrategy", value = "FAILURE_STRATEGY", type = "FailureStrategy"),

@ApiImplicitParam(name = "workerGroup", value = "WORKER_GROUP_ID", dataType = "String", example = "default"),

@ApiImplicitParam(name = "processInstancePriority", value = "PROCESS_INSTANCE_PRIORITY", type = "Priority"),

})

@PostMapping("/create")

@ResponseStatus(HttpStatus.CREATED)

@ApiException(CREATE_SCHEDULE_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result createSchedule(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@ApiParam(name = "projectCode", value = "PROJECT_CODE", required = true) @PathVariable long projectCode,

@RequestParam(value = "processDefinitionCode") long processDefinitionCode,

@RequestParam(value = "schedule") String schedule,

@RequestParam(value = "warningType", required = false, defaultValue = DEFAULT_WARNING_TYPE) WarningType warningType,

@RequestParam(value = "warningGroupId", required = false, defaultValue = DEFAULT_NOTIFY_GROUP_ID) int warningGroupId,

@RequestParam(value = "failureStrategy", required = false, defaultValue = DEFAULT_FAILURE_POLICY) FailureStrategy failureStrategy,

@RequestParam(value = "workerGroup", required = false, defaultValue = "default") String workerGroup,

@RequestParam(value = "processInstancePriority", required = false, defaultValue = DEFAULT_PROCESS_INSTANCE_PRIORITY) Priority processInstancePriority) {

Map<String, Object> result = schedulerService.insertSchedule(loginUser, projectCode, processDefinitionCode, schedule,

warningType, warningGroupId, failureStrategy, processInstancePriority, workerGroup);

return returnDataList(result);

}

/**

* update schedule

*

* @param loginUser login user

* @param projectCode project code

* @param id scheduler id

* @param schedule scheduler

* @param warningType warning type

* @param warningGroupId warning group id

* @param failureStrategy failure strategy

* @param workerGroup worker group

* @param processInstancePriority process instance priority

* @return update result code

*/

@ApiOperation(value = "updateSchedule", notes = "UPDATE_SCHEDULE_NOTES")

@ApiImplicitParams({

@ApiImplicitParam(name = "id", value = "SCHEDULE_ID", required = true, dataType = "Int", example = "100"),

@ApiImplicitParam(name = "schedule", value = "SCHEDULE", dataType = "String", example = "{'startTime':'2019-06-10 00:00:00','endTime':'2019-06-13 00:00:00','crontab':'0 0 3/6 * * ? *'}"),

@ApiImplicitParam(name = "warningType", value = "WARNING_TYPE", type = "WarningType"),

@ApiImplicitParam(name = "warningGroupId", value = "WARNING_GROUP_ID", dataType = "Int", example = "100"),

@ApiImplicitParam(name = "failureStrategy", value = "FAILURE_STRATEGY", type = "FailureStrategy"),

@ApiImplicitParam(name = "workerGroup", value = "WORKER_GROUP_ID", dataType = "String", example = "default"),

@ApiImplicitParam(name = "processInstancePriority", value = "PROCESS_INSTANCE_PRIORITY", type = "Priority"),

})

@PostMapping("/update")

@ApiException(UPDATE_SCHEDULE_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result updateSchedule(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@ApiParam(name = "projectCode", value = "PROJECT_CODE", required = true) @PathVariable long projectCode,

@RequestParam(value = "id") Integer id,

@RequestParam(value = "schedule") String schedule,

@RequestParam(value = "warningType", required = false, defaultValue = DEFAULT_WARNING_TYPE) WarningType warningType,

@RequestParam(value = "warningGroupId", required = false, defaultValue = DEFAULT_NOTIFY_GROUP_ID) int warningGroupId,

@RequestParam(value = "failureStrategy", required = false, defaultValue = "END") FailureStrategy failureStrategy,

@RequestParam(value = "workerGroup", required = false, defaultValue = "default") String workerGroup,

@RequestParam(value = "processInstancePriority", required = false) Priority processInstancePriority) {

Map<String, Object> result = schedulerService.updateSchedule(loginUser, projectCode, id, schedule,

warningType, warningGroupId, failureStrategy, processInstancePriority, workerGroup);

return returnDataList(result);

}

/**

* publish schedule: set schedule state to online

*

* @param loginUser login user

* @param projectName project name

* @param id scheduler id

* @return publish result code

*/

@ApiOperation(value = "online", notes = "ONLINE_SCHEDULE_NOTES")

@ApiImplicitParams({

@ApiImplicitParam(name = "id", value = "SCHEDULE_ID", required = true, dataType = "Int", example = "100")

})

@PostMapping("/online")

@ApiException(PUBLISH_SCHEDULE_ONLINE_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result online(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@ApiParam(name = "projectName", value = "PROJECT_NAME", required = true) @PathVariable String projectName,

@RequestParam("id") Integer id) {

Map<String, Object> result = schedulerService.setScheduleState(loginUser, projectName, id, ReleaseState.ONLINE);

return returnDataList(result);

}

/**

* offline schedule

*

* @param loginUser login user

* @param projectName project name

* @param id schedule id

* @return operation result code

*/

@ApiOperation(value = "offline", notes = "OFFLINE_SCHEDULE_NOTES")

@ApiImplicitParams({

@ApiImplicitParam(name = "id", value = "SCHEDULE_ID", required = true, dataType = "Int", example = "100")

})

@PostMapping("/offline")

@ApiException(OFFLINE_SCHEDULE_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result offline(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@ApiParam(name = "projectName", value = "PROJECT_NAME", required = true) @PathVariable String projectName,

@RequestParam("id") Integer id) {

Map<String, Object> result = schedulerService.setScheduleState(loginUser, projectName, id, ReleaseState.OFFLINE);

return returnDataList(result);

}

/**

* query schedule list paging

*

* @param loginUser login user

* @param projectName project name

* @param processDefinitionId process definition id

* @param pageNo page number

* @param pageSize page size

* @param searchVal search value

* @return schedule list page

*/

@ApiOperation(value = "queryScheduleListPaging", notes = "QUERY_SCHEDULE_LIST_PAGING_NOTES")

@ApiImplicitParams({

@ApiImplicitParam(name = "processDefinitionId", value = "PROCESS_DEFINITION_ID", required = true, dataType = "Int", example = "100"),

@ApiImplicitParam(name = "searchVal", value = "SEARCH_VAL", type = "String"),

@ApiImplicitParam(name = "pageNo", value = "PAGE_NO", dataType = "Int", example = "100"),

@ApiImplicitParam(name = "pageSize", value = "PAGE_SIZE", dataType = "Int", example = "100")

})

@GetMapping("/list-paging")

@ApiException(QUERY_SCHEDULE_LIST_PAGING_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result queryScheduleListPaging(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@ApiParam(name = "projectName", value = "PROJECT_NAME", required = true) @PathVariable String projectName,

@RequestParam Integer processDefinitionId,

@RequestParam(value = "searchVal", required = false) String searchVal,

@RequestParam("pageNo") Integer pageNo,

@RequestParam("pageSize") Integer pageSize) {

Map<String, Object> result = checkPageParams(pageNo, pageSize);

if (result.get(Constants.STATUS) != Status.SUCCESS) {

return returnDataListPaging(result);

}

searchVal = ParameterUtils.handleEscapes(searchVal);

result = schedulerService.querySchedule(loginUser, projectName, processDefinitionId, searchVal, pageNo, pageSize);

return returnDataListPaging(result);

}

/**

* delete schedule by id

*

* @param loginUser login user

* @param projectName project name

* @param scheduleId schedule id

* @return delete result code

*/

@ApiOperation(value = "deleteScheduleById", notes = "OFFLINE_SCHEDULE_NOTES")

@ApiImplicitParams({

@ApiImplicitParam(name = "scheduleId", value = "SCHEDULE_ID", required = true, dataType = "Int", example = "100")

})

@GetMapping(value = "/delete")

@ResponseStatus(HttpStatus.OK)

@ApiException(DELETE_SCHEDULE_CRON_BY_ID_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result deleteScheduleById(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@PathVariable String projectName,

@RequestParam("scheduleId") Integer scheduleId

) {

Map<String, Object> result = schedulerService.deleteScheduleById(loginUser, projectName, scheduleId);

return returnDataList(result);

}

/**

* query schedule list

*

* @param loginUser login user

* @param projectName project name

* @return schedule list

*/

@ApiOperation(value = "queryScheduleList", notes = "QUERY_SCHEDULE_LIST_NOTES")

@PostMapping("/list")

@ApiException(QUERY_SCHEDULE_LIST_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result queryScheduleList(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@ApiParam(name = "projectName", value = "PROJECT_NAME", required = true) @PathVariable String projectName) {

Map<String, Object> result = schedulerService.queryScheduleList(loginUser, projectName);

return returnDataList(result);

}

/**

* preview schedule

*

* @param loginUser login user

* @param projectName project name

* @param schedule schedule expression

* @return the next five fire times

*/

@ApiOperation(value = "previewSchedule", notes = "PREVIEW_SCHEDULE_NOTES")

@ApiImplicitParams({

@ApiImplicitParam(name = "schedule", value = "SCHEDULE", dataType = "String", example = "{'startTime':'2019-06-10 00:00:00','endTime':'2019-06-13 00:00:00','crontab':'0 0 3/6 * * ? *'}"),

})

@PostMapping("/preview")

@ResponseStatus(HttpStatus.CREATED)

@ApiException(PREVIEW_SCHEDULE_ERROR)

@AccessLogAnnotation(ignoreRequestArgs = "loginUser")

public Result previewSchedule(@ApiIgnore @RequestAttribute(value = SESSION_USER) User loginUser,

@ApiParam(name = "projectName", value = "PROJECT_NAME", required = true) @PathVariable String projectName,

@RequestParam(value = "schedule") String schedule

) {

Map<String, Object> result = schedulerService.previewSchedule(loginUser, projectName, schedule);

return returnDataList(result);

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 5,763 | [Feature][JsonSplit-api]schedule online/offline interface | from #5498

Change the request parameter projectName to projectCode,including the front end and controller interface | https://github.com/apache/dolphinscheduler/issues/5763 | https://github.com/apache/dolphinscheduler/pull/5764 | cfa22d7c89bcd8e35b8a286b39b67b9b36b3b4dc | e4f427a8d8bf99754698e054845291a5223c2ea6 | "2021-07-07T11:37:00Z" | java | "2021-07-08T05:59:40Z" | dolphinscheduler-api/src/main/java/org/apache/dolphinscheduler/api/service/SchedulerService.java | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.api.service;

import org.apache.dolphinscheduler.common.enums.FailureStrategy;

import org.apache.dolphinscheduler.common.enums.Priority;

import org.apache.dolphinscheduler.common.enums.ReleaseState;

import org.apache.dolphinscheduler.common.enums.WarningType;

import org.apache.dolphinscheduler.dao.entity.User;

import java.util.Map;

/**

* scheduler service

*/

public interface SchedulerService {

/**

* save schedule

*

* @param loginUser login user

* @param projectCode project code

* @param processDefineCode process definition code

* @param schedule scheduler

* @param warningType warning type

* @param warningGroupId warning group id

* @param failureStrategy failure strategy

* @param processInstancePriority process instance priority

* @param workerGroup worker group

* @return create result code

*/

Map<String, Object> insertSchedule(User loginUser,

long projectCode,

long processDefineCode,

String schedule,

WarningType warningType,

int warningGroupId,

FailureStrategy failureStrategy,

Priority processInstancePriority,

String workerGroup);

/**

* update schedule

*

* @param loginUser login user

* @param projectCode project code

* @param id scheduler id

* @param scheduleExpression scheduler

* @param warningType warning type

* @param warningGroupId warning group id

* @param failureStrategy failure strategy

* @param workerGroup worker group

* @param processInstancePriority process instance priority

* @return update result code

*/

Map<String, Object> updateSchedule(User loginUser,

long projectCode,

Integer id,

String scheduleExpression,

WarningType warningType,

int warningGroupId,

FailureStrategy failureStrategy,

Priority processInstancePriority,

String workerGroup);

/**

* set schedule online or offline

*

* @param loginUser login user

* @param projectName project name

* @param id scheduler id

* @param scheduleStatus schedule status

* @return publish result code

*/

Map<String, Object> setScheduleState(User loginUser,

String projectName,

Integer id,

ReleaseState scheduleStatus);

/**

* query schedule

*

* @param loginUser login user

* @param projectName project name

* @param processDefineId process definition id

* @param pageNo page number

* @param pageSize page size

* @param searchVal search value

* @return schedule list page

*/

Map<String, Object> querySchedule(User loginUser, String projectName, Integer processDefineId, String searchVal, Integer pageNo, Integer pageSize);

/**

* query schedule list

*

* @param loginUser login user

* @param projectName project name

* @return schedule list

*/

Map<String, Object> queryScheduleList(User loginUser, String projectName);

/**

* delete schedule

*

* @param projectId project id

* @param scheduleId schedule id

* @throws RuntimeException runtime exception

*/

void deleteSchedule(int projectId, int scheduleId);

/**

* delete schedule by id

*

* @param loginUser login user

* @param projectName project name

* @param scheduleId schedule id

* @return delete result code

*/

Map<String, Object> deleteScheduleById(User loginUser, String projectName, Integer scheduleId);

/**

* preview schedule

*

* @param loginUser login user

* @param projectName project name

* @param schedule schedule expression

* @return the next five fire times

*/

Map<String, Object> previewSchedule(User loginUser, String projectName, String schedule);

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 5,763 | [Feature][JsonSplit-api]schedule online/offline interface | from #5498

Change the request parameter projectName to projectCode,including the front end and controller interface | https://github.com/apache/dolphinscheduler/issues/5763 | https://github.com/apache/dolphinscheduler/pull/5764 | cfa22d7c89bcd8e35b8a286b39b67b9b36b3b4dc | e4f427a8d8bf99754698e054845291a5223c2ea6 | "2021-07-07T11:37:00Z" | java | "2021-07-08T05:59:40Z" | dolphinscheduler-api/src/main/java/org/apache/dolphinscheduler/api/service/impl/SchedulerServiceImpl.java | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.api.service.impl;

import org.apache.dolphinscheduler.api.dto.ScheduleParam;

import org.apache.dolphinscheduler.api.enums.Status;

import org.apache.dolphinscheduler.api.exceptions.ServiceException;

import org.apache.dolphinscheduler.api.service.ExecutorService;

import org.apache.dolphinscheduler.api.service.MonitorService;

import org.apache.dolphinscheduler.api.service.ProjectService;

import org.apache.dolphinscheduler.api.service.SchedulerService;

import org.apache.dolphinscheduler.api.utils.PageInfo;

import org.apache.dolphinscheduler.common.Constants;

import org.apache.dolphinscheduler.common.enums.FailureStrategy;

import org.apache.dolphinscheduler.common.enums.Priority;

import org.apache.dolphinscheduler.common.enums.ReleaseState;

import org.apache.dolphinscheduler.common.enums.UserType;

import org.apache.dolphinscheduler.common.enums.WarningType;

import org.apache.dolphinscheduler.common.model.Server;

import org.apache.dolphinscheduler.common.utils.DateUtils;

import org.apache.dolphinscheduler.common.utils.JSONUtils;

import org.apache.dolphinscheduler.common.utils.StringUtils;

import org.apache.dolphinscheduler.dao.entity.ProcessDefinition;

import org.apache.dolphinscheduler.dao.entity.Project;

import org.apache.dolphinscheduler.dao.entity.Schedule;

import org.apache.dolphinscheduler.dao.entity.User;

import org.apache.dolphinscheduler.dao.mapper.ProcessDefinitionMapper;

import org.apache.dolphinscheduler.dao.mapper.ProjectMapper;

import org.apache.dolphinscheduler.dao.mapper.ScheduleMapper;

import org.apache.dolphinscheduler.service.process.ProcessService;

import org.apache.dolphinscheduler.service.quartz.ProcessScheduleJob;

import org.apache.dolphinscheduler.service.quartz.QuartzExecutors;

import org.apache.dolphinscheduler.service.quartz.cron.CronUtils;

import java.text.ParseException;

import java.util.ArrayList;

import java.util.Date;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import org.quartz.CronExpression;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

import org.springframework.transaction.annotation.Transactional;

import com.baomidou.mybatisplus.core.metadata.IPage;

import com.baomidou.mybatisplus.extension.plugins.pagination.Page;

/**

* scheduler service impl

*/

@Service

public class SchedulerServiceImpl extends BaseServiceImpl implements SchedulerService {

private static final Logger logger = LoggerFactory.getLogger(SchedulerServiceImpl.class);

@Autowired

private ProjectService projectService;

@Autowired

private ExecutorService executorService;

@Autowired

private MonitorService monitorService;

@Autowired

private ProcessService processService;

@Autowired

private ScheduleMapper scheduleMapper;

@Autowired

private ProjectMapper projectMapper;

@Autowired

private ProcessDefinitionMapper processDefinitionMapper;

/**

* save schedule

*

* @param loginUser login user

* @param projectCode project code

* @param processDefineCode process definition code

* @param schedule scheduler

* @param warningType warning type

* @param warningGroupId warning group id

* @param failureStrategy failure strategy

* @param processInstancePriority process instance priority

* @param workerGroup worker group

* @return create result code

*/

@Override

@Transactional(rollbackFor = RuntimeException.class)

public Map<String, Object> insertSchedule(User loginUser,

long projectCode,

long processDefineCode,

String schedule,

WarningType warningType,

int warningGroupId,

FailureStrategy failureStrategy,

Priority processInstancePriority,

String workerGroup) {

Map<String, Object> result = new HashMap<>();

Project project = projectMapper.queryByCode(projectCode);

// check project auth

boolean hasProjectAndPerm = projectService.hasProjectAndPerm(loginUser, project, result);

if (!hasProjectAndPerm) {

return result;

}

// check work flow define release state

ProcessDefinition processDefinition = processDefinitionMapper.queryByCode(processDefineCode);

result = executorService.checkProcessDefinitionValid(processDefinition, processDefineCode);

if (result.get(Constants.STATUS) != Status.SUCCESS) {

return result;

}

Schedule scheduleObj = new Schedule();

Date now = new Date();

scheduleObj.setProjectName(project.getName());

scheduleObj.setProcessDefinitionId(processDefinition.getId());

scheduleObj.setProcessDefinitionName(processDefinition.getName());

ScheduleParam scheduleParam = JSONUtils.parseObject(schedule, ScheduleParam.class);

if (DateUtils.differSec(scheduleParam.getStartTime(), scheduleParam.getEndTime()) == 0) {

logger.warn("The start time must not be the same as the end");

putMsg(result, Status.SCHEDULE_START_TIME_END_TIME_SAME);

return result;

}

scheduleObj.setStartTime(scheduleParam.getStartTime());

scheduleObj.setEndTime(scheduleParam.getEndTime());

if (!CronExpression.isValidExpression(scheduleParam.getCrontab())) {

logger.error("{} verify failure", scheduleParam.getCrontab());

putMsg(result, Status.REQUEST_PARAMS_NOT_VALID_ERROR, scheduleParam.getCrontab());

return result;

}

scheduleObj.setCrontab(scheduleParam.getCrontab());

scheduleObj.setTimezoneId(scheduleParam.getTimezoneId());

scheduleObj.setWarningType(warningType);

scheduleObj.setWarningGroupId(warningGroupId);

scheduleObj.setFailureStrategy(failureStrategy);

scheduleObj.setCreateTime(now);

scheduleObj.setUpdateTime(now);

scheduleObj.setUserId(loginUser.getId());

scheduleObj.setUserName(loginUser.getUserName());

scheduleObj.setReleaseState(ReleaseState.OFFLINE);

scheduleObj.setProcessInstancePriority(processInstancePriority);

scheduleObj.setWorkerGroup(workerGroup);

scheduleMapper.insert(scheduleObj);

/**

* update receivers and cc by process definition id

*/

processDefinition.setWarningGroupId(warningGroupId);

processDefinitionMapper.updateById(processDefinition);

// return scheduler object with ID

result.put(Constants.DATA_LIST, scheduleMapper.selectById(scheduleObj.getId()));

putMsg(result, Status.SUCCESS);

result.put("scheduleId", scheduleObj.getId());

return result;

}

/**

* update schedule

*

* @param loginUser login user

* @param projectCode project code

* @param id scheduler id

* @param scheduleExpression scheduler

* @param warningType warning type

* @param warningGroupId warning group id

* @param failureStrategy failure strategy

* @param workerGroup worker group

* @param processInstancePriority process instance priority

* @return update result code

*/

@Override

@Transactional(rollbackFor = RuntimeException.class)

public Map<String, Object> updateSchedule(User loginUser,

long projectCode,

Integer id,

String scheduleExpression,

WarningType warningType,

int warningGroupId,

FailureStrategy failureStrategy,

Priority processInstancePriority,

String workerGroup) {

Map<String, Object> result = new HashMap<>();

Project project = projectMapper.queryByCode(projectCode);

// check project auth

boolean hasProjectAndPerm = projectService.hasProjectAndPerm(loginUser, project, result);

if (!hasProjectAndPerm) {

return result;

}

// check schedule exists

Schedule schedule = scheduleMapper.selectById(id);

if (schedule == null) {

putMsg(result, Status.SCHEDULE_CRON_NOT_EXISTS, id);

return result;

}

ProcessDefinition processDefinition = processService.findProcessDefineById(schedule.getProcessDefinitionId());

if (processDefinition == null) {

putMsg(result, Status.PROCESS_DEFINE_NOT_EXIST, schedule.getProcessDefinitionId());

return result;

}

/**

* scheduling on-line status forbid modification

*/

if (checkValid(result, schedule.getReleaseState() == ReleaseState.ONLINE, Status.SCHEDULE_CRON_ONLINE_FORBID_UPDATE)) {

return result;

}

Date now = new Date();

// update schedule parameters

if (StringUtils.isNotEmpty(scheduleExpression)) {

ScheduleParam scheduleParam = JSONUtils.parseObject(scheduleExpression, ScheduleParam.class);

if (DateUtils.differSec(scheduleParam.getStartTime(), scheduleParam.getEndTime()) == 0) {

logger.warn("The start time must not be the same as the end");

putMsg(result, Status.SCHEDULE_START_TIME_END_TIME_SAME);

return result;

}

schedule.setStartTime(scheduleParam.getStartTime());

schedule.setEndTime(scheduleParam.getEndTime());

if (!CronExpression.isValidExpression(scheduleParam.getCrontab())) {

putMsg(result, Status.SCHEDULE_CRON_CHECK_FAILED, scheduleParam.getCrontab());

return result;

}

schedule.setCrontab(scheduleParam.getCrontab());

schedule.setTimezoneId(scheduleParam.getTimezoneId());

}

if (warningType != null) {

schedule.setWarningType(warningType);

}

schedule.setWarningGroupId(warningGroupId);

if (failureStrategy != null) {

schedule.setFailureStrategy(failureStrategy);

}

schedule.setWorkerGroup(workerGroup);

schedule.setUpdateTime(now);

schedule.setProcessInstancePriority(processInstancePriority);

scheduleMapper.updateById(schedule);

/**

* update recipients and cc by process definition id

*/

processDefinition.setWarningGroupId(warningGroupId);

processDefinitionMapper.updateById(processDefinition);

putMsg(result, Status.SUCCESS);

return result;

}

/**

* set schedule online or offline

*

* @param loginUser login user

* @param projectName project name

* @param id scheduler id

* @param scheduleStatus schedule status

* @return publish result code

*/

@Override

@Transactional(rollbackFor = RuntimeException.class)

public Map<String, Object> setScheduleState(User loginUser,

String projectName,

Integer id,

ReleaseState scheduleStatus) {

Map<String, Object> result = new HashMap<>();

Project project = projectMapper.queryByName(projectName);

// check project auth

boolean hasProjectAndPerm = projectService.hasProjectAndPerm(loginUser, project, result);

if (!hasProjectAndPerm) {

return result;

}

// check schedule exists

Schedule scheduleObj = scheduleMapper.selectById(id);

if (scheduleObj == null) {

putMsg(result, Status.SCHEDULE_CRON_NOT_EXISTS, id);

return result;

}

// check schedule release state

if (scheduleObj.getReleaseState() == scheduleStatus) {

logger.info("schedule release state is already {}, no need to change schedule id: {} from {} to {}",

scheduleObj.getReleaseState(), scheduleObj.getId(), scheduleObj.getReleaseState(), scheduleStatus);

putMsg(result, Status.SCHEDULE_CRON_REALEASE_NEED_NOT_CHANGE, scheduleStatus);

return result;

}

ProcessDefinition processDefinition = processService.findProcessDefineById(scheduleObj.getProcessDefinitionId());

if (processDefinition == null) {

putMsg(result, Status.PROCESS_DEFINE_NOT_EXIST, scheduleObj.getProcessDefinitionId());

return result;

}

if (scheduleStatus == ReleaseState.ONLINE) {

// check process definition release state

if (processDefinition.getReleaseState() != ReleaseState.ONLINE) {

logger.info("not release process definition id: {} , name : {}",

processDefinition.getId(), processDefinition.getName());

putMsg(result, Status.PROCESS_DEFINE_NOT_RELEASE, processDefinition.getName());

return result;

}

// check sub process definition release state

List<Integer> subProcessDefineIds = new ArrayList<>();

processService.recurseFindSubProcessId(scheduleObj.getProcessDefinitionId(), subProcessDefineIds);

Integer[] idArray = subProcessDefineIds.toArray(new Integer[subProcessDefineIds.size()]);

if (!subProcessDefineIds.isEmpty()) {

List<ProcessDefinition> subProcessDefinitionList =

processDefinitionMapper.queryDefinitionListByIdList(idArray);

if (subProcessDefinitionList != null && !subProcessDefinitionList.isEmpty()) {

for (ProcessDefinition subProcessDefinition : subProcessDefinitionList) {

/**

* if a sub process definition is not online, exit directly

*/

if (subProcessDefinition.getReleaseState() != ReleaseState.ONLINE) {

logger.info("sub process definition is not released, id: {}, name: {}",

subProcessDefinition.getId(), subProcessDefinition.getName());

putMsg(result, Status.PROCESS_DEFINE_NOT_RELEASE, subProcessDefinition.getId());

return result;

}

}

}

}

}

// check master server exists

List<Server> masterServers = monitorService.getServerListFromRegistry(true);

if (masterServers.isEmpty()) {

putMsg(result, Status.MASTER_NOT_EXISTS);

return result;

}

// set status

scheduleObj.setReleaseState(scheduleStatus);

scheduleMapper.updateById(scheduleObj);

try {

switch (scheduleStatus) {

case ONLINE:

logger.info("Call master client set schedule online, project id: {}, flow id: {},host: {}", project.getId(), processDefinition.getId(), masterServers);

setSchedule(project.getId(), scheduleObj);

break;

case OFFLINE:

logger.info("Call master client set schedule offline, project id: {}, flow id: {},host: {}", project.getId(), processDefinition.getId(), masterServers);

deleteSchedule(project.getId(), id);

break;

default:

putMsg(result, Status.SCHEDULE_STATUS_UNKNOWN, scheduleStatus.toString());

return result;

}

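} catch (Exception e) {

// log the underlying cause before it is replaced by the generic ServiceException below

logger.error("set schedule {} failure, schedule id: {}", scheduleStatus, id, e);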

result.put(Constants.MSG, scheduleStatus == ReleaseState.ONLINE ? "set online failure" : "set offline failure");

throw new ServiceException(result.get(Constants.MSG).toString());

}

putMsg(result, Status.SUCCESS);

return result;

}

/**

* query the schedules of a process definition, paginated

*

* @param loginUser login user

* @param projectName project name

* @param processDefineId process definition id

* @param searchVal search value

* @param pageNo page number

* @param pageSize page size

* @return schedule list page

*/

@Override

public Map<String, Object> querySchedule(User loginUser, String projectName, Integer processDefineId, String searchVal, Integer pageNo, Integer pageSize) {

Map<String, Object> result = new HashMap<>();

Project project = projectMapper.queryByName(projectName);

// check project auth

boolean hasProjectAndPerm = projectService.hasProjectAndPerm(loginUser, project, result);

if (!hasProjectAndPerm) {

return result;

}

ProcessDefinition processDefinition = processService.findProcessDefineById(processDefineId);

if (processDefinition == null) {

putMsg(result, Status.PROCESS_DEFINE_NOT_EXIST, processDefineId);

return result;

}

Page<Schedule> page = new Page<>(pageNo, pageSize);

IPage<Schedule> scheduleIPage = scheduleMapper.queryByProcessDefineIdPaging(

page, processDefineId, searchVal

);

PageInfo<Schedule> pageInfo = new PageInfo<>(pageNo, pageSize);

pageInfo.setTotalCount((int) scheduleIPage.getTotal());

pageInfo.setLists(scheduleIPage.getRecords());

result.put(Constants.DATA_LIST, pageInfo);

putMsg(result, Status.SUCCESS);

return result;

}

/**

* query all schedules of a project

*

* @param loginUser login user

* @param projectName project name

* @return schedule list

*/

@Override

public Map<String, Object> queryScheduleList(User loginUser, String projectName) {

Map<String, Object> result = new HashMap<>();

Project project = projectMapper.queryByName(projectName);

// check project auth

boolean hasProjectAndPerm = projectService.hasProjectAndPerm(loginUser, project, result);

if (!hasProjectAndPerm) {

return result;

}

List<Schedule> schedules = scheduleMapper.querySchedulerListByProjectName(projectName);

result.put(Constants.DATA_LIST, schedules);

putMsg(result, Status.SUCCESS);

return result;

}

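/**

* set schedule: register the schedule as a Quartz job

*

* @param projectId project id

* @param schedule schedule to register

*/

public void setSchedule(int projectId, Schedule schedule) {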

logger.info("set schedule, project id: {}, scheduleId: {}", projectId, schedule.getId());

QuartzExecutors.getInstance().addJob(ProcessScheduleJob.class, projectId, schedule);

}

/**

* delete schedule: remove the Quartz job of the schedule

*

* @param projectId project id

* @param scheduleId schedule id

* @throws ServiceException if the Quartz job cannot be deleted

*/

@Override

public void deleteSchedule(int projectId, int scheduleId) {

logger.info("delete schedules of project id:{}, schedule id:{}", projectId, scheduleId);

String jobName = QuartzExecutors.buildJobName(scheduleId);

String jobGroupName = QuartzExecutors.buildJobGroupName(projectId);

if (!QuartzExecutors.getInstance().deleteJob(jobName, jobGroupName)) {

logger.warn("set offline failure:projectId:{},scheduleId:{}", projectId, scheduleId);

throw new ServiceException("set offline failure");

}

}

/**

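* record the given status in the result when the condition holds

*

* @param result result

* @param bool condition to check

* @param status status to record when the condition holds

* @return true if the condition holds and the status was recorded, false otherwise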

*/

private boolean checkValid(Map<String, Object> result, boolean bool, Status status) {

// condition holds: record the status in the result

if (bool) {

putMsg(result, status);

return true;

}

return false;

}

/**

* delete schedule by id

*

* @param loginUser login user

* @param projectName project name

* @param scheduleId schedule id

* @return delete result code

*/

@Override

public Map<String, Object> deleteScheduleById(User loginUser, String projectName, Integer scheduleId) {

Map<String, Object> result = new HashMap<>();

Project project = projectMapper.queryByName(projectName);

Map<String, Object> checkResult = projectService.checkProjectAndAuth(loginUser, project, projectName);

Status resultEnum = (Status) checkResult.get(Constants.STATUS);

if (resultEnum != Status.SUCCESS) {

return checkResult;

}

Schedule schedule = scheduleMapper.selectById(scheduleId);

if (schedule == null) {

putMsg(result, Status.SCHEDULE_CRON_NOT_EXISTS, scheduleId);