status | repo_name | repo_url | issue_id | title | body | issue_url | pull_url | before_fix_sha | after_fix_sha | report_datetime | language | commit_datetime | updated_file | file_content |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 4,886 | [Bug][Docker] Cannot create container for service dolphinscheduler-worker: invalid volume specification in Windows | **For better global communication, Please describe it in English. If you feel the description in English is not clear, then you can append description in Chinese(just for Mandarin(CN)), thx! **

**Describe the bug**

A clear and concise description of what the bug is.

**To Reproduce**

Steps to reproduce the behavior, for example:

1. Run `docker-compose` on Windows

2. See error: `Cannot create container for service dolphinscheduler-worker: invalid volume specification`

**Expected behavior**

Bug fixed.

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Which version of Dolphin Scheduler:**

-[1.3.x]

-[dev]

**Additional context**

Add any other context about the problem here.

**Requirement or improvement**

- Please describe about your requirements or improvement suggestions.

| https://github.com/apache/dolphinscheduler/issues/4886 | https://github.com/apache/dolphinscheduler/pull/4887 | a75793d875a9a7c3394dc8768c014e1d4efa6e7f | b8788f007b1617605e05d70c0ff33c4aa7318c4b | "2021-02-26T12:27:50Z" | java | "2021-02-27T01:45:23Z" | docker/docker-swarm/docker-stack.yml | # Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

version: "3.4"

services:

  dolphinscheduler-postgresql:
    image: bitnami/postgresql:latest
    ports:
      - 5432:5432
    environment:
      TZ: Asia/Shanghai
      POSTGRESQL_USERNAME: root
      POSTGRESQL_PASSWORD: root
      POSTGRESQL_DATABASE: dolphinscheduler
    volumes:
      - dolphinscheduler-postgresql:/bitnami/postgresql
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-zookeeper:
    image: bitnami/zookeeper:latest
    ports:
      - 2181:2181
    environment:
      TZ: Asia/Shanghai
      ALLOW_ANONYMOUS_LOGIN: "yes"
      ZOO_4LW_COMMANDS_WHITELIST: srvr,ruok,wchs,cons
    volumes:
      - dolphinscheduler-zookeeper:/bitnami/zookeeper
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-api:
    image: apache/dolphinscheduler:latest
    command: api-server
    ports:
      - 12345:12345
    environment:
      TZ: Asia/Shanghai
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
      RESOURCE_STORAGE_TYPE: HDFS
      RESOURCE_UPLOAD_PATH: /dolphinscheduler
      FS_DEFAULT_FS: file:///
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "ApiApplicationServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-alert:
    image: apache/dolphinscheduler:latest
    command: alert-server
    ports:
      - 50052:50052
    environment:
      TZ: Asia/Shanghai
      ALERT_PLUGIN_DIR: lib/plugin/alert
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "AlertServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-master:
    image: apache/dolphinscheduler:latest
    command: master-server
    ports:
      - 5678:5678
    environment:
      TZ: Asia/Shanghai
      MASTER_EXEC_THREADS: "100"
      MASTER_EXEC_TASK_NUM: "20"
      MASTER_HEARTBEAT_INTERVAL: "10"
      MASTER_TASK_COMMIT_RETRYTIMES: "5"
      MASTER_TASK_COMMIT_INTERVAL: "1000"
      MASTER_MAX_CPULOAD_AVG: "100"
      MASTER_RESERVED_MEMORY: "0.1"
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "MasterServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-worker:
    image: apache/dolphinscheduler:latest
    command: worker-server
    ports:
      - 1234:1234
      - 50051:50051
    environment:
      TZ: Asia/Shanghai
      WORKER_EXEC_THREADS: "100"
      WORKER_HEARTBEAT_INTERVAL: "10"
      WORKER_MAX_CPULOAD_AVG: "100"
      WORKER_RESERVED_MEMORY: "0.1"
      WORKER_GROUPS: "default"
      WORKER_WEIGHT: "100"
      DOLPHINSCHEDULER_DATA_BASEDIR_PATH: /tmp/dolphinscheduler
      ALERT_LISTEN_HOST: dolphinscheduler-alert
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
      RESOURCE_STORAGE_TYPE: HDFS
      RESOURCE_UPLOAD_PATH: /dolphinscheduler
      FS_DEFAULT_FS: file:///
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "WorkerServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    configs:
      - source: dolphinscheduler-worker-task-env
        target: /opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh
    volumes:
      - dolphinscheduler-worker-data:/tmp/dolphinscheduler
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

networks:
  dolphinscheduler:
    driver: overlay

volumes:
  dolphinscheduler-postgresql:
  dolphinscheduler-zookeeper:
  dolphinscheduler-worker-data:
  dolphinscheduler-logs:

configs:
  dolphinscheduler-worker-task-env:
    file: ./dolphinscheduler_env.sh |

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 4,886 | [Bug][Docker] Cannot create container for service dolphinscheduler-worker: invalid volume specification in Windows | **For better global communication, Please describe it in English. If you feel the description in English is not clear, then you can append description in Chinese(just for Mandarin(CN)), thx! **

**Describe the bug**

A clear and concise description of what the bug is.

**To Reproduce**

Steps to reproduce the behavior, for example:

1. Run `docker-compose` on Windows

2. See error: `Cannot create container for service dolphinscheduler-worker: invalid volume specification`

**Expected behavior**

Bug fixed.

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Which version of Dolphin Scheduler:**

-[1.3.x]

-[dev]

**Additional context**

Add any other context about the problem here.

**Requirement or improvement**

- Please describe about your requirements or improvement suggestions.

| https://github.com/apache/dolphinscheduler/issues/4886 | https://github.com/apache/dolphinscheduler/pull/4887 | a75793d875a9a7c3394dc8768c014e1d4efa6e7f | b8788f007b1617605e05d70c0ff33c4aa7318c4b | "2021-02-26T12:27:50Z" | java | "2021-02-27T01:45:23Z" | docker/docker-swarm/dolphinscheduler_env.sh | #

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

export HADOOP_HOME=/opt/soft/hadoop

export HADOOP_CONF_DIR=/opt/soft/hadoop/etc/hadoop

export SPARK_HOME1=/opt/soft/spark1

export SPARK_HOME2=/opt/soft/spark2

export PYTHON_HOME=/usr/bin/python

export JAVA_HOME=/usr/lib/jvm/java-1.8-openjdk

export HIVE_HOME=/opt/soft/hive

export FLINK_HOME=/opt/soft/flink

export DATAX_HOME=/opt/soft/datax/bin/datax.py

export PATH=$HADOOP_HOME/bin:$SPARK_HOME1/bin:$SPARK_HOME2/bin:$PYTHON_HOME:$JAVA_HOME/bin:$HIVE_HOME/bin:$PATH:$FLINK_HOME/bin:$DATAX_HOME:$PATH

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 4,893 | [Improvement][READM.md] update the latest url for `QuickStart in Docker` | please update the following url

```

Please referer the official website document:[[QuickStart in Docker](https://dolphinscheduler.apache.org/en-us/docs/1.3.4/user_doc/docker-deployment.html)]

```

with new 1.3.5 version :

https://dolphinscheduler.apache.org/en-us/docs/1.3.5/user_doc/docker-deployment.html

| https://github.com/apache/dolphinscheduler/issues/4893 | https://github.com/apache/dolphinscheduler/pull/4895 | b8788f007b1617605e05d70c0ff33c4aa7318c4b | d170b92dc6ab5deda8ab88d5eede1e8d642ee158 | "2021-02-27T01:36:39Z" | java | "2021-02-27T06:46:18Z" | README.md | Dolphin Scheduler Official Website

[dolphinscheduler.apache.org](https://dolphinscheduler.apache.org)

============

[](https://www.apache.org/licenses/LICENSE-2.0.html)

[](https://github.com/apache/Incubator-DolphinScheduler)

[](https://codecov.io/gh/apache/incubator-dolphinscheduler/branch/dev)

[](https://sonarcloud.io/dashboard?id=apache-dolphinscheduler)

[](https://starchart.cc/apache/incubator-dolphinscheduler)

[](README.md)

[](README_zh_CN.md)

### Design Features:

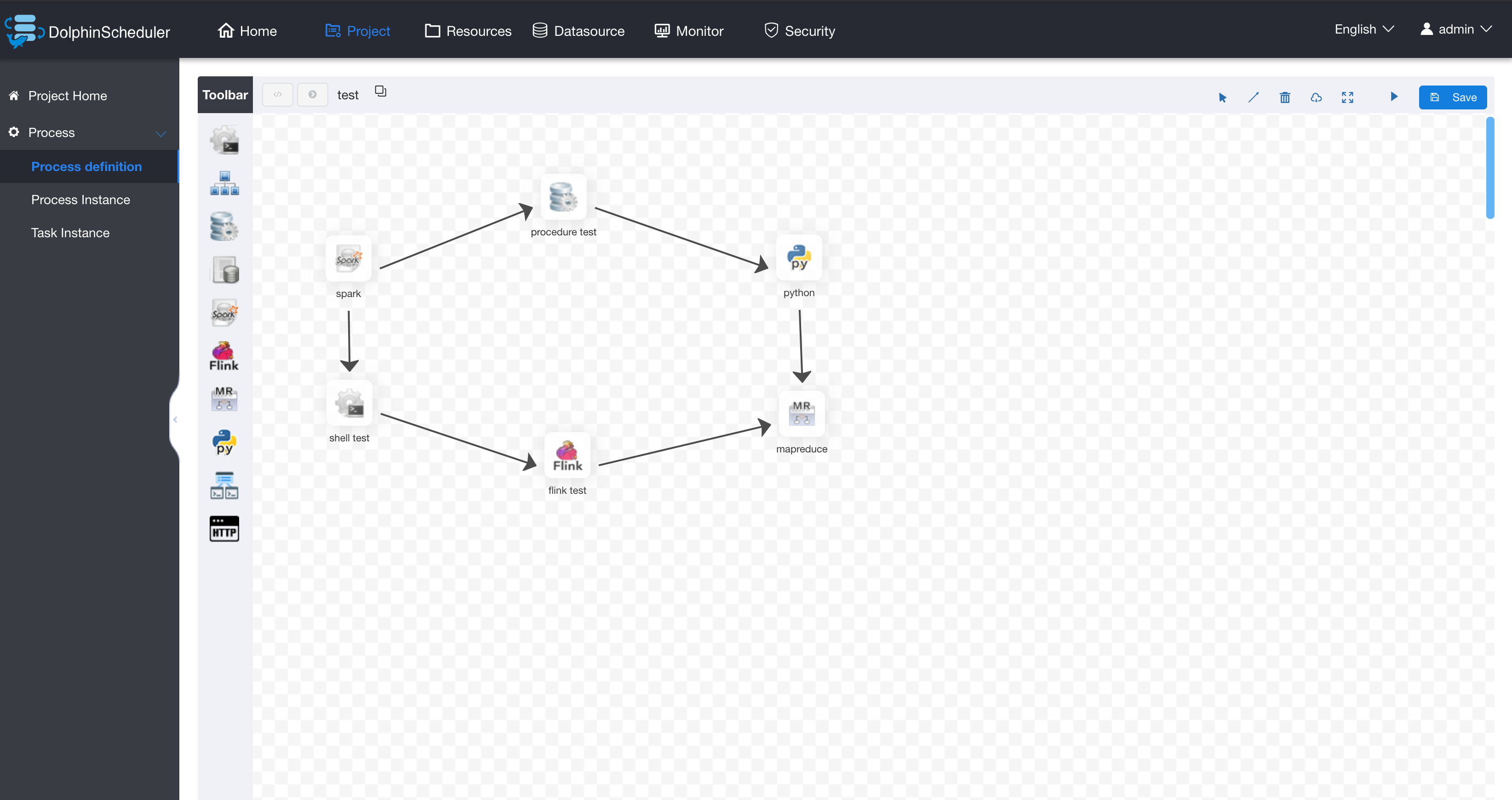

DolphinScheduler is a distributed and extensible workflow scheduler platform with powerful DAG visual interfaces, dedicated to solving complex job dependencies in the data pipeline and providing various types of jobs available `out of the box`.

Its main objectives are as follows:

- Associate the tasks according to the dependencies of the tasks in a DAG graph, which can visualize the running state of the task in real-time.

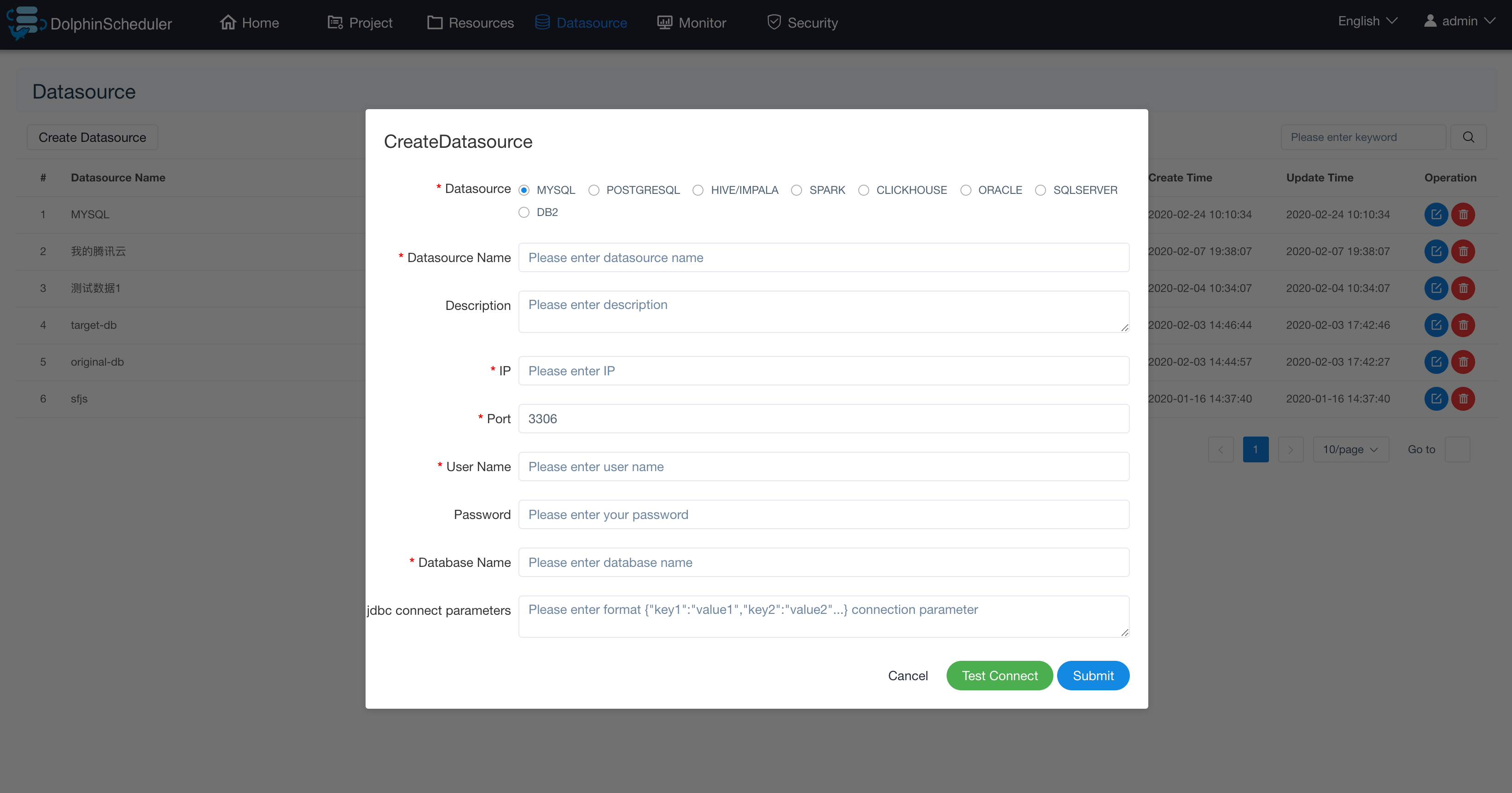

- Support various task types: Shell, MR, Spark, SQL (MySQL, PostgreSQL, hive, spark SQL), Python, Sub_Process, Procedure, etc.

- Support scheduling of workflows and dependencies, manual scheduling to pause/stop/recover task, support failure task retry/alarm, recover specified nodes from failure, kill task, etc.

- Support the priority of workflows & tasks, task failover, and task timeout alarm or failure.

- Support workflow global parameters and node customized parameter settings.

- Support online upload/download/management of resource files, etc. Support online file creation and editing.

- Support task log online viewing and scrolling and downloading, etc.

- Implement cluster HA, with decentralized Master and Worker clusters coordinated through Zookeeper.

- Support the viewing of Master/Worker CPU load, memory, and CPU usage metrics.

- Support displaying workflow history in tree/Gantt chart, as well as statistical analysis on the task status & process status in each workflow.

- Support back-filling data.

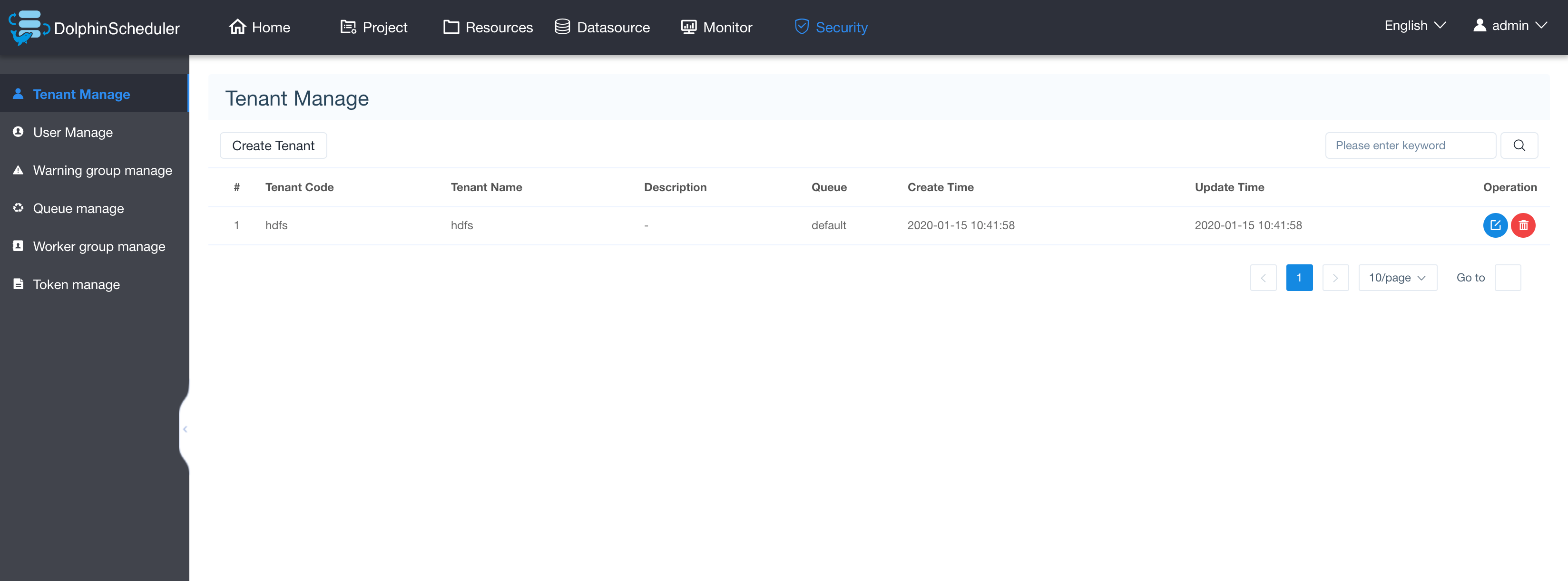

- Support multi-tenant.

- Support internationalization.

- More features waiting for partners to explore...

### What's in DolphinScheduler

Stability | Accessibility | Features | Scalability |

-- | -- | -- | --

Decentralized multi-master and multi-worker | Visualization of workflow key information, such as task status, task type, retry times, task operation machine information, visual variables, and so on at a glance. | Support pause, recover operation | Support customized task types

support HA | Visualization of all workflow operations, dragging tasks to draw DAGs, configuring data sources and resources. At the same time, for third-party systems, provide API mode operations. | Users on DolphinScheduler can achieve many-to-one or one-to-one mapping relationship through tenants and Hadoop users, which is very important for scheduling large data jobs. | The scheduler supports distributed scheduling, and the overall scheduling capability will increase linearly with the scale of the cluster. Master and Worker support dynamic adjustment.

Overload processing: By using the task queue mechanism, the number of schedulable tasks on a single machine can be flexibly configured. Machine jam can be avoided with high tolerance to numbers of tasks cached in task queue. | One-click deployment | Support traditional shell tasks, and big data platform task scheduling: MR, Spark, SQL (MySQL, PostgreSQL, hive, spark SQL), Python, Procedure, Sub_Process | |

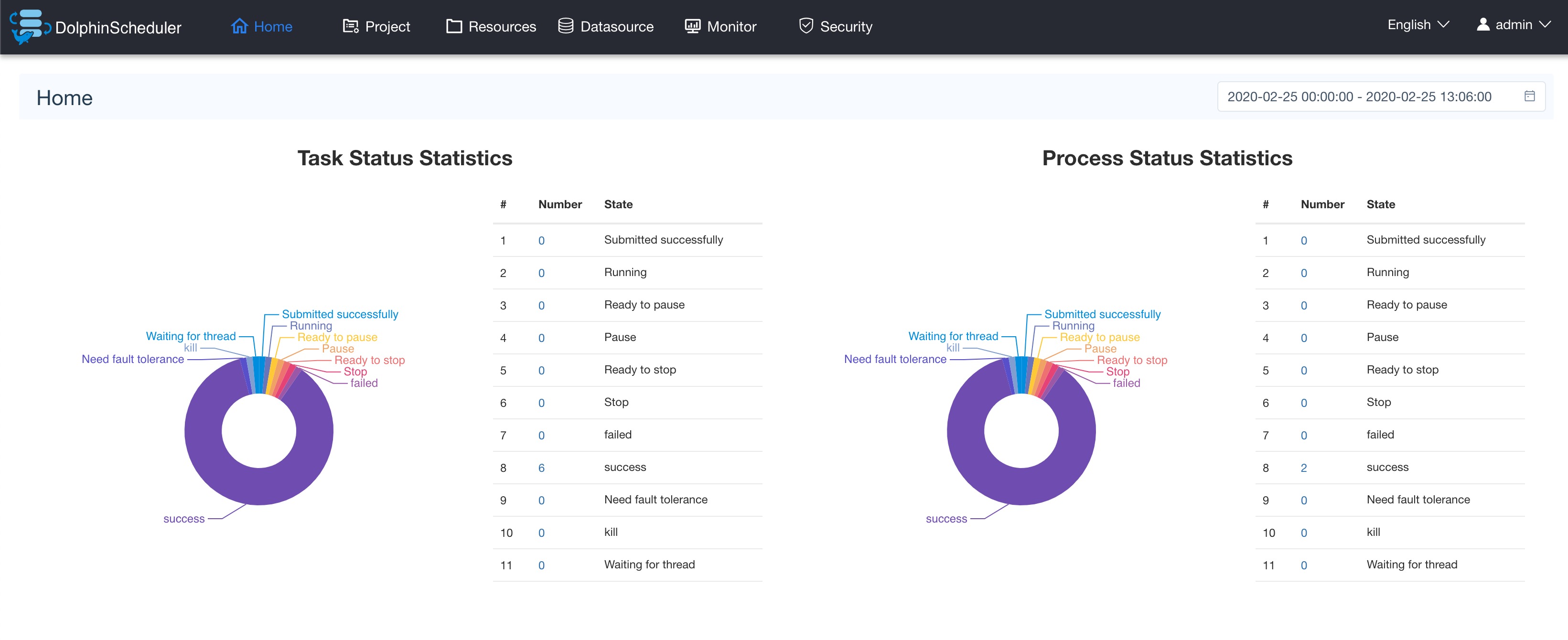

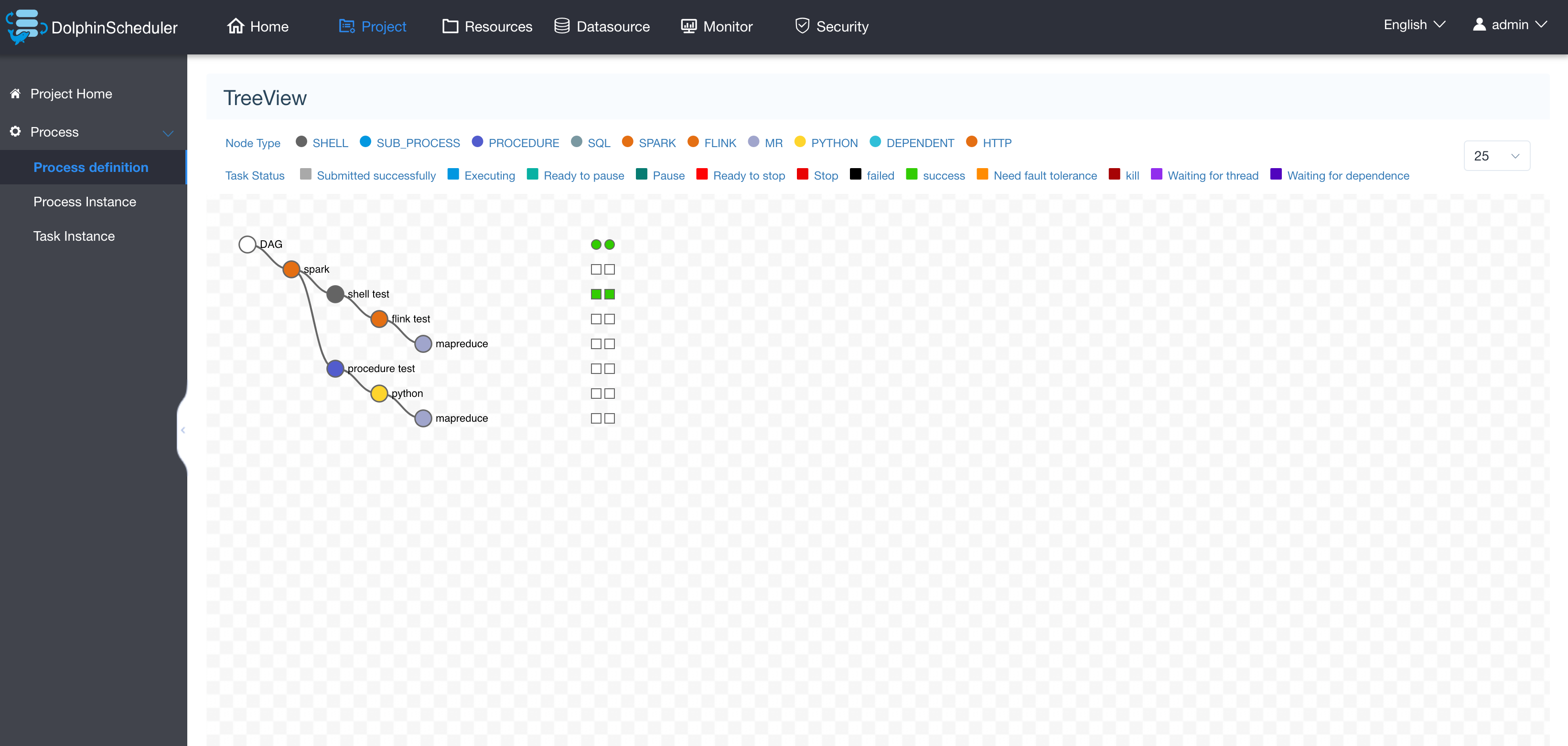

### User Interface Screenshots

### QuickStart in Docker

Please refer to the official website document: [[QuickStart in Docker](https://dolphinscheduler.apache.org/en-us/docs/1.3.4/user_doc/docker-deployment.html)]

### How to Build

```bash

./mvnw clean install -Prelease

```

Artifact:

```

dolphinscheduler-dist/target/apache-dolphinscheduler-incubating-${latest.release.version}-dolphinscheduler-bin.tar.gz: Binary package of DolphinScheduler

dolphinscheduler-dist/target/apache-dolphinscheduler-incubating-${latest.release.version}-src.zip: Source code package of DolphinScheduler

```

### Thanks

DolphinScheduler is based on a lot of excellent open-source projects, such as google guava, guice, grpc, netty, ali bonecp, quartz, and many open-source projects of Apache and so on.

We would like to express our deep gratitude to all the open-source projects used in Dolphin Scheduler. We hope not only to benefit from open source but also to give back to the community. We also hope that everyone who shares the same enthusiasm and passion for open source will join in and contribute to the open-source community!

### Get Help

1. Submit an [[issue](https://github.com/apache/incubator-dolphinscheduler/issues/new/choose)]

1. Subscribe to this mail list: https://dolphinscheduler.apache.org/en-us/community/development/subscribe.html, then email dev@dolphinscheduler.apache.org

### Community

You are very welcome to communicate freely with the developers and users of Dolphin Scheduler. There are two ways to find them:

1. Join the slack channel by [this invitation link](https://join.slack.com/t/asf-dolphinscheduler/shared_invite/zt-mzqu52gi-rCggPkSHQ0DZYkwbTxO1Gw).

2. Follow the [twitter account of Dolphin Scheduler](https://twitter.com/dolphinschedule) and get the latest news just on time.

### How to Contribute

The community welcomes everyone to participate in contributing, please refer to this website to find out more: [[How to contribute](https://dolphinscheduler.apache.org/en-us/community/development/contribute.html)]

### License

Please refer to the [LICENSE](https://github.com/apache/incubator-dolphinscheduler/blob/dev/LICENSE) file.

|

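closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 2,584 | [Feature] Suggest making MySQL the default database for k8s deployment to lower the barrier to entry | Suggest making MySQL the default database for k8s deployment, to lower the barrier to entry.

With the growing popularity of NewSQL databases such as Tidb, NewSQL will become the database of choice for technology middle platforms, and Tidb, as a very popular project, is the first choice when building one. Tidb is compatible with the MySQL protocol rather than the pg protocol, so storing the incubator-dolphinscheduler data directly on Tidb is a very good fit | https://github.com/apache/dolphinscheduler/issues/2584 | https://github.com/apache/dolphinscheduler/pull/4875 | 139211f3ddf9e30fb058c659fd467b18a95d0dbe | bfc5d1e4ba08cc19059bb40293bb0777b3d42377 | "2020-04-30T02:53:24Z" | java | "2021-02-27T14:44:55Z" | docker/build/README.md | ## What is Dolphin Scheduler?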

Dolphin Scheduler is a distributed and easy-to-expand visual DAG workflow scheduling system, dedicated to resolving complex dependencies in data processing so that the scheduling system works out of the box.

GitHub URL: https://github.com/apache/incubator-dolphinscheduler

Official Website: https://dolphinscheduler.apache.org

[](README.md)

[](README_zh_CN.md)

## How to use this docker image

#### You can start a dolphinscheduler by docker-compose (recommended)

```

$ docker-compose -f ./docker/docker-swarm/docker-compose.yml up -d

```

The default **postgres** user `root`, postgres password `root` and database `dolphinscheduler` are created in the `docker-compose.yml`.

The default **zookeeper** is created in the `docker-compose.yml`.

Access the Web UI: http://192.168.xx.xx:12345/dolphinscheduler

The default username is `admin` and the default password is `dolphinscheduler123`

#### Or via Environment Variables **`DATABASE_HOST`** **`DATABASE_PORT`** **`DATABASE_DATABASE`** **`ZOOKEEPER_QUORUM`**

You can specify **existing postgres and zookeeper services**. For example:

```

$ docker run -d --name dolphinscheduler \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

-p 12345:12345 \

apache/dolphinscheduler:latest all

```

Access the Web UI: http://192.168.xx.xx:12345/dolphinscheduler

#### Or start a standalone dolphinscheduler server

You can start a standalone dolphinscheduler server.

* Create a **local volume** for resource storage, for example:

```

docker volume create dolphinscheduler-resource-local

```

* Start a **master server**, for example:

```

$ docker run -d --name dolphinscheduler-master \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

apache/dolphinscheduler:latest master-server

```

* Start a **worker server**, for example:

```

$ docker run -d --name dolphinscheduler-worker \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

-e ALERT_LISTEN_HOST="dolphinscheduler-alert" \

-v dolphinscheduler-resource-local:/dolphinscheduler \

apache/dolphinscheduler:latest worker-server

```

* Start an **api server**, for example:

```

$ docker run -d --name dolphinscheduler-api \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

-v dolphinscheduler-resource-local:/dolphinscheduler \

-p 12345:12345 \

apache/dolphinscheduler:latest api-server

```

* Start an **alert server**, for example:

```

$ docker run -d --name dolphinscheduler-alert \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

apache/dolphinscheduler:latest alert-server

```

**Note**: You must specify `DATABASE_HOST`, `DATABASE_PORT`, `DATABASE_DATABASE`, `DATABASE_USERNAME`, `DATABASE_PASSWORD`, and `ZOOKEEPER_QUORUM` when starting a standalone dolphinscheduler server.
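
The requirement in the note above can be checked before a container is launched. The following is a minimal sketch and is not part of the image: the function name `missing_required_env` is hypothetical, and the variable list is simply copied from the note.

```shell
# Hypothetical helper (not shipped with the image): print the name of every
# required variable that is unset or empty, one per line.
missing_required_env() {
    for name in DATABASE_HOST DATABASE_PORT DATABASE_DATABASE \
                DATABASE_USERNAME DATABASE_PASSWORD ZOOKEEPER_QUORUM; do
        # POSIX-compatible indirect lookup of the variable called $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            printf '%s\n' "$name"
        fi
    done
}
```

A wrapper script could call `missing_required_env` and refuse to run `docker run` when the output is not empty.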

## How to build a docker image

You can build a docker image on a Unix-like operating system or on Windows.

On a Unix-like system, for example:

```bash

$ cd path/incubator-dolphinscheduler

$ sh ./docker/build/hooks/build

```

On Windows, for example:

```bat

C:\incubator-dolphinscheduler>.\docker\build\hooks\build.bat

```

Please read the `./docker/build/hooks/build` and `./docker/build/hooks/build.bat` script files if any build step is unclear.

## Environment Variables

The Dolphin Scheduler image uses several environment variables which are easy to miss. While none of the variables are required, they may significantly aid you in using the image.

**`DATABASE_TYPE`**

This environment variable sets the type of the database. The default value is `postgresql`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DATABASE_DRIVER`**

This environment variable sets the driver for the database. The default value is `org.postgresql.Driver`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DATABASE_HOST`**

This environment variable sets the host of the database. The default value is `127.0.0.1`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DATABASE_PORT`**

This environment variable sets the port of the database. The default value is `5432`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DATABASE_USERNAME`**

This environment variable sets the username for the database. The default value is `root`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DATABASE_PASSWORD`**

This environment variable sets the password for the database. The default value is `root`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DATABASE_DATABASE`**

This environment variable sets the name of the database. The default value is `dolphinscheduler`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DATABASE_PARAMS`**

This environment variable sets the connection parameters for the database. The default value is `characterEncoding=utf8`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server`, `worker-server`, `api-server`, or `alert-server`.

**`DOLPHINSCHEDULER_ENV_PATH`**

This environment variable sets the runtime environment for task. The default value is `/opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh`.

**`DOLPHINSCHEDULER_DATA_BASEDIR_PATH`**

This environment variable sets the user data directory path. Make sure the directory exists and is readable and writable. The default value is `/tmp/dolphinscheduler`.

**`DOLPHINSCHEDULER_OPTS`**

This environment variable sets java options. The default value is empty.

**`RESOURCE_STORAGE_TYPE`**

This environment variable sets resource storage type for dolphinscheduler like `HDFS`, `S3`, `NONE`. The default value is `HDFS`.

**`RESOURCE_UPLOAD_PATH`**

This environment variable sets resource store path on HDFS/S3 for resource storage. The default value is `/dolphinscheduler`.

**`FS_DEFAULT_FS`**

This environment variable sets fs.defaultFS for resource storage like `file:///`, `hdfs://mycluster:8020` or `s3a://dolphinscheduler`. The default value is `file:///`.

**`FS_S3A_ENDPOINT`**

This environment variable sets s3 endpoint for resource storage. The default value is `s3.xxx.amazonaws.com`.

**`FS_S3A_ACCESS_KEY`**

This environment variable sets s3 access key for resource storage. The default value is `xxxxxxx`.

**`FS_S3A_SECRET_KEY`**

This environment variable sets s3 secret key for resource storage. The default value is `xxxxxxx`.

**`ZOOKEEPER_QUORUM`**

This environment variable sets zookeeper quorum for `master-server` and `worker-server`. The default value is `127.0.0.1:2181`.

**Note**: You must specify it when starting a standalone dolphinscheduler server, such as `master-server` or `worker-server`.

**`ZOOKEEPER_ROOT`**

This environment variable sets zookeeper root directory for dolphinscheduler. The default value is `/dolphinscheduler`.

**`MASTER_EXEC_THREADS`**

This environment variable sets exec thread num for `master-server`. The default value is `100`.

**`MASTER_EXEC_TASK_NUM`**

This environment variable sets exec task num for `master-server`. The default value is `20`.

**`MASTER_HEARTBEAT_INTERVAL`**

This environment variable sets heartbeat interval for `master-server`. The default value is `10`.

**`MASTER_TASK_COMMIT_RETRYTIMES`**

This environment variable sets task commit retry times for `master-server`. The default value is `5`.

**`MASTER_TASK_COMMIT_INTERVAL`**

This environment variable sets task commit interval for `master-server`. The default value is `1000`.

**`MASTER_MAX_CPULOAD_AVG`**

This environment variable sets max cpu load avg for `master-server`. The default value is `100`.

**`MASTER_RESERVED_MEMORY`**

This environment variable sets reserved memory for `master-server`. The default value is `0.1`.

**`MASTER_LISTEN_PORT`**

This environment variable sets port for `master-server`. The default value is `5678`.

**`WORKER_EXEC_THREADS`**

This environment variable sets exec thread num for `worker-server`. The default value is `100`.

**`WORKER_HEARTBEAT_INTERVAL`**

This environment variable sets heartbeat interval for `worker-server`. The default value is `10`.

**`WORKER_MAX_CPULOAD_AVG`**

This environment variable sets max cpu load avg for `worker-server`. The default value is `100`.

**`WORKER_RESERVED_MEMORY`**

This environment variable sets reserved memory for `worker-server`. The default value is `0.1`.

**`WORKER_LISTEN_PORT`**

This environment variable sets port for `worker-server`. The default value is `1234`.

**`WORKER_GROUPS`**

This environment variable sets group for `worker-server`. The default value is `default`.

**`WORKER_WEIGHT`**

This environment variable sets weight for `worker-server`. The default value is `100`.

**`ALERT_LISTEN_HOST`**

This environment variable sets the host of `alert-server` for `worker-server`. The default value is `127.0.0.1`.

**`ALERT_PLUGIN_DIR`**

This environment variable sets the alert plugin directory for `alert-server`. The default value is `lib/plugin/alert`.
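
A default in the list above is the value used when the variable is not supplied. As an illustration only (this is not the image's actual startup script), shell parameter expansion expresses that fallback directly. The function name `apply_defaults` is hypothetical, and the defaults are copied from the descriptions above.

```shell
# Hypothetical sketch: fall back to the documented default when a variable
# is unset or empty. A value passed with `docker run -e` takes precedence.
apply_defaults() {
    export DATABASE_TYPE="${DATABASE_TYPE:-postgresql}"
    export DATABASE_HOST="${DATABASE_HOST:-127.0.0.1}"
    export DATABASE_PORT="${DATABASE_PORT:-5432}"
    export ZOOKEEPER_QUORUM="${ZOOKEEPER_QUORUM:-127.0.0.1:2181}"
    export MASTER_LISTEN_PORT="${MASTER_LISTEN_PORT:-5678}"
    export WORKER_LISTEN_PORT="${WORKER_LISTEN_PORT:-1234}"
}
```

Because `:-` also treats an empty string as missing, passing `-e DATABASE_HOST=""` would still yield `127.0.0.1` in this sketch.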

## Initialization scripts

If you would like to do additional initialization in an image derived from this one, add one or more environment variables to `/root/start-init-conf.sh`, and modify the template files in `/opt/dolphinscheduler/conf/*.tpl`.

For example, to add an environment variable `API_SERVER_PORT` in `/root/start-init-conf.sh`:

```

export API_SERVER_PORT=5555

```

Then modify the `/opt/dolphinscheduler/conf/application-api.properties.tpl` template file to add the server port:

```

server.port=${API_SERVER_PORT}

```

`/root/start-init-conf.sh` then dynamically generates the config files:

```sh

echo "generate app config"

ls ${DOLPHINSCHEDULER_HOME}/conf/ | grep ".tpl" | while read line; do

eval "cat << EOF

$(cat ${DOLPHINSCHEDULER_HOME}/conf/${line})

EOF

" > ${DOLPHINSCHEDULER_HOME}/conf/${line%.*}

done

```
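
The loop above expands `${VAR}` references by wrapping each template in an unquoted here-document and passing it through `eval`. The sketch below reproduces that technique in a throwaway directory so it can be tried outside the container. The function name `render_templates` and the temporary directory are assumptions for the demo; only the `API_SERVER_PORT` example comes from the text above.

```shell
# Hypothetical, self-contained demo of the heredoc-plus-eval rendering.
# Only use this on trusted templates: $(...) or backticks inside a template
# would be executed by eval.
render_templates() {
    conf_dir=$1
    for tpl in "$conf_dir"/*.tpl; do
        [ -e "$tpl" ] || continue   # no templates present: nothing to do
        # Expand ${VAR} references in the template text, then write the
        # result next to the template with the .tpl suffix removed.
        eval "cat << EOF
$(cat "$tpl")
EOF
" > "${tpl%.tpl}"
    done
}

demo_dir=$(mktemp -d)
printf 'server.port=${API_SERVER_PORT}\n' > "$demo_dir/application-api.properties.tpl"
export API_SERVER_PORT=5555
render_templates "$demo_dir"
cat "$demo_dir/application-api.properties"   # prints: server.port=5555
```

The demo leaves `$demo_dir` in place so the rendered file can be inspected; remove it afterwards with `rm -rf "$demo_dir"`.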

|

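closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 2,584 | [Feature] Suggest making MySQL the default database for k8s deployment to lower the barrier to entry | Suggest making MySQL the default database for k8s deployment, to lower the barrier to entry.

With the growing popularity of NewSQL databases such as Tidb, NewSQL will become the database of choice for technology middle platforms, and Tidb, as a very popular project, is the first choice when building one. Tidb is compatible with the MySQL protocol rather than the pg protocol, so storing the incubator-dolphinscheduler data directly on Tidb is a very good fit | https://github.com/apache/dolphinscheduler/issues/2584 | https://github.com/apache/dolphinscheduler/pull/4875 | 139211f3ddf9e30fb058c659fd467b18a95d0dbe | bfc5d1e4ba08cc19059bb40293bb0777b3d42377 | "2020-04-30T02:53:24Z" | java | "2021-02-27T14:44:55Z" | docker/build/README_zh_CN.md | ## What is Dolphin Scheduler?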

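A distributed, easily extensible visual DAG workflow task scheduling system, dedicated to untangling the complex dependencies in data processing pipelines so that the scheduling system works `out of the box` for data processing.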

GitHub URL: https://github.com/apache/incubator-dolphinscheduler

Official Website: https://dolphinscheduler.apache.org

[](README.md)

[](README_zh_CN.md)

## How to use the docker image

#### Start dolphinscheduler with docker-compose (recommended)

```

$ docker-compose -f ./docker/docker-swarm/docker-compose.yml up -d

```

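The `docker-compose.yml` file creates a default `Postgres` user, password, and database, with the default values `root`, `root`, and `dolphinscheduler` respectively.

The default `Zookeeper` is also created in the `docker-compose.yml` file.

Access the Web UI: http://192.168.xx.xx:12345/dolphinscheduler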

#### Or use existing services via the environment variables **`DATABASE_HOST`** **`DATABASE_PORT`** **`ZOOKEEPER_QUORUM`**

You can specify existing **`Postgres`** and **`Zookeeper`** services. For example:

```

$ docker run -d --name dolphinscheduler \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

-p 12345:12345 \

apache/dolphinscheduler:latest all

```

Access the Web UI: http://192.168.xx.xx:12345/dolphinscheduler

#### Or run only some of the dolphinscheduler services

You can run a subset of the dolphinscheduler services.

* Create a **local volume** for resource storage, for example:

```

docker volume create dolphinscheduler-resource-local

```

* Start a **master server**, for example:

```

$ docker run -d --name dolphinscheduler-master \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

apache/dolphinscheduler:latest master-server

```

* Start a **worker server**, for example:

```

$ docker run -d --name dolphinscheduler-worker \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

-e ALERT_LISTEN_HOST="dolphinscheduler-alert" \

-v dolphinscheduler-resource-local:/dolphinscheduler \

apache/dolphinscheduler:latest worker-server

```

* Start an **api server**, for example:

```

$ docker run -d --name dolphinscheduler-api \

-e ZOOKEEPER_QUORUM="192.168.x.x:2181" \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

-v dolphinscheduler-resource-local:/dolphinscheduler \

-p 12345:12345 \

apache/dolphinscheduler:latest api-server

```

* Start an **alert server**, for example:

```

$ docker run -d --name dolphinscheduler-alert \

-e DATABASE_HOST="192.168.x.x" -e DATABASE_PORT="5432" -e DATABASE_DATABASE="dolphinscheduler" \

-e DATABASE_USERNAME="test" -e DATABASE_PASSWORD="test" \

apache/dolphinscheduler:latest alert-server

```

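**Note**: When you run only some of the dolphinscheduler services, you must specify the environment variables `DATABASE_HOST`, `DATABASE_PORT`, `DATABASE_DATABASE`, `DATABASE_USERNAME`, `DATABASE_PASSWORD`, and `ZOOKEEPER_QUORUM`.

## How to build a docker image

You can build a docker image on both Unix-like systems and Windows.

On a Unix-like system, for example: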

```bash

$ cd path/incubator-dolphinscheduler

$ sh ./docker/build/hooks/build

```

On Windows, for example:

```bat

C:\incubator-dolphinscheduler>.\docker\build\hooks\build.bat

```

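If you do not understand the `./docker/build/hooks/build` and `./docker/build/hooks/build.bat` scripts, please read their contents.

## Environment Variables

The Dolphin Scheduler image uses several environment variables that are easy to miss. None of them are required, but they make it easier to configure the image and to define the service configuration you need.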

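**`DATABASE_TYPE`**

Sets the `TYPE` of the `database`. Default: `postgresql`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`DATABASE_DRIVER`**

Sets the `DRIVER` of the `database`. Default: `org.postgresql.Driver`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`DATABASE_HOST`**

Sets the `HOST` of the `database`. Default: `127.0.0.1`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`DATABASE_PORT`**

Sets the `PORT` of the `database`. Default: `5432`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`DATABASE_USERNAME`**

Sets the `USERNAME` of the `database`. Default: `root`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`DATABASE_PASSWORD`**

Sets the `PASSWORD` of the `database`. Default: `root`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`DATABASE_DATABASE`**

Sets the `DATABASE` of the `database`. Default: `dolphinscheduler`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`DATABASE_PARAMS`**

Sets the `PARAMS` of the `database`. Default: `characterEncoding=utf8`.

**Note**: You must specify this environment variable when running the `master-server`, `worker-server`, `api-server`, or `alert-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.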

**`DOLPHINSCHEDULER_ENV_PATH`**

Path of the environment variable configuration file used when tasks run. Default: `/opt/dolphinscheduler/conf/env/dolphinscheduler_env.sh`.

**`DOLPHINSCHEDULER_DATA_BASEDIR_PATH`**

User data directory, configured by the user. Make sure the directory exists and that the user has read and write permissions on it. Default: `/tmp/dolphinscheduler`.

**`DOLPHINSCHEDULER_OPTS`**

Sets the `java options` of `dolphinscheduler`. Default: `""`.

**`RESOURCE_STORAGE_TYPE`**

Sets the resource storage type of `dolphinscheduler`. The options are `HDFS`, `S3`, and `NONE`. Default: `HDFS`.

**`RESOURCE_UPLOAD_PATH`**

Sets the resource storage path on `HDFS/S3`. Default: `/dolphinscheduler`.

**`FS_DEFAULT_FS`**

Sets the file system protocol for resource storage, such as `file:///`, `hdfs://mycluster:8020`, or `s3a://dolphinscheduler`. Default: `file:///`.

**`FS_S3A_ENDPOINT`**

When `RESOURCE_STORAGE_TYPE=S3`, sets the `S3` endpoint. Default: `s3.xxx.amazonaws.com`.

**`FS_S3A_ACCESS_KEY`**

When `RESOURCE_STORAGE_TYPE=S3`, sets the `s3 access key` of `S3`. Default: `xxxxxxx`.

**`FS_S3A_SECRET_KEY`**

When `RESOURCE_STORAGE_TYPE=S3`, sets the `s3 secret key` of `S3`. Default: `xxxxxxx`.

**`ZOOKEEPER_QUORUM`**

Sets the `Zookeeper` address for `master-server` and `worker-server`. Default: `127.0.0.1:2181`.

**Note**: You must specify this environment variable when running the `master-server` or `worker-server` service of `dolphinscheduler`, so that a distributed deployment is easier to set up.

**`ZOOKEEPER_ROOT`**

Sets the root directory in which `dolphinscheduler` stores its data in `zookeeper`. Default: `/dolphinscheduler`.

**`MASTER_EXEC_THREADS`**

Sets the number of execution threads in `master-server`. Default: `100`.

**`MASTER_EXEC_TASK_NUM`**

Sets the number of tasks executed in `master-server`. Default: `20`.

**`MASTER_HEARTBEAT_INTERVAL`**

Sets the heartbeat interval in `master-server`. Default: `10`.

**`MASTER_TASK_COMMIT_RETRYTIMES`**

Sets the number of task commit retries in `master-server`. Default: `5`.

**`MASTER_TASK_COMMIT_INTERVAL`**

Sets the task commit interval in `master-server`. Default: `1000`.

**`MASTER_MAX_CPULOAD_AVG`**

Sets the maximum CPU `load average` for `master-server`. Default: `100`.

**`MASTER_RESERVED_MEMORY`**

Sets the reserved memory of `master-server`. Default: `0.1`.

**`MASTER_LISTEN_PORT`**

Sets the port of `master-server`. Default: `5678`.

**`WORKER_EXEC_THREADS`**

Sets the number of execution threads in `worker-server`. Default: `100`.

**`WORKER_HEARTBEAT_INTERVAL`**

Sets the heartbeat interval in `worker-server`. Default: `10`.

**`WORKER_MAX_CPULOAD_AVG`**

Sets the maximum CPU `load average` for `worker-server`. Default: `100`.

**`WORKER_RESERVED_MEMORY`**

Sets the reserved memory of `worker-server`. Default: `0.1`.

**`WORKER_LISTEN_PORT`**

Sets the port of `worker-server`. Default: `1234`.

**`WORKER_GROUPS`**

Sets the groups of `worker-server`. Default: `default`.

**`WORKER_WEIGHT`**

Sets the weight of `worker-server`. Default: `100`.

**`ALERT_LISTEN_HOST`**

Sets the alert host for `worker-server`, that is, the host name of `alert-server`. Default: `127.0.0.1`.

**`ALERT_PLUGIN_DIR`**

Sets the alert plugin directory of `alert-server`. Default: `lib/plugin/alert`.

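## Initialization scripts

If you want to perform additional operations or add environment variables at build time or at run time, you can make the changes in `/root/start-init-conf.sh`. If configuration files need to change as well, modify the corresponding templates in `/opt/dolphinscheduler/conf/*.tpl`.

For example, to add an environment variable `API_SERVER_PORT` in `/root/start-init-conf.sh`: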

```

export API_SERVER_PORT=5555

```

After adding the environment variable above, add the corresponding setting to the template file `/opt/dolphinscheduler/conf/application-api.properties.tpl`:

```

server.port=${API_SERVER_PORT}

```

`/root/start-init-conf.sh` then generates the configuration files dynamically from the template files:

```sh

echo "generate app config"

ls ${DOLPHINSCHEDULER_HOME}/conf/ | grep ".tpl" | while read line; do

eval "cat << EOF

$(cat ${DOLPHINSCHEDULER_HOME}/conf/${line})

EOF

" > ${DOLPHINSCHEDULER_HOME}/conf/${line%.*}

done

```
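To see what the loop above does without starting a container, the same `eval` and heredoc technique can be tried on a single throwaway template. This is a sketch under assumptions: the temporary directory stands in for `${DOLPHINSCHEDULER_HOME}`, and the template holds only the `server.port` line from the example above.

```bash
# Illustrative only: a temporary directory stands in for the real
# installation directory so the snippet can run anywhere.
DOLPHINSCHEDULER_HOME=$(mktemp -d)
mkdir -p "${DOLPHINSCHEDULER_HOME}/conf"
export API_SERVER_PORT=5555

# Write a one-line template. The quoted heredoc keeps ${API_SERVER_PORT}
# unexpanded inside the .tpl file.
cat > "${DOLPHINSCHEDULER_HOME}/conf/application-api.properties.tpl" << 'TPL'
server.port=${API_SERVER_PORT}
TPL

# Same idea as the loop above: eval an unquoted heredoc so the shell
# substitutes the variables, then drop the .tpl suffix with ${tpl%.*}.
for tpl in "${DOLPHINSCHEDULER_HOME}"/conf/*.tpl; do
eval "cat << EOF
$(cat "${tpl}")
EOF
" > "${tpl%.*}"
done

# prints: server.port=5555
cat "${DOLPHINSCHEDULER_HOME}/conf/application-api.properties"
```

Because the template text passes through `eval`, any command substitution inside a `.tpl` file is executed as well, so templates should only come from a trusted source.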

|

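closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 2,584 | [Feature] Suggest making MySQL the default database for k8s deployments to lower the barrier to entry | We suggest making MySQL the default database for k8s deployments, to lower the barrier to entry.

With the growing popularity of NewSQL databases such as TiDB, NewSQL is becoming the database of choice for technical middle platforms. TiDB, a very popular project, is a leading choice for building such a platform, and it is compatible with the MySQL protocol rather than the pg protocol, so storing incubator-dolphinscheduler's data directly in TiDB is a very good fit | https://github.com/apache/dolphinscheduler/issues/2584 | https://github.com/apache/dolphinscheduler/pull/4875 | 139211f3ddf9e30fb058c659fd467b18a95d0dbe | bfc5d1e4ba08cc19059bb40293bb0777b3d42377 | "2020-04-30T02:53:24Z" | java | "2021-02-27T14:44:55Z" | docker/build/conf/dolphinscheduler/worker.properties.tpl | #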

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# worker execute thread num

worker.exec.threads=${WORKER_EXEC_THREADS}

# worker heartbeat interval

worker.heartbeat.interval=${WORKER_HEARTBEAT_INTERVAL}

# the worker server can work only while the cpu load average is below this value. default value -1: the number of cpu cores * 2

worker.max.cpuload.avg=${WORKER_MAX_CPULOAD_AVG}

# the worker server can work only while available memory is above this reserved amount. default value : physical memory * 1/6, unit is G.

worker.reserved.memory=${WORKER_RESERVED_MEMORY}

# worker listener port

worker.listen.port=${WORKER_LISTEN_PORT}

# default worker group

worker.groups=${WORKER_GROUPS}

# default worker weight

worker.weight=${WORKER_WEIGHT}

# alert server listener host

alert.listen.host=${ALERT_LISTEN_HOST}

|

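closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 2,584 | [Feature] Suggest making MySQL the default database for k8s deployments to lower the barrier to entry | We suggest making MySQL the default database for k8s deployments, to lower the barrier to entry.

With the growing popularity of NewSQL databases such as TiDB, NewSQL is becoming the database of choice for technical middle platforms. TiDB, a very popular project, is a leading choice for building such a platform, and it is compatible with the MySQL protocol rather than the pg protocol, so storing incubator-dolphinscheduler's data directly in TiDB is a very good fit | https://github.com/apache/dolphinscheduler/issues/2584 | https://github.com/apache/dolphinscheduler/pull/4875 | 139211f3ddf9e30fb058c659fd467b18a95d0dbe | bfc5d1e4ba08cc19059bb40293bb0777b3d42377 | "2020-04-30T02:53:24Z" | java | "2021-02-27T14:44:55Z" | docker/docker-swarm/docker-compose.yml | # Licensed to the Apache Software Foundation (ASF) under one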

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

version: "3.4"

services:

  dolphinscheduler-postgresql:
    image: bitnami/postgresql:latest
    container_name: dolphinscheduler-postgresql
    ports:
      - 5432:5432
    environment:
      TZ: Asia/Shanghai
      POSTGRESQL_USERNAME: root
      POSTGRESQL_PASSWORD: root
      POSTGRESQL_DATABASE: dolphinscheduler
    volumes:
      - dolphinscheduler-postgresql:/bitnami/postgresql
      - dolphinscheduler-postgresql-initdb:/docker-entrypoint-initdb.d
    restart: unless-stopped
    networks:
      - dolphinscheduler

  dolphinscheduler-zookeeper:
    image: bitnami/zookeeper:latest
    container_name: dolphinscheduler-zookeeper
    ports:
      - 2181:2181
    environment:
      TZ: Asia/Shanghai
      ALLOW_ANONYMOUS_LOGIN: "yes"
      ZOO_4LW_COMMANDS_WHITELIST: srvr,ruok,wchs,cons
    volumes:
      - dolphinscheduler-zookeeper:/bitnami/zookeeper
    restart: unless-stopped
    networks:
      - dolphinscheduler

  dolphinscheduler-api:
    image: apache/dolphinscheduler:latest
    container_name: dolphinscheduler-api
    command: api-server
    ports:
      - 12345:12345
    environment:
      TZ: Asia/Shanghai
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
      RESOURCE_STORAGE_TYPE: HDFS
      RESOURCE_UPLOAD_PATH: /dolphinscheduler
      FS_DEFAULT_FS: file:///
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "ApiApplicationServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    depends_on:
      - dolphinscheduler-postgresql
      - dolphinscheduler-zookeeper
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
      - dolphinscheduler-resource-local:/dolphinscheduler
    restart: unless-stopped
    networks:
      - dolphinscheduler

  dolphinscheduler-alert:
    image: apache/dolphinscheduler:latest
    container_name: dolphinscheduler-alert
    command: alert-server
    ports:
      - 50052:50052
    environment:
      TZ: Asia/Shanghai
      ALERT_PLUGIN_DIR: lib/plugin/alert
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "AlertServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    depends_on:
      - dolphinscheduler-postgresql
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    restart: unless-stopped
    networks:
      - dolphinscheduler

  dolphinscheduler-master:
    image: apache/dolphinscheduler:latest
    container_name: dolphinscheduler-master
    command: master-server
    ports:
      - 5678:5678
    environment:
      TZ: Asia/Shanghai
      MASTER_EXEC_THREADS: "100"
      MASTER_EXEC_TASK_NUM: "20"
      MASTER_HEARTBEAT_INTERVAL: "10"
      MASTER_TASK_COMMIT_RETRYTIMES: "5"
      MASTER_TASK_COMMIT_INTERVAL: "1000"
      MASTER_MAX_CPULOAD_AVG: "100"
      MASTER_RESERVED_MEMORY: "0.1"
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "MasterServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    depends_on:
      - dolphinscheduler-postgresql
      - dolphinscheduler-zookeeper
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    restart: unless-stopped
    networks:
      - dolphinscheduler

  dolphinscheduler-worker:
    image: apache/dolphinscheduler:latest
    container_name: dolphinscheduler-worker
    command: worker-server
    ports:
      - 1234:1234
      - 50051:50051
    environment:
      TZ: Asia/Shanghai
      WORKER_EXEC_THREADS: "100"
      WORKER_HEARTBEAT_INTERVAL: "10"
      WORKER_MAX_CPULOAD_AVG: "100"
      WORKER_RESERVED_MEMORY: "0.1"
      WORKER_GROUPS: "default"
      WORKER_WEIGHT: "100"
      HADOOP_HOME: "/opt/soft/hadoop"
      HADOOP_CONF_DIR: "/opt/soft/hadoop/etc/hadoop"
      SPARK_HOME1: "/opt/soft/spark1"
      SPARK_HOME2: "/opt/soft/spark2"
      PYTHON_HOME: "/usr/bin/python"
      JAVA_HOME: "/usr/lib/jvm/java-1.8-openjdk"
      HIVE_HOME: "/opt/soft/hive"
      FLINK_HOME: "/opt/soft/flink"
      DATAX_HOME: "/opt/soft/datax/bin/datax.py"
      DOLPHINSCHEDULER_DATA_BASEDIR_PATH: /tmp/dolphinscheduler
      ALERT_LISTEN_HOST: dolphinscheduler-alert
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
      RESOURCE_STORAGE_TYPE: HDFS
      RESOURCE_UPLOAD_PATH: /dolphinscheduler
      FS_DEFAULT_FS: file:///
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "WorkerServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    depends_on:
      - dolphinscheduler-postgresql
      - dolphinscheduler-zookeeper
    volumes:
      - dolphinscheduler-worker-data:/tmp/dolphinscheduler
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
      - dolphinscheduler-resource-local:/dolphinscheduler
    restart: unless-stopped
    networks:
      - dolphinscheduler

networks:
  dolphinscheduler:
    driver: bridge

volumes:
  dolphinscheduler-postgresql:
  dolphinscheduler-postgresql-initdb:
  dolphinscheduler-zookeeper:
  dolphinscheduler-worker-data:
  dolphinscheduler-logs:
  dolphinscheduler-resource-local: |

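closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 2,584 | [Feature] Suggest making MySQL the default database for k8s deployments to lower the barrier to entry | We suggest making MySQL the default database for k8s deployments, to lower the barrier to entry.

With the growing popularity of NewSQL databases such as TiDB, NewSQL is becoming the database of choice for technical middle platforms. TiDB, a very popular project, is a leading choice for building such a platform, and it is compatible with the MySQL protocol rather than the pg protocol, so storing incubator-dolphinscheduler's data directly in TiDB is a very good fit | https://github.com/apache/dolphinscheduler/issues/2584 | https://github.com/apache/dolphinscheduler/pull/4875 | 139211f3ddf9e30fb058c659fd467b18a95d0dbe | bfc5d1e4ba08cc19059bb40293bb0777b3d42377 | "2020-04-30T02:53:24Z" | java | "2021-02-27T14:44:55Z" | docker/docker-swarm/docker-stack.yml | # Licensed to the Apache Software Foundation (ASF) under one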

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

version: "3.4"

services:

  dolphinscheduler-postgresql:
    image: bitnami/postgresql:latest
    ports:
      - 5432:5432
    environment:
      TZ: Asia/Shanghai
      POSTGRESQL_USERNAME: root
      POSTGRESQL_PASSWORD: root
      POSTGRESQL_DATABASE: dolphinscheduler
    volumes:
      - dolphinscheduler-postgresql:/bitnami/postgresql
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-zookeeper:
    image: bitnami/zookeeper:latest
    ports:
      - 2181:2181
    environment:
      TZ: Asia/Shanghai
      ALLOW_ANONYMOUS_LOGIN: "yes"
      ZOO_4LW_COMMANDS_WHITELIST: srvr,ruok,wchs,cons
    volumes:
      - dolphinscheduler-zookeeper:/bitnami/zookeeper
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-api:
    image: apache/dolphinscheduler:latest
    command: api-server
    ports:
      - 12345:12345
    environment:
      TZ: Asia/Shanghai
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
      RESOURCE_STORAGE_TYPE: HDFS
      RESOURCE_UPLOAD_PATH: /dolphinscheduler
      FS_DEFAULT_FS: file:///
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "ApiApplicationServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-alert:
    image: apache/dolphinscheduler:latest
    command: alert-server
    ports:
      - 50052:50052
    environment:
      TZ: Asia/Shanghai
      ALERT_PLUGIN_DIR: lib/plugin/alert
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "AlertServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-master:
    image: apache/dolphinscheduler:latest
    command: master-server
    ports:
      - 5678:5678
    environment:
      TZ: Asia/Shanghai
      MASTER_EXEC_THREADS: "100"
      MASTER_EXEC_TASK_NUM: "20"
      MASTER_HEARTBEAT_INTERVAL: "10"
      MASTER_TASK_COMMIT_RETRYTIMES: "5"
      MASTER_TASK_COMMIT_INTERVAL: "1000"
      MASTER_MAX_CPULOAD_AVG: "100"
      MASTER_RESERVED_MEMORY: "0.1"
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "MasterServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    volumes:
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

  dolphinscheduler-worker:
    image: apache/dolphinscheduler:latest
    command: worker-server
    ports:
      - 1234:1234
      - 50051:50051
    environment:
      TZ: Asia/Shanghai
      WORKER_EXEC_THREADS: "100"
      WORKER_HEARTBEAT_INTERVAL: "10"
      WORKER_MAX_CPULOAD_AVG: "100"
      WORKER_RESERVED_MEMORY: "0.1"
      WORKER_GROUPS: "default"
      WORKER_WEIGHT: "100"
      HADOOP_HOME: "/opt/soft/hadoop"
      HADOOP_CONF_DIR: "/opt/soft/hadoop/etc/hadoop"
      SPARK_HOME1: "/opt/soft/spark1"
      SPARK_HOME2: "/opt/soft/spark2"
      PYTHON_HOME: "/usr/bin/python"
      JAVA_HOME: "/usr/lib/jvm/java-1.8-openjdk"
      HIVE_HOME: "/opt/soft/hive"
      FLINK_HOME: "/opt/soft/flink"
      DATAX_HOME: "/opt/soft/datax/bin/datax.py"
      DOLPHINSCHEDULER_DATA_BASEDIR_PATH: /tmp/dolphinscheduler
      ALERT_LISTEN_HOST: dolphinscheduler-alert
      DATABASE_HOST: dolphinscheduler-postgresql
      DATABASE_PORT: 5432
      DATABASE_USERNAME: root
      DATABASE_PASSWORD: root
      DATABASE_DATABASE: dolphinscheduler
      ZOOKEEPER_QUORUM: dolphinscheduler-zookeeper:2181
      RESOURCE_STORAGE_TYPE: HDFS
      RESOURCE_UPLOAD_PATH: /dolphinscheduler
      FS_DEFAULT_FS: file:///
    healthcheck:
      test: ["CMD", "/root/checkpoint.sh", "WorkerServer"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 30s
    volumes:
      - dolphinscheduler-worker-data:/tmp/dolphinscheduler
      - dolphinscheduler-logs:/opt/dolphinscheduler/logs
    networks:
      - dolphinscheduler
    deploy:
      mode: replicated
      replicas: 1

networks:
  dolphinscheduler:
    driver: overlay

volumes:
  dolphinscheduler-postgresql:
  dolphinscheduler-zookeeper:
  dolphinscheduler-worker-data:
  dolphinscheduler-logs: |

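closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 2,584 | [Feature] Suggest making MySQL the default database for k8s deployments to lower the barrier to entry | We suggest making MySQL the default database for k8s deployments, to lower the barrier to entry.

With the growing popularity of NewSQL databases such as TiDB, NewSQL is becoming the database of choice for technical middle platforms. TiDB, a very popular project, is a leading choice for building such a platform, and it is compatible with the MySQL protocol rather than the pg protocol, so storing incubator-dolphinscheduler's data directly in TiDB is a very good fit | https://github.com/apache/dolphinscheduler/issues/2584 | https://github.com/apache/dolphinscheduler/pull/4875 | 139211f3ddf9e30fb058c659fd467b18a95d0dbe | bfc5d1e4ba08cc19059bb40293bb0777b3d42377 | "2020-04-30T02:53:24Z" | java | "2021-02-27T14:44:55Z" | docker/kubernetes/dolphinscheduler/README.md | # Dolphin Scheduler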

[Dolphin Scheduler](https://dolphinscheduler.apache.org) is a distributed and easy-to-expand visual DAG workflow scheduling system, dedicated to solving the complex dependencies in data processing so that the scheduling system works out of the box.

## Introduction

This chart bootstraps a [Dolphin Scheduler](https://dolphinscheduler.apache.org) distributed deployment on a [Kubernetes](http://kubernetes.io) cluster using the [Helm](https://helm.sh) package manager.

## Prerequisites

- Helm 3.1.0+

- Kubernetes 1.12+

- PV provisioner support in the underlying infrastructure

## Installing the Chart

To install the chart with the release name `dolphinscheduler`:

```bash

$ git clone https://github.com/apache/incubator-dolphinscheduler.git

$ cd incubator-dolphinscheduler/docker/kubernetes/dolphinscheduler

$ helm repo add bitnami https://charts.bitnami.com/bitnami

$ helm dependency update .

$ helm install dolphinscheduler .

```

These commands deploy Dolphin Scheduler on the Kubernetes cluster in the default configuration. The [configuration](#configuration) section lists the parameters that can be configured during installation.

> **Tip**: List all releases using `helm list`
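Chart defaults can be overridden at install time with a values file. As a hedged sketch, the snippet below writes a file that disables the bundled PostgreSQL and points the chart at an external database, using the `postgresql.enabled` and `externalDatabase.*` parameters from the configuration table. The file name, host name, and credentials are placeholders, not values taken from the chart.

```bash
# Hypothetical values file; host, username and password are placeholders.
cat > external-db-values.yaml << 'EOF'
postgresql:
  enabled: false
externalDatabase:
  type: "postgresql"
  driver: "org.postgresql.Driver"
  host: "db.example.internal"
  port: "5432"
  username: "dolphinscheduler"
  password: "change-me"
  database: "dolphinscheduler"
  params: "characterEncoding=utf8"
EOF

# Then, from the chart directory (not executed here):
#   helm install dolphinscheduler . -f external-db-values.yaml
```

Keeping overrides in a file rather than in repeated `--set` flags makes the same settings easy to reuse for `helm upgrade`.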

## Uninstalling the Chart

To uninstall/delete the `dolphinscheduler` deployment:

```bash

$ helm uninstall dolphinscheduler

```

The command removes all the Kubernetes components associated with the chart and deletes the release.

## Configuration

The following table lists the configurable parameters of the Dolphin Scheduler chart and their default values.

| Parameter | Description | Default |

| --------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------ | ----------------------------------------------------- |

| `timezone` | World time and date for cities in all time zones | `Asia/Shanghai` |

| `image.registry` | Docker image registry for the Dolphin Scheduler | `docker.io` |

| `image.repository` | Docker image repository for the Dolphin Scheduler | `dolphinscheduler` |

| `image.tag` | Docker image version for the Dolphin Scheduler | `1.2.1` |

| `image.imagePullPolicy` | Image pull policy. One of Always, Never, IfNotPresent | `IfNotPresent` |

| `image.pullSecres` | PullSecrets is an optional list of references to secrets in the same namespace to use for pulling any of the images | `[]` |

| | | |

| `postgresql.enabled` | If no external PostgreSQL exists, Dolphin Scheduler uses an internal PostgreSQL by default | `true` |

| `postgresql.postgresqlUsername` | The username for internal PostgreSQL | `root` |

| `postgresql.postgresqlPassword` | The password for internal PostgreSQL | `root` |

| `postgresql.postgresqlDatabase` | The database for internal PostgreSQL | `dolphinscheduler` |

| `postgresql.persistence.enabled` | Set `postgresql.persistence.enabled` to `true` to mount a new volume for internal PostgreSQL | `false` |

| `postgresql.persistence.size` | `PersistentVolumeClaim` Size | `20Gi` |

| `postgresql.persistence.storageClass` | PostgreSQL data Persistent Volume Storage Class. If set to "-", storageClassName: "", which disables dynamic provisioning | `-` |

| `externalDatabase.type` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses this database type | `postgresql` |

| `externalDatabase.driver` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses this database driver | `org.postgresql.Driver` |

| `externalDatabase.host` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses this database host | `localhost` |

| `externalDatabase.port` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses this database port | `5432` |

| `externalDatabase.username` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses this database username | `root` |

| `externalDatabase.password` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses this database password | `root` |

| `externalDatabase.database` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses this database name | `dolphinscheduler` |

| `externalDatabase.params` | If an external PostgreSQL exists and `postgresql.enabled` is set to `false`, Dolphin Scheduler uses these database params | `characterEncoding=utf8` |

| | | |

| `zookeeper.enabled` | If no external Zookeeper exists, Dolphin Scheduler uses an internal Zookeeper by default | `true` |

| `zookeeper.taskQueue` | Specify task queue for `master` and `worker` | `zookeeper` |

| `zookeeper.persistence.enabled` | Set `zookeeper.persistence.enabled` to `true` to mount a new volume for internal Zookeeper | `false` |

| `zookeeper.persistence.size` | `PersistentVolumeClaim` Size | `20Gi` |

| `zookeeper.persistence.storageClass` | Zookeeper data Persistent Volume Storage Class. If set to "-", storageClassName: "", which disables dynamic provisioning | `-` |

| `externalZookeeper.taskQueue` | If an external Zookeeper exists and `zookeeper.enabled` is set to `false`, specifies the task queue for `master` and `worker` | `zookeeper` |

| `externalZookeeper.zookeeperQuorum` | If an external Zookeeper exists and `zookeeper.enabled` is set to `false`, specifies the Zookeeper quorum | `127.0.0.1:2181` |

| `externalZookeeper.zookeeperRoot` | If an external Zookeeper exists and `zookeeper.enabled` is set to `false`, specifies the Zookeeper root path for `master` and `worker` | `dolphinscheduler` |

| | | |

| `common.configmap.DOLPHINSCHEDULER_ENV_PATH` | Extra env file path. | `/tmp/dolphinscheduler/env` |

| `common.configmap.DOLPHINSCHEDULER_DATA_BASEDIR_PATH` | File uploaded path of DS. | `/tmp/dolphinscheduler/files` |

| `common.configmap.RESOURCE_STORAGE_TYPE` | Resource storage type. Supported types are S3, HDFS, and NONE. | `NONE` |

| `common.configmap.RESOURCE_UPLOAD_PATH` | The base path of resource. | `/ds` |

| `common.configmap.FS_DEFAULT_FS` | The default fs of resource, for s3 is the `s3a` prefix and bucket name. | `s3a://xxxx` |

| `common.configmap.FS_S3A_ENDPOINT` | If the resource type is `S3`, you should fill in this field; it is the endpoint of s3. | `s3.xxx.amazonaws.com` |

| `common.configmap.FS_S3A_ACCESS_KEY` | The access key for your s3 bucket. | `xxxxxxx` |

| `common.configmap.FS_S3A_SECRET_KEY` | The secret key for your s3 bucket. | `xxxxxxx` |

| `master.podManagementPolicy` | PodManagementPolicy controls how pods are created during initial scale up, when replacing pods on nodes, or when scaling down | `Parallel` |

| | | |

| `master.replicas` | Replicas is the desired number of replicas of the given Template | `3` |

| `master.nodeSelector` | NodeSelector is a selector which must be true for the pod to fit on a node | `{}` |

| `master.tolerations` | If specified, the pod's tolerations | `{}` |

| `master.affinity` | If specified, the pod's scheduling constraints | `{}` |

| `master.jvmOptions` | The JVM options for master server. | `""` |

| `master.resources` | The `resource` limit and request config for master server. | `{}` |

| `master.annotations` | The `annotations` for master server. | `{}` |

| `master.configmap.MASTER_EXEC_THREADS` | Master execute thread num | `100` |

| `master.configmap.MASTER_EXEC_TASK_NUM` | Master execute task number in parallel | `20` |

| `master.configmap.MASTER_HEARTBEAT_INTERVAL` | Master heartbeat interval | `10` |

| `master.configmap.MASTER_TASK_COMMIT_RETRYTIMES` | Master commit task retry times | `5` |

| `master.configmap.MASTER_TASK_COMMIT_INTERVAL` | Master commit task interval | `1000` |

| `master.configmap.MASTER_MAX_CPULOAD_AVG` | The master server can work only while the CPU load average is below this value. Default value: the number of CPU cores * 2 | `100` |

| `master.configmap.MASTER_RESERVED_MEMORY` | The master server can work only while available memory is above this reserved amount. Default value: physical memory * 1/10, unit is G | `0.1` |

| `master.livenessProbe.enabled` | Turn on and off liveness probe | `true` |

| `master.livenessProbe.initialDelaySeconds` | Delay before liveness probe is initiated | `30` |

| `master.livenessProbe.periodSeconds` | How often to perform the probe | `30` |

| `master.livenessProbe.timeoutSeconds` | When the probe times out | `5` |

| `master.livenessProbe.failureThreshold` | Minimum consecutive failures for the probe | `3` |

| `master.livenessProbe.successThreshold` | Minimum consecutive successes for the probe | `1` |

| `master.readinessProbe.enabled` | Turn on and off readiness probe | `true` |

| `master.readinessProbe.initialDelaySeconds` | Delay before readiness probe is initiated | `30` |

| `master.readinessProbe.periodSeconds` | How often to perform the probe | `30` |

| `master.readinessProbe.timeoutSeconds` | When the probe times out | `5` |

| `master.readinessProbe.failureThreshold` | Minimum consecutive failures for the probe | `3` |

| `master.readinessProbe.successThreshold` | Minimum consecutive successes for the probe | `1` |

| `master.persistentVolumeClaim.enabled` | Set `master.persistentVolumeClaim.enabled` to `true` to mount a new volume for `master` | `false` |

| `master.persistentVolumeClaim.accessModes` | `PersistentVolumeClaim` Access Modes | `[ReadWriteOnce]` |

| `master.persistentVolumeClaim.storageClassName` | `Master` logs data Persistent Volume Storage Class. If set to "-", storageClassName: "", which disables dynamic provisioning | `-` |

| `master.persistentVolumeClaim.storage` | `PersistentVolumeClaim` Size | `20Gi` |

| | | |

| `worker.podManagementPolicy` | PodManagementPolicy controls how pods are created during initial scale up, when replacing pods on nodes, or when scaling down | `Parallel` |

| `worker.replicas` | Replicas is the desired number of replicas of the given Template | `3` |

| `worker.nodeSelector` | NodeSelector is a selector which must be true for the pod to fit on a node | `{}` |

| `worker.tolerations` | If specified, the pod's tolerations | `{}` |

| `worker.affinity` | If specified, the pod's scheduling constraints | `{}` |

| `worker.jvmOptions` | The JVM options for worker server. | `""` |

| `worker.resources` | The `resource` limit and request config for worker server. | `{}` |

| `worker.annotations` | The `annotations` for worker server. | `{}` |

| `worker.configmap.WORKER_EXEC_THREADS` | Worker execute thread num | `100` |

| `worker.configmap.WORKER_HEARTBEAT_INTERVAL` | Worker heartbeat interval | `10` |

| `worker.configmap.WORKER_FETCH_TASK_NUM` | Submit the number of tasks at a time | `3` |

| `worker.configmap.WORKER_MAX_CPULOAD_AVG` | The worker server can work only while the CPU load average is below this value. Default value: the number of CPU cores * 2 | `100` |

| `worker.configmap.WORKER_RESERVED_MEMORY` | The worker server can work only while available memory is above this reserved amount. Default value: physical memory * 1/10, unit is G | `0.1` |

| `worker.configmap.DOLPHINSCHEDULER_DATA_BASEDIR_PATH` | User data directory path, self configuration, please make sure the directory exists and have read write permissions | `/tmp/dolphinscheduler` |

| `worker.configmap.DOLPHINSCHEDULER_ENV` | System env path, self configuration, please read `values.yaml` | `[]` |

| `worker.livenessProbe.enabled` | Turn on and off liveness probe | `true` |

| `worker.livenessProbe.initialDelaySeconds` | Delay before liveness probe is initiated | `30` |

| `worker.livenessProbe.periodSeconds` | How often to perform the probe | `30` |

| `worker.livenessProbe.timeoutSeconds` | When the probe times out | `5` |

| `worker.livenessProbe.failureThreshold` | Minimum consecutive failures for the probe | `3` |

| `worker.livenessProbe.successThreshold` | Minimum consecutive successes for the probe | `1` |

| `worker.readinessProbe.enabled` | Turn on and off readiness probe | `true` |

| `worker.readinessProbe.initialDelaySeconds` | Delay before readiness probe is initiated | `30` |

| `worker.readinessProbe.periodSeconds` | How often to perform the probe | `30` |

| `worker.readinessProbe.timeoutSeconds` | When the probe times out | `5` |

| `worker.readinessProbe.failureThreshold` | Minimum consecutive failures for the probe | `3` |

| `worker.readinessProbe.successThreshold` | Minimum consecutive successes for the probe | `1` |

| `worker.persistentVolumeClaim.enabled` | Set `worker.persistentVolumeClaim.enabled` to `true` to enable `persistentVolumeClaim` for `worker` | `false` |

| `worker.persistentVolumeClaim.dataPersistentVolume.enabled` | Set `worker.persistentVolumeClaim.dataPersistentVolume.enabled` to `true` to mount a data volume for `worker` | `false` |

| `worker.persistentVolumeClaim.dataPersistentVolume.accessModes` | `PersistentVolumeClaim` Access Modes | `[ReadWriteOnce]` |

| `worker.persistentVolumeClaim.dataPersistentVolume.storageClassName` | `Worker` data Persistent Volume Storage Class. If set to "-", storageClassName: "", which disables dynamic provisioning | `-` |

| `worker.persistentVolumeClaim.dataPersistentVolume.storage` | `PersistentVolumeClaim` Size | `20Gi` |

| `worker.persistentVolumeClaim.logsPersistentVolume.enabled` | Set `worker.persistentVolumeClaim.logsPersistentVolume.enabled` to `true` to mount a logs volume for `worker` | `false` |

| `worker.persistentVolumeClaim.logsPersistentVolume.accessModes` | `PersistentVolumeClaim` Access Modes | `[ReadWriteOnce]` |

| `worker.persistentVolumeClaim.logsPersistentVolume.storageClassName` | `Worker` logs data Persistent Volume Storage Class. If set to "-", storageClassName: "", which disables dynamic provisioning | `-` |

| `worker.persistentVolumeClaim.logsPersistentVolume.storage` | `PersistentVolumeClaim` Size | `20Gi` |

| | | |

| `alert.strategy.type` | Type of deployment. Can be "Recreate" or "RollingUpdate" | `RollingUpdate` |

| `alert.strategy.rollingUpdate.maxSurge` | The maximum number of pods that can be scheduled above the desired number of pods | `25%` |

| `alert.strategy.rollingUpdate.maxUnavailable` | The maximum number of pods that can be unavailable during the update | `25%` |

| `alert.replicas` | Replicas is the desired number of replicas of the given Template | `1` |

| `alert.nodeSelector` | NodeSelector is a selector which must be true for the pod to fit on a node | `{}` |

| `alert.tolerations` | If specified, the pod's tolerations | `{}` |

| `alert.affinity` | If specified, the pod's scheduling constraints | `{}` |

| `alert.jvmOptions` | The JVM options for alert server. | `""` |

| `alert.resources` | The `resource` limit and request config for alert server. | `{}` |

| `alert.annotations` | The `annotations` for alert server. | `{}` |

| `alert.configmap.ALERT_PLUGIN_DIR` | Alert plugin path. | `/opt/dolphinscheduler/alert/plugin` |

| `alert.configmap.XLS_FILE_PATH` | XLS file path | `/tmp/xls` |

| `alert.configmap.MAIL_SERVER_HOST` | Mail `SERVER HOST` | `nil` |

| `alert.configmap.MAIL_SERVER_PORT` | Mail `SERVER PORT` | `nil` |

| `alert.configmap.MAIL_SENDER` | Mail `SENDER` | `nil` |

| `alert.configmap.MAIL_USER` | Mail `USER` | `nil` |

| `alert.configmap.MAIL_PASSWD` | Mail `PASSWORD` | `nil` |

| `alert.configmap.MAIL_SMTP_STARTTLS_ENABLE` | Mail `SMTP STARTTLS` enable | `false` |

| `alert.configmap.MAIL_SMTP_SSL_ENABLE` | Mail `SMTP SSL` enable | `false` |

| `alert.configmap.MAIL_SMTP_SSL_TRUST` | Mail `SMTP SSL TRUST` | `nil` |

| `alert.configmap.ENTERPRISE_WECHAT_ENABLE` | `Enterprise Wechat` enable | `false` |

| `alert.configmap.ENTERPRISE_WECHAT_CORP_ID` | `Enterprise Wechat` corp id | `nil` |

| `alert.configmap.ENTERPRISE_WECHAT_SECRET` | `Enterprise Wechat` secret | `nil` |

| `alert.configmap.ENTERPRISE_WECHAT_AGENT_ID` | `Enterprise Wechat` agent id | `nil` |

| `alert.configmap.ENTERPRISE_WECHAT_USERS` | `Enterprise Wechat` users | `nil` |

| `alert.livenessProbe.enabled` | Turn on and off liveness probe | `true` |

| `alert.livenessProbe.initialDelaySeconds` | Delay before liveness probe is initiated | `30` |

| `alert.livenessProbe.periodSeconds` | How often to perform the probe | `30` |

| `alert.livenessProbe.timeoutSeconds` | Number of seconds after which the probe times out | `5` |

| `alert.livenessProbe.failureThreshold` | Minimum consecutive failures for the probe to be considered failed | `3` |

| `alert.livenessProbe.successThreshold` | Minimum consecutive successes for the probe to be considered successful | `1` |

| `alert.readinessProbe.enabled` | Turn on and off readiness probe | `true` |

| `alert.readinessProbe.initialDelaySeconds` | Delay before readiness probe is initiated | `30` |

| `alert.readinessProbe.periodSeconds` | How often to perform the probe | `30` |

| `alert.readinessProbe.timeoutSeconds` | Number of seconds after which the probe times out | `5` |

| `alert.readinessProbe.failureThreshold` | Minimum consecutive failures for the probe to be considered failed | `3` |

| `alert.readinessProbe.successThreshold` | Minimum consecutive successes for the probe to be considered successful | `1` |

| `alert.persistentVolumeClaim.enabled` | Set `alert.persistentVolumeClaim.enabled` to `true` to mount a new volume for `alert` | `false` |

| `alert.persistentVolumeClaim.accessModes` | `PersistentVolumeClaim` Access Modes | `[ReadWriteOnce]` |

| `alert.persistentVolumeClaim.storageClassName` | `Alert` logs data Persistent Volume Storage Class. If set to "-", storageClassName: "", which disables dynamic provisioning | `-` |

| `alert.persistentVolumeClaim.storage` | `PersistentVolumeClaim` Size | `20Gi` |

| | | |

| `api.strategy.type` | Type of deployment. Can be "Recreate" or "RollingUpdate" | `RollingUpdate` |

| `api.strategy.rollingUpdate.maxSurge` | The maximum number of pods that can be scheduled above the desired number of pods | `25%` |

| `api.strategy.rollingUpdate.maxUnavailable` | The maximum number of pods that can be unavailable during the update | `25%` |

| `api.replicas` | Replicas is the desired number of replicas of the given Template | `1` |

| `api.nodeSelector` | NodeSelector is a selector which must be true for the pod to fit on a node | `{}` |

| `api.tolerations` | If specified, the pod's tolerations | `{}` |

| `api.affinity` | If specified, the pod's scheduling constraints | `{}` |

| `api.jvmOptions` | The JVM options for api server. | `""` |

| `api.resources` | The `resource` limit and request config for api server. | `{}` |

| `api.annotations` | The `annotations` for api server. | `{}` |

| `api.livenessProbe.enabled` | Turn on and off liveness probe | `true` |

| `api.livenessProbe.initialDelaySeconds` | Delay before liveness probe is initiated | `30` |

| `api.livenessProbe.periodSeconds` | How often to perform the probe | `30` |

| `api.livenessProbe.timeoutSeconds` | Number of seconds after which the probe times out | `5` |

| `api.livenessProbe.failureThreshold` | Minimum consecutive failures for the probe to be considered failed | `3` |

| `api.livenessProbe.successThreshold` | Minimum consecutive successes for the probe to be considered successful | `1` |

| `api.readinessProbe.enabled` | Turn on and off readiness probe | `true` |

| `api.readinessProbe.initialDelaySeconds` | Delay before readiness probe is initiated | `30` |

| `api.readinessProbe.periodSeconds` | How often to perform the probe | `30` |

| `api.readinessProbe.timeoutSeconds` | Number of seconds after which the probe times out | `5` |

| `api.readinessProbe.failureThreshold` | Minimum consecutive failures for the probe to be considered failed | `3` |

| `api.readinessProbe.successThreshold` | Minimum consecutive successes for the probe to be considered successful | `1` |

| `api.persistentVolumeClaim.enabled` | Set `api.persistentVolumeClaim.enabled` to `true` to mount a new volume for `api` | `false` |

| `api.persistentVolumeClaim.accessModes` | `PersistentVolumeClaim` Access Modes | `[ReadWriteOnce]` |

| `api.persistentVolumeClaim.storageClassName` | `api` logs data Persistent Volume Storage Class. If set to "-", storageClassName: "", which disables dynamic provisioning | `-` |

| `api.persistentVolumeClaim.storage` | `PersistentVolumeClaim` Size | `20Gi` |

| | | |

| `ingress.enabled` | Enable ingress | `false` |

| `ingress.host` | Ingress host | `dolphinscheduler.org` |

| `ingress.path` | Ingress path | `/` |

| `ingress.tls.enabled` | Enable ingress tls | `false` |

| `ingress.tls.hosts` | Ingress tls hosts | `dolphinscheduler.org` |

| `ingress.tls.secretName` | Ingress tls secret name | `dolphinscheduler-tls` |
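
As a rough illustration of how the parameters above fit together, the fragment below sketches a values override that keeps the default worker liveness probe settings, enables the worker data volume, and turns on ingress. The file name `my-values.yaml` is hypothetical, and the values are either the defaults listed in the table or illustrative assumptions, not recommendations.

```yaml
# my-values.yaml (hypothetical file name); keys mirror the parameter table,
# values are the documented defaults unless noted otherwise.
worker:
  livenessProbe:
    enabled: true
    initialDelaySeconds: 30
    periodSeconds: 30
    timeoutSeconds: 5
    failureThreshold: 3   # consecutive failures before the probe counts as failed
    successThreshold: 1   # consecutive successes before the probe counts as passed
  persistentVolumeClaim:
    enabled: true         # assumption: default is false, enabled here for the example
    dataPersistentVolume:
      enabled: true       # assumption: default is false
      accessModes:
        - ReadWriteOnce
      storageClassName: "-"
      storage: "20Gi"
ingress:
  enabled: true           # assumption: default is false
  host: "dolphinscheduler.org"
  path: "/"
```

A file like this would typically be passed to Helm with the `-f` flag at install or upgrade time; the release name and chart location depend on your setup.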

For more information please refer to the [chart](https://github.com/apache/incubator-dolphinscheduler.git) documentation.

|

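closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 2,584 | [Feature] Suggest making MySQL the default database for k8s deployments to lower the barrier to entry | We suggest making MySQL the default database for k8s deployments to lower the barrier to entry.

With the growing popularity of NewSQL databases such as Tidb, NewSQL is becoming the preferred database for technical middle platforms. Tidb, a very popular project, is a top choice for building such a platform, and it is compatible with the MySQL protocol rather than the pg protocol, so storing incubator-dolphinscheduler's data directly in Tidb is a very good fit | https://github.com/apache/dolphinscheduler/issues/2584 | https://github.com/apache/dolphinscheduler/pull/4875 | 139211f3ddf9e30fb058c659fd467b18a95d0dbe | bfc5d1e4ba08cc19059bb40293bb0777b3d42377 | "2020-04-30T02:53:24Z" | java | "2021-02-27T14:44:55Z" | dolphinscheduler-server/src/main/resources/worker.properties | #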

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.