metadata

license: mit

tags:

- audio-feature-extraction

- speech-language-models

- gpt4-o

- tokenizer

- codec-representation

- text-to-speech

- automatic-speech-recognition

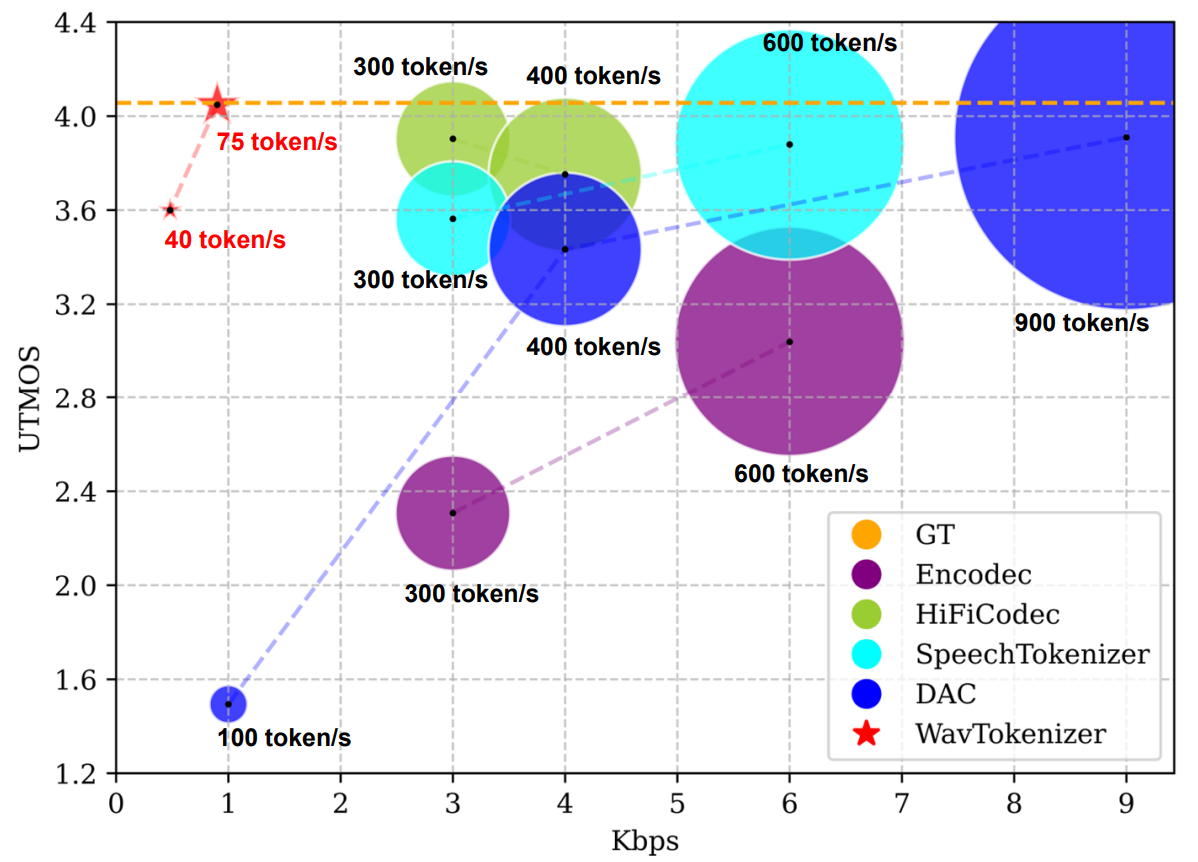

WavTokenizer: SOTA Discrete Codec Models With Forty Tokens Per Second for Audio Language Modeling

🎉🎉 With WavTokenizer, you can represent speech, music, and audio with only 40 tokens per second!

🎉🎉 With WavTokenizer, you can get strong reconstruction results.

🎉🎉 WavTokenizer carries rich semantic information and is built for audio language models such as GPT-4o.

🔥 News

- 2024.08: We released WavTokenizer on arXiv.

Installation

To use WavTokenizer, install it using:

conda create -n wavtokenizer python=3.9

conda activate wavtokenizer

pip install -r requirements.txt

Inference

Part1: Reconstruct audio from raw wav

from encoder.utils import convert_audio

import torchaudio

import torch

from decoder.pretrained import WavTokenizer

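device = torch.device('cpu')

config_path = "./configs/xxx.yaml"

model_path = "./xxx.ckpt"

audio_path = "xxx"  # path to the input audio file

audio_outpath = "xxx"  # path where the reconstructed audio is written

# Load the pretrained model and move it to the target device

wavtokenizer = WavTokenizer.from_pretrained0802(config_path, model_path)

wavtokenizer = wavtokenizer.to(device)

# Load the input audio and convert it to 24 kHz mono

wav, sr = torchaudio.load(audio_path)

wav = convert_audio(wav, sr, 24000, 1)

wav = wav.to(device)

bandwidth_id = torch.tensor([0], device=device)

# Encode to features and discrete codes, then decode the features back to a waveform

features, discrete_code = wavtokenizer.encode_infer(wav, bandwidth_id=bandwidth_id)

audio_out = wavtokenizer.decode(features, bandwidth_id=bandwidth_id)

# torchaudio.save expects a CPU tensor

torchaudio.save(audio_outpath, audio_out.cpu(), sample_rate=24000, encoding='PCM_S', bits_per_sample=16)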

Part2: Generate discrete codes

from encoder.utils import convert_audio

import torchaudio

import torch

from decoder.pretrained import WavTokenizer

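device = torch.device('cpu')

config_path = "./configs/xxx.yaml"

model_path = "./xxx.ckpt"

audio_path = "xxx"  # path to the input audio file

# Load the pretrained model and move it to the target device

wavtokenizer = WavTokenizer.from_pretrained0802(config_path, model_path)

wavtokenizer = wavtokenizer.to(device)

# Load the input audio and convert it to 24 kHz mono

wav, sr = torchaudio.load(audio_path)

wav = convert_audio(wav, sr, 24000, 1)

wav = wav.to(device)

bandwidth_id = torch.tensor([0], device=device)

# Keep only the discrete codes; the continuous features are not needed here

_, discrete_code = wavtokenizer.encode_infer(wav, bandwidth_id=bandwidth_id)

print(discrete_code)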

Part3: Reconstruct audio from discrete codes

# Reuses wavtokenizer from Part2; audio_tokens holds discrete codes of shape [n_q, 1, t] or [n_q, t]

features = wavtokenizer.codes_to_features(audio_tokens)

bandwidth_id = torch.tensor([0])

audio_out = wavtokenizer.decode(features, bandwidth_id=bandwidth_id)

Available models

🤗 links to the Hugging Face model hub.

| Model name | Hugging Face | Corpus | Tokens/s | Domain | Open-Source |
|---|---|---|---|---|---|
| WavTokenizer-small-600-24k-4096 | 🤗 | LibriTTS | 40 | Speech | √ |
| WavTokenizer-small-320-24k-4096 | 🤗 | LibriTTS | 75 | Speech | √ |
| WavTokenizer-medium-600-24k-4096 | 🤗 | 10000 Hours | 40 | Speech, Audio, Music | Coming Soon |
| WavTokenizer-medium-320-24k-4096 | 🤗 | 10000 Hours | 75 | Speech, Audio, Music | Coming Soon |
| WavTokenizer-large-600-24k-4096 | 🤗 | 80000 Hours | 40 | Speech, Audio, Music | Coming Soon |
| WavTokenizer-large-320-24k-4096 | 🤗 | 80000 Hours | 75 | Speech, Audio, Music | Coming Soon |
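The token rates in the table can be reproduced from the model names if one assumes, as the naming suggests but this README does not state, that the three numbers are the hop length in samples, the sampling rate, and the codebook size. The sketch below works under that assumption; the helper names are illustrative and not part of the WavTokenizer API, and the bitrate further assumes a single codebook.

```python
import math


def parse_model_name(name: str) -> dict:
    """Split e.g. 'WavTokenizer-small-600-24k-4096' into its numeric parts.

    Assumption: the fields are <size>-<hop length>-<sample rate>-<codebook size>.
    """
    _, size, hop, rate, codebook = name.split("-")
    return {
        "size": size,
        "hop_length": int(hop),
        "sample_rate": int(rate.rstrip("k")) * 1000,
        "codebook_size": int(codebook),
    }


def tokens_per_second(sample_rate: int, hop_length: int) -> float:
    """One token is emitted per hop, so the rate is samples/s divided by samples/token."""
    return sample_rate / hop_length


def bits_per_second(sample_rate: int, hop_length: int, codebook_size: int,
                    n_codebooks: int = 1) -> float:
    """Each token carries log2(codebook_size) bits per codebook (n_codebooks=1 is an assumption)."""
    return tokens_per_second(sample_rate, hop_length) * math.log2(codebook_size) * n_codebooks


for name in ("WavTokenizer-small-600-24k-4096", "WavTokenizer-small-320-24k-4096"):
    info = parse_model_name(name)
    rate = tokens_per_second(info["sample_rate"], info["hop_length"])
    bps = bits_per_second(info["sample_rate"], info["hop_length"], info["codebook_size"])
    print(f"{name}: {rate:g} tokens/s, {bps:g} bits/s")
```

Under these assumptions, a hop of 600 samples at 24 kHz gives 40 tokens per second and a hop of 320 gives 75, matching the Tokens/s column.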

Training

Step1: Prepare the training dataset

# Process the data into a form similar to ./data/demo.txt
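A filelist can be generated with a short script. This is a minimal sketch that assumes `./data/demo.txt` lists one audio file path per line, which this README does not spell out; check the demo file and adjust the line format if it differs. The function name `write_filelist` and the paths in the usage comment are illustrative.

```python
from pathlib import Path


def write_filelist(audio_dir, out_path, extensions=(".wav", ".flac")) -> int:
    """Write the absolute path of every audio file under audio_dir, one per line.

    Returns the number of files listed.
    """
    audio_dir = Path(audio_dir)
    # Sort so that repeated runs produce the same file order
    paths = sorted(
        p.resolve()
        for p in audio_dir.rglob("*")
        if p.is_file() and p.suffix.lower() in extensions
    )
    out_path = Path(out_path)
    out_path.parent.mkdir(parents=True, exist_ok=True)
    out_path.write_text("".join(f"{p}\n" for p in paths), encoding="utf-8")
    return len(paths)


# Example usage (hypothetical paths):
# n_files = write_filelist("./my_dataset", "./data/train.txt")
```

Writing absolute paths keeps the list valid regardless of the directory from which training is launched.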

Step2: Modify the configuration file

# ./configs/xxx.yaml

# Modify the values of parameters such as batch_size, filelist_path, save_dir, device
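Because these parameters may sit at different nesting levels of the YAML file, it can help to list where each one appears before editing. The following is a minimal sketch that searches a nested dict for given key names. The sample config is hypothetical and is not meant to reproduce the layout of `./configs/xxx.yaml`.

```python
from typing import Any, Iterator, Set, Tuple


def find_key_paths(node: Any, targets: Set[str],
                   prefix: Tuple = ()) -> Iterator[Tuple[str, Any]]:
    """Yield (dotted_path, value) for every key in `targets` found in a nested config."""
    if isinstance(node, dict):
        for key, value in node.items():
            path = prefix + (key,)
            if key in targets:
                yield ".".join(str(part) for part in path), value
            # Keep descending: the same key name may also appear deeper down
            yield from find_key_paths(value, targets, path)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from find_key_paths(item, targets, prefix + (index,))


# Hypothetical config, for illustration only; the real file may be laid out differently
example_config = {
    "trainer": {"logger": {"init_args": {"save_dir": "./result/train"}}},
    "data": {"train_params": {"filelist_path": "./data/train.txt", "batch_size": 8}},
}

for path, value in find_key_paths(example_config, {"batch_size", "filelist_path", "save_dir"}):
    print(f"{path} = {value}")
```

In practice the dict would come from loading the YAML file, for example with PyYAML's `yaml.safe_load`.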

Step3: Start the training process

Refer to the PyTorch Lightning documentation for details about customizing the training pipeline.

cd ./WavTokenizer

python train.py fit --config ./configs/xxx.yaml

Citation

If this code contributes to your research, please cite our work, Language-Codec and WavTokenizer:

@article{ji2024wavtokenizer,

title={WavTokenizer: an Efficient Acoustic Discrete Codec Tokenizer for Audio Language Modeling},

author={Ji, Shengpeng and Jiang, Ziyue and Cheng, Xize and Chen, Yifu and Fang, Minghui and Zuo, Jialong and Yang, Qian and Li, Ruiqi and Zhang, Ziang and Yang, Xiaoda and others},

journal={arXiv preprint arXiv:2408.16532},

year={2024}

}

@article{ji2024language,

title={Language-codec: Reducing the gaps between discrete codec representation and speech language models},

author={Ji, Shengpeng and Fang, Minghui and Jiang, Ziyue and Huang, Rongjie and Zuo, Jialong and Wang, Shulei and Zhao, Zhou},

journal={arXiv preprint arXiv:2402.12208},

year={2024}

}