---
language:
- en
license: apache-2.0
library_name: transformers
tags:
- mergekit
- merge
base_model:
- sometimesanotion/Qwen2.5-14B-Vimarckoso-v3
- sometimesanotion/Lamarck-14B-v0.3
- sometimesanotion/Qwenvergence-14B-v3-Prose
- Krystalan/DRT-o1-14B
- underwoods/medius-erebus-magnum-14b
- sometimesanotion/Abliterate-Qwenvergence
- huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2
metrics:
- accuracy
pipeline_tag: text-generation
---

---

**Update:** Lamarck has, for the moment, taken the #1 average score for 14 billion parameter models. Counting all the way up to 32 billion parameters, it's #7. This validates the complex merge techniques which captured the complementary strengths of other work in this community. Humor me, I'm giving our guy his meme shades!

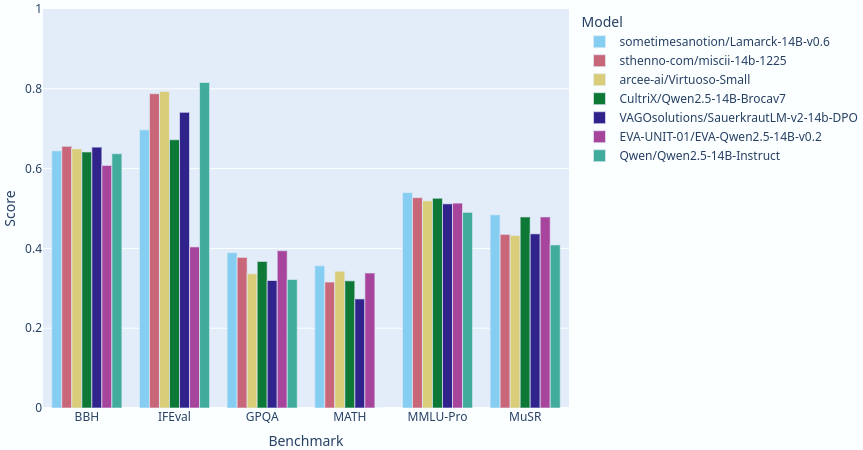

Lamarck 14B v0.6: A generalist merge focused on multi-step reasoning, prose, multi-language ability, and code. It is based on components that have punched above their weight in the 14 billion parameter class. Here you can see a comparison between Lamarck and other top-performing merges and finetunes:

Previous releases were based on a SLERP merge of model_stock+della branches focused on reasoning and prose. The prose branch got surprisingly good at reasoning, and the reasoning branch became a strong generalist in its own right. Some of you have already downloaded it as [sometimesanotion/Qwen2.5-14B-Vimarckoso-v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3).

Lamarck 0.6 hit a whole new level of multi-pronged merge strategies:

- **Extracted LoRA adapters from special-purpose merges**
- **Separate branches for breadcrumbs and DELLA merges**
- **Highly targeted weight/density gradients for every 2-4 layers**
- **Finalization through SLERP merges recombining the separate branches**
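
To make the "weight/density gradients" idea concrete: mergekit's documentation describes list-valued parameters as gradients whose values are interpolated across all layers. The sketch below imitates that with plain linear interpolation. It is an illustration under assumptions, not the recipe used for Lamarck: the function name `expand_gradient` is hypothetical, the anchor values are made up, and mergekit's exact interpolation may differ. The 48-layer count matches the layer ranges in the SLERP config on this card.

```python
def expand_gradient(anchors, num_layers):
    """Linearly interpolate a short list of anchor values across num_layers layers.

    Hypothetical helper for illustration only; it is not part of mergekit.
    """
    if num_layers < 1:
        raise ValueError("num_layers must be at least 1")
    if not anchors:
        raise ValueError("anchors must not be empty")
    if len(anchors) == 1 or num_layers == 1:
        return [float(anchors[0])] * num_layers

    values = []
    for layer in range(num_layers):
        # Position of this layer on the anchor axis, from 0 to len(anchors) - 1.
        pos = layer / (num_layers - 1) * (len(anchors) - 1)
        lo = min(int(pos), len(anchors) - 2)  # index of the anchor on the left
        frac = pos - lo                       # how far we are toward the next anchor
        values.append(anchors[lo] * (1.0 - frac) + anchors[lo + 1] * frac)
    return values


# Made-up anchors: dense early layers, sparser middle, denser again at the end.
density = expand_gradient([0.9, 0.6, 0.8], num_layers=48)
for layer in (0, 12, 24, 36, 47):
    print(f"layer {layer:2d}: density {density[layer]:.3f}")
```

Supplying more anchors gives finer control: 12 to 24 anchors over 48 layers means one anchor every 2-4 layers.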


This approach selectively merges the strongest aspects of its ancestors. Lamarck v0.6 is my most complex merge to date. The LoRA extractions alone pushed my hardware so hard that it kept the building warm in winter for days! By comparison, the SLERP merge below which finalized it was a simple step.

```yaml
name: Lamarck-14B-v0.6-rc4
merge_method: slerp
base_model: sometimesanotion/lamarck-14b-converge-della-linear
tokenizer_source: base
dtype: float32
out_dtype: bfloat16
parameters:
  int8_mask: true
  normalize: true
  rescale: false
  t:
    - value: 0.30
slices:
  - sources:
      - model: sometimesanotion/lamarck-14b-converge-della-linear
        layer_range: [ 0, 8 ]
      - model: sometimesanotion/lamarck-14b-converge-breadcrumbs
        layer_range: [ 0, 8 ]
  - sources:
      - model: sometimesanotion/lamarck-14b-converge-della-linear
        layer_range: [ 8, 16 ]
      - model: sometimesanotion/lamarck-14b-converge-breadcrumbs
        layer_range: [ 8, 16 ]
  - sources:
      - model: sometimesanotion/lamarck-14b-converge-della-linear
        layer_range: [ 16, 24 ]
      - model: sometimesanotion/lamarck-14b-converge-breadcrumbs
        layer_range: [ 16, 24 ]
  - sources:
      - model: sometimesanotion/lamarck-14b-converge-della-linear
        layer_range: [ 24, 32 ]
      - model: sometimesanotion/lamarck-14b-converge-breadcrumbs
        layer_range: [ 24, 32 ]
  - sources:
      - model: sometimesanotion/lamarck-14b-converge-della-linear
        layer_range: [ 32, 40 ]
      - model: sometimesanotion/lamarck-14b-converge-breadcrumbs
        layer_range: [ 32, 40 ]
  - sources:
      - model: sometimesanotion/lamarck-14b-converge-della-linear
        layer_range: [ 40, 48 ]
      - model: sometimesanotion/lamarck-14b-converge-breadcrumbs
        layer_range: [ 40, 48 ]
```
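
For anyone curious what the SLERP step does to a pair of weight tensors, here is a minimal pure-Python sketch of spherical linear interpolation on flat vectors. It is not mergekit's implementation: the function names, the toy vectors, and the 0.9995 near-parallel threshold are my own assumptions. It does follow the convention, as I understand mergekit's docs, that `t = 0` returns the base model and `t = 1` returns the other model, so `t: 0.30` keeps the result closer to the della-linear branch.

```python
import math


def lerp(t, v0, v1):
    """Plain linear interpolation, used as a fallback."""
    return [(1.0 - t) * a + t * b for a, b in zip(v0, v1)]


def slerp(t, v0, v1, dot_threshold=0.9995, eps=1e-8):
    """Spherical linear interpolation between two flat weight vectors.

    t = 0 returns v0 (the base branch), t = 1 returns v1 (the other branch).
    """
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    if norm0 < eps or norm1 < eps:
        # A zero vector has no direction, so fall back to linear interpolation.
        return lerp(t, v0, v1)

    # Cosine of the angle between the two vectors, clamped for acos.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    dot = max(-1.0, min(1.0, dot))
    if abs(dot) > dot_threshold:
        # Nearly parallel vectors: sin(theta) is tiny, so use lerp instead.
        return lerp(t, v0, v1)

    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    s0 = math.sin((1.0 - t) * theta) / sin_theta  # weight on the base branch
    s1 = math.sin(t * theta) / sin_theta          # weight on the other branch
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


# Toy stand-ins for one tensor from each branch (made-up numbers).
della_linear = [1.0, 0.0, 0.5]
breadcrumbs = [0.2, 0.9, 0.4]
print(slerp(0.30, della_linear, breadcrumbs))
```

In a real merge this is applied tensor by tensor. The `slices` in the config pair up the same layer ranges from both branches so that matching tensors are interpolated together.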


The strengths Lamarck has combined from its immediate ancestors are in turn derived from select finetunes and merges. Kudos to @arcee-ai, @CultriX, @sthenno-com, @Krystalan, @underwoods, @VAGOSolutions, and @rombodawg, whose models had the most influence. The card for this version's base model, [Vimarckoso v3](https://huggingface.co/sometimesanotion/Qwen2.5-14B-Vimarckoso-v3), shows this best.