status (string, 1 value) | repo_name (string, 31 values) | repo_url (string, 31 values) | issue_id (int64, 1 to 104k) | title (string, length 4 to 369) | body (string, length 0 to 254k, nullable) | issue_url (string, length 37 to 56) | pull_url (string, length 37 to 54) | before_fix_sha (string, length 40) | after_fix_sha (string, length 40) | report_datetime (unknown) | language (string, 5 values) | commit_datetime (unknown) | updated_file (string, length 4 to 188) | file_content (string, length 0 to 5.12M) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

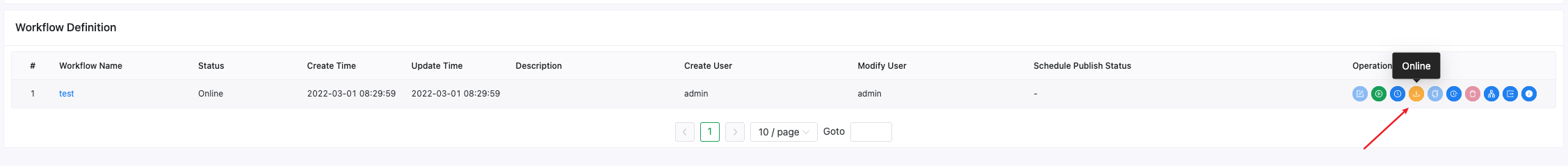

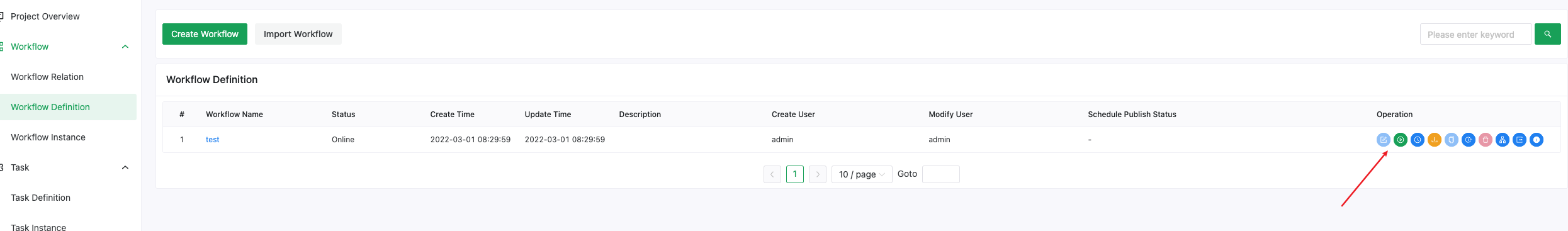

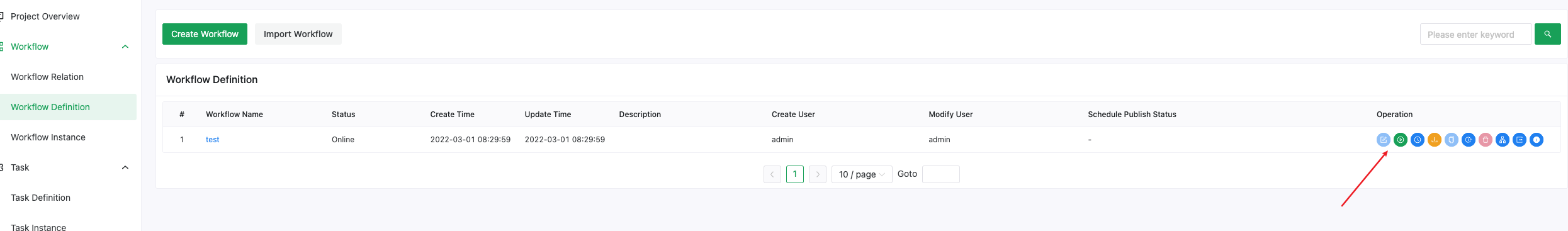

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,412 | [Bug] [UI] Workflow definition timing manage edit | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

The Environment Name field is not populated when I edit the timing (cron schedule) of a workflow definition, as follows:

### What you expected to happen

The Environment Name field shows the correct value.

### How to reproduce

Open `Process definition` -> `Cron Manage` and click the edit button.

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8412 | https://github.com/apache/dolphinscheduler/pull/8413 | 6334a8221a0dfa35d7fb69de227b5aedbe775a0f | d2f8d96fa05f55e884d2c0d40f9fd3f64016d9dd | "2022-02-17T06:42:38Z" | java | "2022-02-17T11:44:47Z" | dolphinscheduler-ui/src/js/conf/home/pages/projects/pages/definition/pages/list/_source/timing.vue | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

<template>

<div class="timing-process-model">

<div class="clearfix list">

<div class="text">

{{$t('Start and stop time')}}

</div>

<div class="cont">

<el-date-picker

style="width: 360px"

v-model="scheduleTime"

size="small"

@change="_datepicker"

type="datetimerange"

range-separator="-"

:start-placeholder="$t('startDate')"

:end-placeholder="$t('endDate')"

value-format="yyyy-MM-dd HH:mm:ss">

</el-date-picker>

</div>

</div>

<div class="clearfix list">

<el-button type="primary" style="margin-left:20px" size="small" round :loading="spinnerLoading" @click="preview()">{{$t('Execute time')}}</el-button>

<div class="text">

{{$t('Timing')}}

</div>

<div class="cont">

<template>

<el-popover

placement="bottom-start"

trigger="click">

<template slot="reference">

<el-input

style="width: 360px;"

type="text"

size="small"

readonly

:value="crontab">

</el-input>

</template>

<div class="crontab-box">

<v-crontab v-model="crontab" :locale="i18n"></v-crontab>

</div>

</el-popover>

</template>

</div>

</div>

<div class="clearfix list">

<div class="text">

{{$t('Timezone')}}

</div>

<div class="cont">

<el-select v-model=timezoneId filterable placeholder="Timezone">

<el-option

v-for="item in availableTimezoneIDList"

:key="item"

:label="item"

:value="item">

</el-option>

</el-select>

</div>

</div>

<div class="clearfix list">

<div style = "padding-left: 150px;">{{$t('Next five execution times')}}</div>

<ul style = "padding-left: 150px;">

<li v-for="(time,i) in previewTimes" :key='i'>{{time}}</li>

</ul>

</div>

<div class="clearfix list">

<div class="text">

{{$t('Failure Strategy')}}

</div>

<div class="cont">

<el-radio-group v-model="failureStrategy" style="margin-top: 7px;" size="small">

<el-radio :label="'CONTINUE'">{{$t('Continue')}}</el-radio>

<el-radio :label="'END'">{{$t('End')}}</el-radio>

</el-radio-group>

</div>

</div>

<div class="clearfix list">

<div class="text">

{{$t('Notification strategy')}}

</div>

<div class="cont">

<el-select

style="width: 200px;"

size="small"

v-model="warningType">

<el-option

v-for="city in warningTypeList"

:key="city.id"

:value="city.id"

:label="city.code">

</el-option>

</el-select>

</div>

</div>

<div class="clearfix list">

<div class="text">

{{$t('Process priority')}}

</div>

<div class="cont">

<m-priority v-model="processInstancePriority"></m-priority>

</div>

</div>

<div class="clearfix list">

<div class="text">

{{$t('Worker group')}}

</div>

<div class="cont">

<m-worker-groups v-model="workerGroup"></m-worker-groups>

</div>

</div>

<div class="clearfix list">

<div class="text">

{{$t('Environment Name')}}

</div>

<div class="cont">

<m-related-environment v-model="environmentCode" :workerGroup="workerGroup" v-on:environmentCodeEvent="_onUpdateEnvironmentCode"></m-related-environment>

</div>

</div>

<div class="clearfix list">

<div class="text">

{{$t('Alarm group')}}

</div>

<div class="cont">

<el-select

style="width: 200px;"

clearable

size="small"

:disabled="!notifyGroupList.length"

v-model="warningGroupId">

<el-input slot="trigger" readonly slot-scope="{ selectedModel }" :value="selectedModel ? selectedModel.label : ''" style="width: 200px;" @on-click-icon.stop="warningGroupId = {}">

<em slot="suffix" class="el-icon-error" style="font-size: 15px;cursor: pointer;" v-show="warningGroupId.id"></em>

<em slot="suffix" class="el-icon-bottom" style="font-size: 12px;" v-show="!warningGroupId.id"></em>

</el-input>

<el-option

v-for="city in notifyGroupList"

:key="city.id"

:value="city.id"

:label="city.code">

</el-option>

</el-select>

</div>

</div>

<div class="submit">

<el-button type="text" size="small" @click="close()"> {{$t('Cancel')}} </el-button>

<el-button type="primary" size="small" round :loading="spinnerLoading" @click="ok()">{{spinnerLoading ? $t('Loading...') : (timingData.item.crontab ? $t('Edit') : $t('Create'))}} </el-button>

</div>

</div>

</template>

<script>

import moment from 'moment-timezone'

import i18n from '@/module/i18n'

import store from '@/conf/home/store'

import { warningTypeList } from './util'

import { vCrontab } from '@/module/components/crontab/index'

import { formatDate } from '@/module/filter/filter'

import mPriority from '@/module/components/priority/priority'

import mWorkerGroups from '@/conf/home/pages/dag/_source/formModel/_source/workerGroups'

import mRelatedEnvironment from '@/conf/home/pages/dag/_source/formModel/_source/relatedEnvironment'

export default {

name: 'timing-process',

data () {

return {

store,

processDefinitionId: 0,

failureStrategy: 'CONTINUE',

warningTypeList: warningTypeList,

availableTimezoneIDList: moment.tz.names(),

warningType: 'NONE',

notifyGroupList: [],

warningGroupId: '',

spinnerLoading: false,

scheduleTime: '',

crontab: '0 0 * * * ? *',

timezoneId: moment.tz.guess(),

cronPopover: false,

i18n: i18n.globalScope.LOCALE,

processInstancePriority: 'MEDIUM',

workerGroup: '',

environmentCode: '',

previewTimes: []

}

},

props: {

timingData: Object

},

methods: {

_datepicker (val) {

this.scheduleTime = val

},

_verification () {

if (!this.scheduleTime) {

this.$message.warning(`${i18n.$t('Please select time')}`)

return false

}

if (this.scheduleTime[0] === this.scheduleTime[1]) {

this.$message.warning(`${i18n.$t('The start time must not be the same as the end')}`)

return false

}

if (!this.crontab) {

this.$message.warning(`${i18n.$t('Please enter crontab')}`)

return false

}

return true

},

_onUpdateEnvironmentCode (o) {

this.environmentCode = o

},

_timing () {

if (this._verification()) {

let api = ''

let searchParams = {

schedule: JSON.stringify({

startTime: this.scheduleTime[0],

endTime: this.scheduleTime[1],

crontab: this.crontab,

timezoneId: this.timezoneId

}),

failureStrategy: this.failureStrategy,

warningType: this.warningType,

processInstancePriority: this.processInstancePriority,

warningGroupId: this.warningGroupId === '' ? 0 : this.warningGroupId,

workerGroup: this.workerGroup,

environmentCode: this.environmentCode

}

let msg = ''

// edit

if (this.timingData.item.crontab) {

api = 'dag/updateSchedule'

searchParams.id = this.timingData.item.id

msg = `${i18n.$t('Edit')}${i18n.$t('Success')},${i18n.$t('Please go online')}`

} else {

api = 'dag/createSchedule'

searchParams.processDefinitionCode = this.timingData.item.code

msg = `${i18n.$t('Create')}${i18n.$t('Success')}`

}

this.store.dispatch(api, searchParams).then(res => {

this.$message.success(msg)

this.$emit('onUpdateTiming')

}).catch(e => {

this.$message.error(e.msg || '')

})

}

},

_preview () {

if (this._verification()) {

let api = 'dag/previewSchedule'

let searchParams = {

schedule: JSON.stringify({

startTime: this.scheduleTime[0],

endTime: this.scheduleTime[1],

crontab: this.crontab

})

}

this.store.dispatch(api, searchParams).then(res => {

if (res.length) {

this.previewTimes = res

} else {

this.$message.warning(`${i18n.$t('There is no data for this period of time')}`)

}

})

}

},

_getNotifyGroupList () {

return new Promise((resolve, reject) => {

this.store.dispatch('dag/getNotifyGroupList').then(res => {

this.notifyGroupList = res

if (this.notifyGroupList.length) {

resolve()

} else {

reject(new Error(0))

}

})

})

},

ok () {

this._timing()

},

close () {

this.$emit('closeTiming')

},

preview () {

this._preview()

}

},

watch: {

},

created () {

if (this.timingData.item.workerGroup === undefined) {

let stateWorkerGroupsList = this.store.state.security.workerGroupsListAll || []

if (stateWorkerGroupsList.length) {

this.workerGroup = stateWorkerGroupsList[0].id

} else {

this.store.dispatch('security/getWorkerGroupsAll').then(res => {

this.$nextTick(() => {

this.workerGroup = res[0].id

})

})

}

} else {

this.workerGroup = this.timingData.item.workerGroup

}

if (this.timingData.item.crontab !== null) {

this.crontab = this.timingData.item.crontab

}

if (this.timingData.type === 'timing') {

let date = new Date()

let year = date.getFullYear()

let month = date.getMonth() + 1

let day = date.getDate()

if (month < 10) {

month = '0' + month

}

if (day < 10) {

day = '0' + day

}

let startDate = year + '-' + month + '-' + day + ' ' + '00:00:00'

let endDate = (year + 100) + '-' + month + '-' + day + ' ' + '00:00:00'

let times = []

times[0] = startDate

times[1] = endDate

this.crontab = '0 0 * * * ? *'

this.scheduleTime = times

}

},

mounted () {

let item = this.timingData.item

// Determine whether to echo

if (this.timingData.item.crontab) {

this.crontab = item.crontab

this.scheduleTime = [formatDate(item.startTime), formatDate(item.endTime)]

this.timezoneId = item.timezoneId === null ? moment.tz.guess() : item.timezoneId

this.failureStrategy = item.failureStrategy

this.warningType = item.warningType

this.processInstancePriority = item.processInstancePriority

this._getNotifyGroupList().then(() => {

this.$nextTick(() => {

// let list = _.filter(this.notifyGroupList, v => v.id === item.warningGroupId)

this.warningGroupId = item.warningGroupId === 0 ? '' : item.warningGroupId

})

}).catch(() => { this.warningGroupId = '' })

} else {

this._getNotifyGroupList().then(() => {

this.$nextTick(() => {

this.warningGroupId = ''

})

}).catch(() => { this.warningGroupId = '' })

}

},

components: { vCrontab, mPriority, mWorkerGroups, mRelatedEnvironment }

}

</script>

<style lang="scss" rel="stylesheet/scss">

.timing-process-model {

width: 860px;

min-height: 300px;

background: #fff;

border-radius: 3px;

margin-top: 0;

.crontab-box {

margin: -6px;

.v-crontab {

}

}

.form-model {

padding-top: 0;

}

.title-box {

margin-bottom: 18px;

span {

padding-left: 30px;

font-size: 16px;

padding-top: 29px;

display: block;

}

}

.list {

margin-bottom: 14px;

>.text {

width: 140px;

float: left;

text-align: right;

line-height: 32px;

padding-right: 8px;

}

.cont {

width: 350px;

float: left;

}

}

.submit {

text-align: right;

padding-right: 30px;

padding-top: 10px;

padding-bottom: 30px;

}

}

.v-crontab-form-model {

.list-box {

padding: 0;

}

}

.x-date-packer-panel .x-date-packer-day .lattice label.bg-hover {

background: #00BFFF!important;

margin-top: -4px;

}

.x-date-packer-panel .x-date-packer-day .lattice em:hover {

background: #0098e1!important;

}

</style>

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,296 | [Bug] [API] Update process definition error | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

```

[ERROR] 2022-02-07 17:44:03.394 org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl:[332] - error:

java.lang.IllegalStateException: Duplicate key TaskDefinition{id=0, code=3909232388448, name='依赖检查', version=1, description='', projectCode=0, userId=0, taskType=DEPENDENT, taskParams='{"dependence":{"relation":"AND","dependTaskList":[{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232229350,"depTaskCode":0,"cycle":"day","dateValue":"today"}]},{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232232293,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232229346,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230758,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230759,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230765,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230761,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230760,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230887,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230506,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230517,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230259,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232231796,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230263,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230628,"depTaskCode":0,"cycle":"day","dateValue":"today"}]}]},"conditionResult":{"successNode":[],"failedNode":[]},"waitStartTimeout":{"strategy":"FAILED","interval":null,"checkInterval":null,"enable":false},"switchResult":{}}', taskParamList=null, taskParamMap=null, flag=YES, taskPriority=MEDIUM, userName='null', projectName='null', workerGroup='offline-cluster', failRetryTimes=720, environmentCode='-1', failRetryInterval=1, timeoutFlag=CLOSE, timeoutNotifyStrategy=null, timeout=0, delayTime=0, resourceIds='null', createTime=null, updateTime=null}

at java.util.stream.Collectors.lambda$throwingMerger$0(Collectors.java:133)

at java.util.HashMap.merge(HashMap.java:1255)

at java.util.stream.Collectors.lambda$toMap$58(Collectors.java:1320)

at java.util.stream.ReduceOps$3ReducingSink.accept(ReduceOps.java:169)

at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)

at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)

at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)

at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708)

at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566)

at org.apache.dolphinscheduler.service.process.ProcessService.transformTask(ProcessService.java:2517)

at org.apache.dolphinscheduler.service.process.ProcessService$$FastClassBySpringCGLIB$$ed138739.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:689)

at org.apache.dolphinscheduler.service.process.ProcessService$$EnhancerBySpringCGLIB$$a43f595c.transformTask(<generated>)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.checkTaskRelationList(ProcessDefinitionServiceImpl.java:305)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.updateProcessDefinition(ProcessDefinitionServiceImpl.java:528)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$FastClassBySpringCGLIB$$e8e34ed9.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:783)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.transaction.interceptor.TransactionInterceptor$1.proceedWithInvocation(TransactionInterceptor.java:123)

at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:388)

at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:119)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:698)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$EnhancerBySpringCGLIB$$1978743c.updateProcessDefinition(<generated>)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController.updateProcessDefinition(ProcessDefinitionController.java:249)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController$$FastClassBySpringCGLIB$$dc9bf5db.invoke(<generated>)

```

### What you expected to happen

The process definition is updated successfully.

### How to reproduce

update successfully.

### Anything else

_No response_

### Version

2.0.3

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8296 | https://github.com/apache/dolphinscheduler/pull/8408 | af39ae3ce9e055da5a9485ccc5f5678e8120ad17 | f6e2a2cf2387cf4f3ff1d794cea8158bac989fb7 | "2022-02-07T09:57:26Z" | java | "2022-02-18T13:05:43Z" | dolphinscheduler-dao/src/main/resources/sql/upgrade/2.0.4_schema/mysql/dolphinscheduler_ddl.sql | |
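The stack trace in this report ends in `Collectors.toMap` (through the `Collectors.lambda$throwingMerger$0` frame), which throws `IllegalStateException` as soon as two stream elements produce the same key. The `throwingMerger` frame suggests a Java 8 runtime, where the message prints the first colliding *value* rather than the key; that would explain why the log contains a whole `TaskDefinition{...}`. The sketch below reproduces this behaviour under stated assumptions: `Task` is a hypothetical stand-in for `TaskDefinition`, the map is assumed to be keyed by task code, and the four-argument `toMap` overload with a merge function is shown as one generic way to tolerate duplicates. It is not taken from the linked pull request.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

final class DuplicateKeyDemo {

    // Hypothetical stand-in for TaskDefinition: only the fields needed to
    // show the key collision are modelled.
    static final class Task {
        final long code;
        final int version;

        Task(long code, int version) {
            this.code = code;
            this.version = version;
        }

        @Override
        public String toString() {
            return "Task{code=" + code + ", version=" + version + "}";
        }
    }

    // Two-argument toMap: throws IllegalStateException on the first duplicate key.
    static Map<Long, Task> indexStrict(List<Task> tasks) {
        return tasks.stream().collect(Collectors.toMap(t -> t.code, Function.identity()));
    }

    // Four-argument toMap: the merge function keeps the first Task seen for a
    // code, and LinkedHashMap preserves the stream's encounter order.
    static Map<Long, Task> indexKeepFirst(List<Task> tasks) {
        return tasks.stream().collect(Collectors.toMap(
                t -> t.code,
                Function.identity(),
                (first, second) -> first,
                LinkedHashMap::new));
    }

    public static void main(String[] args) {
        // Made-up input: two entries reuse the code seen in the log above.
        List<Task> tasks = Arrays.asList(
                new Task(3909232388448L, 1),
                new Task(3909232388448L, 2),
                new Task(42L, 1));
        try {
            indexStrict(tasks);
        } catch (IllegalStateException e) {
            // Java 8 reports only the colliding value; Java 9+ also names the key.
            System.out.println("strict: " + e.getMessage());
        }
        System.out.println("keepFirst: " + indexKeepFirst(tasks));
    }
}
```

Keeping the first entry silently hides the duplicate. If two definitions sharing one code indicate inconsistent data upstream, failing fast (as the two-argument overload does) or logging the collision may be the safer choice.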

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,296 | [Bug] [API] Update process definition error | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

```

[ERROR] 2022-02-07 17:44:03.394 org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl:[332] - error:

java.lang.IllegalStateException: Duplicate key TaskDefinition{id=0, code=3909232388448, name='依赖检查', version=1, description='', projectCode=0, userId=0, taskType=DEPENDENT, taskParams='{"dependence":{"relation":"AND","dependTaskList":[{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232229350,"depTaskCode":0,"cycle":"day","dateValue":"today"}]},{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232232293,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232229346,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230758,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230759,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230765,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230761,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230760,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230887,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230506,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230517,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230259,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232231796,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230263,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230628,"depTaskCode":0,"cycle":"day","dateValue":"today"}]}]},"conditionResult":{"successNode":[],"failedNode":[]},"waitStartTimeout":{"strategy":"FAILED","interval":null,"checkInterval":null,"enable":false},"switchResult":{}}', taskParamList=null, taskParamMap=null, flag=YES, taskPriority=MEDIUM, userName='null', projectName='null', workerGroup='offline-cluster', failRetryTimes=720, environmentCode='-1', failRetryInterval=1, timeoutFlag=CLOSE, timeoutNotifyStrategy=null, timeout=0, delayTime=0, resourceIds='null', createTime=null, updateTime=null}

at java.util.stream.Collectors.lambda$throwingMerger$0(Collectors.java:133)

at java.util.HashMap.merge(HashMap.java:1255)

at java.util.stream.Collectors.lambda$toMap$58(Collectors.java:1320)

at java.util.stream.ReduceOps$3ReducingSink.accept(ReduceOps.java:169)

at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)

at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)

at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)

at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708)

at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566)

at org.apache.dolphinscheduler.service.process.ProcessService.transformTask(ProcessService.java:2517)

at org.apache.dolphinscheduler.service.process.ProcessService$$FastClassBySpringCGLIB$$ed138739.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:689)

at org.apache.dolphinscheduler.service.process.ProcessService$$EnhancerBySpringCGLIB$$a43f595c.transformTask(<generated>)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.checkTaskRelationList(ProcessDefinitionServiceImpl.java:305)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.updateProcessDefinition(ProcessDefinitionServiceImpl.java:528)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$FastClassBySpringCGLIB$$e8e34ed9.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:783)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.transaction.interceptor.TransactionInterceptor$1.proceedWithInvocation(TransactionInterceptor.java:123)

at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:388)

at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:119)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:698)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$EnhancerBySpringCGLIB$$1978743c.updateProcessDefinition(<generated>)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController.updateProcessDefinition(ProcessDefinitionController.java:249)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController$$FastClassBySpringCGLIB$$dc9bf5db.invoke(<generated>)

```

### What you expected to happen

The process definition is updated successfully.

### How to reproduce

update successfully.

### Anything else

_No response_

### Version

2.0.3

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8296 | https://github.com/apache/dolphinscheduler/pull/8408 | af39ae3ce9e055da5a9485ccc5f5678e8120ad17 | f6e2a2cf2387cf4f3ff1d794cea8158bac989fb7 | "2022-02-07T09:57:26Z" | java | "2022-02-18T13:05:43Z" | dolphinscheduler-dao/src/main/resources/sql/upgrade/2.0.4_schema/mysql/dolphinscheduler_dml.sql | |

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,296 | [Bug] [API] Update process definition error | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

```

[ERROR] 2022-02-07 17:44:03.394 org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl:[332] - error:

java.lang.IllegalStateException: Duplicate key TaskDefinition{id=0, code=3909232388448, name='依赖检查', version=1, description='', projectCode=0, userId=0, taskType=DEPENDENT, taskParams='{"dependence":{"relation":"AND","dependTaskList":[{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232229350,"depTaskCode":0,"cycle":"day","dateValue":"today"}]},{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232232293,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232229346,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230758,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230759,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230765,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230761,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230760,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230887,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230506,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230517,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230259,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232231796,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230263,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230628,"depTaskCode":0,"cycle":"day","dateValue":"today"}]}]},"conditionResult":{"successNode":[],"failedNode":[]},"waitStartTimeout":{"strategy":"FAILED","interval":null,"checkInterval":null,"enable":false},"switchResult":{}}', taskParamList=null, taskParamMap=null, flag=YES, taskPriority=MEDIUM, userName='null', projectName='null', workerGroup='offline-cluster', failRetryTimes=720, environmentCode='-1', failRetryInterval=1, timeoutFlag=CLOSE, timeoutNotifyStrategy=null, timeout=0, delayTime=0, resourceIds='null', createTime=null, updateTime=null}

at java.util.stream.Collectors.lambda$throwingMerger$0(Collectors.java:133)

at java.util.HashMap.merge(HashMap.java:1255)

at java.util.stream.Collectors.lambda$toMap$58(Collectors.java:1320)

at java.util.stream.ReduceOps$3ReducingSink.accept(ReduceOps.java:169)

at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)

at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)

at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)

at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708)

at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566)

at org.apache.dolphinscheduler.service.process.ProcessService.transformTask(ProcessService.java:2517)

at org.apache.dolphinscheduler.service.process.ProcessService$$FastClassBySpringCGLIB$$ed138739.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:689)

at org.apache.dolphinscheduler.service.process.ProcessService$$EnhancerBySpringCGLIB$$a43f595c.transformTask(<generated>)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.checkTaskRelationList(ProcessDefinitionServiceImpl.java:305)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.updateProcessDefinition(ProcessDefinitionServiceImpl.java:528)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$FastClassBySpringCGLIB$$e8e34ed9.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:783)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.transaction.interceptor.TransactionInterceptor$1.proceedWithInvocation(TransactionInterceptor.java:123)

at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:388)

at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:119)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:698)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$EnhancerBySpringCGLIB$$1978743c.updateProcessDefinition(<generated>)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController.updateProcessDefinition(ProcessDefinitionController.java:249)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController$$FastClassBySpringCGLIB$$dc9bf5db.invoke(<generated>)

```
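The `IllegalStateException: Duplicate key` above is raised by `Collectors.toMap` inside `ProcessService.transformTask`. The two-argument `toMap` throws as soon as two stream elements map to the same key. The sketch below reproduces that behavior using a hypothetical `Task` class keyed by its code. It is not DolphinScheduler's `TaskDefinition` and not the project's actual fix. It also shows how a three-argument `toMap` with a merge function avoids the exception.

On Java 8, which the `throwingMerger` frame in the trace suggests, the exception message prints the existing value rather than the key. That is why the log shows a whole `TaskDefinition{...}`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

public class DuplicateKeyDemo {

    // Hypothetical stand-in for a task definition; only the code is used as the map key.
    static final class Task {
        final long code;
        final String name;

        Task(long code, String name) {
            this.code = code;
            this.name = name;
        }
    }

    // Two-argument toMap: throws IllegalStateException on the first repeated key.
    static Map<Long, Task> strictIndex(List<Task> tasks) {
        return tasks.stream().collect(Collectors.toMap(t -> t.code, Function.identity()));
    }

    // Three-argument toMap: the merge function resolves collisions (here the first value wins).
    static Map<Long, Task> lenientIndex(List<Task> tasks) {
        return tasks.stream()
                .collect(Collectors.toMap(t -> t.code, Function.identity(), (first, second) -> first));
    }

    public static void main(String[] args) {
        List<Task> tasks = Arrays.asList(
                new Task(3909232388448L, "dependent-check"),
                new Task(3909232388448L, "dependent-check-copy"));
        try {
            strictIndex(tasks);
        } catch (IllegalStateException e) {
            System.out.println("strictIndex failed: " + e.getMessage());
        }
        System.out.println("lenientIndex size: " + lenientIndex(tasks).size());
    }
}
```

Silently keeping the first value is only appropriate if the duplicates are truly identical. Finding out why the same task code appears twice may be the better fix.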

### What you expected to happen

The process definition is updated successfully.

### How to reproduce

Update the process definition.

### Anything else

_No response_

### Version

2.0.3

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8296 | https://github.com/apache/dolphinscheduler/pull/8408 | af39ae3ce9e055da5a9485ccc5f5678e8120ad17 | f6e2a2cf2387cf4f3ff1d794cea8158bac989fb7 | "2022-02-07T09:57:26Z" | java | "2022-02-18T13:05:43Z" | dolphinscheduler-dao/src/main/resources/sql/upgrade/2.0.4_schema/postgresql/dolphinscheduler_ddl.sql | |

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,296 | [Bug] [API] Update process definition error | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

```

[ERROR] 2022-02-07 17:44:03.394 org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl:[332] - error:

java.lang.IllegalStateException: Duplicate key TaskDefinition{id=0, code=3909232388448, name='依赖检查', version=1, description='', projectCode=0, userId=0, taskType=DEPENDENT, taskParams='{"dependence":{"relation":"AND","dependTaskList":[{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232229350,"depTaskCode":0,"cycle":"day","dateValue":"today"}]},{"relation":"AND","dependItemList":[{"projectCode":3909232228064,"definitionCode":3909232232293,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232229346,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230758,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230759,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230765,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230761,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230760,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230887,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230506,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230517,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230259,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232231796,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230263,"depTaskCode":0,"cycle":"day","dateValue":"today"},{"projectCode":3909232228064,"definitionCode":3909232230628,"depTaskCode":0,"cycle":"day","dateValue":"today"}]}]},"conditionResult":{"su
ccessNode":[],"failedNode":[]},"waitStartTimeout":{"strategy":"FAILED","interval":null,"checkInterval":null,"enable":false},"switchResult":{}}', taskParamList=null, taskParamMap=null, flag=YES, taskPriority=MEDIUM, userName='null', projectName='null', workerGroup='offline-cluster', failRetryTimes=720, environmentCode='-1', failRetryInterval=1, timeoutFlag=CLOSE, timeoutNotifyStrategy=null, timeout=0, delayTime=0, resourceIds='null', createTime=null, updateTime=null}

at java.util.stream.Collectors.lambda$throwingMerger$0(Collectors.java:133)

at java.util.HashMap.merge(HashMap.java:1255)

at java.util.stream.Collectors.lambda$toMap$58(Collectors.java:1320)

at java.util.stream.ReduceOps$3ReducingSink.accept(ReduceOps.java:169)

at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384)

at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482)

at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472)

at java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:708)

at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

at java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:566)

at org.apache.dolphinscheduler.service.process.ProcessService.transformTask(ProcessService.java:2517)

at org.apache.dolphinscheduler.service.process.ProcessService$$FastClassBySpringCGLIB$$ed138739.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:689)

at org.apache.dolphinscheduler.service.process.ProcessService$$EnhancerBySpringCGLIB$$a43f595c.transformTask(<generated>)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.checkTaskRelationList(ProcessDefinitionServiceImpl.java:305)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl.updateProcessDefinition(ProcessDefinitionServiceImpl.java:528)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$FastClassBySpringCGLIB$$e8e34ed9.invoke(<generated>)

at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.invokeJoinpoint(CglibAopProxy.java:783)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.transaction.interceptor.TransactionInterceptor$1.proceedWithInvocation(TransactionInterceptor.java:123)

at org.springframework.transaction.interceptor.TransactionAspectSupport.invokeWithinTransaction(TransactionAspectSupport.java:388)

at org.springframework.transaction.interceptor.TransactionInterceptor.invoke(TransactionInterceptor.java:119)

at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186)

at org.springframework.aop.framework.CglibAopProxy$CglibMethodInvocation.proceed(CglibAopProxy.java:753)

at org.springframework.aop.framework.CglibAopProxy$DynamicAdvisedInterceptor.intercept(CglibAopProxy.java:698)

at org.apache.dolphinscheduler.api.service.impl.ProcessDefinitionServiceImpl$$EnhancerBySpringCGLIB$$1978743c.updateProcessDefinition(<generated>)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController.updateProcessDefinition(ProcessDefinitionController.java:249)

at org.apache.dolphinscheduler.api.controller.ProcessDefinitionController$$FastClassBySpringCGLIB$$dc9bf5db.invoke(<generated>)

```

### What you expected to happen

The process definition is updated successfully.

### How to reproduce

Update the process definition.

### Anything else

_No response_

### Version

2.0.3

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8296 | https://github.com/apache/dolphinscheduler/pull/8408 | af39ae3ce9e055da5a9485ccc5f5678e8120ad17 | f6e2a2cf2387cf4f3ff1d794cea8158bac989fb7 | "2022-02-07T09:57:26Z" | java | "2022-02-18T13:05:43Z" | dolphinscheduler-dao/src/main/resources/sql/upgrade/2.0.4_schema/postgresql/dolphinscheduler_dml.sql | |

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,432 | [Bug] [Standalone] table t_ds_worker_group not found in standalone mode | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

The table `t_ds_worker_group` is not found in standalone mode.

### What you expected to happen

The standalone server runs successfully.

### How to reproduce

Run the standalone server.

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8432 | https://github.com/apache/dolphinscheduler/pull/8433 | 668b36c73187279349ee91e128791926b1bbc86b | 8200a3f15ab5c7de0ea8e4d356ce968c6380e42a | "2022-02-18T11:10:48Z" | java | "2022-02-18T13:46:52Z" | dolphinscheduler-master/src/main/java/org/apache/dolphinscheduler/server/master/registry/ServerNodeManager.java | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.server.master.registry;

import static org.apache.dolphinscheduler.common.Constants.REGISTRY_DOLPHINSCHEDULER_MASTERS;

import static org.apache.dolphinscheduler.common.Constants.REGISTRY_DOLPHINSCHEDULER_WORKERS;

import org.apache.dolphinscheduler.common.Constants;

import org.apache.dolphinscheduler.common.enums.NodeType;

import org.apache.dolphinscheduler.common.model.Server;

import org.apache.dolphinscheduler.common.utils.NetUtils;

import org.apache.dolphinscheduler.dao.AlertDao;

import org.apache.dolphinscheduler.dao.entity.WorkerGroup;

import org.apache.dolphinscheduler.dao.mapper.WorkerGroupMapper;

import org.apache.dolphinscheduler.registry.api.Event;

import org.apache.dolphinscheduler.registry.api.Event.Type;

import org.apache.dolphinscheduler.registry.api.SubscribeListener;

import org.apache.dolphinscheduler.remote.utils.NamedThreadFactory;

import org.apache.dolphinscheduler.service.queue.MasterPriorityQueue;

import org.apache.dolphinscheduler.service.registry.RegistryClient;

import org.apache.commons.collections.CollectionUtils;

import org.apache.commons.lang.StringUtils;

import java.util.Collection;

import java.util.Collections;

import java.util.HashMap;

import java.util.HashSet;

import java.util.List;

import java.util.Map;

import java.util.Set;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

import javax.annotation.PreDestroy;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.InitializingBean;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

/**

* server node manager

*/

@Service

public class ServerNodeManager implements InitializingBean {

private final Logger logger = LoggerFactory.getLogger(ServerNodeManager.class);

/**

* master lock

*/

private final Lock masterLock = new ReentrantLock();

/**

* worker group lock

*/

private final Lock workerGroupLock = new ReentrantLock();

/**

* worker node info lock

*/

private final Lock workerNodeInfoLock = new ReentrantLock();

/**

* worker group nodes

*/

private final ConcurrentHashMap<String, Set<String>> workerGroupNodes = new ConcurrentHashMap<>();

/**

* master nodes

*/

private final Set<String> masterNodes = new HashSet<>();

/**

* worker node info

*/

private final Map<String, String> workerNodeInfo = new HashMap<>();

/**

* executor service

*/

private ScheduledExecutorService executorService;

@Autowired

private RegistryClient registryClient;

/**

* eg : /node/worker/group/127.0.0.1:xxx

*/

private static final int WORKER_LISTENER_CHECK_LENGTH = 5;

/**

* worker group mapper

*/

@Autowired

private WorkerGroupMapper workerGroupMapper;

private final MasterPriorityQueue masterPriorityQueue = new MasterPriorityQueue();

/**

* alert dao

*/

@Autowired

private AlertDao alertDao;

private static volatile int MASTER_SLOT = 0;

private static volatile int MASTER_SIZE = 0;

public static int getSlot() {

return MASTER_SLOT;

}

public static int getMasterSize() {

return MASTER_SIZE;

}

/**

* init listener

*

* @throws Exception if error throws Exception

*/

@Override

public void afterPropertiesSet() throws Exception {

/**

* load nodes from zookeeper

*/

load();

/**

* init executor service

*/

executorService = Executors.newSingleThreadScheduledExecutor(new NamedThreadFactory("ServerNodeManagerExecutor"));

executorService.scheduleWithFixedDelay(new WorkerNodeInfoAndGroupDbSyncTask(), 0, 10, TimeUnit.SECONDS);

/*

* init MasterNodeListener listener

*/

registryClient.subscribe(REGISTRY_DOLPHINSCHEDULER_MASTERS, new MasterDataListener());

/*

* init WorkerNodeListener listener

*/

registryClient.subscribe(REGISTRY_DOLPHINSCHEDULER_WORKERS, new WorkerDataListener());

}

/**

* load nodes from zookeeper

*/

public void load() {

/*

* master nodes from zookeeper

*/

updateMasterNodes();

/*

* worker group nodes from zookeeper

*/

Collection<String> workerGroups = registryClient.getWorkerGroupDirectly();

for (String workerGroup : workerGroups) {

syncWorkerGroupNodes(workerGroup, registryClient.getWorkerGroupNodesDirectly(workerGroup));

}

}

/**

* worker node info and worker group db sync task

*/

class WorkerNodeInfoAndGroupDbSyncTask implements Runnable {

@Override

public void run() {

// sync worker node info

Map<String, String> newWorkerNodeInfo = registryClient.getServerMaps(NodeType.WORKER, true);

syncAllWorkerNodeInfo(newWorkerNodeInfo);

// sync worker group nodes from database

List<WorkerGroup> workerGroupList = workerGroupMapper.queryAllWorkerGroup();

if (CollectionUtils.isNotEmpty(workerGroupList)) {

for (WorkerGroup wg : workerGroupList) {

String workerGroup = wg.getName();

Set<String> nodes = new HashSet<>();

String[] addrs = wg.getAddrList().split(Constants.COMMA);

for (String addr : addrs) {

if (newWorkerNodeInfo.containsKey(addr)) {

nodes.add(addr);

}

}

if (!nodes.isEmpty()) {

syncWorkerGroupNodes(workerGroup, nodes);

}

}

}

}

}

/**

* worker group node listener

*/

class WorkerDataListener implements SubscribeListener {

@Override

public void notify(Event event) {

final String path = event.path();

final Type type = event.type();

final String data = event.data();

if (registryClient.isWorkerPath(path)) {

try {

if (type == Type.ADD) {

logger.info("worker group node : {} added.", path);

String group = parseGroup(path);

Collection<String> currentNodes = registryClient.getWorkerGroupNodesDirectly(group);

logger.info("currentNodes : {}", currentNodes);

syncWorkerGroupNodes(group, currentNodes);

} else if (type == Type.REMOVE) {

logger.info("worker group node : {} down.", path);

String group = parseGroup(path);

Collection<String> currentNodes = registryClient.getWorkerGroupNodesDirectly(group);

syncWorkerGroupNodes(group, currentNodes);

alertDao.sendServerStopedAlert(1, path, "WORKER");

} else if (type == Type.UPDATE) {

logger.debug("worker group node : {} update, data: {}", path, data);

String group = parseGroup(path);

Collection<String> currentNodes = registryClient.getWorkerGroupNodesDirectly(group);

syncWorkerGroupNodes(group, currentNodes);

String node = parseNode(path);

syncSingleWorkerNodeInfo(node, data);

}

} catch (IllegalArgumentException ex) {

logger.warn(ex.getMessage());

} catch (Exception ex) {

logger.error("WorkerGroupListener capture data change and get data failed", ex);

}

}

}

private String parseGroup(String path) {

String[] parts = path.split("/");

if (parts.length < WORKER_LISTENER_CHECK_LENGTH) {

throw new IllegalArgumentException(String.format("worker group path : %s is not valid, ignore", path));

}

return parts[parts.length - 2];

}

private String parseNode(String path) {

String[] parts = path.split("/");

if (parts.length < WORKER_LISTENER_CHECK_LENGTH) {

throw new IllegalArgumentException(String.format("worker group path : %s is not valid, ignore", path));

}

return parts[parts.length - 1];

}

}

class MasterDataListener implements SubscribeListener {

@Override

public void notify(Event event) {

final String path = event.path();

final Type type = event.type();

if (registryClient.isMasterPath(path)) {

try {

if (type.equals(Type.ADD)) {

logger.info("master node : {} added.", path);

updateMasterNodes();

}

if (type.equals(Type.REMOVE)) {

logger.info("master node : {} down.", path);

updateMasterNodes();

alertDao.sendServerStopedAlert(1, path, "MASTER");

}

} catch (Exception ex) {

logger.error("MasterNodeListener capture data change and get data failed.", ex);

}

}

}

}

private void updateMasterNodes() {

MASTER_SLOT = 0;

this.masterNodes.clear();

String nodeLock = Constants.REGISTRY_DOLPHINSCHEDULER_LOCK_MASTERS;

try {

registryClient.getLock(nodeLock);

Collection<String> currentNodes = registryClient.getMasterNodesDirectly();

List<Server> masterNodes = registryClient.getServerList(NodeType.MASTER);

syncMasterNodes(currentNodes, masterNodes);

} catch (Exception e) {

logger.error("update master nodes error", e);

} finally {

registryClient.releaseLock(nodeLock);

}

}

/**

* get master nodes

*

* @return master nodes

*/

public Set<String> getMasterNodes() {

masterLock.lock();

try {

return Collections.unmodifiableSet(masterNodes);

} finally {

masterLock.unlock();

}

}

/**

* sync master nodes

*

* @param nodes master nodes

*/

private void syncMasterNodes(Collection<String> nodes, List<Server> masterNodes) {

masterLock.lock();

try {

String host = NetUtils.getHost();

this.masterNodes.addAll(nodes);

this.masterPriorityQueue.clear();

this.masterPriorityQueue.putList(masterNodes);

int index = masterPriorityQueue.getIndex(host);

if (index >= 0) {

MASTER_SIZE = nodes.size();

MASTER_SLOT = index;

} else {

logger.warn("current host:{} is not in active master list", host);

}

logger.info("update master nodes, master size: {}, slot: {}", MASTER_SIZE, MASTER_SLOT);

} finally {

masterLock.unlock();

}

}

/**

* sync worker group nodes

*

* @param workerGroup worker group

* @param nodes worker nodes

*/

private void syncWorkerGroupNodes(String workerGroup, Collection<String> nodes) {

workerGroupLock.lock();

try {

workerGroup = workerGroup.toLowerCase();

Set<String> workerNodes = workerGroupNodes.getOrDefault(workerGroup, new HashSet<>());

workerNodes.clear();

workerNodes.addAll(nodes);

workerGroupNodes.put(workerGroup, workerNodes);

} finally {

workerGroupLock.unlock();

}

}

public Map<String, Set<String>> getWorkerGroupNodes() {

return Collections.unmodifiableMap(workerGroupNodes);

}

/**

* get worker group nodes

*

* @param workerGroup workerGroup

* @return worker nodes

*/

public Set<String> getWorkerGroupNodes(String workerGroup) {

workerGroupLock.lock();

try {

if (StringUtils.isEmpty(workerGroup)) {

workerGroup = Constants.DEFAULT_WORKER_GROUP;

}

workerGroup = workerGroup.toLowerCase();

Set<String> nodes = workerGroupNodes.get(workerGroup);

if (CollectionUtils.isNotEmpty(nodes)) {

return Collections.unmodifiableSet(nodes);

}

return nodes;

} finally {

workerGroupLock.unlock();

}

}

/**

* get worker node info

*

* @return worker node info

*/

public Map<String, String> getWorkerNodeInfo() {

return Collections.unmodifiableMap(workerNodeInfo);

}

/**

* get worker node info

*

* @param workerNode worker node

* @return worker node info

*/

public String getWorkerNodeInfo(String workerNode) {

workerNodeInfoLock.lock();

try {

return workerNodeInfo.getOrDefault(workerNode, null);

} finally {

workerNodeInfoLock.unlock();

}

}

/**

* sync worker node info

*

* @param newWorkerNodeInfo new worker node info

*/

private void syncAllWorkerNodeInfo(Map<String, String> newWorkerNodeInfo) {

workerNodeInfoLock.lock();

try {

workerNodeInfo.clear();

workerNodeInfo.putAll(newWorkerNodeInfo);

} finally {

workerNodeInfoLock.unlock();

}

}

/**

* sync single worker node info

*/

private void syncSingleWorkerNodeInfo(String node, String info) {

workerNodeInfoLock.lock();

try {

workerNodeInfo.put(node, info);

} finally {

workerNodeInfoLock.unlock();

}

}

/**

* destroy

*/

@PreDestroy

public void destroy() {

executorService.shutdownNow();

}

}

|
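In the `ServerNodeManager` source above, `afterPropertiesSet` runs `WorkerNodeInfoAndGroupDbSyncTask` through `scheduleWithFixedDelay`. That task calls `workerGroupMapper.queryAllWorkerGroup()` without catching exceptions. Under the `ScheduledExecutorService` contract, if any execution of a periodic task throws, all later executions are suppressed. A failing query would therefore silently stop the worker node sync, for example when `t_ds_worker_group` does not exist, as reported for standalone mode.

The sketch below is not the project's actual fix. It wraps the task body so that one failed run does not cancel the schedule. The always-failing job is a hypothetical stand-in for the query.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class GuardedSyncTaskDemo {

    // Wraps a periodic job so that an exception in one run is reported instead of
    // suppressing every later run scheduled by scheduleWithFixedDelay.
    static Runnable guarded(Runnable job) {
        return () -> {
            try {
                job.run();
            } catch (RuntimeException e) {
                System.err.println("sync run failed, retrying on next tick: " + e.getMessage());
            }
        };
    }

    // Schedules the job every 10 ms for roughly `millis` ms and returns how often it actually ran.
    static int countRuns(Runnable job, boolean wrap, long millis) {
        AtomicInteger runs = new AtomicInteger();
        Runnable counted = () -> {
            runs.incrementAndGet();
            job.run();
        };
        ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();
        executor.scheduleWithFixedDelay(wrap ? guarded(counted) : counted, 0, 10, TimeUnit.MILLISECONDS);
        try {
            Thread.sleep(millis);
            executor.shutdownNow();
            executor.awaitTermination(1, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            executor.shutdownNow();
            Thread.currentThread().interrupt();
        }
        return runs.get();
    }

    public static void main(String[] args) {
        // Hypothetical job that always fails, standing in for a query against a missing table.
        Runnable failing = () -> {
            throw new IllegalStateException("table t_ds_worker_group not found");
        };
        System.out.println("unguarded runs: " + countRuns(failing, false, 200));
        System.out.println("guarded runs: " + countRuns(failing, true, 200));
    }
}
```

The unguarded variant runs exactly once and then stops. The guarded one keeps retrying on every tick, which also keeps the worker node info refresh alive while the database problem persists.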

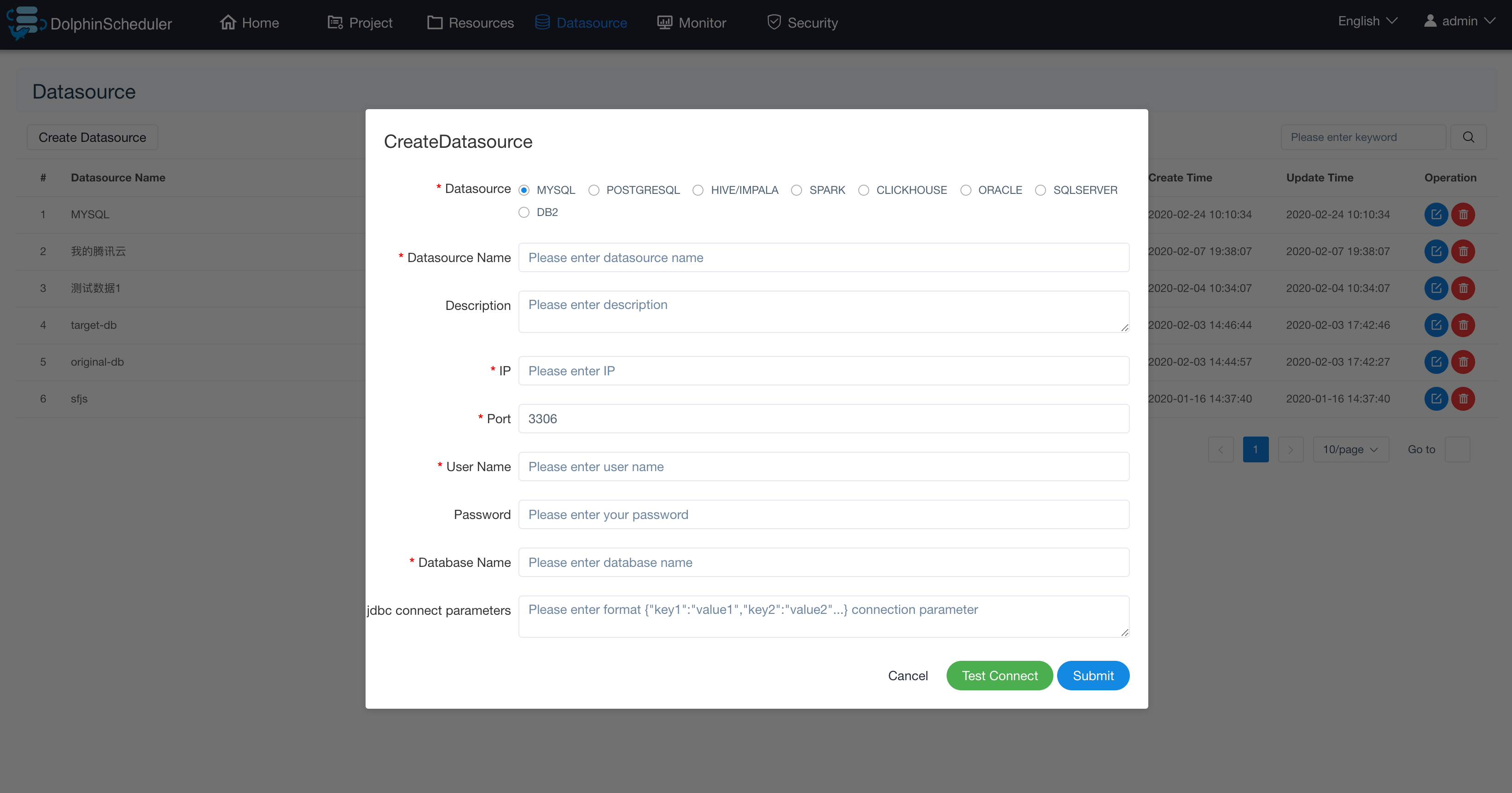

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,135 | [Bug] [DataSource] jdbc connect parameters can not input '@' | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

In version 2.0.2, when I create a Hive datasource with Kerberos, I need to enter the principal in the JDBC connection parameters.

When I click Test Connect, an error is reported.

### What you expected to happen

When the correct principal is entered, the connection to Hive succeeds.

### How to reproduce

In `AbstractDataSourceProcessor.java`, modify the `PARAMS_PATTER` field; an appropriate value is:

> private static final Pattern PARAMS_PATTER = Pattern.compile("^[a-zA-Z0-9\\-\\_\\/\\@\\.]+$");
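The proposed change can be checked in isolation. The sketch below compiles two regexes. The first is the `PARAMS_PATTER` value exactly as it appears in `AbstractDataSourceProcessor`. The second is the value proposed in the issue. It then tests a Kerberos principal of the common `service/host@REALM` form. The principal `hive/_HOST@EXAMPLE.COM` is a hypothetical example, not taken from the report.

```java
import java.util.regex.Pattern;

public class ParamsPatternDemo {

    // Pattern currently used by AbstractDataSourceProcessor.checkOther.
    static final Pattern CURRENT = Pattern.compile("^[a-zA-Z0-9\\-\\_\\/]+$");

    // Pattern proposed in the issue: additionally allows '@' and '.'.
    static final Pattern PROPOSED = Pattern.compile("^[a-zA-Z0-9\\-\\_\\/\\@\\.]+$");

    // True if the whole value consists only of characters allowed by the pattern.
    static boolean accepts(Pattern pattern, String value) {
        return pattern.matcher(value).matches();
    }

    public static void main(String[] args) {
        // Hypothetical Kerberos principal of the usual service/host@REALM form.
        String principal = "hive/_HOST@EXAMPLE.COM";
        System.out.println("current:  " + accepts(CURRENT, principal));
        System.out.println("proposed: " + accepts(PROPOSED, principal));
    }
}
```

The widened pattern still rejects characters such as `;`, `=` and spaces. Parameter values containing them would continue to fail `checkOther`.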

### Anything else

_No response_

### Version

2.0.2

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8135 | https://github.com/apache/dolphinscheduler/pull/8293 | 8200a3f15ab5c7de0ea8e4d356ce968c6380e42a | 2a1406073a73b349f63396567919f81a802785d8 | "2022-01-20T07:29:54Z" | java | "2022-02-18T13:58:43Z" | dolphinscheduler-datasource-plugin/dolphinscheduler-datasource-api/src/main/java/org/apache/dolphinscheduler/plugin/datasource/api/datasource/AbstractDataSourceProcessor.java | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.dolphinscheduler.plugin.datasource.api.datasource;

import org.apache.dolphinscheduler.spi.datasource.BaseConnectionParam;

import org.apache.dolphinscheduler.spi.datasource.ConnectionParam;

import org.apache.dolphinscheduler.spi.enums.DbType;

import org.apache.commons.collections4.MapUtils;

import java.text.MessageFormat;

import java.util.Map;

import java.util.regex.Pattern;

public abstract class AbstractDataSourceProcessor implements DataSourceProcessor {

private static final Pattern IPV4_PATTERN = Pattern.compile("^[a-zA-Z0-9\\_\\-\\.\\,]+$");

private static final Pattern IPV6_PATTERN = Pattern.compile("^[a-zA-Z0-9\\_\\-\\.\\:\\[\\]\\,]+$");

private static final Pattern DATABASE_PATTER = Pattern.compile("^[a-zA-Z0-9\\_\\-\\.]+$");

private static final Pattern PARAMS_PATTER = Pattern.compile("^[a-zA-Z0-9\\-\\_\\/]+$");

@Override

public void checkDatasourceParam(BaseDataSourceParamDTO baseDataSourceParamDTO) {

checkHost(baseDataSourceParamDTO.getHost());

checkDatasourcePatter(baseDataSourceParamDTO.getDatabase());

checkOther(baseDataSourceParamDTO.getOther());

}

/**

* Check the host is valid

*

* @param host datasource host

*/

protected void checkHost(String host) {

if (!IPV4_PATTERN.matcher(host).matches() || !IPV6_PATTERN.matcher(host).matches()) {

throw new IllegalArgumentException("datasource host illegal");

}

}

/**

* check database name is valid

*

* @param database database name

*/

protected void checkDatasourcePatter(String database) {

if (!DATABASE_PATTER.matcher(database).matches()) {

throw new IllegalArgumentException("datasource name illegal");

}

}

/**

* check other is valid

*

* @param other other

*/

protected void checkOther(Map<String, String> other) {

if (MapUtils.isEmpty(other)) {

return;

}

boolean paramsCheck = other.entrySet().stream().allMatch(p -> PARAMS_PATTER.matcher(p.getValue()).matches());

if (!paramsCheck) {

throw new IllegalArgumentException("datasource other params illegal");

}

}

@Override

public String getDatasourceUniqueId(ConnectionParam connectionParam, DbType dbType) {

BaseConnectionParam baseConnectionParam = (BaseConnectionParam) connectionParam;

return MessageFormat.format("{0}@{1}@{2}", dbType.getDescp(), baseConnectionParam.getUser(), baseConnectionParam.getJdbcUrl());

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,135 | [Bug] [DataSource] jdbc connect parameters can not input '@' | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

In version 2.0.2, when I create a Hive datasource with Kerberos, I need to enter the principal in the JDBC connection parameters.

When I click Test Connect, an error is reported.

### What you expected to happen

When the correct principal is entered, the connection to Hive succeeds.

### How to reproduce

In `AbstractDataSourceProcessor.java`, modify the `PARAMS_PATTER` field; an appropriate value is:

> private static final Pattern PARAMS_PATTER = Pattern.compile("^[a-zA-Z0-9\\-\\_\\/\\@\\.]+$");

### Anything else

_No response_

### Version

2.0.2

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8135 | https://github.com/apache/dolphinscheduler/pull/8293 | 8200a3f15ab5c7de0ea8e4d356ce968c6380e42a | 2a1406073a73b349f63396567919f81a802785d8 | "2022-01-20T07:29:54Z" | java | "2022-02-18T13:58:43Z" | dolphinscheduler-datasource-plugin/dolphinscheduler-datasource-api/src/test/java/org/apache/dolphinscheduler/plugin/datasource/api/datasource/AbstractDataSourceProcessorTest.java | |

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,213 | [Bug] [dolphinscheduler-server] task run error when worker group name contains uppercase letters | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

When the worker group name contains uppercase letters, running a task fails with the following error:

[INFO] 2022-01-27 08:00:00.389 TaskLogLogger:[134] - set work flow 299 task 463 running

[INFO] 2022-01-27 08:00:00.390 TaskLogLogger:[104] - work flow 299 task 463, sub work flow: 301 state: running

[ERROR] 2022-01-27 08:00:01.081 org.apache.dolphinscheduler.server.master.consumer.TaskPriorityQueueConsumer:[144] - ExecuteException dispatch error: fail to execute : Command [type=TASK_EXECUTE_REQUEST, opaque=3511226, bodyLen=3375] due to no suitable worker, current task needs worker group test to execute

org.apache.dolphinscheduler.server.master.dispatch.exceptions.ExecuteException: fail to execute : Command [type=TASK_EXECUTE_REQUEST, opaque=3511226, bodyLen=3375] due to no suitable worker, current task needs worker group test to execute

at org.apache.dolphinscheduler.server.master.dispatch.ExecutorDispatcher.dispatch(ExecutorDispatcher.java:89)

at org.apache.dolphinscheduler.server.master.consumer.TaskPriorityQueueConsumer.dispatch(TaskPriorityQueueConsumer.java:138)

at org.apache.dolphinscheduler.server.master.consumer.TaskPriorityQueueConsumer.run(TaskPriorityQueueConsumer.java:101)

[ERROR] 2022-01-27 08:00:01.082 org.apache.dolphinscheduler.server.master.consumer.TaskPriorityQueueConsumer:[144] - ExecuteException dispatch error: fail to execute : Command [type=TASK_EXECUTE_REQUEST, opaque=3511227, bodyLen=3106] due to no suitable worker, current task needs worker group Tenant_2_DS资源组3 to execute

org.apache.dolphinscheduler.server.master.dispatch.exceptions.ExecuteException: fail to execute : Command [type=TASK_EXECUTE_REQUEST, opaque=3511227, bodyLen=3106] due to no suitable worker, current task needs worker group Tenant_2_DS资源组3 to execute

at org.apache.dolphinscheduler.server.master.dispatch.ExecutorDispatcher.dispatch(ExecutorDispatcher.java:89)

at org.apache.dolphinscheduler.server.master.consumer.TaskPriorityQueueConsumer.dispatch(TaskPriorityQueueConsumer.java:138)

at org.apache.dolphinscheduler.server.master.consumer.TaskPriorityQueueConsumer.run(TaskPriorityQueueConsumer.java:101)

[WARN] 2022-01-27 08:00:01.360 org.apache.dolphinscheduler.server.master.dispatch.host.LowerWeightHostManager:[153] - worker 192.168.92.31:1234 in work group test have not received the heartbeat

[WARN] 2022-01-27 08:00:02.361 org.apache.dolphinscheduler.server.master.dispatch.host.LowerWeightHostManager:[153] - worker 192.168.92.31:1234 in work group test have not received the heartbeat

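Cause: the master's `ServerNodeManager.syncWorkerGroupNodes()` method converts the worker group name it reads to lowercase, but the worker group name read from the task is still uppercase, so the task cannot find the worker group it needs.

Fix: comment out `workerGroup = workerGroup.toLowerCase();` as shown below: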

```java
private void syncWorkerGroupNodes(String workerGroup, Collection<String> nodes) {
    workerGroupLock.lock();
    try {
        //workerGroup = workerGroup.toLowerCase();
        Set<String> workerNodes = workerGroupNodes.getOrDefault(workerGroup, new HashSet<>());
        workerNodes.clear();
        workerNodes.addAll(nodes);
        workerGroupNodes.put(workerGroup, workerNodes);
    } finally {
        workerGroupLock.unlock();
    }
}
```
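The cause described above comes down to normalizing a map key on one side only. The sketch below uses a hypothetical `WorkerGroupKeyDemo` class rather than the real `ServerNodeManager`. If the key is lowercased on write but looked up with the name exactly as the task carries it, a mixed-case group is never found. The group `Tenant_2_DS` is an ASCII stand-in for the group in the log. Keeping the key as-is, as the reporter proposes, makes the lookup succeed.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

public class WorkerGroupKeyDemo {

    private final Map<String, Set<String>> workerGroupNodes = new ConcurrentHashMap<>();
    private final boolean lowerCaseOnSync;

    WorkerGroupKeyDemo(boolean lowerCaseOnSync) {
        this.lowerCaseOnSync = lowerCaseOnSync;
    }

    // Same shape as syncWorkerGroupNodes: optionally lowercases the group name before storing it.
    void sync(String workerGroup, Collection<String> nodes) {
        String key = lowerCaseOnSync ? workerGroup.toLowerCase() : workerGroup;
        workerGroupNodes.put(key, new HashSet<>(nodes));
    }

    // Looks the group up using the name exactly as the task carries it.
    Set<String> lookup(String workerGroup) {
        return workerGroupNodes.getOrDefault(workerGroup, Collections.emptySet());
    }

    public static void main(String[] args) {
        Collection<String> nodes = Arrays.asList("192.168.92.31:1234");

        WorkerGroupKeyDemo lowered = new WorkerGroupKeyDemo(true);
        lowered.sync("Tenant_2_DS", nodes);
        System.out.println("lowercased on sync: " + lowered.lookup("Tenant_2_DS"));

        WorkerGroupKeyDemo exact = new WorkerGroupKeyDemo(false);
        exact.sync("Tenant_2_DS", nodes);
        System.out.println("kept as-is: " + exact.lookup("Tenant_2_DS"));
    }
}
```

If case-insensitive matching is actually wanted, the alternative is to normalize on both sides. Ideally this uses `toLowerCase(Locale.ROOT)`, so the result does not depend on the JVM's default locale.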

### What you expected to happen

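When the worker group name contains uppercase characters, the task runs normally.

### How to reproduce

Create a worker group whose name contains uppercase characters, then create any task, assign it to that worker group, and run it.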

### Anything else

_No response_

### Version

2.0.3

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8213 | https://github.com/apache/dolphinscheduler/pull/8448 | 02e47da30b76334c6847c8142a735cdd94f0cc43 | 234f399488e7fb0937cbf322c928f7abb9eb8365 | "2022-01-27T04:05:26Z" | java | "2022-02-19T13:38:58Z" | dolphinscheduler-master/src/main/java/org/apache/dolphinscheduler/server/master/registry/ServerNodeManager.java | /*

 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 *    http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

package org.apache.dolphinscheduler.server.master.registry;

import static org.apache.dolphinscheduler.common.Constants.REGISTRY_DOLPHINSCHEDULER_MASTERS;
import static org.apache.dolphinscheduler.common.Constants.REGISTRY_DOLPHINSCHEDULER_WORKERS;

import org.apache.dolphinscheduler.common.Constants;
import org.apache.dolphinscheduler.common.enums.NodeType;
import org.apache.dolphinscheduler.common.model.Server;
import org.apache.dolphinscheduler.common.utils.NetUtils;
import org.apache.dolphinscheduler.dao.AlertDao;
import org.apache.dolphinscheduler.dao.entity.WorkerGroup;
import org.apache.dolphinscheduler.dao.mapper.WorkerGroupMapper;
import org.apache.dolphinscheduler.registry.api.Event;
import org.apache.dolphinscheduler.registry.api.Event.Type;
import org.apache.dolphinscheduler.registry.api.SubscribeListener;
import org.apache.dolphinscheduler.remote.utils.NamedThreadFactory;
import org.apache.dolphinscheduler.service.queue.MasterPriorityQueue;
import org.apache.dolphinscheduler.service.registry.RegistryClient;

import org.apache.commons.collections.CollectionUtils;
import org.apache.commons.lang.StringUtils;

import java.util.Collection;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

import javax.annotation.PreDestroy;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.InitializingBean;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

/**
 * server node manager
 */
@Service
public class ServerNodeManager implements InitializingBean {

    private final Logger logger = LoggerFactory.getLogger(ServerNodeManager.class);

    /**
     * master lock
     */
    private final Lock masterLock = new ReentrantLock();

    /**
     * worker group lock
     */
    private final Lock workerGroupLock = new ReentrantLock();

    /**
     * worker node info lock
     */
    private final Lock workerNodeInfoLock = new ReentrantLock();

    /**
     * worker group nodes
     */
    private final ConcurrentHashMap<String, Set<String>> workerGroupNodes = new ConcurrentHashMap<>();

    /**
     * master nodes
     */
    private final Set<String> masterNodes = new HashSet<>();

    /**
     * worker node info
     */
    private final Map<String, String> workerNodeInfo = new HashMap<>();

    /**
     * executor service
     */
    private ScheduledExecutorService executorService;

    @Autowired
    private RegistryClient registryClient;

    /**
     * eg : /node/worker/group/127.0.0.1:xxx
     */
    private static final int WORKER_LISTENER_CHECK_LENGTH = 5;

    /**
     * worker group mapper
     */
    @Autowired
    private WorkerGroupMapper workerGroupMapper;

    private final MasterPriorityQueue masterPriorityQueue = new MasterPriorityQueue();

    /**
     * alert dao
     */
    @Autowired
    private AlertDao alertDao;

    private static volatile int MASTER_SLOT = 0;

    private static volatile int MASTER_SIZE = 0;

    public static int getSlot() {
        return MASTER_SLOT;
    }

    public static int getMasterSize() {
        return MASTER_SIZE;
    }

    /**
     * init listener
     *
     * @throws Exception if error throws Exception
     */
    @Override
    public void afterPropertiesSet() throws Exception {
        /**
         * load nodes from zookeeper
         */
        load();
        /**
         * init executor service
         */
        executorService = Executors.newSingleThreadScheduledExecutor(new NamedThreadFactory("ServerNodeManagerExecutor"));
        executorService.scheduleWithFixedDelay(new WorkerNodeInfoAndGroupDbSyncTask(), 0, 10, TimeUnit.SECONDS);
        /*
         * init MasterNodeListener listener
         */
        registryClient.subscribe(REGISTRY_DOLPHINSCHEDULER_MASTERS, new MasterDataListener());
        /*
         * init WorkerNodeListener listener
         */
        registryClient.subscribe(REGISTRY_DOLPHINSCHEDULER_WORKERS, new WorkerDataListener());
    }

    /**
     * load nodes from zookeeper
     */
    public void load() {
        /*
         * master nodes from zookeeper
         */
        updateMasterNodes();

        /*
         * worker group nodes from zookeeper
         */
        Collection<String> workerGroups = registryClient.getWorkerGroupDirectly();
        for (String workerGroup : workerGroups) {
            syncWorkerGroupNodes(workerGroup, registryClient.getWorkerGroupNodesDirectly(workerGroup));
        }
    }

    /**
     * worker node info and worker group db sync task
     */
    class WorkerNodeInfoAndGroupDbSyncTask implements Runnable {

        @Override
        public void run() {
            try {
                // sync worker node info
                Map<String, String> newWorkerNodeInfo = registryClient.getServerMaps(NodeType.WORKER, true);
                syncAllWorkerNodeInfo(newWorkerNodeInfo);

                // sync worker group nodes from database
                List<WorkerGroup> workerGroupList = workerGroupMapper.queryAllWorkerGroup();
                if (CollectionUtils.isNotEmpty(workerGroupList)) {
                    for (WorkerGroup wg : workerGroupList) {
                        String workerGroup = wg.getName();
                        Set<String> nodes = new HashSet<>();
                        String[] addrs = wg.getAddrList().split(Constants.COMMA);
                        for (String addr : addrs) {
                            if (newWorkerNodeInfo.containsKey(addr)) {
                                nodes.add(addr);
                            }
                        }
                        if (!nodes.isEmpty()) {
                            syncWorkerGroupNodes(workerGroup, nodes);
                        }
                    }
                }
            } catch (Exception e) {
                logger.error("WorkerNodeInfoAndGroupDbSyncTask error:", e);
            }
        }
    }

    /**
     * worker group node listener
     */
    class WorkerDataListener implements SubscribeListener {

        @Override
        public void notify(Event event) {
            final String path = event.path();
            final Type type = event.type();
            final String data = event.data();
            if (registryClient.isWorkerPath(path)) {
                try {
                    if (type == Type.ADD) {
                        logger.info("worker group node : {} added.", path);
                        String group = parseGroup(path);
                        Collection<String> currentNodes = registryClient.getWorkerGroupNodesDirectly(group);
                        logger.info("currentNodes : {}", currentNodes);
                        syncWorkerGroupNodes(group, currentNodes);
                    } else if (type == Type.REMOVE) {
                        logger.info("worker group node : {} down.", path);
                        String group = parseGroup(path);
                        Collection<String> currentNodes = registryClient.getWorkerGroupNodesDirectly(group);
                        syncWorkerGroupNodes(group, currentNodes);
                        alertDao.sendServerStopedAlert(1, path, "WORKER");
                    } else if (type == Type.UPDATE) {
                        logger.debug("worker group node : {} update, data: {}", path, data);
                        String group = parseGroup(path);
                        Collection<String> currentNodes = registryClient.getWorkerGroupNodesDirectly(group);
                        syncWorkerGroupNodes(group, currentNodes);

                        String node = parseNode(path);
                        syncSingleWorkerNodeInfo(node, data);
                    }
                } catch (IllegalArgumentException ex) {
                    logger.warn(ex.getMessage());
                } catch (Exception ex) {
                    logger.error("WorkerGroupListener capture data change and get data failed", ex);
                }
            }
        }

        private String parseGroup(String path) {
            String[] parts = path.split("/");
            if (parts.length < WORKER_LISTENER_CHECK_LENGTH) {
                throw new IllegalArgumentException(String.format("worker group path : %s is not valid, ignore", path));
            }
            return parts[parts.length - 2];
        }

        private String parseNode(String path) {
            String[] parts = path.split("/");
            if (parts.length < WORKER_LISTENER_CHECK_LENGTH) {
                throw new IllegalArgumentException(String.format("worker group path : %s is not valid, ignore", path));
            }
            return parts[parts.length - 1];
        }
    }