status | repo_name | repo_url | issue_id | title | body | issue_url | pull_url | before_fix_sha | after_fix_sha | report_datetime | language | commit_datetime | updated_file | file_content |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,710 | [Bug][UI Next][V1.0.0-Alpha] There is no tooltip for timing management table editing and up and down buttons. | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

### What you expected to happen

There is no tooltip for regular management table editing and up and down buttons.

### How to reproduce

Add tooltip.

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8710 | https://github.com/apache/dolphinscheduler/pull/8716 | e2af9054b39f73183490fd8a96efe919a29a488d | 698c795d4f3412e175fb28e569bf34c6d8085f0b | "2022-03-05T12:41:37Z" | java | "2022-03-06T12:59:20Z" | dolphinscheduler-ui-next/src/views/projects/workflow/definition/timing/use-table.ts | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import { h, ref, reactive } from 'vue'

import { useI18n } from 'vue-i18n'

import { useRouter } from 'vue-router'

import { NSpace, NTooltip, NButton, NPopconfirm, NEllipsis } from 'naive-ui'

import {

deleteScheduleById,

offline,

online,

queryScheduleListPaging

} from '@/service/modules/schedules'

import {

ArrowDownOutlined,

ArrowUpOutlined,

DeleteOutlined,

EditOutlined

} from '@vicons/antd'

import type { Router } from 'vue-router'

import type { TableColumns } from 'naive-ui/es/data-table/src/interface'

import { ISearchParam } from './types'

import styles from '../index.module.scss'

export function useTable() {

const { t } = useI18n()

const router: Router = useRouter()

const columns: TableColumns<any> = [

{

title: '#',

key: 'id',

width: 50,

render: (_row, index) => index + 1

},

{

title: t('project.workflow.workflow_name'),

key: 'processDefinitionName',

width: 200,

render: (_row) =>

h(

NEllipsis,

{ style: 'max-width: 200px' },

{

default: () => _row.processDefinitionName

}

)

},

{

title: t('project.workflow.start_time'),

key: 'startTime'

},

{

title: t('project.workflow.end_time'),

key: 'endTime'

},

{

title: t('project.workflow.crontab'),

key: 'crontab'

},

{

title: t('project.workflow.failure_strategy'),

key: 'failureStrategy'

},

{

title: t('project.workflow.status'),

key: 'releaseState',

render: (_row) =>

_row.releaseState === 'ONLINE'

? t('project.workflow.up_line')

: t('project.workflow.down_line')

},

{

title: t('project.workflow.create_time'),

key: 'createTime'

},

{

title: t('project.workflow.update_time'),

key: 'updateTime'

},

{

title: t('project.workflow.operation'),

key: 'operation',

fixed: 'right',

className: styles.operation,

render: (row) => {

return h(NSpace, null, {

default: () => [

h(

NButton,

{

circle: true,

type: 'info',

size: 'small',

disabled: row.releaseState === 'ONLINE',

onClick: () => {

handleEdit(row)

}

},

{

icon: () => h(EditOutlined)

}

),

h(

NButton,

{

circle: true,

type: row.releaseState === 'ONLINE' ? 'error' : 'warning',

size: 'small',

onClick: () => {

handleReleaseState(row)

}

},

{

icon: () =>

h(

row.releaseState === 'ONLINE'

? ArrowDownOutlined

: ArrowUpOutlined

)

}

),

h(

NPopconfirm,

{

onPositiveClick: () => {

handleDelete(row.id)

}

},

{

trigger: () =>

h(

NTooltip,

{},

{

trigger: () =>

h(

NButton,

{

circle: true,

type: 'error',

size: 'small'

},

{

icon: () => h(DeleteOutlined)

}

),

default: () => t('project.workflow.delete')

}

),

default: () => t('project.workflow.delete_confirm')

}

)

]

})

}

}

]

const handleEdit = (row: any) => {

variables.showRef = true

variables.row = row

}

const variables = reactive({

columns,

row: {},

tableData: [],

projectCode: ref(Number(router.currentRoute.value.params.projectCode)),

page: ref(1),

pageSize: ref(10),

searchVal: ref(),

totalPage: ref(1),

showRef: ref(false)

})

const getTableData = (params: ISearchParam) => {

const definitionCode = Number(

router.currentRoute.value.params.definitionCode

)

queryScheduleListPaging(

{ ...params, processDefinitionCode: definitionCode },

variables.projectCode

).then((res: any) => {

variables.totalPage = res.totalPage

variables.tableData = res.totalList.map((item: any) => {

return { ...item }

})

})

}

const handleReleaseState = (row: any) => {

let handle = online

if (row.releaseState === 'ONLINE') {

handle = offline

}

handle(variables.projectCode, row.id).then(() => {

window.$message.success(t('project.workflow.success'))

getTableData({

pageSize: variables.pageSize,

pageNo: variables.page,

searchVal: variables.searchVal

})

})

}

const handleDelete = (id: number) => {

/* After deleting the only remaining row on a page other than the first, step back one page so the table is not left empty. */

if (variables.tableData.length === 1 && variables.page > 1) {

variables.page -= 1

}

deleteScheduleById(id, variables.projectCode)

.then(() => {

window.$message.success(t('project.workflow.success'))

getTableData({

pageSize: variables.pageSize,

pageNo: variables.page,

searchVal: variables.searchVal

})

})

.catch((error: any) => {

window.$message.error(error.message)

})

}

return {

variables,

getTableData

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,717 | [Bug][UI Next][V1.0.0-Alpha] Regularly manage multilingual switching issues. | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

### What you expected to happen

Regularly manage multilingual switching issues.

### How to reproduce

After switching the language, the header also changes with the language.

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8717 | https://github.com/apache/dolphinscheduler/pull/8718 | 698c795d4f3412e175fb28e569bf34c6d8085f0b | 9c162c86c3fad159839e0e58b58a20c2bd0abcce | "2022-03-06T12:09:40Z" | java | "2022-03-06T13:36:11Z" | dolphinscheduler-ui-next/src/views/projects/workflow/definition/timing/index.tsx | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import Card from '@/components/card'

import { ArrowLeftOutlined } from '@vicons/antd'

import { NButton, NDataTable, NIcon, NPagination } from 'naive-ui'

import { defineComponent, onMounted, toRefs } from 'vue'

import { useI18n } from 'vue-i18n'

import { useRouter } from 'vue-router'

import type { Router } from 'vue-router'

import { useTable } from './use-table'

import TimingModal from '../components/timing-modal'

import styles from '../index.module.scss'

export default defineComponent({

name: 'WorkflowDefinitionTiming',

setup() {

const { variables, getTableData } = useTable()

const requestData = () => {

getTableData({

pageSize: variables.pageSize,

pageNo: variables.page,

searchVal: variables.searchVal

})

}

const handleUpdateList = () => {

requestData()

}

const handleSearch = () => {

variables.page = 1

requestData()

}

const handleChangePageSize = () => {

variables.page = 1

requestData()

}

onMounted(() => {

requestData()

})

return {

requestData,

handleSearch,

handleUpdateList,

handleChangePageSize,

...toRefs(variables)

}

},

render() {

const { t } = useI18n()

const router: Router = useRouter()

return (

<div class={styles.content}>

<Card class={styles.card}>

<div class={styles.header}>

<NButton type='primary' onClick={() => router.go(-1)}>

<NIcon>

<ArrowLeftOutlined />

</NIcon>

</NButton>

</div>

</Card>

<Card title={t('project.workflow.cron_manage')}>

<NDataTable

columns={this.columns}

data={this.tableData}

striped

size={'small'}

class={styles.table}

/>

<div class={styles.pagination}>

<NPagination

v-model:page={this.page}

v-model:page-size={this.pageSize}

page-count={this.totalPage}

show-size-picker

page-sizes={[10, 30, 50]}

show-quick-jumper

onUpdatePage={this.requestData}

onUpdatePageSize={this.handleChangePageSize}

/>

</div>

</Card>

<TimingModal

type={'update'}

v-model:row={this.row}

v-model:show={this.showRef}

onUpdateList={this.handleUpdateList}

/>

</div>

)

}

})

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,717 | [Bug][UI Next][V1.0.0-Alpha] Regularly manage multilingual switching issues. | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

### What you expected to happen

Regularly manage multilingual switching issues.

### How to reproduce

After switching the language, the header also changes with the language.

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8717 | https://github.com/apache/dolphinscheduler/pull/8718 | 698c795d4f3412e175fb28e569bf34c6d8085f0b | 9c162c86c3fad159839e0e58b58a20c2bd0abcce | "2022-03-06T12:09:40Z" | java | "2022-03-06T13:36:11Z" | dolphinscheduler-ui-next/src/views/projects/workflow/definition/timing/use-table.ts | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import { h, ref, reactive } from 'vue'

import { useI18n } from 'vue-i18n'

import { useRouter } from 'vue-router'

import {

NSpace,

NTooltip,

NButton,

NPopconfirm,

NEllipsis,

NIcon

} from 'naive-ui'

import {

deleteScheduleById,

offline,

online,

queryScheduleListPaging

} from '@/service/modules/schedules'

import {

ArrowDownOutlined,

ArrowUpOutlined,

DeleteOutlined,

EditOutlined

} from '@vicons/antd'

import type { Router } from 'vue-router'

import type { TableColumns } from 'naive-ui/es/data-table/src/interface'

import { ISearchParam } from './types'

import styles from '../index.module.scss'

export function useTable() {

const { t } = useI18n()

const router: Router = useRouter()

const columns: TableColumns<any> = [

{

title: '#',

key: 'id',

width: 50,

render: (_row, index) => index + 1

},

{

title: t('project.workflow.workflow_name'),

key: 'processDefinitionName',

width: 200,

render: (_row) =>

h(

NEllipsis,

{ style: 'max-width: 200px' },

{

default: () => _row.processDefinitionName

}

)

},

{

title: t('project.workflow.start_time'),

key: 'startTime'

},

{

title: t('project.workflow.end_time'),

key: 'endTime'

},

{

title: t('project.workflow.crontab'),

key: 'crontab'

},

{

title: t('project.workflow.failure_strategy'),

key: 'failureStrategy'

},

{

title: t('project.workflow.status'),

key: 'releaseState',

render: (_row) =>

_row.releaseState === 'ONLINE'

? t('project.workflow.up_line')

: t('project.workflow.down_line')

},

{

title: t('project.workflow.create_time'),

key: 'createTime'

},

{

title: t('project.workflow.update_time'),

key: 'updateTime'

},

{

title: t('project.workflow.operation'),

key: 'operation',

fixed: 'right',

className: styles.operation,

render: (row) => {

return h(NSpace, null, {

default: () => [

h(

NTooltip,

{},

{

trigger: () =>

h(

NButton,

{

circle: true,

type: 'info',

size: 'small',

disabled: row.releaseState === 'ONLINE',

onClick: () => {

handleEdit(row)

}

},

{

icon: () => h(EditOutlined)

}

),

default: () => t('project.workflow.edit')

}

),

h(

NTooltip,

{},

{

trigger: () =>

h(

NButton,

{

circle: true,

type: row.releaseState === 'ONLINE' ? 'error' : 'warning',

size: 'small',

onClick: () => {

handleReleaseState(row)

}

},

{

icon: () =>

h(

row.releaseState === 'ONLINE'

? ArrowDownOutlined

: ArrowUpOutlined

)

}

),

default: () =>

row.releaseState === 'ONLINE'

? t('project.workflow.down_line')

: t('project.workflow.up_line')

}

),

h(

NPopconfirm,

{

onPositiveClick: () => {

handleDelete(row.id)

}

},

{

trigger: () =>

h(

NTooltip,

{},

{

trigger: () =>

h(

NButton,

{

circle: true,

type: 'error',

size: 'small'

},

{

icon: () => h(DeleteOutlined)

}

),

default: () => t('project.workflow.delete')

}

),

default: () => t('project.workflow.delete_confirm')

}

)

]

})

}

}

]

const handleEdit = (row: any) => {

variables.showRef = true

variables.row = row

}

const variables = reactive({

columns,

row: {},

tableData: [],

projectCode: ref(Number(router.currentRoute.value.params.projectCode)),

page: ref(1),

pageSize: ref(10),

searchVal: ref(),

totalPage: ref(1),

showRef: ref(false)

})

const getTableData = (params: ISearchParam) => {

const definitionCode = Number(

router.currentRoute.value.params.definitionCode

)

queryScheduleListPaging(

{ ...params, processDefinitionCode: definitionCode },

variables.projectCode

).then((res: any) => {

variables.totalPage = res.totalPage

variables.tableData = res.totalList.map((item: any) => {

return { ...item }

})

})

}

const handleReleaseState = (row: any) => {

let handle = online

if (row.releaseState === 'ONLINE') {

handle = offline

}

handle(variables.projectCode, row.id).then(() => {

window.$message.success(t('project.workflow.success'))

getTableData({

pageSize: variables.pageSize,

pageNo: variables.page,

searchVal: variables.searchVal

})

})

}

const handleDelete = (id: number) => {

/* After deleting the only remaining row on a page other than the first, step back one page so the table is not left empty. */

if (variables.tableData.length === 1 && variables.page > 1) {

variables.page -= 1

}

deleteScheduleById(id, variables.projectCode)

.then(() => {

window.$message.success(t('project.workflow.success'))

getTableData({

pageSize: variables.pageSize,

pageNo: variables.page,

searchVal: variables.searchVal

})

})

.catch((error: any) => {

window.$message.error(error.message)

})

}

return {

variables,

getTableData

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,720 | [Bug][UI Next][V1.0.0-Alpha] Workflow instance table action button is too small to click. | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

### What you expected to happen

Workflow instance table action button is too small to click.

### How to reproduce

Make the table button larger.

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8720 | https://github.com/apache/dolphinscheduler/pull/8721 | b0fc6e7a695bd6a20092dc2baf1bacf7e2caba30 | ac18b195ec2c5e2792ab7e4da416a3740745d5b4 | "2022-03-07T02:16:12Z" | java | "2022-03-07T03:11:23Z" | dolphinscheduler-ui-next/src/views/projects/workflow/instance/components/table-action.tsx | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import { defineComponent, PropType, toRefs } from 'vue'

import { NSpace, NTooltip, NButton, NIcon, NPopconfirm } from 'naive-ui'

import {

DeleteOutlined,

FormOutlined,

InfoCircleFilled,

SyncOutlined,

CloseOutlined,

CloseCircleOutlined,

PauseCircleOutlined,

ControlOutlined,

PlayCircleOutlined

} from '@vicons/antd'

import { useI18n } from 'vue-i18n'

import { useRouter } from 'vue-router'

import type { Router } from 'vue-router'

import { IWorkflowInstance } from '@/service/modules/process-instances/types'

const props = {

row: {

type: Object as PropType<IWorkflowInstance>,

required: true

}

}

export default defineComponent({

name: 'TableAction',

props,

emits: [

'updateList',

'reRun',

'reStore',

'stop',

'suspend',

'deleteInstance'

],

setup(props, ctx) {

const router: Router = useRouter()

const handleEdit = () => {

router.push({

name: 'workflow-instance-detail',

params: { id: props.row!.id },

query: { code: props.row!.processDefinitionCode }

})

}

const handleGantt = () => {

router.push({

name: 'workflow-instance-gantt',

params: { id: props.row!.id },

query: { code: props.row!.processDefinitionCode }

})

}

const handleReRun = () => {

ctx.emit('reRun')

}

const handleReStore = () => {

ctx.emit('reStore')

}

const handleStop = () => {

ctx.emit('stop')

}

const handleSuspend = () => {

ctx.emit('suspend')

}

const handleDeleteInstance = () => {

ctx.emit('deleteInstance')

}

return {

handleEdit,

handleReRun,

handleReStore,

handleStop,

handleSuspend,

handleDeleteInstance,

handleGantt,

...toRefs(props)

}

},

render() {

const { t } = useI18n()

const state = this.row?.state

return (

<NSpace>

<NTooltip trigger={'hover'}>

{{

default: () => t('project.workflow.edit'),

trigger: () => (

<NButton

tag='div'

size='tiny'

type='info'

circle

disabled={

(state !== 'SUCCESS' &&

state !== 'PAUSE' &&

state !== 'FAILURE' &&

state !== 'STOP') ||

this.row?.disabled

}

onClick={this.handleEdit}

>

<NIcon>

<FormOutlined />

</NIcon>

</NButton>

)

}}

</NTooltip>

<NTooltip trigger={'hover'}>

{{

default: () => t('project.workflow.rerun'),

trigger: () => {

return (

<NButton

tag='div'

size='tiny'

type='info'

circle

onClick={this.handleReRun}

disabled={

(state !== 'SUCCESS' &&

state !== 'PAUSE' &&

state !== 'FAILURE' &&

state !== 'STOP') ||

this.row?.disabled

}

>

{this.row?.buttonType === 'run' ? (

<span>{this.row?.count}</span>

) : (

<NIcon>

<SyncOutlined />

</NIcon>

)}

</NButton>

)

}

}}

</NTooltip>

<NTooltip trigger={'hover'}>

{{

default: () => t('project.workflow.recovery_failed'),

trigger: () => (

<NButton

tag='div'

size='tiny'

type='primary'

circle

onClick={this.handleReStore}

disabled={state !== 'FAILURE' || this.row?.disabled}

>

{this.row?.buttonType === 'store' ? (

<span>{this.row?.count}</span>

) : (

<NIcon>

<CloseCircleOutlined />

</NIcon>

)}

</NButton>

)

}}

</NTooltip>

<NTooltip trigger={'hover'}>

{{

default: () =>

state === 'PAUSE'

? t('project.workflow.recovery_failed')

: t('project.workflow.stop'),

trigger: () => (

<NButton

tag='div'

size='tiny'

type='error'

circle

onClick={this.handleStop}

disabled={

(state !== 'RUNNING_EXECUTION' && state !== 'PAUSE') ||

this.row?.disabled

}

>

<NIcon>

{state === 'STOP' ? (

<PlayCircleOutlined />

) : (

<CloseOutlined />

)}

</NIcon>

</NButton>

)

}}

</NTooltip>

<NTooltip trigger={'hover'}>

{{

default: () =>

state === 'PAUSE'

? t('project.workflow.recovery_suspend')

: t('project.workflow.pause'),

trigger: () => (

<NButton

tag='div'

size='tiny'

type='warning'

circle

disabled={

(state !== 'RUNNING_EXECUTION' && state !== 'PAUSE') ||

this.row?.disabled

}

onClick={this.handleSuspend}

>

<NIcon>

{state === 'PAUSE' ? (

<PlayCircleOutlined />

) : (

<PauseCircleOutlined />

)}

</NIcon>

</NButton>

)

}}

</NTooltip>

<NTooltip trigger={'hover'}>

{{

default: () => t('project.workflow.delete'),

trigger: () => (

<NButton

tag='div'

size='tiny'

type='error'

circle

disabled={

(state !== 'SUCCESS' &&

state !== 'FAILURE' &&

state !== 'STOP' &&

state !== 'PAUSE') ||

this.row?.disabled

}

>

<NPopconfirm onPositiveClick={this.handleDeleteInstance}>

{{

default: () => t('project.workflow.delete_confirm'),

icon: () => (

<NIcon>

<InfoCircleFilled />

</NIcon>

),

trigger: () => (

<NIcon>

<DeleteOutlined />

</NIcon>

)

}}

</NPopconfirm>

</NButton>

)

}}

</NTooltip>

<NTooltip trigger={'hover'}>

{{

default: () => t('project.workflow.gantt'),

trigger: () => (

<NButton

tag='div'

size='tiny'

type='info'

circle

disabled={this.row?.disabled}

onClick={this.handleGantt}

>

<NIcon>

<ControlOutlined />

</NIcon>

</NButton>

)

}}

</NTooltip>

</NSpace>

)

}

})

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,720 | [Bug][UI Next][V1.0.0-Alpha] Workflow instance table action button is too small to click. | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

### What you expected to happen

Workflow instance table action button is too small to click.

### How to reproduce

Make the table button larger.

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8720 | https://github.com/apache/dolphinscheduler/pull/8721 | b0fc6e7a695bd6a20092dc2baf1bacf7e2caba30 | ac18b195ec2c5e2792ab7e4da416a3740745d5b4 | "2022-03-07T02:16:12Z" | java | "2022-03-07T03:11:23Z" | dolphinscheduler-ui-next/src/views/projects/workflow/instance/use-table.ts | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import _ from 'lodash'

import { format } from 'date-fns'

import { reactive, h, ref } from 'vue'

import { useI18n } from 'vue-i18n'

import { useRouter } from 'vue-router'

import type { Router } from 'vue-router'

import { NTooltip, NIcon, NSpin } from 'naive-ui'

import { RowKey } from 'naive-ui/lib/data-table/src/interface'

import {

queryProcessInstanceListPaging,

deleteProcessInstanceById,

batchDeleteProcessInstanceByIds

} from '@/service/modules/process-instances'

import { execute } from '@/service/modules/executors'

import TableAction from './components/table-action'

import { runningType, tasksState } from '@/utils/common'

import { IWorkflowInstance } from '@/service/modules/process-instances/types'

import { ICountDownParam } from './types'

import { ExecuteReq } from '@/service/modules/executors/types'

import { parseTime } from '@/utils/common'

import styles from './index.module.scss'

export function useTable() {

const { t } = useI18n()

const router: Router = useRouter()

const taskStateIcon = tasksState(t)

const variables = reactive({

columns: [],

checkedRowKeys: [] as Array<RowKey>,

tableData: [] as Array<IWorkflowInstance>,

page: ref(1),

pageSize: ref(10),

totalPage: ref(1),

searchVal: ref(),

executorName: ref(),

host: ref(),

stateType: ref(),

startDate: ref(),

endDate: ref(),

projectCode: ref(Number(router.currentRoute.value.params.projectCode))

})

const createColumns = (variables: any) => {

variables.columns = [

{

type: 'selection'

},

{

title: '#',

key: 'id',

width: 50,

render: (rowData: any, rowIndex: number) => rowIndex + 1

},

{

title: t('project.workflow.workflow_name'),

key: 'name',

width: 200,

render: (_row: IWorkflowInstance) =>

h(

'a',

{

href: 'javascript:',

class: styles.links,

onClick: () =>

router.push({

name: 'workflow-instance-detail',

params: { id: _row.id },

query: { code: _row.processDefinitionCode }

})

},

{

default: () => {

return _row.name

}

}

)

},

{

title: t('project.workflow.status'),

key: 'state',

render: (_row: IWorkflowInstance) => {

const stateIcon = taskStateIcon[_row.state]

const iconElement = h(

NIcon,

{

size: '18px',

style: 'position: relative; top: 7.5px; left: 7.5px'

},

{

default: () =>

h(stateIcon.icon, {

color: stateIcon.color

})

}

)

return h(

NTooltip,

{},

{

trigger: () => {

if (stateIcon.isSpin) {

return h(

NSpin,

{

small: 'small'

},

{

icon: () => iconElement

}

)

} else {

return iconElement

}

},

default: () => stateIcon!.desc

}

)

}

},

{

title: t('project.workflow.run_type'),

key: 'commandType',

render: (_row: IWorkflowInstance) =>

(

_.filter(runningType(t), (v) => v.code === _row.commandType)[0] ||

{}

).desc

},

{

title: t('project.workflow.scheduling_time'),

key: 'scheduleTime',

render: (_row: IWorkflowInstance) =>

_row.scheduleTime

? format(parseTime(_row.scheduleTime), 'yyyy-MM-dd HH:mm:ss')

: '-'

},

{

title: t('project.workflow.start_time'),

key: 'startTime',

render: (_row: IWorkflowInstance) =>

_row.startTime

? format(parseTime(_row.startTime), 'yyyy-MM-dd HH:mm:ss')

: '-'

},

{

title: t('project.workflow.end_time'),

key: 'endTime',

render: (_row: IWorkflowInstance) =>

_row.endTime

? format(parseTime(_row.endTime), 'yyyy-MM-dd HH:mm:ss')

: '-'

},

{

title: t('project.workflow.duration'),

key: 'duration',

render: (_row: IWorkflowInstance) => _row.duration || '-'

},

{

title: t('project.workflow.run_times'),

key: 'runTimes'

},

{

title: t('project.workflow.fault_tolerant_sign'),

key: 'recovery'

},

{

title: t('project.workflow.dry_run_flag'),

key: 'dryRun',

render: (_row: IWorkflowInstance) => (_row.dryRun === 1 ? 'YES' : 'NO')

},

{

title: t('project.workflow.executor'),

key: 'executorName'

},

{

title: t('project.workflow.host'),

key: 'host'

},

{

title: t('project.workflow.operation'),

key: 'operation',

width: 220,

fixed: 'right',

className: styles.operation,

render: (_row: IWorkflowInstance, index: number) =>

h(TableAction, {

row: _row,

onReRun: () =>

_countDownFn({

index,

processInstanceId: _row.id,

executeType: 'REPEAT_RUNNING',

buttonType: 'run'

}),

onReStore: () =>

_countDownFn({

index,

processInstanceId: _row.id,

executeType: 'START_FAILURE_TASK_PROCESS',

buttonType: 'store'

}),

onStop: () => {

if (_row.state === 'STOP') {

_countDownFn({

index,

processInstanceId: _row.id,

executeType: 'RECOVER_SUSPENDED_PROCESS',

buttonType: 'suspend'

})

} else {

_upExecutorsState({

processInstanceId: _row.id,

executeType: 'STOP'

})

}

},

onSuspend: () => {

if (_row.state === 'PAUSE') {

_countDownFn({

index,

processInstanceId: _row.id,

executeType: 'RECOVER_SUSPENDED_PROCESS',

buttonType: 'suspend'

})

} else {

_upExecutorsState({

processInstanceId: _row.id,

executeType: 'PAUSE'

})

}

},

onDeleteInstance: () => deleteInstance(_row.id)

})

}

]

}

const getTableData = () => {

const params = {

pageNo: variables.page,

pageSize: variables.pageSize,

searchVal: variables.searchVal,

executorName: variables.executorName,

host: variables.host,

stateType: variables.stateType,

startDate: variables.startDate,

endDate: variables.endDate

}

queryProcessInstanceListPaging({ ...params }, variables.projectCode).then(

(res: any) => {

variables.totalPage = res.totalPage

variables.tableData = res.totalList.map((item: any) => {

return { ...item }

})

}

)

}

const deleteInstance = (id: number) => {

deleteProcessInstanceById(id, variables.projectCode)

.then(() => {

window.$message.success(t('project.workflow.success'))

if (variables.tableData.length === 1 && variables.page > 1) {

variables.page -= 1

}

getTableData()

})

.catch((error: any) => {

window.$message.error(error.message || '')

getTableData()

})

}

const batchDeleteInstance = () => {

const data = {

processInstanceIds: _.join(variables.checkedRowKeys, ',')

}

batchDeleteProcessInstanceByIds(data, variables.projectCode)

.then(() => {

window.$message.success(t('project.workflow.success'))

if (

variables.tableData.length === variables.checkedRowKeys.length &&

variables.page > 1

) {

variables.page -= 1

}

variables.checkedRowKeys = []

getTableData()

})

.catch((error: any) => {

window.$message.error(error.message || '')

getTableData()

})

}

/**

* operating

*/

const _upExecutorsState = (param: ExecuteReq) => {

execute(param, variables.projectCode)

.then(() => {

window.$message.success(t('project.workflow.success'))

getTableData()

})

.catch((error: any) => {

window.$message.error(error.message || '')

getTableData()

})

}

/**

* Countdown

*/

const _countDown = (fn: any, index: number) => {

const TIME_COUNT = 10

let timer: number | undefined

let $count: number

if (!timer) {

$count = TIME_COUNT

timer = setInterval(() => {

if ($count > 0 && $count <= TIME_COUNT) {

$count--

variables.tableData[index].count = $count

} else {

fn()

clearInterval(timer)

timer = undefined

}

}, 1000)

}

}

/**

* Countdown method refresh

*/

const _countDownFn = (param: ICountDownParam) => {

const { index } = param

variables.tableData[index].buttonType = param.buttonType

execute(param, variables.projectCode)

.then(() => {

variables.tableData[index].disabled = true

window.$message.success(t('project.workflow.success'))

_countDown(() => {

getTableData()

}, index)

})

.catch((error: any) => {

window.$message.error(error.message || '')

getTableData()

})

}

return {

variables,

createColumns,

getTableData,

batchDeleteInstance

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,722 | [bug][UI Next][V1.0.0-Alpha] An error page | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

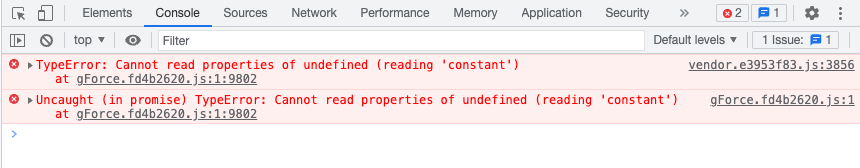

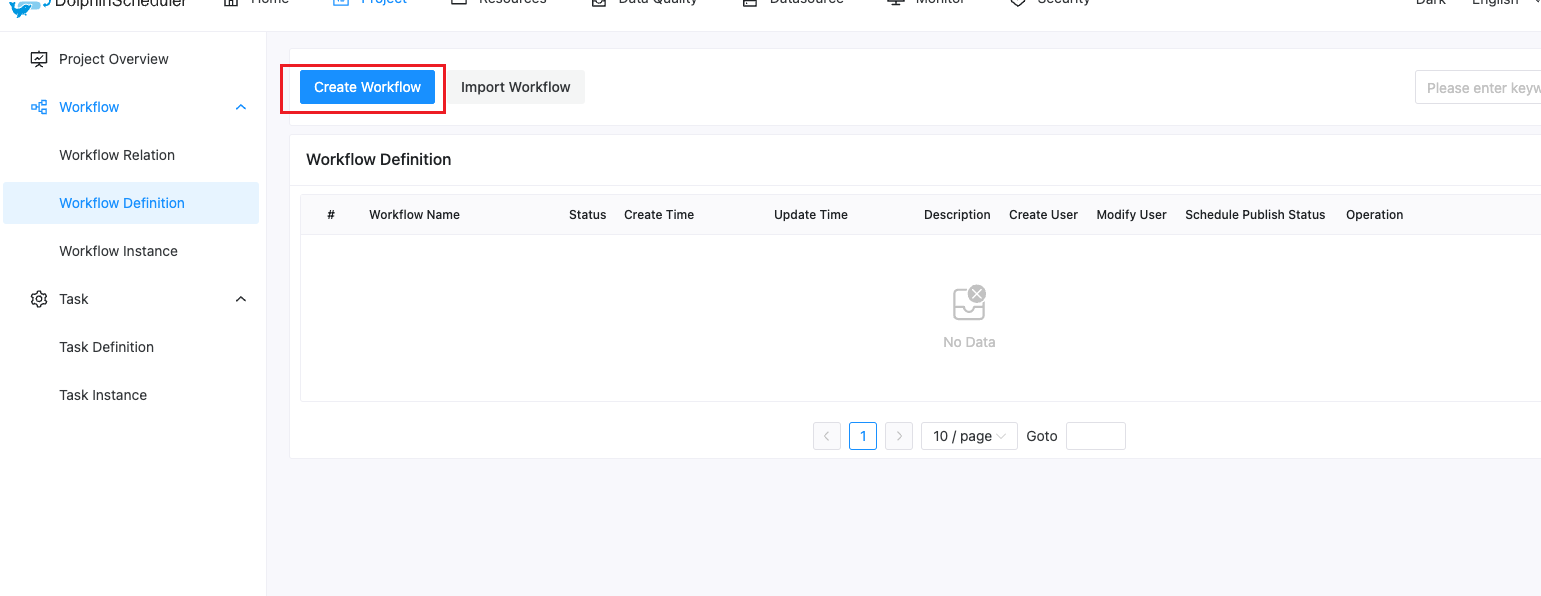

An error page

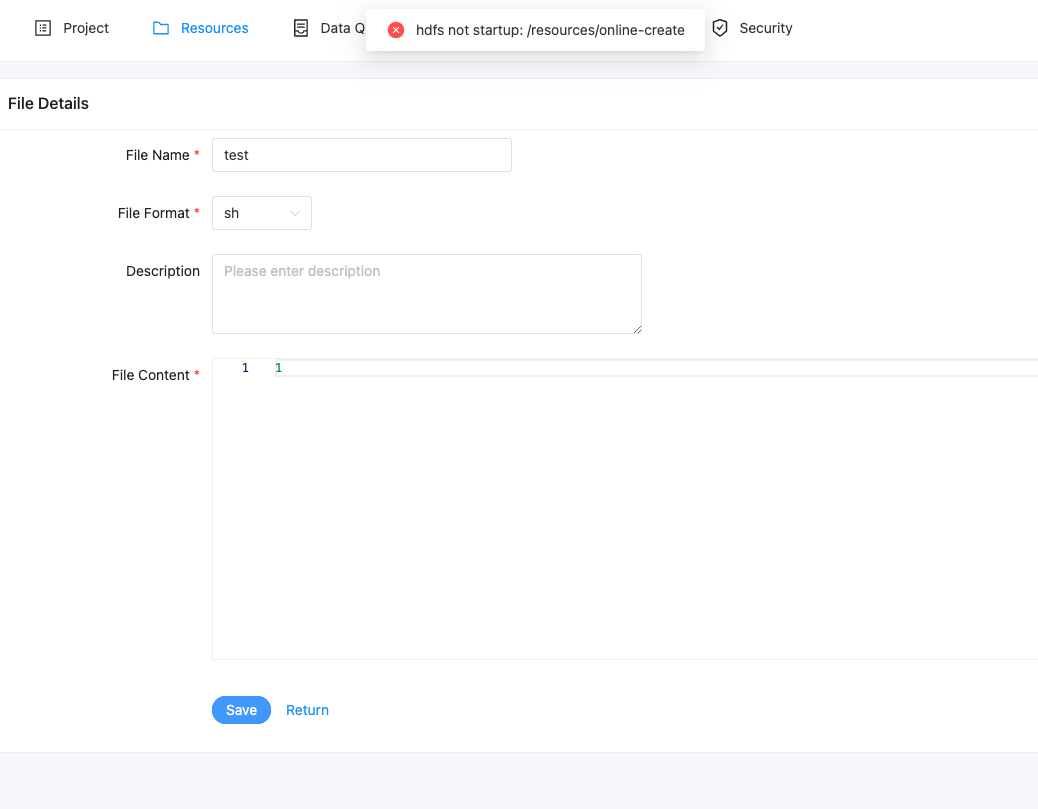

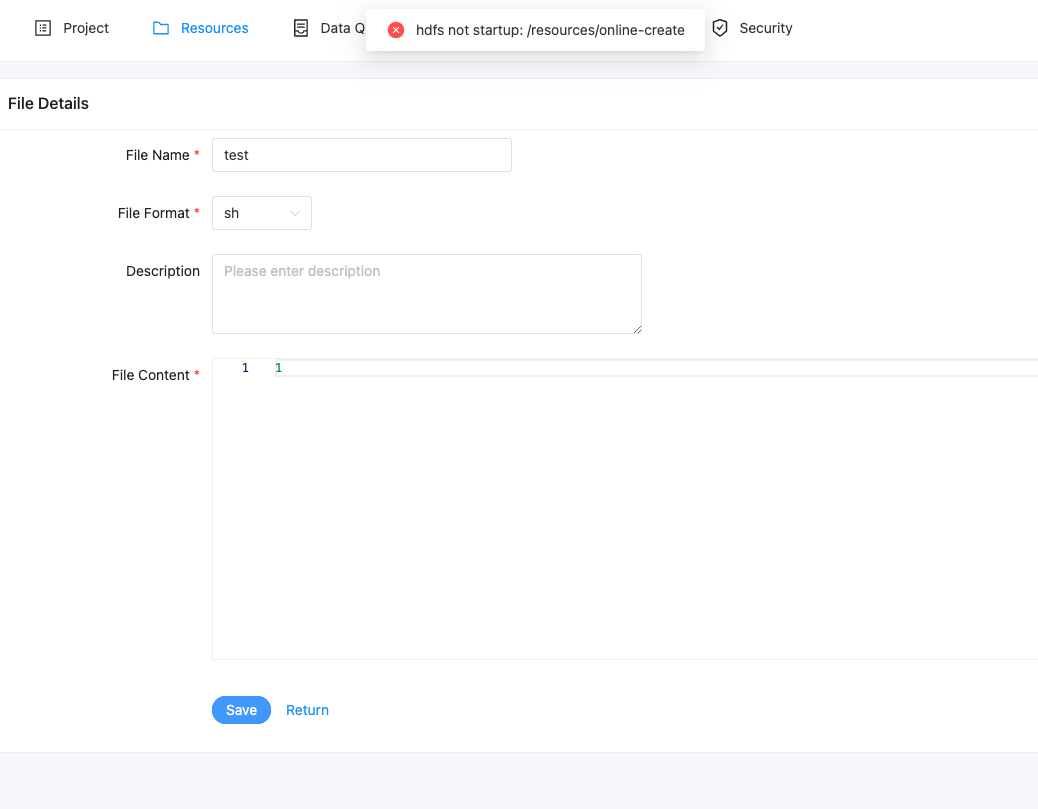

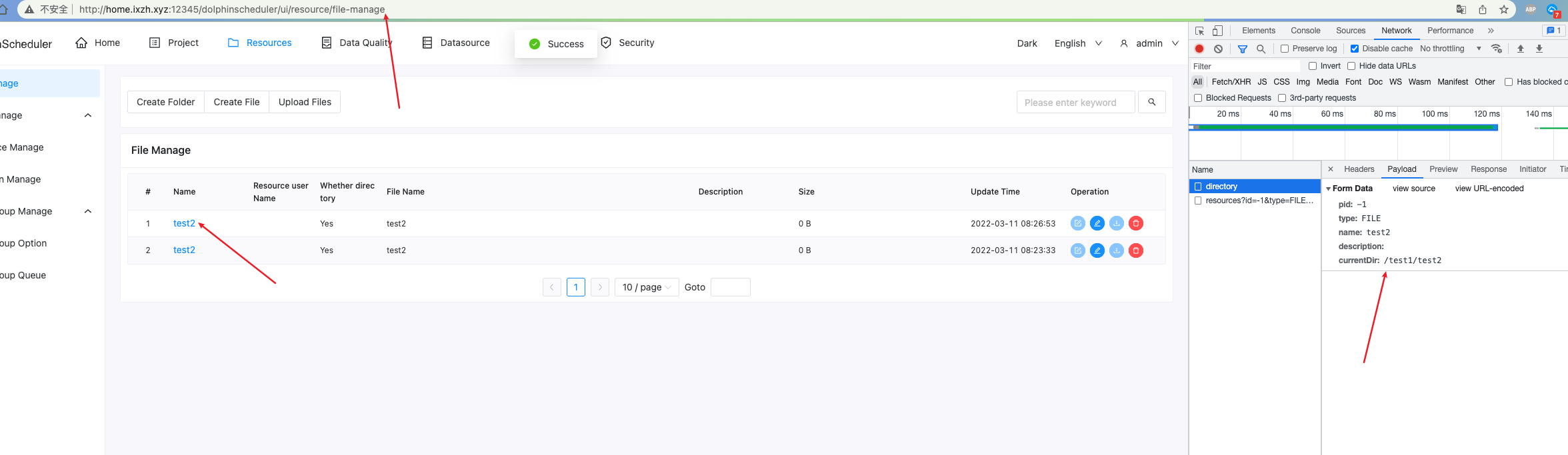

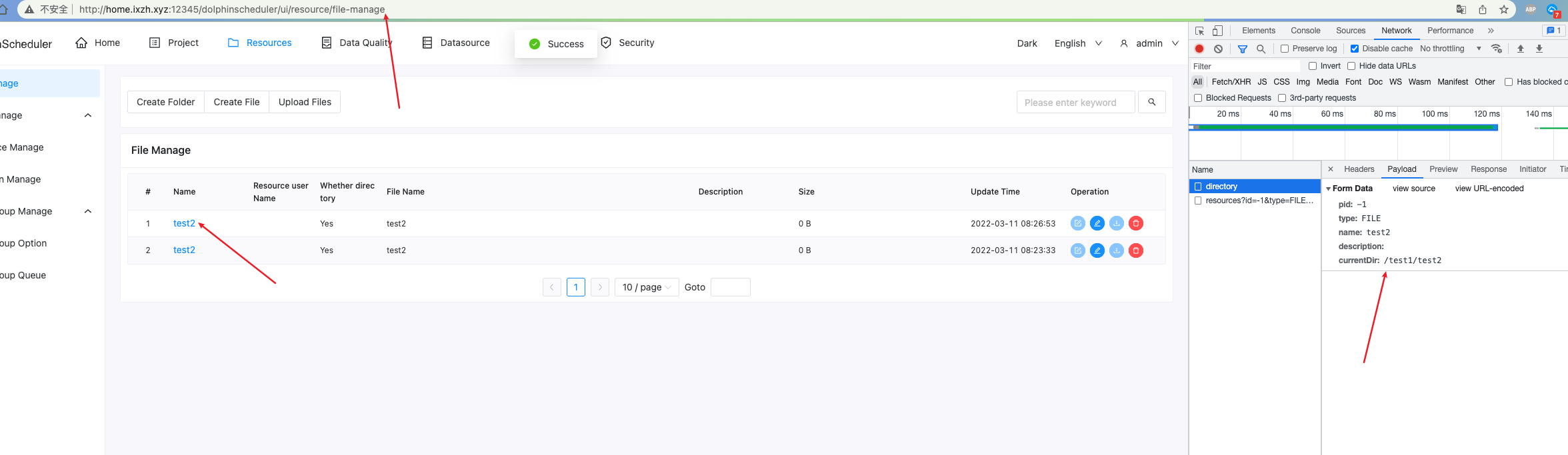

<img width="1235" alt="image" src="https://user-images.githubusercontent.com/76080484/156958639-10e7e382-491a-4587-9183-4d2750c65854.png">

<img width="1123" alt="image" src="https://user-images.githubusercontent.com/76080484/156958683-59f9d8dc-60fc-4612-8c80-fcfc0629c2b1.png">

### What you expected to happen

Normal use

### How to reproduce

Open the page

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8722 | https://github.com/apache/dolphinscheduler/pull/8731 | 5c640789c3dacac3fee3555ad601ac09d6bee099 | 63e85f314d2a60e6e43480c8ef9897adf64a899f | "2022-03-07T02:43:09Z" | java | "2022-03-07T07:43:59Z" | dolphinscheduler-ui-next/src/locales/index.ts | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import { createI18n } from 'vue-i18n'

import zh_CN from './modules/zh_CN'

import en_US from './modules/en_US'

const i18n = createI18n({

globalInjection: true,

locale: 'zh_CN',

messages: {

zh_CN,

en_US

}

})

export default i18n

|

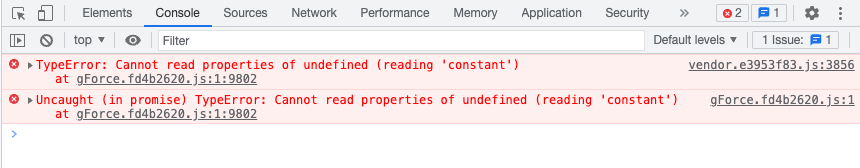

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,690 | [Bug][UI Next][V1.0.0-Alpha] Workflow execution error | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

Workflow execution error

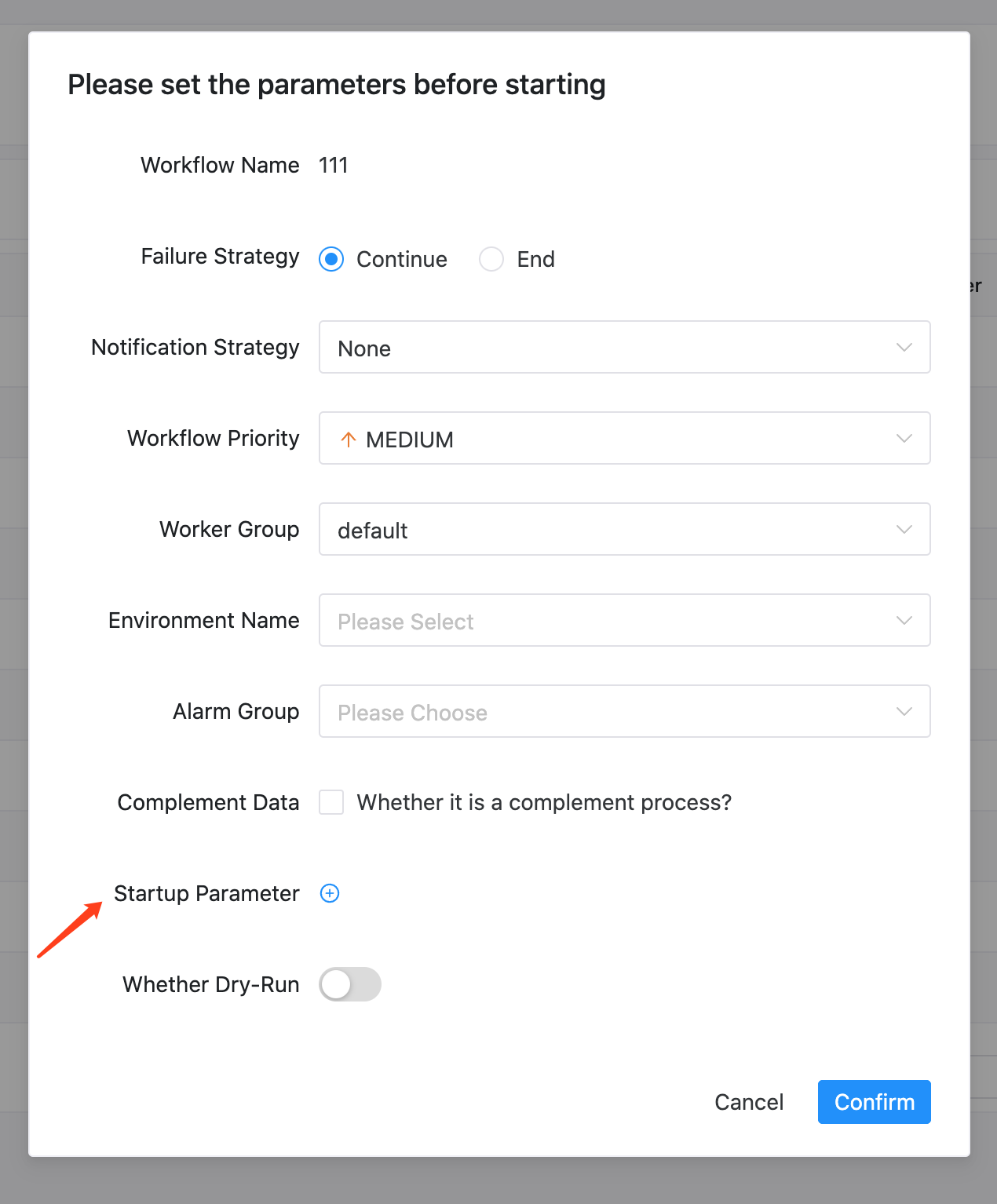

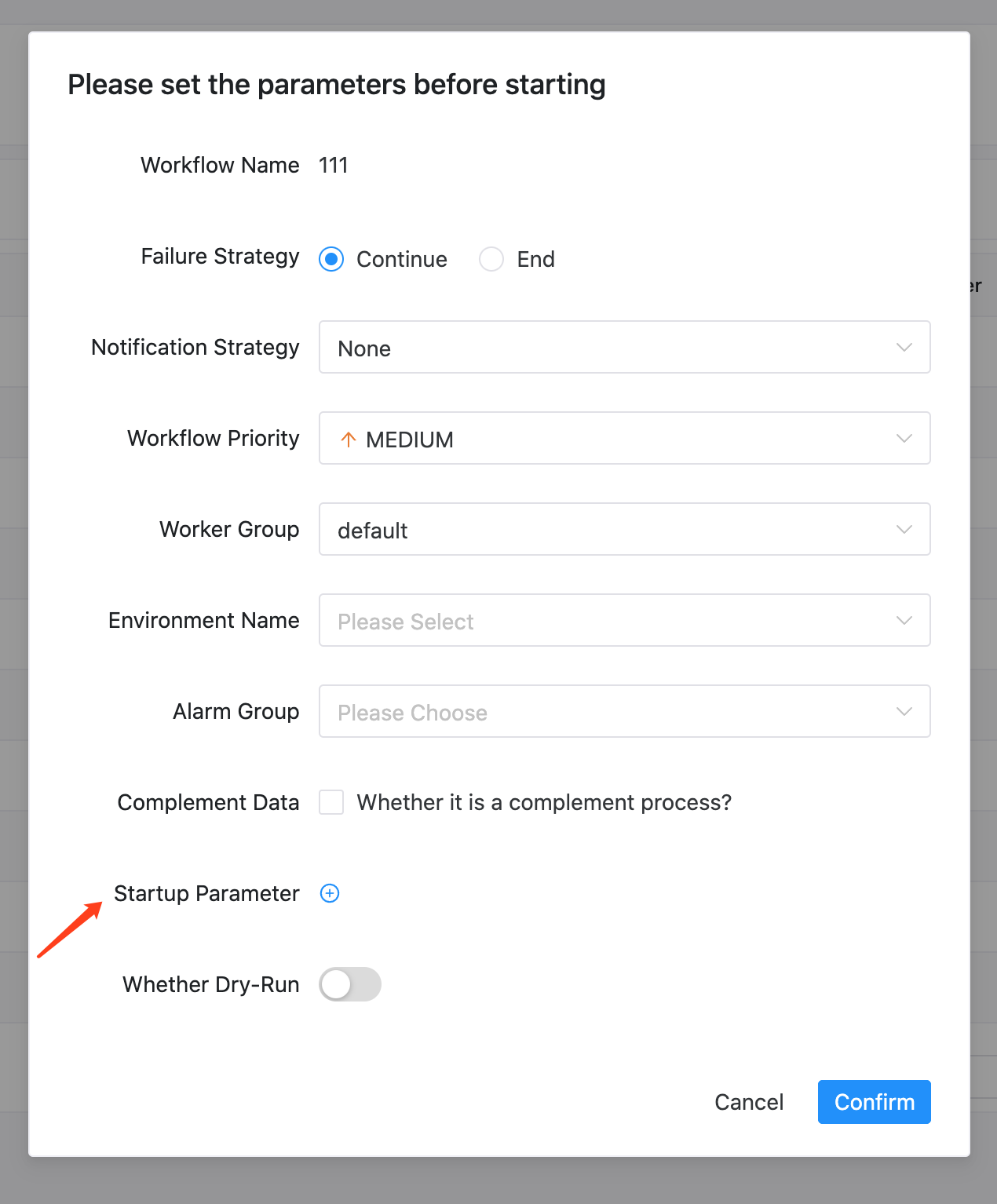

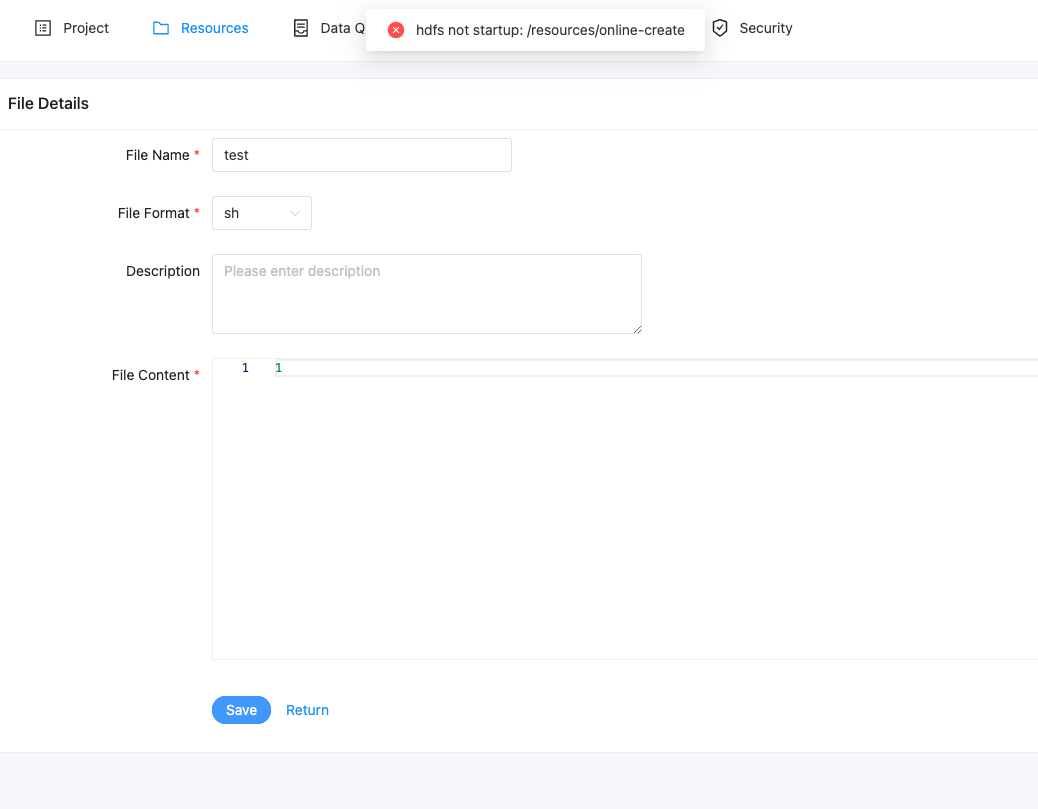

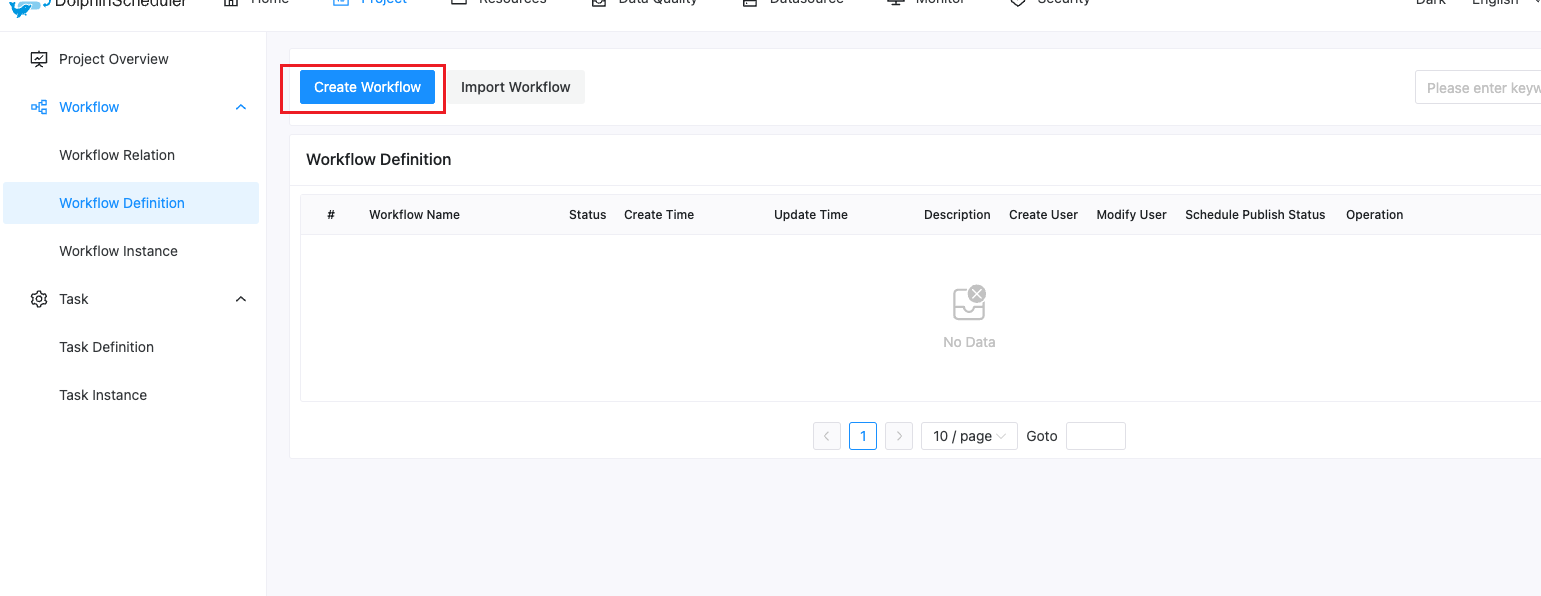

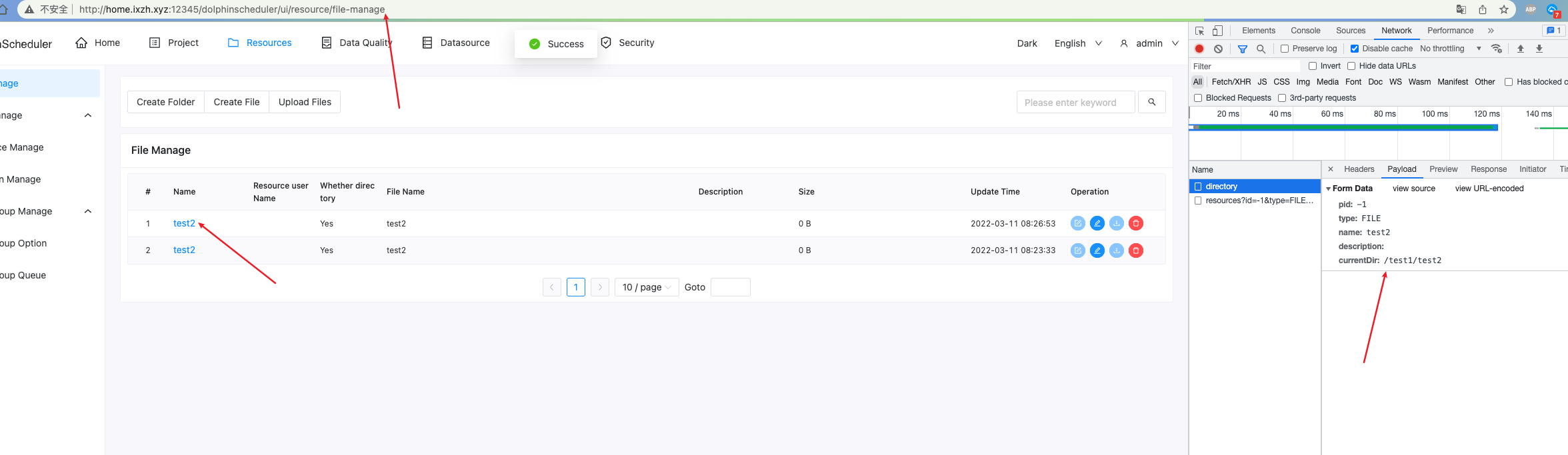

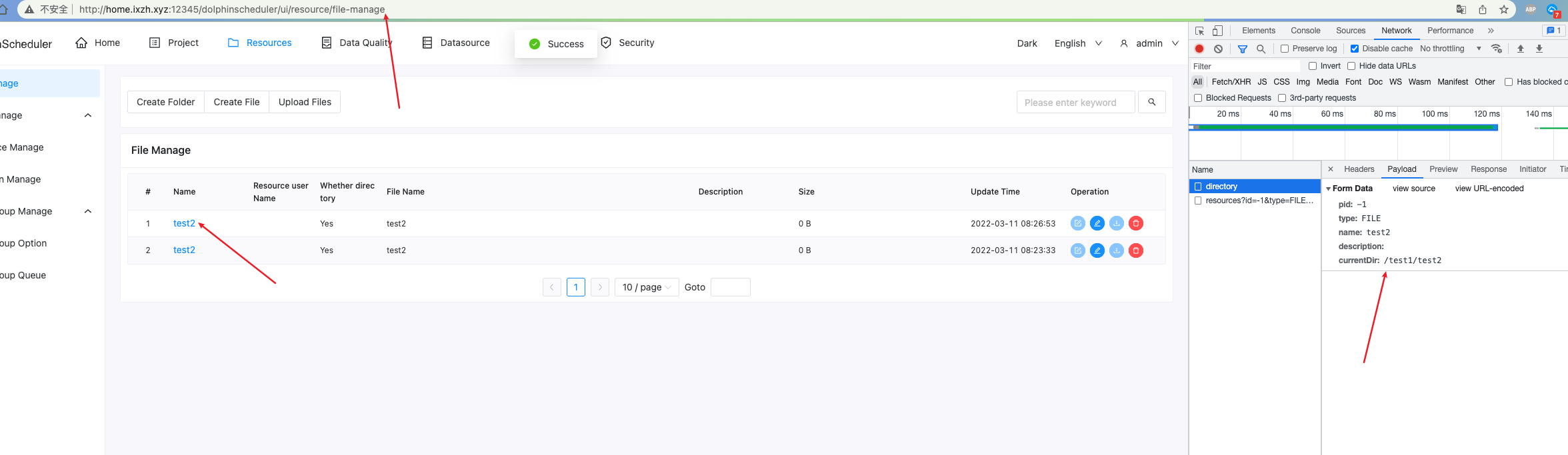

<img width="1571" alt="image" src="https://user-images.githubusercontent.com/76080484/156691383-e0cdcb28-94cf-41a2-bfad-8e5587c99dab.png">

If the `execType` field is not false, the request fails; otherwise it succeeds.

<img width="1533" alt="image" src="https://user-images.githubusercontent.com/76080484/156691502-16627d54-1110-42da-88d9-6ba15c095f76.png">

path:/executors/start-process-instance

<img width="997" alt="image" src="https://user-images.githubusercontent.com/76080484/156691724-8089dfe4-af2b-4d4c-8b11-b914a2c21f3e.png">
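
One reading of this report is that the start form sends `execType` even when it holds an empty placeholder value. The sketch below shows how a payload could drop the field unless it carries a real value. `StartForm` and `buildStartParams` are hypothetical names used for illustration only; this is not the fix from the linked pull request.

```typescript
// Hypothetical subset of the start form; only the fields needed here.
interface StartForm {
  processDefinitionCode: number
  failureStrategy: string
  execType?: string | null
}

// Copy the form and keep execType only when it is a non-empty string.
function buildStartParams(form: StartForm): Record<string, unknown> {
  const { execType, ...rest } = form
  const params: Record<string, unknown> = { ...rest }
  if (execType) {
    params.execType = execType
  }
  return params
}
```

With this shape, an unchecked complement box (empty `execType`) produces a payload without the field, while a checked one passes `COMPLEMENT_DATA` through unchanged.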

### What you expected to happen

normal operation

### How to reproduce

Click Run workflow

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8690 | https://github.com/apache/dolphinscheduler/pull/8734 | c51f2e4a7cdfca19cc0477871c9a589b684354f9 | e34f6fc807900c60cdc9bb5dff96701a94e8d17c | "2022-03-04T03:16:24Z" | java | "2022-03-07T09:04:37Z" | dolphinscheduler-ui-next/src/views/projects/workflow/definition/components/start-modal.tsx | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import { defineComponent, PropType, toRefs, h, onMounted, ref } from 'vue'

import { useI18n } from 'vue-i18n'

import Modal from '@/components/modal'

import { useForm } from './use-form'

import { useModal } from './use-modal'

import {

NForm,

NFormItem,

NButton,

NIcon,

NInput,

NSpace,

NRadio,

NRadioGroup,

NSelect,

NSwitch,

NCheckbox,

NDatePicker

} from 'naive-ui'

import {

ArrowDownOutlined,

ArrowUpOutlined,

DeleteOutlined,

PlusCircleOutlined

} from '@vicons/antd'

import { IDefinitionData } from '../types'

import styles from '../index.module.scss'

const props = {

row: {

type: Object as PropType<IDefinitionData>,

default: () => ({})

},

show: {

type: Boolean as PropType<boolean>,

default: false

}

}

export default defineComponent({

name: 'workflowDefinitionStart',

props,

emits: ['update:show', 'update:row', 'updateList'],

setup(props, ctx) {

const parallelismRef = ref(false)

const { t } = useI18n()

const { startState } = useForm()

const {

variables,

handleStartDefinition,

getWorkerGroups,

getAlertGroups,

getEnvironmentList

} = useModal(startState, ctx)

const hideModal = () => {

ctx.emit('update:show')

}

const handleStart = () => {

handleStartDefinition(props.row.code)

}

const generalWarningTypeListOptions = () => [

{

value: 'NONE',

label: t('project.workflow.none_send')

},

{

value: 'SUCCESS',

label: t('project.workflow.success_send')

},

{

value: 'FAILURE',

label: t('project.workflow.failure_send')

},

{

value: 'ALL',

label: t('project.workflow.all_send')

}

]

const generalPriorityList = () => [

{

value: 'HIGHEST',

label: 'HIGHEST',

color: '#ff0000',

icon: ArrowUpOutlined

},

{

value: 'HIGH',

label: 'HIGH',

color: '#ff0000',

icon: ArrowUpOutlined

},

{

value: 'MEDIUM',

label: 'MEDIUM',

color: '#EA7D24',

icon: ArrowUpOutlined

},

{

value: 'LOW',

label: 'LOW',

color: '#2A8734',

icon: ArrowDownOutlined

},

{

value: 'LOWEST',

label: 'LOWEST',

color: '#2A8734',

icon: ArrowDownOutlined

}

]

const renderLabel = (option: any) => {

return [

h(

NIcon,

{

style: {

verticalAlign: 'middle',

marginRight: '4px',

marginBottom: '3px'

},

color: option.color

},

{

default: () => h(option.icon)

}

),

option.label

]

}

const updateWorkerGroup = () => {

startState.startForm.environmentCode = null

}

const addStartParams = () => {

variables.startParamsList.push({

prop: '',

value: ''

})

}

const updateParamsList = (index: number, param: Array<string>) => {

variables.startParamsList[index].prop = param[0]

variables.startParamsList[index].value = param[1]

}

const removeStartParams = (index: number) => {

variables.startParamsList.splice(index, 1)

}

onMounted(() => {

getWorkerGroups()

getAlertGroups()

getEnvironmentList()

})

return {

t,

parallelismRef,

hideModal,

handleStart,

generalWarningTypeListOptions,

generalPriorityList,

renderLabel,

updateWorkerGroup,

removeStartParams,

addStartParams,

updateParamsList,

...toRefs(variables),

...toRefs(startState),

...toRefs(props)

}

},

render() {

const { t } = this

return (

<Modal

show={this.show}

title={t('project.workflow.set_parameters_before_starting')}

onCancel={this.hideModal}

onConfirm={this.handleStart}

>

<NForm ref='startFormRef' label-placement='left' label-width='160'>

<NFormItem

label={t('project.workflow.workflow_name')}

path='workflow_name'

>

{this.row.name}

</NFormItem>

<NFormItem

label={t('project.workflow.failure_strategy')}

path='failureStrategy'

>

<NRadioGroup v-model:value={this.startForm.failureStrategy}>

<NSpace>

<NRadio value='CONTINUE'>

{t('project.workflow.continue')}

</NRadio>

<NRadio value='END'>{t('project.workflow.end')}</NRadio>

</NSpace>

</NRadioGroup>

</NFormItem>

<NFormItem

label={t('project.workflow.notification_strategy')}

path='warningType'

>

<NSelect

options={this.generalWarningTypeListOptions()}

v-model:value={this.startForm.warningType}

/>

</NFormItem>

<NFormItem

label={t('project.workflow.workflow_priority')}

path='processInstancePriority'

>

<NSelect

options={this.generalPriorityList()}

renderLabel={this.renderLabel}

v-model:value={this.startForm.processInstancePriority}

/>

</NFormItem>

<NFormItem

label={t('project.workflow.worker_group')}

path='workerGroup'

>

<NSelect

options={this.workerGroups}

onUpdateValue={this.updateWorkerGroup}

v-model:value={this.startForm.workerGroup}

/>

</NFormItem>

<NFormItem

label={t('project.workflow.environment_name')}

path='environmentCode'

>

<NSelect

options={this.environmentList.filter((item: any) =>

item.workerGroups?.includes(this.startForm.workerGroup)

)}

v-model:value={this.startForm.environmentCode}

clearable

/>

</NFormItem>

<NFormItem

label={t('project.workflow.alarm_group')}

path='warningGroupId'

>

<NSelect

options={this.alertGroups}

placeholder={t('project.workflow.please_choose')}

v-model:value={this.startForm.warningGroupId}

clearable

/>

</NFormItem>

<NFormItem

label={t('project.workflow.complement_data')}

path='complement_data'

>

<NCheckbox

checkedValue={'COMPLEMENT_DATA'}

uncheckedValue={undefined}

v-model:checked={this.startForm.execType}

>

{t('project.workflow.whether_complement_data')}

</NCheckbox>

</NFormItem>

{this.startForm.execType && (

<NSpace>

<NFormItem

label={t('project.workflow.mode_of_execution')}

path='runMode'

>

<NRadioGroup v-model:value={this.startForm.runMode}>

<NSpace>

<NRadio value={'RUN_MODE_SERIAL'}>

{t('project.workflow.serial_execution')}

</NRadio>

<NRadio value={'RUN_MODE_PARALLEL'}>

{t('project.workflow.parallel_execution')}

</NRadio>

</NSpace>

</NRadioGroup>

</NFormItem>

{this.startForm.runMode === 'RUN_MODE_PARALLEL' && (

<NFormItem

label={t('project.workflow.parallelism')}

path='expectedParallelismNumber'

>

<NCheckbox v-model:checked={this.parallelismRef}>

{t('project.workflow.custom_parallelism')}

</NCheckbox>

<NInput

disabled={!this.parallelismRef}

placeholder={t('project.workflow.please_enter_parallelism')}

v-model:value={this.startForm.expectedParallelismNumber}

/>

</NFormItem>

)}

<NFormItem

label={t('project.workflow.schedule_date')}

path='startEndTime'

>

<NDatePicker

type='datetimerange'

clearable

v-model:value={this.startForm.startEndTime}

/>

</NFormItem>

</NSpace>

)}

<NFormItem

label={t('project.workflow.startup_parameter')}

path='startup_parameter'

>

{this.startParamsList.length === 0 ? (

<NButton text type='primary' onClick={this.addStartParams}>

<NIcon>

<PlusCircleOutlined />

</NIcon>

</NButton>

) : (

<NSpace vertical>

{this.startParamsList.map((item, index) => (

<NSpace class={styles.startup} key={index}>

<NInput

pair

separator=':'

placeholder={['prop', 'value']}

onUpdateValue={(param) =>

this.updateParamsList(index, param)

}

/>

<NButton

text

type='error'

onClick={() => this.removeStartParams(index)}

>

<NIcon>

<DeleteOutlined />

</NIcon>

</NButton>

<NButton text type='primary' onClick={this.addStartParams}>

<NIcon>

<PlusCircleOutlined />

</NIcon>

</NButton>

</NSpace>

))}

</NSpace>

)}

</NFormItem>

<NFormItem

label={t('project.workflow.whether_dry_run')}

path='dryRun'

>

<NSwitch

checkedValue={1}

uncheckedValue={0}

v-model:value={this.startForm.dryRun}

/>

</NFormItem>

</NForm>

</Modal>

)

}

})

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,690 | [Bug][UI Next][V1.0.0-Alpha] Workflow execution error | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar issues.

### What happened

Workflow execution error

<img width="1571" alt="image" src="https://user-images.githubusercontent.com/76080484/156691383-e0cdcb28-94cf-41a2-bfad-8e5587c99dab.png">

If the `execType` field is not false, the request fails; otherwise it succeeds.

<img width="1533" alt="image" src="https://user-images.githubusercontent.com/76080484/156691502-16627d54-1110-42da-88d9-6ba15c095f76.png">

path:/executors/start-process-instance

<img width="997" alt="image" src="https://user-images.githubusercontent.com/76080484/156691724-8089dfe4-af2b-4d4c-8b11-b914a2c21f3e.png">

### What you expected to happen

normal operation

### How to reproduce

Click Run workflow

### Anything else

_No response_

### Version

dev

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8690 | https://github.com/apache/dolphinscheduler/pull/8734 | c51f2e4a7cdfca19cc0477871c9a589b684354f9 | e34f6fc807900c60cdc9bb5dff96701a94e8d17c | "2022-03-04T03:16:24Z" | java | "2022-03-07T09:04:37Z" | dolphinscheduler-ui-next/src/views/projects/workflow/definition/components/use-form.ts | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

import { reactive, ref } from 'vue'

import { useI18n } from 'vue-i18n'

import type { FormRules } from 'naive-ui'

export const useForm = () => {

const { t } = useI18n()

const date = new Date()

const year = date.getFullYear()

const month = date.getMonth()

const day = date.getDate()

const importState = reactive({

importFormRef: ref(),

importForm: {

name: '',

file: ''

},

importRules: {

file: {

required: true,

trigger: ['input', 'blur'],

validator() {

if (importState.importForm.name === '') {

return new Error(t('project.workflow.enter_name_tips'))

}

}

}

} as FormRules

})

const startState = reactive({

startFormRef: ref(),

startForm: {

processDefinitionCode: -1,

startEndTime: [new Date(year, month, day), new Date(year, month, day)],

scheduleTime: null,

failureStrategy: 'CONTINUE',

warningType: 'NONE',

warningGroupId: null,

execType: '',

startNodeList: '',

taskDependType: 'TASK_POST',

runMode: 'RUN_MODE_SERIAL',

processInstancePriority: 'MEDIUM',

workerGroup: 'default',

environmentCode: null,

startParams: null,

expectedParallelismNumber: '',

dryRun: 0

}

})

const timingState = reactive({

timingFormRef: ref(),

timingForm: {

startEndTime: [

new Date(year, month, day),

new Date(year + 100, month, day)

],

crontab: '0 0 * * * ? *',

timezoneId: Intl.DateTimeFormat().resolvedOptions().timeZone,

failureStrategy: 'CONTINUE',

warningType: 'NONE',

processInstancePriority: 'MEDIUM',

warningGroupId: '',

workerGroup: 'default',

environmentCode: null

}

})

return {

importState,

startState,

timingState

}

}

|

closed | apache/dolphinscheduler | https://github.com/apache/dolphinscheduler | 8,497 | [Feature][UI Next][V1.0.0-Alpha] Dependent tasks can re-run automatically in the case of complement | ### Search before asking

- [X] I had searched in the [issues](https://github.com/apache/dolphinscheduler/issues?q=is%3Aissue) and found no similar feature requirement.

### Description

Add a select control that switches the complement of dependent processes on or off.

Api:

Add param ```complementDependentMode``` in ```projects/{projectCode}/executors/start-process-instance```

Enum:

1. OFF_MODE (default, not required)

2. ALL_DEPENDENT
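
As a rough sketch of how the UI might pass this parameter, the snippet below models the enum as a string union and adds the field only when the switch is on, since `OFF_MODE` is the default and not required. `StartParams` and `withComplementDependentMode` are assumed names for illustration, not part of the existing code.

```typescript
// Values taken from the enum listed in this issue.
type ComplementDependentMode = 'OFF_MODE' | 'ALL_DEPENDENT'

// Hypothetical subset of the start-process-instance parameters.
interface StartParams {
  processDefinitionCode: number
  complementDependentMode?: ComplementDependentMode
}

// Return a copy of the params; set ALL_DEPENDENT only when requested,
// otherwise leave the field as it was so the server default applies.
function withComplementDependentMode(
  params: StartParams,
  rerunDependents: boolean
): StartParams {
  if (!rerunDependents) {
    return { ...params }
  }
  return { ...params, complementDependentMode: 'ALL_DEPENDENT' }
}
```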

### Use case

#8373

### Related issues

#8373

### Are you willing to submit a PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

| https://github.com/apache/dolphinscheduler/issues/8497 | https://github.com/apache/dolphinscheduler/pull/8739 | 1d7ee2c5c444b538f3606e0ba4b22d64f0c2686d | aa5392529bb8d2ba7b4b73a9527adf713f8884c8 | "2022-02-23T04:41:14Z" | java | "2022-03-07T10:05:58Z" | dolphinscheduler-ui-next/src/locales/modules/en_US.ts | /*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

const login = {

test: 'Test',

userName: 'Username',

userName_tips: 'Please enter your username',

userPassword: 'Password',

userPassword_tips: 'Please enter your password',

login: 'Login'

}

const modal = {

cancel: 'Cancel',

confirm: 'Confirm'

}

const theme = {

light: 'Light',

dark: 'Dark'

}

const userDropdown = {

profile: 'Profile',

password: 'Password',

logout: 'Logout'

}

const menu = {

home: 'Home',

project: 'Project',

resources: 'Resources',

datasource: 'Datasource',

monitor: 'Monitor',

security: 'Security',

project_overview: 'Project Overview',

workflow_relation: 'Workflow Relation',

workflow: 'Workflow',

workflow_definition: 'Workflow Definition',

workflow_instance: 'Workflow Instance',

task: 'Task',

task_instance: 'Task Instance',

task_definition: 'Task Definition',

file_manage: 'File Manage',

udf_manage: 'UDF Manage',

resource_manage: 'Resource Manage',

function_manage: 'Function Manage',

service_manage: 'Service Manage',

master: 'Master',

worker: 'Worker',

db: 'DB',

statistical_manage: 'Statistical Manage',

statistics: 'Statistics',

audit_log: 'Audit Log',

tenant_manage: 'Tenant Manage',

user_manage: 'User Manage',

alarm_group_manage: 'Alarm Group Manage',

alarm_instance_manage: 'Alarm Instance Manage',

worker_group_manage: 'Worker Group Manage',

yarn_queue_manage: 'Yarn Queue Manage',

environment_manage: 'Environment Manage',

k8s_namespace_manage: 'K8S Namespace Manage',

token_manage: 'Token Manage',

task_group_manage: 'Task Group Manage',

task_group_option: 'Task Group Option',

task_group_queue: 'Task Group Queue',

data_quality: 'Data Quality',

task_result: 'Task Result',

rule: 'Rule management'

}

const home = {

task_state_statistics: 'Task State Statistics',

process_state_statistics: 'Process State Statistics',

process_definition_statistics: 'Process Definition Statistics',

number: 'Number',

state: 'State',

submitted_success: 'SUBMITTED_SUCCESS',

running_execution: 'RUNNING_EXECUTION',

ready_pause: 'READY_PAUSE',

pause: 'PAUSE',

ready_stop: 'READY_STOP',

stop: 'STOP',

failure: 'FAILURE',

success: 'SUCCESS',

need_fault_tolerance: 'NEED_FAULT_TOLERANCE',

kill: 'KILL',

waiting_thread: 'WAITING_THREAD',

waiting_depend: 'WAITING_DEPEND',

delay_execution: 'DELAY_EXECUTION',

forced_success: 'FORCED_SUCCESS',

serial_wait: 'SERIAL_WAIT'

}

const password = {

edit_password: 'Edit Password',

password: 'Password',

confirm_password: 'Confirm Password',

password_tips: 'Please enter your password',

confirm_password_tips: 'Please enter your confirm password',

two_password_entries_are_inconsistent:

'Two password entries are inconsistent',

submit: 'Submit'

}

const profile = {

profile: 'Profile',

edit: 'Edit',

username: 'Username',

email: 'Email',

phone: 'Phone',

state: 'State',

permission: 'Permission',

create_time: 'Create Time',

update_time: 'Update Time',

administrator: 'Administrator',

ordinary_user: 'Ordinary User',

edit_profile: 'Edit Profile',

username_tips: 'Please enter your username',

email_tips: 'Please enter your email',

email_correct_tips: 'Please enter your email in the correct format',

phone_tips: 'Please enter your phone',

state_tips: 'Please choose your state',

enable: 'Enable',

disable: 'Disable'

}

const monitor = {

master: {

cpu_usage: 'CPU Usage',

memory_usage: 'Memory Usage',

load_average: 'Load Average',

create_time: 'Create Time',

last_heartbeat_time: 'Last Heartbeat Time',

directory_detail: 'Directory Detail',

host: 'Host',

directory: 'Directory'

},

worker: {

cpu_usage: 'CPU Usage',

memory_usage: 'Memory Usage',

load_average: 'Load Average',

create_time: 'Create Time',

last_heartbeat_time: 'Last Heartbeat Time',

directory_detail: 'Directory Detail',

host: 'Host',

directory: 'Directory'

},

db: {

health_state: 'Health State',

max_connections: 'Max Connections',

threads_connections: 'Threads Connections',

threads_running_connections: 'Threads Running Connections'

},

statistics: {

command_number_of_waiting_for_running:

'Command Number Of Waiting For Running',

failure_command_number: 'Failure Command Number',

tasks_number_of_waiting_running: 'Tasks Number Of Waiting Running',

task_number_of_ready_to_kill: 'Task Number Of Ready To Kill'

},

audit_log: {

user_name: 'User Name',

resource_type: 'Resource Type',

project_name: 'Project Name',

operation_type: 'Operation Type',

create_time: 'Create Time',

start_time: 'Start Time',

end_time: 'End Time',

user_audit: 'User Audit',

project_audit: 'Project Audit',

create: 'Create',

update: 'Update',

delete: 'Delete',

read: 'Read'

}

}

const resource = {

file: {

file_manage: 'File Manage',

create_folder: 'Create Folder',

create_file: 'Create File',

upload_files: 'Upload Files',

enter_keyword_tips: 'Please enter keyword',

name: 'Name',

user_name: 'Resource userName',

whether_directory: 'Whether directory',

file_name: 'File Name',

description: 'Description',

size: 'Size',

update_time: 'Update Time',

operation: 'Operation',

edit: 'Edit',

rename: 'Rename',

download: 'Download',

delete: 'Delete',

yes: 'Yes',

no: 'No',

folder_name: 'Folder Name',

enter_name_tips: 'Please enter name',

enter_description_tips: 'Please enter description',

enter_content_tips: 'Please enter the resource content',

file_format: 'File Format',

file_content: 'File Content',

delete_confirm: 'Delete?',

confirm: 'Confirm',

cancel: 'Cancel',

success: 'Success',

file_details: 'File Details',

return: 'Return',

save: 'Save'

},

udf: {

udf_resources: 'UDF resources',

create_folder: 'Create Folder',

upload_udf_resources: 'Upload UDF Resources',

udf_source_name: 'UDF Resource Name',

whether_directory: 'Whether directory',

file_name: 'File Name',

file_size: 'File Size',

description: 'Description',

create_time: 'Create Time',

update_time: 'Update Time',

operation: 'Operation',

yes: 'Yes',

no: 'No',

edit: 'Edit',

download: 'Download',

delete: 'Delete',

delete_confirm: 'Delete?',

success: 'Success',

folder_name: 'Folder Name',

upload: 'Upload',

upload_files: 'Upload Files',

file_upload: 'File Upload',

enter_keyword_tips: 'Please enter keyword',

enter_name_tips: 'Please enter name',

enter_description_tips: 'Please enter description'

},

function: {

udf_function: 'UDF Function',

create_udf_function: 'Create UDF Function',

edit_udf_function: 'Edit UDF Function',

udf_function_name: 'UDF Function Name',

class_name: 'Class Name',

type: 'Type',

description: 'Description',

jar_package: 'Jar Package',

update_time: 'Update Time',

operation: 'Operation',

rename: 'Rename',

edit: 'Edit',

delete: 'Delete',

success: 'Success',

package_name: 'Package Name',

udf_resources: 'UDF Resources',

instructions: 'Instructions',

upload_resources: 'Upload Resources',

udf_resources_directory: 'UDF resources directory',

delete_confirm: 'Delete?',

enter_keyword_tips: 'Please enter keyword',

enter_udf_unction_name_tips: 'Please enter a UDF function name',

enter_package_name_tips: 'Please enter a Package name',

enter_select_udf_resources_tips: 'Please select UDF resources',

enter_select_udf_resources_directory_tips:

'Please select UDF resources directory',

enter_instructions_tips: 'Please enter instructions',

enter_name_tips: 'Please enter name',

enter_description_tips: 'Please enter description'

},

task_group_option: {

manage: 'Task group manage',

option: 'Task group option',

create: 'Create task group',

edit: 'Edit task group',

delete: 'Delete task group',

view_queue: 'View the queue of the task group',

switch_status: 'Switch status',

code: 'Task group code',

name: 'Task group name',

project_name: 'Project name',

resource_pool_size: 'Resource pool size',

resource_pool_size_be_a_number:

'The size of the task group resource pool should be more than 1',

resource_used_pool_size: 'Used resource',

desc: 'Task group desc',

status: 'Task group status',

enable_status: 'Enable',

disable_status: 'Disable',

please_enter_name: 'Please enter task group name',

please_enter_desc: 'Please enter task group description',

please_enter_resource_pool_size:

'Please enter task group resource pool size',

please_select_project: 'Please select a project',

create_time: 'Create time',

update_time: 'Update time',

actions: 'Actions',

please_enter_keywords: 'Please enter keywords'

},

task_group_queue: {

actions: 'Actions',

task_name: 'Task name',

task_group_name: 'Task group name',

project_name: 'Project name',

process_name: 'Process name',

process_instance_name: 'Process instance',

queue: 'Task group queue',

priority: 'Priority',

priority_be_a_number:

'The priority of the task group queue should be a positive number',

force_starting_status: 'Starting status',

in_queue: 'In queue',

task_status: 'Task status',

view: 'View task group queue',

the_status_of_waiting: 'Waiting into the queue',

the_status_of_queuing: 'Queuing',

the_status_of_releasing: 'Released',

modify_priority: 'Edit the priority',

start_task: 'Start the task',

priority_not_empty: 'The value of priority cannot be empty',

priority_must_be_number: 'The value of priority should be a number',

please_select_task_name: 'Please select a task name',

create_time: 'Create time',

update_time: 'Update time',

edit_priority: 'Edit the task priority'

}

}

const project = {

list: {

create_project: 'Create Project',

edit_project: 'Edit Project',

project_list: 'Project List',

project_tips: 'Please enter your project',

description_tips: 'Please enter your description',

username_tips: 'Please enter your username',

project_name: 'Project Name',

project_description: 'Project Description',

owned_users: 'Owned Users',

workflow_define_count: 'Workflow Define Count',

process_instance_running_count: 'Process Instance Running Count',

description: 'Description',

create_time: 'Create Time',

update_time: 'Update Time',

operation: 'Operation',

edit: 'Edit',

delete: 'Delete',

confirm: 'Confirm',

cancel: 'Cancel',

delete_confirm: 'Delete?'

},

workflow: {

workflow_relation: 'Workflow Relation',

create_workflow: 'Create Workflow',

import_workflow: 'Import Workflow',

workflow_name: 'Workflow Name',

current_selection: 'Current Selection',

online: 'Online',

offline: 'Offline',

refresh: 'Refresh',

show_hide_label: 'Show / Hide Label',

workflow_offline: 'Workflow Offline',

schedule_offline: 'Schedule Offline',

schedule_start_time: 'Schedule Start Time',

schedule_end_time: 'Schedule End Time',

crontab_expression: 'Crontab',

workflow_publish_status: 'Workflow Publish Status',

schedule_publish_status: 'Schedule Publish Status',

workflow_definition: 'Workflow Definition',

workflow_instance: 'Workflow Instance',

status: 'Status',

create_time: 'Create Time',

update_time: 'Update Time',

description: 'Description',

create_user: 'Create User',

modify_user: 'Modify User',

operation: 'Operation',

edit: 'Edit',

start: 'Start',

timing: 'Timing',

timezone: 'Timezone',

up_line: 'Online',

down_line: 'Offline',

copy_workflow: 'Copy Workflow',

cron_manage: 'Cron manage',

delete: 'Delete',

tree_view: 'Tree View',

tree_limit: 'Limit Size',

export: 'Export',

version_info: 'Version Info',

version: 'Version',

file_upload: 'File Upload',

upload_file: 'Upload File',

upload: 'Upload',

file_name: 'File Name',

success: 'Success',

set_parameters_before_starting: 'Please set the parameters before starting',

set_parameters_before_timing: 'Set parameters before timing',

start_and_stop_time: 'Start and stop time',

next_five_execution_times: 'Next five execution times',

execute_time: 'Execute time',

failure_strategy: 'Failure Strategy',

notification_strategy: 'Notification Strategy',

workflow_priority: 'Workflow Priority',

worker_group: 'Worker Group',

environment_name: 'Environment Name',

alarm_group: 'Alarm Group',

complement_data: 'Complement Data',

startup_parameter: 'Startup Parameter',

whether_dry_run: 'Whether Dry-Run',

continue: 'Continue',

end: 'End',

none_send: 'None',

success_send: 'Success',

failure_send: 'Failure',

all_send: 'All',

whether_complement_data: 'Whether it is a complement process?',

schedule_date: 'Schedule date',

mode_of_execution: 'Mode of execution',

serial_execution: 'Serial execution',

parallel_execution: 'Parallel execution',

parallelism: 'Parallelism',

custom_parallelism: 'Custom Parallelism',

please_enter_parallelism: 'Please enter Parallelism',

please_choose: 'Please Choose',

start_time: 'Start Time',

end_time: 'End Time',

crontab: 'Crontab',

delete_confirm: 'Delete?',

enter_name_tips: 'Please enter name',

switch_version: 'Switch To This Version',

confirm_switch_version: 'Confirm Switch To This Version?',

current_version: 'Current Version',

run_type: 'Run Type',

scheduling_time: 'Scheduling Time',

duration: 'Duration',

run_times: 'Run Times',

fault_tolerant_sign: 'Fault-tolerant Sign',

dry_run_flag: 'Dry-run Flag',

executor: 'Executor',

host: 'Host',

start_process: 'Start Process',

execute_from_the_current_node: 'Execute from the current node',

recover_tolerance_fault_process: 'Recover fault-tolerant process',

resume_the_suspension_process: 'Resume the suspension process',

execute_from_the_failed_nodes: 'Execute from the failed nodes',

scheduling_execution: 'Scheduling execution',

rerun: 'Rerun',

stop: 'Stop',

pause: 'Pause',

recovery_waiting_thread: 'Recovery waiting thread',

recover_serial_wait: 'Recover serial wait',

recovery_suspend: 'Recover Suspended',

recovery_failed: 'Recover Failed',

gantt: 'Gantt',

name: 'Name',

all_status: 'All Status',

submit_success: 'Submitted successfully',

running: 'Running',

ready_to_pause: 'Ready to pause',

ready_to_stop: 'Ready to stop',

failed: 'Failed',

need_fault_tolerance: 'Need fault tolerance',

kill: 'Kill',

waiting_for_thread: 'Waiting for thread',

waiting_for_dependence: 'Waiting for dependence',

waiting_for_dependency_to_complete: 'Waiting for dependency to complete',

delay_execution: 'Delay execution',

forced_success: 'Forced success',

serial_wait: 'Serial wait',

executing: 'Executing',

startup_type: 'Startup Type',

complement_range: 'Complement Range',

parameters_variables: 'Parameters variables',

global_parameters: 'Global parameters',

local_parameters: 'Local parameters',

type: 'Type',

retry_count: 'Retry Count',

submit_time: 'Submit Time',

refresh_status_succeeded: 'Refresh status succeeded',

view_log: 'View log',

update_log_success: 'Update log success',

no_more_log: 'No more logs',

no_log: 'No log',

loading_log: 'Loading Log...',

close: 'Close',

download_log: 'Download Log',

refresh_log: 'Refresh Log',

enter_full_screen: 'Enter full screen',

cancel_full_screen: 'Cancel full screen',

task_state: 'Task status'

},

task: {

task_name: 'Task Name',

task_type: 'Task Type',

create_task: 'Create Task',

workflow_instance: 'Workflow Instance',

workflow_name: 'Workflow Name',

workflow_name_tips: 'Please select workflow name',

workflow_state: 'Workflow State',

version: 'Version',

current_version: 'Current Version',

switch_version: 'Switch To This Version',

confirm_switch_version: 'Confirm Switch To This Version?',

description: 'Description',

move: 'Move',

upstream_tasks: 'Upstream Tasks',