The vision language model in this video has just 0.5B parameters and can take in images, video and 3D! 🤯 LLaVA-NeXT-Interleave is a new vision language model trained on interleaved image, video and 3D data. Keep reading ⥥⥥

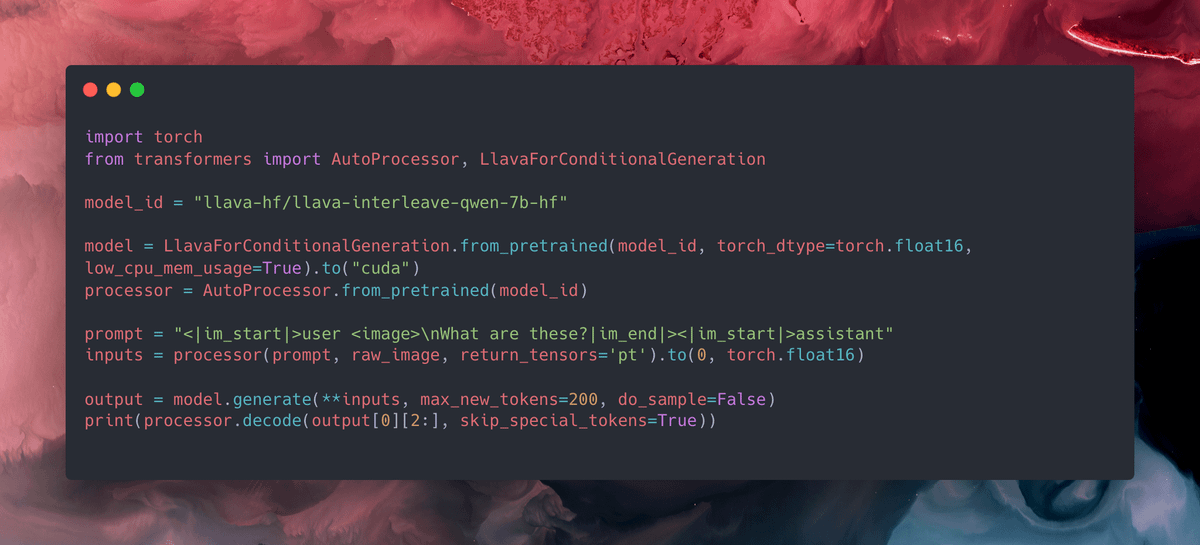

This model comes in 0.5B, 7B and 7B-DPO variants, all of which can be used with Transformers 😍

Collection of models | Demo

See how to use below 👇🏻

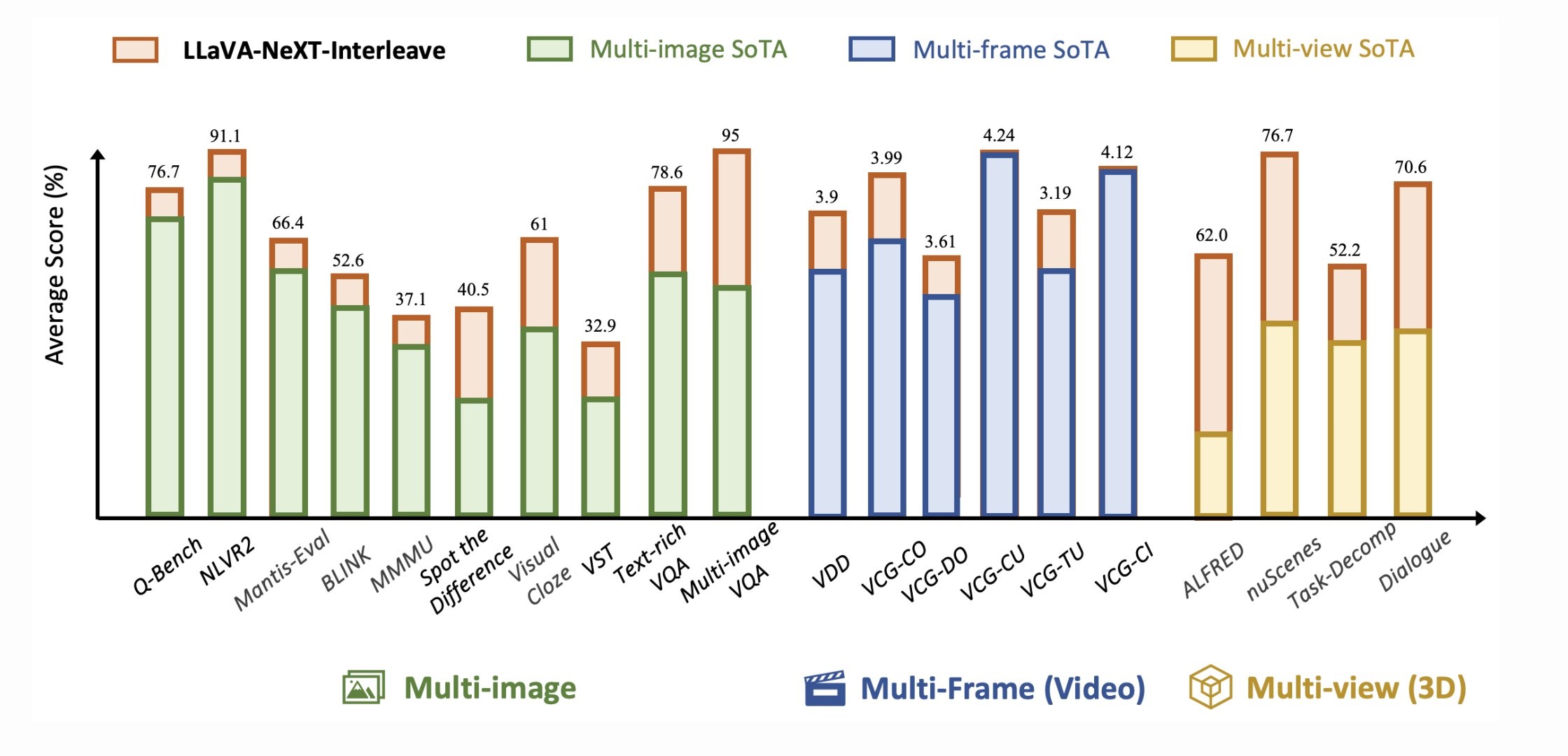
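For readers who prefer text to a screenshot, here is a minimal sketch of how inference with Transformers might look. It rests on a few assumptions that are not stated in this post: the checkpoint name `llava-hf/llava-interleave-qwen-0.5b-hf`, loading it with `LlavaForConditionalGeneration`, and a processor that ships a chat template. The helpers `build_conversation` and `answer` are hypothetical names introduced for illustration only.

```python
from typing import Any

# Assumed checkpoint name for the 0.5B variant; swap in another variant if needed.
MODEL_ID = "llava-hf/llava-interleave-qwen-0.5b-hf"


def build_conversation(question: str, num_images: int) -> list[dict[str, Any]]:
    """Build a single-turn chat with `num_images` image slots followed by the question."""
    content: list[dict[str, Any]] = [{"type": "image"} for _ in range(num_images)]
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


def answer(images: list, question: str, max_new_tokens: int = 64) -> str:
    """Run one generation pass over a list of PIL images (downloads the model on first use)."""
    # Imported lazily so the prompt helper above works without these packages installed.
    from transformers import AutoProcessor, LlavaForConditionalGeneration

    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = LlavaForConditionalGeneration.from_pretrained(MODEL_ID)

    # Turn the structured conversation into the prompt string the model expects.
    prompt = processor.apply_chat_template(
        build_conversation(question, len(images)), add_generation_prompt=True
    )
    inputs = processor(images=images, text=prompt, return_tensors="pt").to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.decode(output[0], skip_special_tokens=True)
```

Something like `answer([img_a, img_b], "What is different between these two images?")` would then pass two images in a single turn. Keeping the conversation builder separate from model loading makes it easy to check the multi-image prompt structure without downloading any weights.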

The authors of the paper explored training LLaVA-NeXT on interleaved data that mixes multiple modalities, including image(s), video and 3D 📚

They found that interleaved data improves results across all benchmarks!

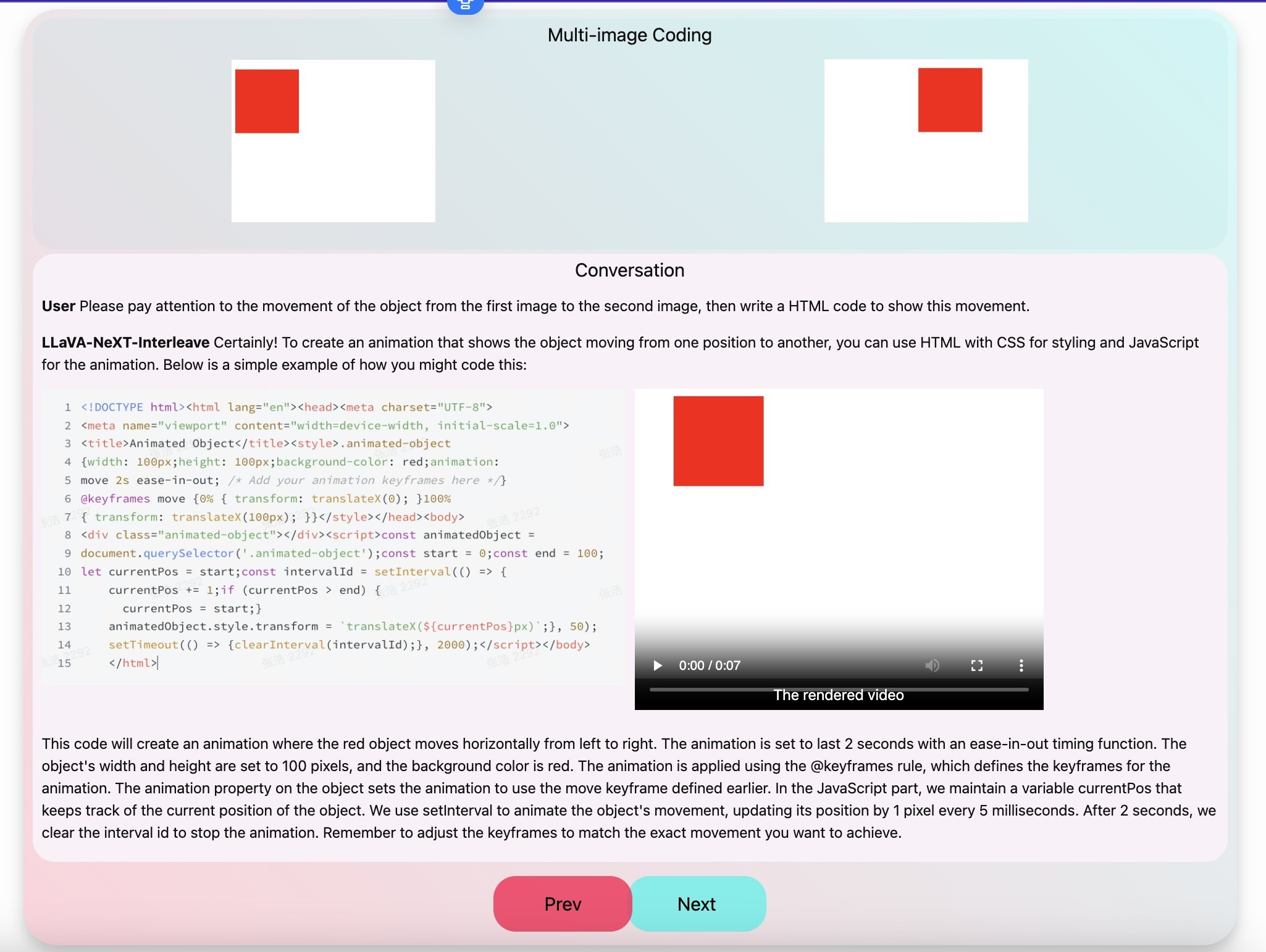

The model can transfer tasks from single-image to multi-image settings 🤯 The authors trained the model on single images and code, yet it can solve coding tasks with multiple images.

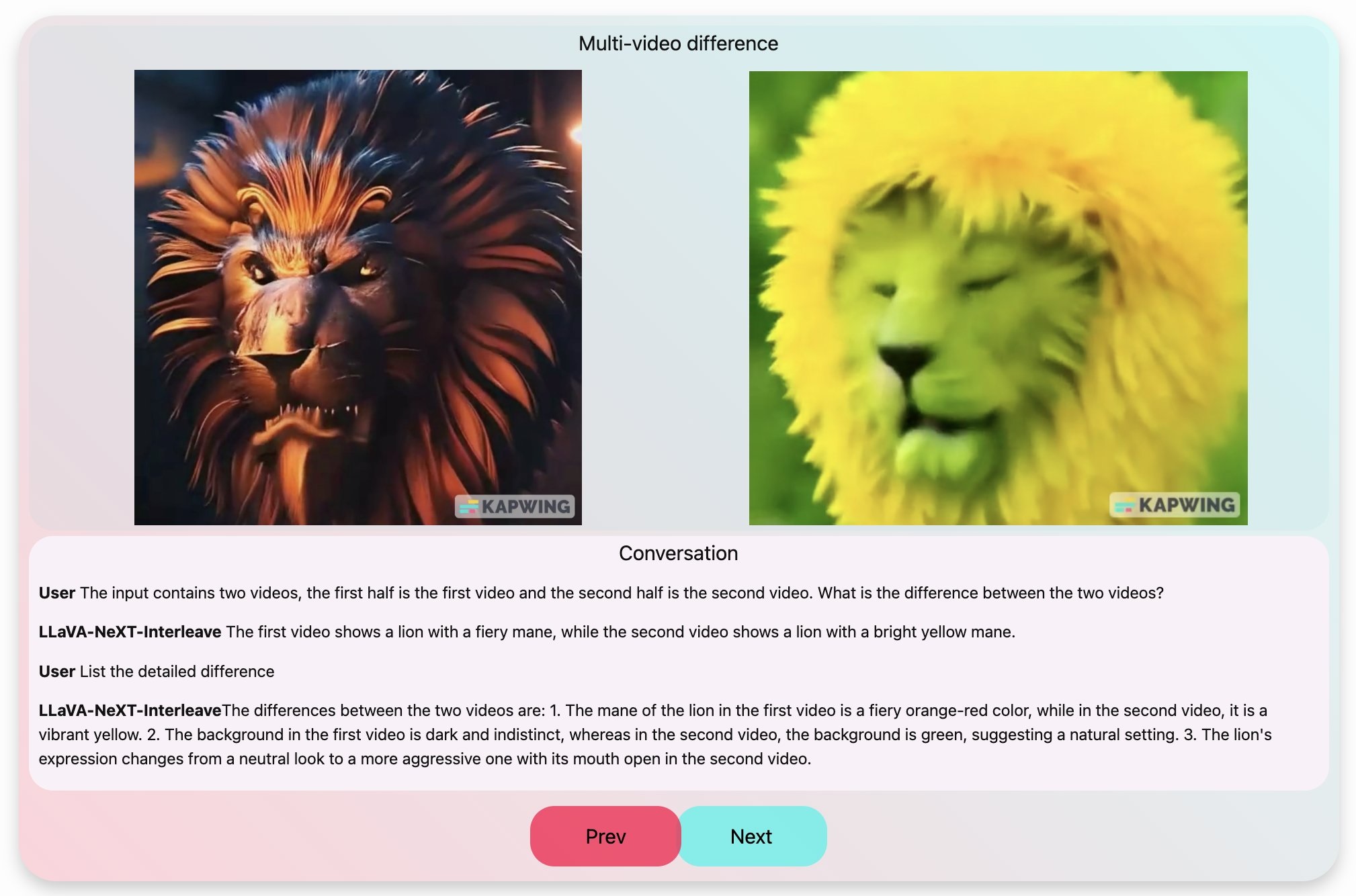

The same applies to other modalities. See below for video:

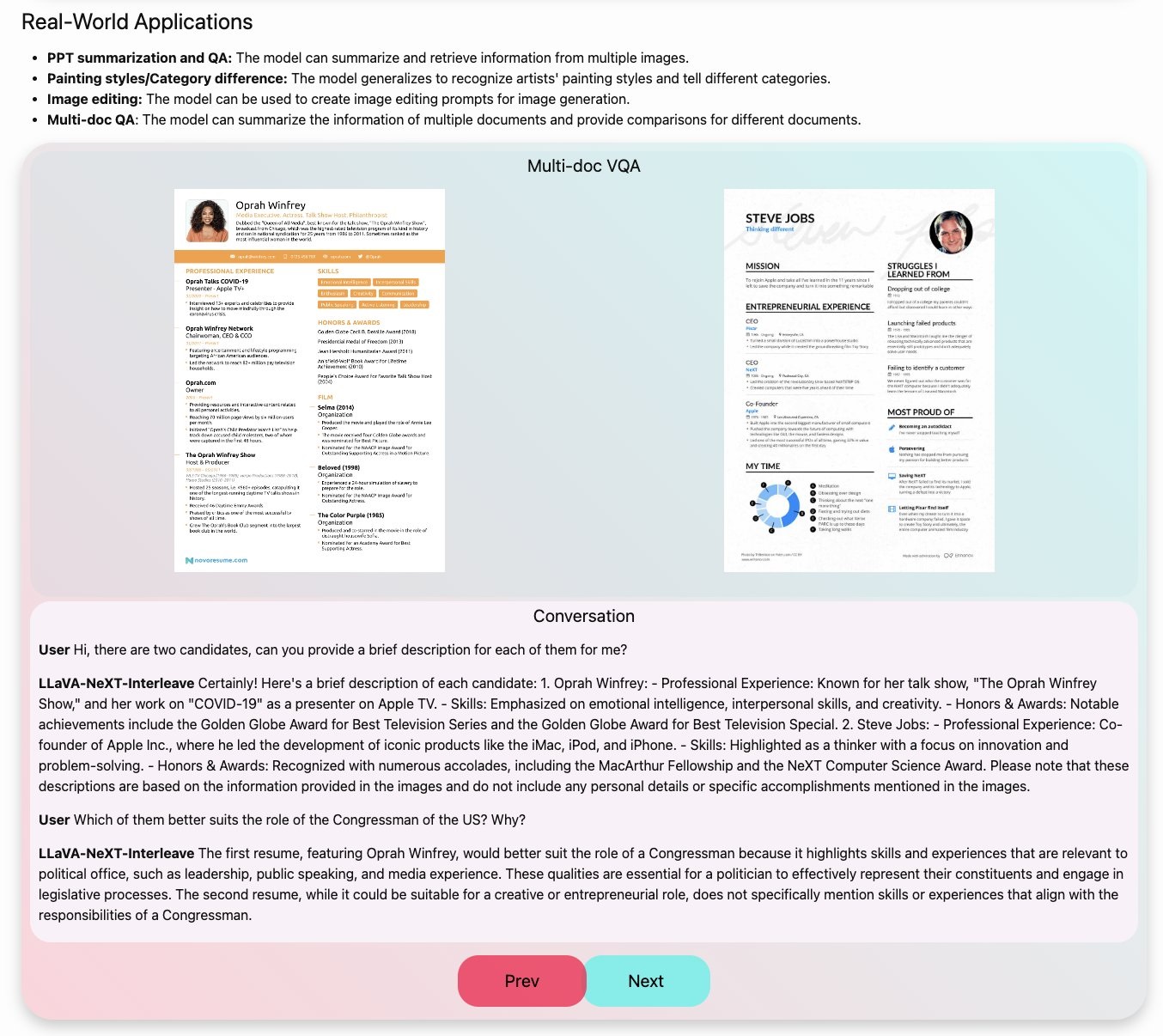

The model also has document understanding capabilities and many real-world application areas.

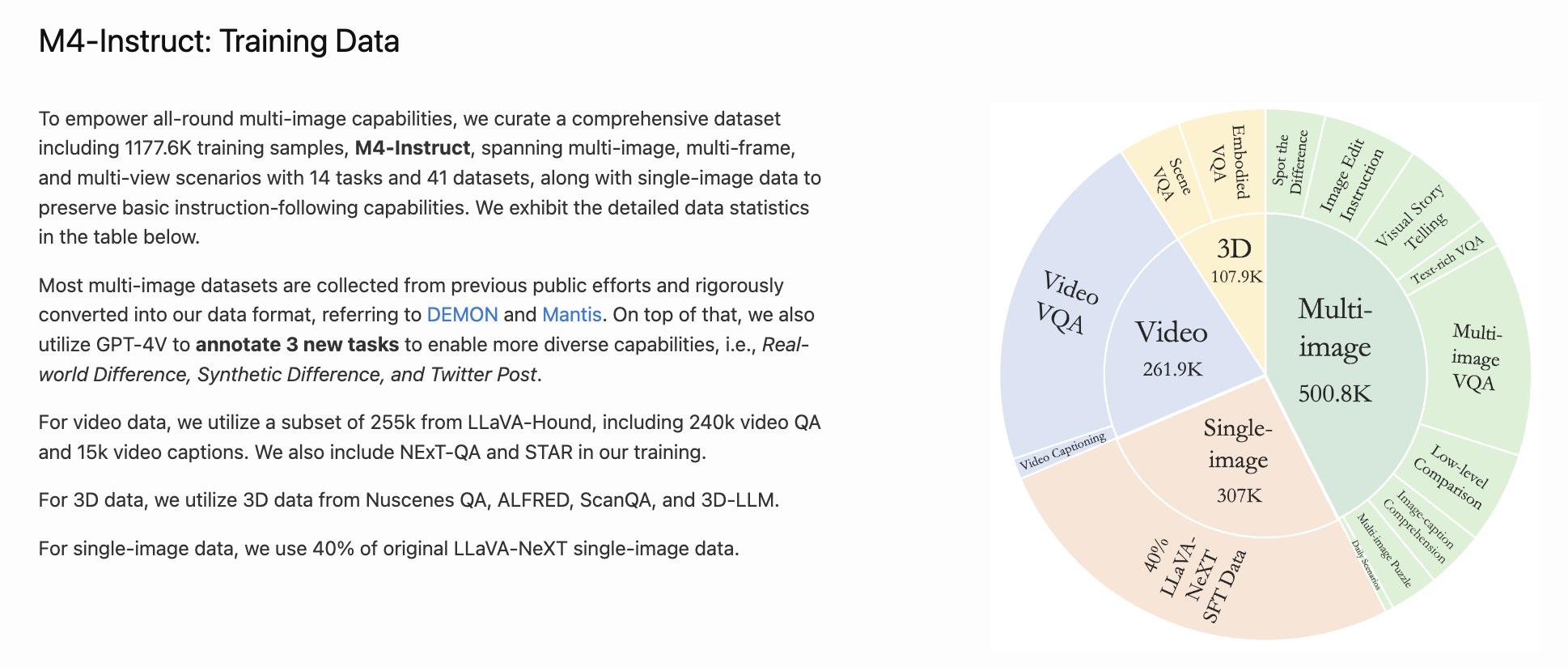

This release also comes with the dataset the model was fine-tuned on 📖: M4-Instruct-Data

Resources:

LLaVA-NeXT-Interleave: Tackling Multi-image, Video, and 3D in Large Multimodal Models by Feng Li, Renrui Zhang, Hao Zhang, Yuanhan Zhang, Bo Li, Wei Li, Zejun Ma, Chunyuan Li (2024) GitHub

Original tweet (July 17, 2024)