
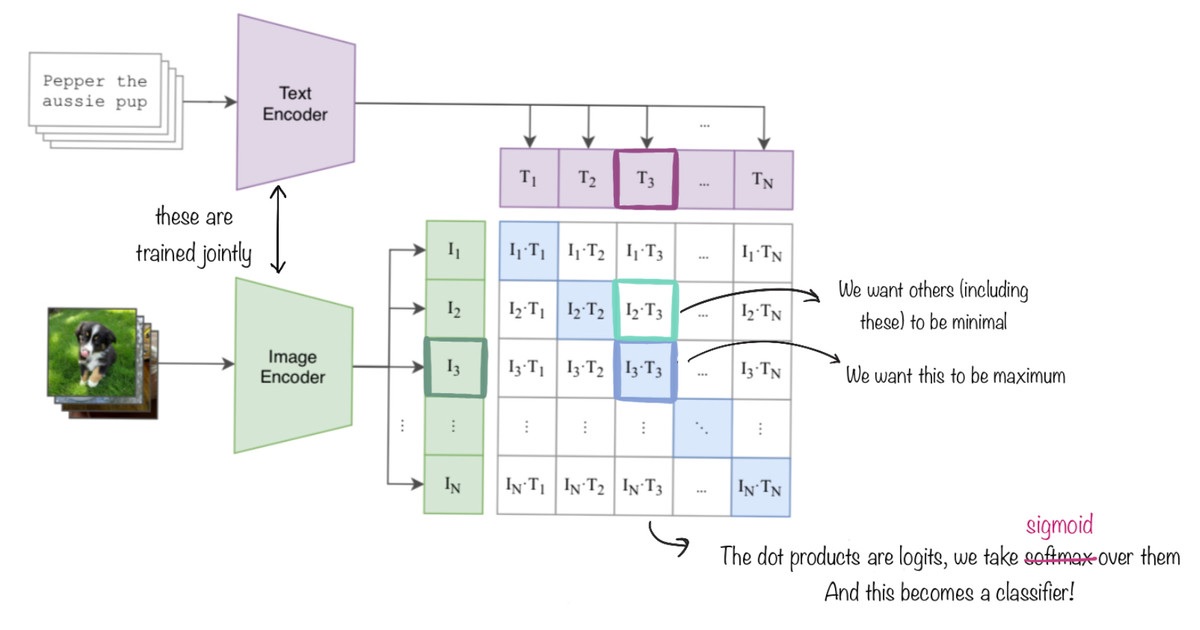

SigLIP just got merged to 🤗 Transformers and it's super easy to use! To celebrate this, I have created a repository of various SigLIP-based projects! But what is it and how does it work? SigLIP is a vision-text pre-training technique based on contrastive learning. It jointly trains an image encoder and a text encoder so that the dot product of their embeddings is highest for matching image-text pairs. The image below is taken from CLIP, where this contrastive pre-training uses a softmax; SigLIP replaces the softmax with a sigmoid. 📎
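To make the softmax-vs-sigmoid difference concrete, here is a minimal NumPy sketch of a pairwise sigmoid loss in the spirit of the paper. Every image-text pair in the batch is treated as an independent binary classification: a matching pair gets label +1 and every other pair gets -1, so no normalization over the whole batch is needed. The function names, the toy embeddings, and the starting values for the temperature `t_prime` and bias `b` (log 10 and -10) are assumptions for illustration, not the reference implementation.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    # Scale each row to unit length so dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def sigmoid_loss(img_emb: np.ndarray, txt_emb: np.ndarray,
                 t_prime: float = np.log(10.0), b: float = -10.0) -> float:
    """Pairwise sigmoid loss for a batch of n image/text embeddings.

    Row i of img_emb and row i of txt_emb are assumed to be a matching pair.
    """
    x = l2_normalize(img_emb)
    y = l2_normalize(txt_emb)
    t = np.exp(t_prime)                  # temperature, parameterized to stay positive
    logits = t * (x @ y.T) + b           # (n, n) score for every image-text pair
    n = logits.shape[0]
    labels = 2.0 * np.eye(n) - 1.0       # +1 on the diagonal, -1 everywhere else
    # log(sigmoid(z)) computed stably as -log(1 + exp(-z))
    log_sig = -np.logaddexp(0.0, -labels * logits)
    # Sum over all n*n pairs, normalized by the batch size n
    return float(-log_sig.sum() / n)


if __name__ == "__main__":
    emb = np.eye(4)
    print(sigmoid_loss(emb, emb))                      # matching pairs: low loss
    print(sigmoid_loss(emb, np.roll(emb, 1, axis=0)))  # mismatched pairs: high loss
```

Note that each entry of the `n x n` matrix contributes its own term; nothing is divided by a row or column sum, which is exactly what a softmax-based loss would require.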

Highlights✨

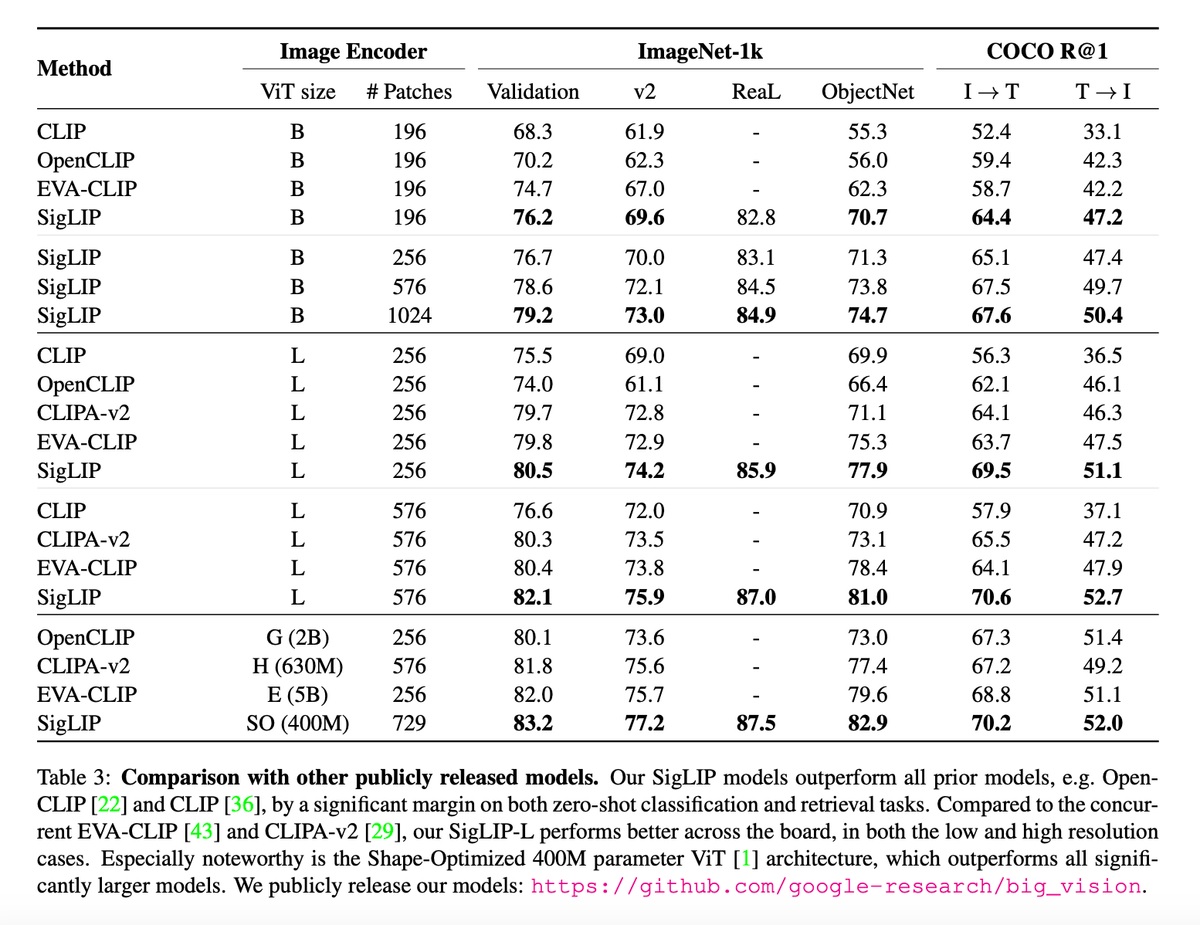

🖼️📝 The authors used a medium-sized B/16 ViT as the image encoder and a B-sized transformer as the text encoder

😍 Better zero-shot performance than CLIP

🗣️ The authors trained a multilingual model too!

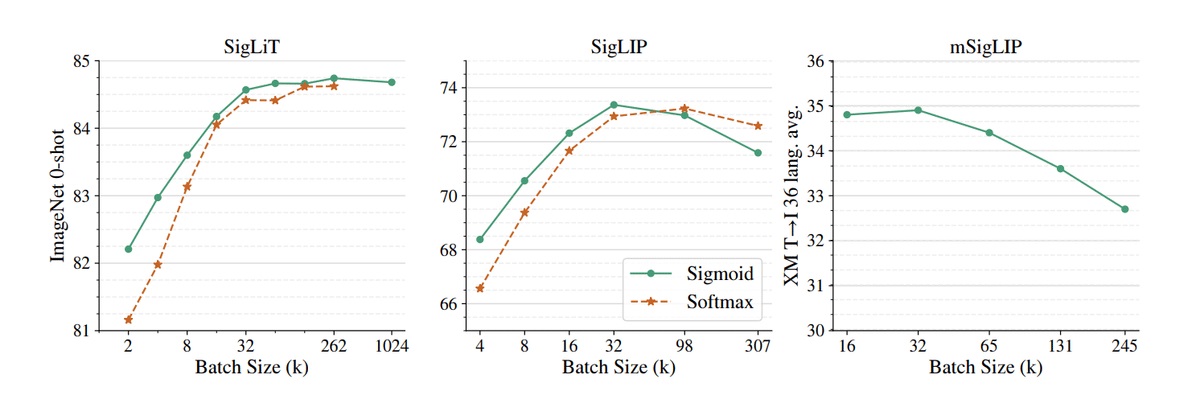

⚡️ Super efficient: the sigmoid loss enables batch sizes of up to 1M items, but the authors chose 32k because performance saturates beyond that (see below)
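Part of why the sigmoid loss scales to such large batches is that each pair's term depends only on that one image-text pair, so the `n x n` loss matrix can be evaluated block by block and summed, without materializing it all at once or normalizing over the full batch. The sketch below checks this property on random data; the chunking scheme, the function names, and the fixed `t` and `b` values are illustrative assumptions, not the paper's multi-device implementation.

```python
import numpy as np


def pair_terms(x: np.ndarray, y: np.ndarray, match_mask: np.ndarray,
               t: float = 10.0, b: float = -10.0) -> np.ndarray:
    # Per-pair negative log-sigmoid terms for a block of image rows x and text rows y.
    logits = t * (x @ y.T) + b
    labels = np.where(match_mask, 1.0, -1.0)
    return np.logaddexp(0.0, -labels * logits)


def full_loss(x: np.ndarray, y: np.ndarray, t: float = 10.0, b: float = -10.0) -> float:
    # Whole n x n matrix at once (what we want to avoid for huge n).
    n = x.shape[0]
    return float(pair_terms(x, y, np.eye(n, dtype=bool), t, b).sum() / n)


def chunked_loss(x: np.ndarray, y: np.ndarray, chunk: int = 2,
                 t: float = 10.0, b: float = -10.0) -> float:
    # Walk over (chunk x chunk) blocks of the pair matrix and accumulate their sums.
    n = x.shape[0]
    total = 0.0
    for i in range(0, n, chunk):
        for j in range(0, n, chunk):
            rows = np.arange(i, min(i + chunk, n))
            cols = np.arange(j, min(j + chunk, n))
            mask = rows[:, None] == cols[None, :]  # True only for matching pairs
            total += pair_terms(x[rows], y[cols], mask, t, b).sum()
    return float(total / n)
```

A softmax loss, by contrast, needs a normalizer computed over each full row (and column) of the matrix, so its blocks cannot simply be summed independently.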

Below you can see how prior CLIP models and SigLIP, across different image encoder sizes, perform on different datasets 👇🏻

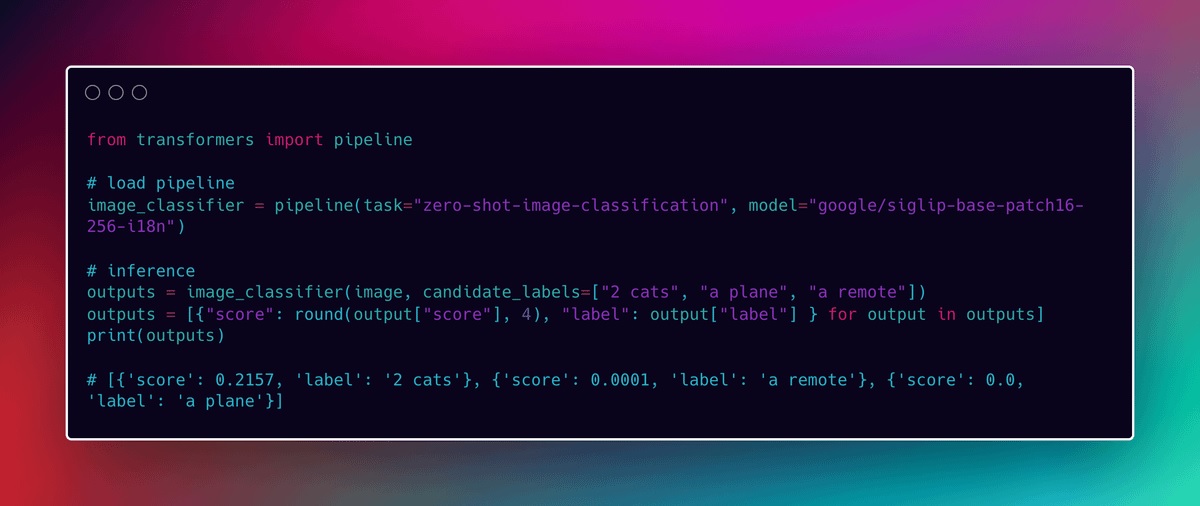

The 🤗 Transformers integration comes with a zero-shot-image-classification pipeline, which makes SigLIP super easy to use!
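A minimal sketch of calling that pipeline is shown below. The checkpoint name `google/siglip-base-patch16-224` is an assumption (one of the SigLIP checkpoints on the Hub; the post does not name one), and the helper names `zero_shot_classify` and `top_label` are hypothetical. Running it requires `transformers`, `torch` and `pillow`, plus network access to download the weights.

```python
def zero_shot_classify(image, candidate_labels,
                       model_id: str = "google/siglip-base-patch16-224"):
    """Run the zero-shot-image-classification pipeline on a PIL image, path or URL."""
    # Imported lazily so this file can be loaded without transformers installed.
    from transformers import pipeline

    classifier = pipeline(task="zero-shot-image-classification", model=model_id)
    # Returns a list of {"score": float, "label": str} dicts, one per candidate label.
    return classifier(image, candidate_labels=candidate_labels)


def top_label(outputs) -> str:
    # Pick the label with the highest score from the pipeline output.
    return max(outputs, key=lambda o: o["score"])["label"]
```

For example, `top_label(zero_shot_classify("cats.jpg", ["2 cats", "a plane", "a remote"]))` would return the best-scoring label, where `cats.jpg` is a placeholder path for any local image.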

What to use SigLIP for? 🧐

Honestly, the possibilities are endless, but you can use it for image/text retrieval, zero-shot classification, or training multimodal models!

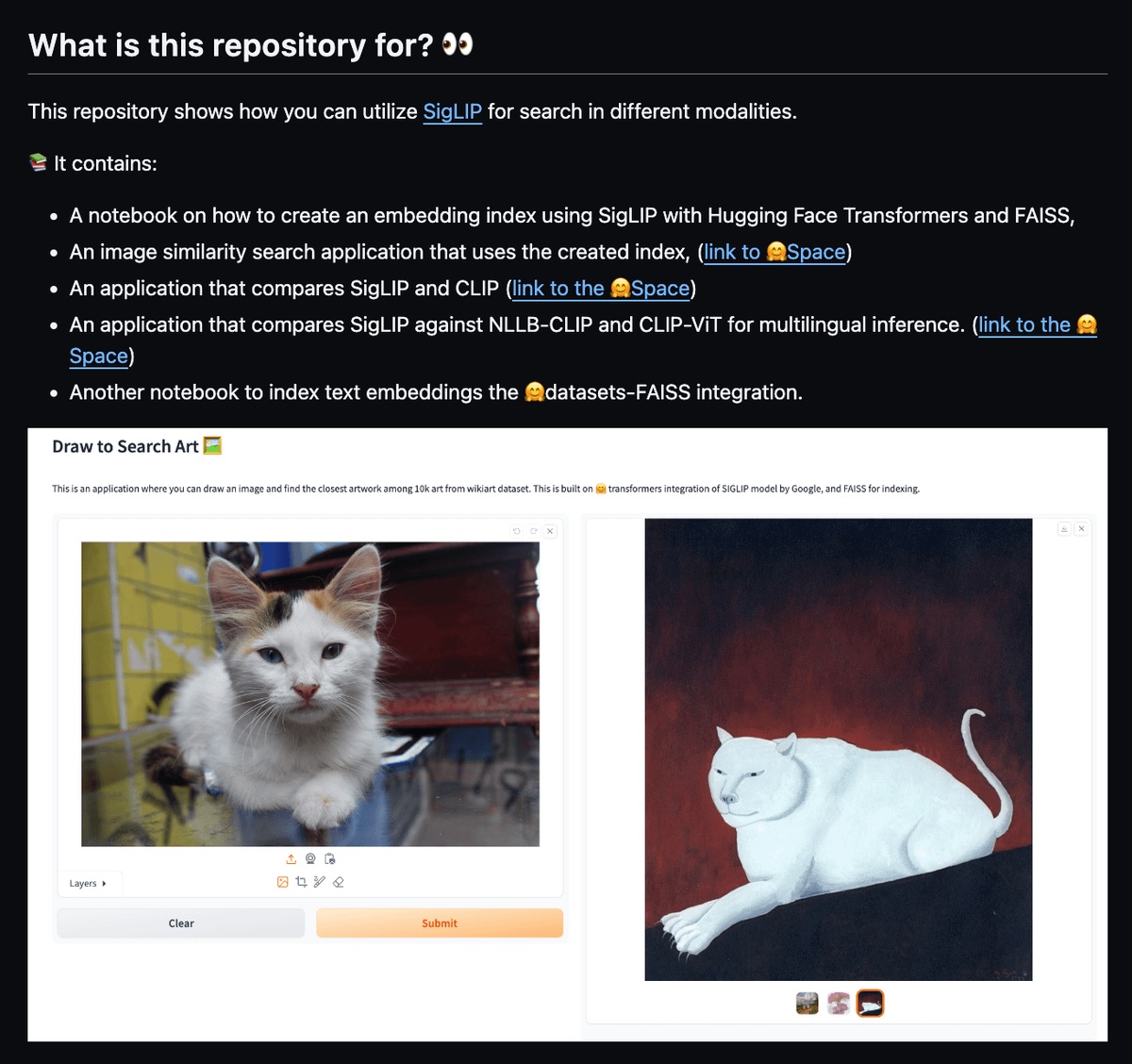

I have made a repository with notebooks and applications, which are also hosted on Spaces.

I have built "Draw to Search Art", where you can input an image (upload one or draw it) and search among 10k images from WikiArt!
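Image search of this kind boils down to nearest-neighbor lookup over embeddings. The sketch below is not the app's code: it assumes the collection's images have already been embedded with the SigLIP image encoder and that the query (uploaded or drawn image) is embedded the same way; `build_index` and `search` are hypothetical names, and plain brute-force cosine similarity stands in for whatever index the app actually uses.

```python
import numpy as np


def build_index(embeddings: np.ndarray) -> np.ndarray:
    # Normalize once so that each search is a single matrix-vector product.
    return embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)


def search(index: np.ndarray, query: np.ndarray, k: int = 5) -> np.ndarray:
    """Return indices of the k rows of `index` most similar to `query` (cosine)."""
    q = query / np.linalg.norm(query)
    scores = index @ q                             # cosine similarity to every stored image
    k = min(k, len(scores))
    top = np.argpartition(-scores, k - 1)[:k]      # unordered top-k without a full sort
    return top[np.argsort(-scores[top])]           # order only the k winners
```

For a collection of around 10k images, brute force like this is cheap; an approximate nearest-neighbor index only becomes worthwhile at much larger scales.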

I've also built apps to compare CLIP and SigLIP outputs.
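One reason such comparisons look different is how raw label scores are turned into probabilities. CLIP outputs are usually read with a softmax over the candidate labels, so the labels compete and always sum to 1, while SigLIP's sigmoid training gives each label an independent yes/no probability. The sketch below uses made-up logits rather than real model outputs, and the function names are hypothetical.

```python
import numpy as np


def clip_style_probs(logits: np.ndarray) -> np.ndarray:
    # Softmax across candidate labels: scores compete and always sum to 1.
    z = logits - logits.max()          # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def siglip_style_probs(logits: np.ndarray) -> np.ndarray:
    # Independent sigmoid per label: each score is its own yes/no probability.
    return 1.0 / (1.0 + np.exp(-logits))
```

With logits such as `[-5.0, -6.0, -7.0]` (no label really fits), the softmax still crowns the first label with roughly two thirds of the probability mass, while every sigmoid score stays below 1%.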

Resources:

Sigmoid Loss for Language Image Pre-Training

by Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer (2023)

GitHub, Hugging Face documentation

Original tweet (January 11, 2024)